CERN is set to go dark for a brief period in the 2018 timeframe to allow for a new sweep of technology upgrades, including the build-out of new datacenters, some of which might leverage non-standard architectures, including ARM processors.

There has been ongoing research into the feasibility of ARM-based processors for upcoming supercomputers, but the conversation will likely not gather more steam until a robust 64-bit ARM ecosystem arrives. Even so, the processor is continually evaluated as a more power-aware and potentially less costly alternative to X86, though there are a few caveats and a lot of unknowns.

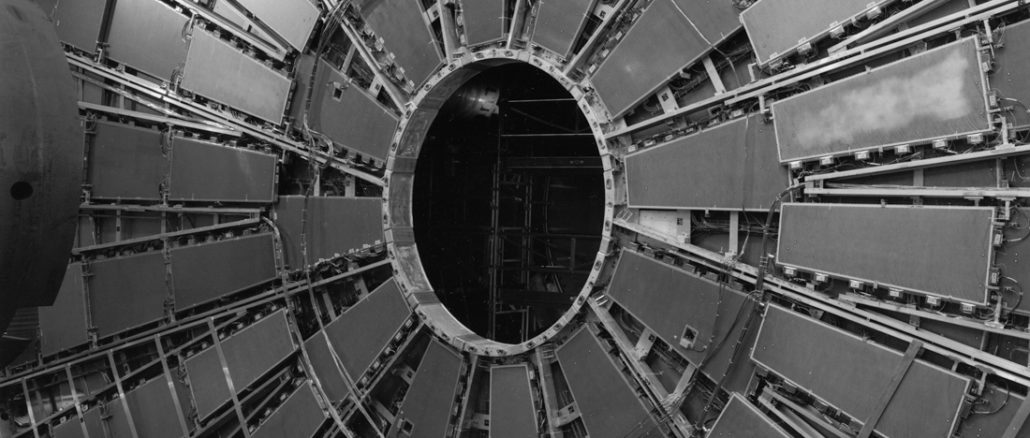

Joshua Wyatt Smith is a CERN researcher who has been validating and weighing the performance, power, price, and programming paradigms involved in switching away from Intel X86-based processors in future systems. According to Smith, his team working on the ATLAS experiment has crossed the first, and one of the most prominent, barriers: they’ve been able to prove that most of their software can run on the low-power architecture. “We can benchmark as much as we want with this and other architectures, but at the end of the day, if it’s something that we have to put a lot of software work and effort into, especially since this is essentially multi-million dollar code to begin with, then even with attractive power reductions, it’s not going to be worth it.”

Although using the GCC compiler to make their code portable simplified the process, it is not a quick one-step solution to move big CERN workloads to ARM. The biggest challenge for porting is rewriting ATHENA, the software framework that supports ATLAS. “This framework was created over many years and does not, at any point, allow for ARM to be incorporated. This ranges from certain optimizations that only ARM can do like NEON and vector floating point flags, so at some point all of these things have to be added in, so it’s a complex issue of doing that, recompiling everything and ensuring these new flags are in—and doing all of this locally, so it ends up requiring a rewriting of a good part of the framework.”

But the point is, it can be done. Even so, as things stand, some pesky performance problems plague the 32-bit incarnations of ARM processors, and Smith says it’s still up in the air how the 64-bit versions will play out. He is hopeful the performance per watt numbers will stack up to what CERN needs before it makes decisions about its next generation of datacenters, which will be well before 2018. “There is a lot of maturity that won’t be there,” he explains, “and at this point it’s hard to say where we will move beyond what is essentially beta with the first generation of 64-bit ARM anyway.” Still, he notes that a few key vendors are excited to help the teams push for this type of transition, especially since other alternatives to X86 at CERN are limited in the looming pre-2018 timeframe. He specifically mentions optical processors and quantum computing, although he notes that quantum computing would not be an ideal fit for ATLAS experiments anyway.

“We don’t care about what the architecture is specifically. What we really care about, at the end of the day, is performance per watt. And with ATLAS there will never be a reduction in the amount of data, especially with new experiments on the horizon, so performance becomes more important. We need good power, good performance—that’s the target.”

Smith and his team performed a range of benchmarking experiments comparing X86 CPUs with current-generation ARM processors and found that, with the 32-bit devices, the cost of compute was far lower on ARM but the calculations took twice as long. “Power and performance are both of huge importance, but when it comes down to having to wait, for example, two weeks for analysis to run, even if it’s on a cost-effective and low-power solution, performance will win every time.”
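To make the tradeoff concrete, here is a minimal sketch of how energy-to-solution and performance per watt relate when a low-power chip takes twice as long to finish the same job. The wattages and runtimes are hypothetical placeholders chosen for illustration, not figures from Smith's benchmarks; only the "twice as long" ratio comes from the text above.

```python
def energy_to_solution_joules(power_watts: float, runtime_seconds: float) -> float:
    """Total energy consumed to finish one job at constant power draw."""
    return power_watts * runtime_seconds


def performance_per_watt(jobs: float, power_watts: float, runtime_seconds: float) -> float:
    """Throughput (jobs per second) divided by power draw (watts).

    This is the same quantity as jobs completed per joule.
    """
    throughput = jobs / runtime_seconds
    return throughput / power_watts


# Hypothetical machines: the ARM board draws far less power,
# but needs twice as long for the same job.
x86 = {"power_watts": 80.0, "runtime_seconds": 1000.0}
arm = {"power_watts": 10.0, "runtime_seconds": 2000.0}

x86_energy = energy_to_solution_joules(**x86)
arm_energy = energy_to_solution_joules(**arm)
x86_ppw = performance_per_watt(1.0, **x86)
arm_ppw = performance_per_watt(1.0, **arm)

print(f"x86: {x86_energy:.0f} J per job, {x86_ppw:.2e} jobs/s/W")
print(f"ARM: {arm_energy:.0f} J per job, {arm_ppw:.2e} jobs/s/W")
print(f"ARM wait-time penalty: {arm['runtime_seconds'] / x86['runtime_seconds']:.1f}x")
```

Under these assumed numbers the ARM board wins on energy per job, yet every analysis still takes twice as long to come back, which is the wait Smith says tips the decision toward performance.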

In terms of their findings for current generation ARM processors, “the performance per watt of the A7 and A15 show poor results when compared to the A9 and Intel machines,” which is because of the quad-core design of the processor. Even still, it is “slow compared to traditional computers…the newer A15 quad core processors should show a dramatic improvement in overall performance and specifically, performance per watt.”

Smith says the ATLAS team and other parts of the LHC experiments have looked at other non-X86 processor concepts, as well as various accelerators, over the years. The teams are now getting down to the research wire on what might actually fit their complex codes without massive software rewrites, and what can tackle large sections of the software. GPU computing, he says, performs well for the simulation parts but is not applicable elsewhere. That does not work, since the symphony of code requires different parts of the analysis to communicate and be timed appropriately (Amdahl’s Law at massive scale). Still, he says that when it comes to realistic assessments of what’s possible on the processor horizon, there is still a chance for a strong ARM in the CERN datacenter ecosystem, which should be plenty of impetus for the small ARM vendor community to ramp up its offerings and show a solid ARM ecosystem well in advance of CERN system selection time.
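The Amdahl's Law point can be illustrated with a short sketch. The fraction of the workload that benefits from an accelerator and the accelerator's speedup factor below are assumed values for illustration only; the article gives no such breakdown for the ATLAS software.

```python
def amdahl_speedup(accelerated_fraction: float, accel_factor: float) -> float:
    """Overall speedup when only part of a workload is accelerated.

    accelerated_fraction: share of original runtime that the accelerator helps (0..1)
    accel_factor: how many times faster that share runs on the accelerator
    """
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    if accel_factor <= 0.0:
        raise ValueError("accel_factor must be positive")
    remaining = 1.0 - accelerated_fraction
    return 1.0 / (remaining + accelerated_fraction / accel_factor)


# Hypothetical case: half of the runtime is simulation that a GPU
# speeds up 10x, while the other half stays on the CPU unchanged.
print(f"Overall speedup: {amdahl_speedup(0.5, 10.0):.2f}x")

# Even an arbitrarily fast accelerator cannot beat 1 / (1 - fraction).
print(f"Upper bound for a 50% fraction: {1.0 / (1.0 - 0.5):.1f}x")
```

The part of the code that the accelerator cannot touch sets a hard ceiling on the overall gain, which is why an approach that only helps one stage of the analysis is a poor fit for the whole chain.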

There is quite a bit available, beyond the research from Smith and his team, that shows the benchmarking work other groups at CERN are doing to look ahead at next-generation systems. For instance, there is a great visual tool comparing various architectures here, a performance evaluation of ARM against the CERN software stack, and a great primer on the limitations and benefits of ARM for large-scale scientific computing here. Even though it’s from 2013, not much has changed, but just wait for next generation 64-bit…

They should ask either the AppliedMicro guys or perhaps Nvidia for their latest 64-bit ARM chips (would be best if they waited until they have a generation on a FinFET process for maximum power savings/money spent).

Otherwise, they should at least consider AMD APUs with HSA capability, which would allow them to do both GPU stuff as well as GPU compute, on the same chips. And AMD is also cheaper than Intel. Same on the FinFET process.