Back in the late 1980s, while working in the Adaptive Systems Research Department at AT&T Bell Labs, deep learning pioneer Yann LeCun was just starting down the path of implementing brain-inspired machine learning concepts for image recognition and processing. That effort would eventually lead to some of the first realizations of these technologies in voice recognition for calling systems and handwriting analysis for banks.

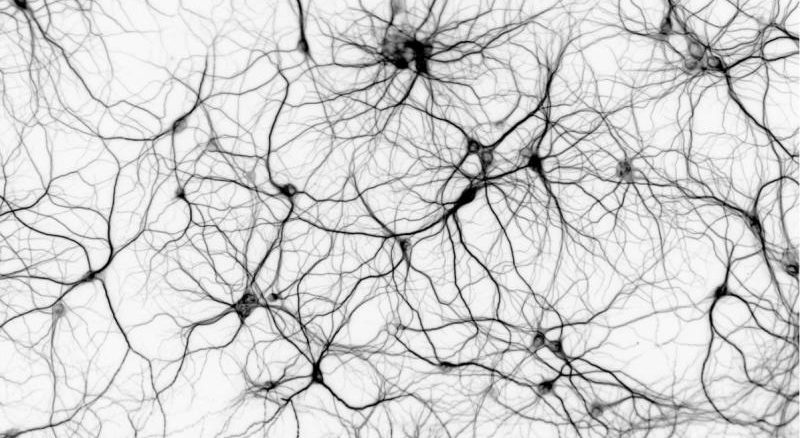

At the heart of LeCun’s developments were convolutional neural networks, which are only now entering mainstream adoption in natural language processing, image recognition, and rapid video analysis, and are becoming commonplace elements in consumer-facing services most of us use on a daily basis.

LeCun recalls the first days of using GPUs for neural networks, noting that the speedup at research centers like Microsoft in the early 2000s was between 50X and 100X—all before CUDA and other tools to make working with general purpose GPUs easier were available. Since then, he said the research relating to using GPUs for these applications has not only pushed performance, but with the addition of more memory on both the CPU and GPU, lets researchers create far larger networks than he thought possible back in 2000.

Even with that kind of progress and possibility, GPUs for large-scale neural network training are not ubiquitous, at least not for the customer-facing real-time applications we associate with such nets, including facial, speech, and image recognition. LeCun tells us that while companies like Facebook, Google, Baidu, and others are using GPUs to power neural nets that drive these services, it is on the backend only, and just for the demanding training tasks. “All but just a few of these companies that use GPUs for their neural nets aren’t doing it in production, they use it to train the networks, but because they want to rely on commodity hardware as much as possible, this is where the GPU’s role ends, except in a few small private cases.”

Neural networks have come a long way in the last several years on the algorithmic side, but the real key to pushing their performance and development lies in taking full advantage of hardware advances, including the use of multi-GPU systems for training the networks—an incredibly computationally intensive task that is further complicated by the parallelization challenges on the code front to let GPUs on separate systems share the burden.

But as the amount of data fed to increasingly large neural networks expands, so too does the demand, even if only for backend neural network training, for ever-larger, faster GPU-based nets. The issue is that breaking apart these tough deep learning problems creates its own tangles.

As both the algorithms and systems for training and using neural networks have evolved, LeCun has remained active in further development, both in his role as founding director at the NYU Center for Data Science and as the director of AI Research at Facebook. He tells The Next Platform that while using GPUs on a single node for these workloads has been perfected, parallelizing across a number of machines (and many thousands of GPU cores; one Nvidia Tesla K80 has over 4,800) is still a roadblock, especially for the training of neural networks, which can take several days.

LeCun and his team at the NYU Center for Data Science are tackling this research challenge with a new eight-node Cirrascale cluster outfitted with 48 of the high-end Nvidia K80 GPU coprocessors. The goal is to find efficient ways to maximize GPU computation across a cluster for rapid training of large neural nets. GPU computing problems have been addressed on some of the largest supercomputers to date, but LeCun says that the problem of using GPUs on large machines for deep learning is a bit different. “For us, running large neural nets, the whole problem can fit onto a single machine. What we’re missing is the ability to parallelize the training of the network, mostly because the communication is the killer.”

When it comes to parallelizing the training of large neural networks, LeCun says that the simplest way is to give each machine a little piece of the training data, which may be a subset of the images the network is being trained to recognize, for example. While the work is split, the tough part is the correction process: making sure all the processors have the same set of parameters, despite the split. The problem lies in the communication, where there is a great deal of data to shuffle around and keep in sync, which is further complicated by the fact that the algorithm won’t work if that communication is delayed.
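The data-parallel scheme described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the NYU team's code: the "workers" are simulated inside a single process, the model is a toy one-parameter linear fit, and the names `local_gradient`, `all_reduce_mean`, and `train` are hypothetical. Each worker computes a gradient on its own shard of the data, the gradients are averaged, and every worker applies the same update so the parameters stay identical.

```python
# Synchronous data-parallel SGD, simulated in one process.
# Assumption: a toy model y = w * x with a single parameter w.
# A real cluster would exchange gradients over a network or GPU interconnect.

def local_gradient(w, shard):
    """Gradient of the mean squared error on one worker's shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)

def all_reduce_mean(values):
    """Stand-in for the communication step: average across all workers."""
    return sum(values) / len(values)

def train(data, num_workers=4, lr=0.01, steps=200):
    # Give each worker a different slice of the training data.
    shards = [data[i::num_workers] for i in range(num_workers)]
    w = 0.0  # every worker starts from the same parameter value
    for _ in range(steps):
        # Each worker computes a gradient on its own shard.
        grads = [local_gradient(w, shard) for shard in shards]
        # Workers synchronize: all of them receive the same averaged gradient.
        g = all_reduce_mean(grads)
        # Applying the same update everywhere keeps the parameters in sync.
        w -= lr * g
    return w

# Toy data generated from y = 3x, so training should recover w close to 3.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
w = train(data)
```

In this toy version the averaging step costs nothing. On a real cluster, that step is the communication every worker must wait for before it can take the next training step.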

When training these massive neural nets, the CPU acts mostly as an orchestrator, since most of the processing is done on the GPU. “A lot of people are trying to solve this problem at Baidu, Facebook, Google, Microsoft and elsewhere,” says LeCun. “We are all looking for algorithms that can distribute this problem but keep fast communication between the processors themselves, so it is both a hardware and software issue.”

On the hardware front, the NYU deep learning cluster makes use of high-speed GPUDirect, which Nvidia rolled out several years ago across its GPU computing cards to allow, among other things, multiple GPUs to directly read and write CUDA host and device memory, cutting down on CPU overhead. On the software side, LeCun said that three programming tools are central to using many GPUs to tackle large neural networks. CUDA is central, of course, but so too are a low-level C compiler for the CPU’s work and Lua as the interpreted language. At the system level, the team is using Torch 7, which is commonplace in deep learning following development at Facebook, Google, and Twitter, and which features a numerical backend with CUDA hooks that can make quick use of an expanding set of deep learning libraries.

Although the code itself is a target for the parallelization of deep learning tasks, an effort which is on the menu for the NYU Cirrascale K80 cluster, LeCun says that these refinements will pay off most in upcoming systems from major hardware vendors that are working on specialized chips for deep learning, which place neural network accelerators on the same die as a CPU. He said that technologies enabled by CAPI and NVLink on Power-based systems, along with the upcoming Knights family of processors, are big advances in cutting through the communication chasm inside a single machine, both between GPUs and with the CPU. Still, the most exciting innovations that pull in the GPU are coming from the mobile and embedded markets.

“Eventually a lot of the deep learning task will be done on the device, which will keep pushing the need for on-board neural network accelerators that operate at very low power and can be trained and used quickly.” This is already being developed in computer vision circles to power autonomous vehicles, LeCun notes.
