As we touched on last week, field programmable gate arrays (FPGAs) are not a silver bullet that will rip through the enterprise datacenter world anytime soon. Still, when it comes to a narrow but critical class of large-scale analytics problems, they are gathering fresh momentum.

What is interesting, however, is that as this “old” 1980s technology has resurfaced over the last few years in the wake of new data-intensive problems, the performance has been bolstered, but the programmability problem persists, even with the work that has been done to tie FPGAs to more common approaches like OpenCL. But despite the barriers, FPGAs are exploiting new opportunities in data analytics, giving a second wind to an industry that seemed to languish on the financial services, military and oil and gas fringes.

Backed by Altera, Xilinx, Nallatech, and other vendors and with hooks into OpenPower, the gates have opened for FPGAs to prove their mettle in a host of new web-scale datacenter environments, including Microsoft for its Bing search service. And it’s this type of hyper-dense search, along with neural networks, deep learning, and other massive machine learning applications, where they stand to shine. But again, back to reality, while the core value of FPGAs is that they are reconfigurable on the fly, this is also an Achilles heel, programmatically speaking. No matter how robust, high performance, and low power, if they cannot be programmed without specialized staff, what’s the point? Now, with Intel poised to potentially buy one of the two main FPGA makers, Altera, and momentum in the OpenPower sphere to bring FPGAs to a wider market, the impetus is greater than ever to make FPGAs available programmatically. But there could be a way of wrapping around that problem, at least for some algorithms.

Before we get to that, the Bing example along with the other financial and energy exploration codes where FPGAs have historically been found all share something else in common. The FPGAs are tied to a traditional sequential X86 processing environment that is based on clustering for scale and added performance. Further, the addition of FPGAs, while adding performance for specific problems, adds additional programming complexity, With this in mind, it’s not difficult to imagine building a supercomputer from these FPGAs, but according to Patrick McGarry, VP of engineering at FPGA-based data analytics server maker, Ryft, one of the main goals is to move away from both an X86 and clustering mindset and make the FPGA the analytics workhorse. And what’s notable here is that they may have made the programmability leap.

“Today’s sequential processing systems are forced to cluster in order to scale to the needs of the target community, which in turn, hampers performance.”

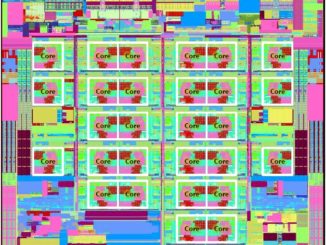

“We set out to design a 1U box that could minimize or even eliminate the need for clustering for the majority of an enterprise’s analytics problems,” explained McGarry. The Ryft ONE boxes his company makes look like a network appliance from the outside, but are outfitted with a X86 processor running Linux with no special software mojo other than the drivers for the FPGA. As a side note, the company ran a series of internal benchmarks on both Altera and Xilinx FPGAs and found that Xilinx was the clear winner for their specific needs based on how quickly it could be reconfigured. McGarry said they tested a few others, but their work with Xilinx has allowed to do something very interesting indeed—to develop a custom interconnect, which is their secret sauce, that allows for some pretty impressive results (at least based on their own benchmarks). “Xilinx does not know what we are putting in their FPGAs. It’s the interconnect, the logic, and how we’re passing data. There is no third-party interface or development platform that can do what we did, they’ve been a great partner but this is all a unique approach.”

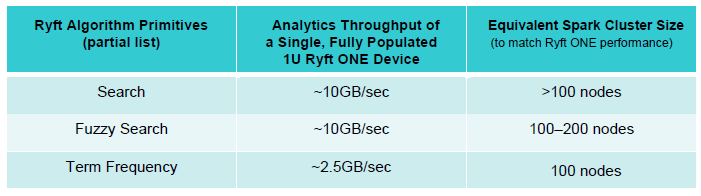

So what we have here is an FPGA-based system where the host processor handles basic tasks, allowing the FPGA to exclusively handle the bulk of the processing–all the while minimizing data movement, even when compared to how the fastest in-memory analytics approaches do so (Spark for instance). The numbers below are done within a 700 watt power envelope for the entire box. The efficiencies come in part from the FPGA, but this device is also the way the Ryft ONE it cuts down on data movement.

“Current large-cluster solutions using tools like Hadoop and Spark require an inordinate amount of ETL (extract, transform, and load) work. This often artificially inflates the size of the data significantly. And making matters worse, users are often forced to index the data to be able to effectively search it, which can further artificially inflate the data set size. (Not to mention the amount of time it takes to do those things – days and weeks in some cases, especially if multiple indexes are required.) With the Ryft ONE, you can analyze data in its rawest form,” McGarry highlighted. “You aren’t required to ETL or index it. That’s a huge differentiator. If you want to add some structure for your own purposes, you are certainly more than welcome (such as perhaps XML, or something along those lines), but it is by no means a requirement.”

Again, those numbers (and the entire FPGA field) are to be taken with a grain of salt (like all benchmarks) because these are very specific codes. If your core business is machine learning, certain types of search functions, or in coming months, image and video processing, this might be of interest. And while you can still make use of that unnamed but Intel “high performance” X86 processor inside and offload things to the FPGA on board, you could, but it would be a damned expensive way to do things (these are sold as hosted/on-prem rentals with an $80,000 configuration fee and $120,000 per year license that includes all updates, new primitives, etc.).

But of course, back the big question here—the efficiencies can be benchmarked in theory, but how do you actually program the thing, especially since this is one of the limiting factors for broader FPGA adoption? And further, how is it this small company claims to have figured out the secret to making FPGAs simple to program and use when the largest makers and their communities haven’t solved this problem?

The answer brings us back to the fact that this is, at its simplest, an appliance. It comes with “precooked” ways of handling a select set of highly valuable data analytics problems. But as McGarry added, the API Ryft is using is open (available on its site) and based on C, which means in theory, it’s possible to wrap almost any programming language in the world around it since many (Java, Scala, Python, etc) can all invoke C. The idea then is that the only thing required to use the FPGA is to make a high level function call—you call the routine and it’s there. “We tried to abstract away everything possible and by doing it this way, you don’t even need to know how to spell FPGA, and it also makes it easier on our end because we don’t have to support all of the other tools (visualization, etc), you can just write it. Our box is for performance purely.”

So with these potential advantages in mind in terms of programmability and performance, how can such systems—which again, were not built with traditional clustering and scalability in mind—still scale? The target workloads require processing of around 10TB on average, which is one of the reasons they decided to natively support up to 48TB in the 1U Ryft ONE. While it’s possible to do that across multiple systems, overstocking the machine means most of their users will never need to, in theory at least.

And even with all the data processing capability in the world, it’s meaningless without a way to move the data. The Ryft boxes have two 10 Gb/sec Ethernet ports that can be used for arbitrary data ingress and egress. “Since our analytics performance in our backend analytics fabric is on the order of 10 gigabytes per second, we can easily handle line rate 10 gigabit Ethernet (since using 8B10B encoding, that translates to only one gigabyte per second) – with room to spare. This means that should the market decide that we need higher network interface speeds, we can make that happen with a minimal amount of re-engineering.”

In terms of scaling beyond this, McGarry says that if a user needs more than the 48TB for data at rest, and more than two 10 Gb/sec network links, then you could utilize multiple Ryft boxes to scale. “However,” he notes, “you wouldn’t use them in what you traditionally think of as a ‘cluster’. You would instead implement a data sharding approach, determining which of the two (or more) Ryft ONE boxes you’d send the data for processing.”

Other companies dipped an early toe in the FPGA box waters, including high performance computing server maker, Convey, which did something similar for a select set of workloads. As OpenPower continues its development, we can expect a new crop of FPGA-powered systems to emerge. Further, if indeed it’s true that Intel will be buying Altera, one can only guess what lies on the horizon, but for now, we’ll wait in the wings for a user story to emerge from Ryft to provide some much-needed real-world insight.

Be the first to comment