For a company that was formed well before electricity, the consumer products company Procter and Gamble has continued to modernize. The company has become well known in high performance computing for its use of advanced modeling and simulation on leading-edge supercomputers. While it might be surprising how many core hours can be thrown at baby diapers or dish soap, P&G has paved the way with open use cases highlighting how commercial HPC can improve product development, whether the goal is a better engine or a less sticky shampoo.

One of the reasons we hear about P&G and its supercomputing efforts is that it is one of only a select set of large public companies that are open about their computational work. That work is done with almost all of the national labs and supercomputing centers in the U.S., where everyday products are modeled for integrity, safety, and usability. The interesting thing is that even though the company is often touted for these partnerships, its own in-house systems are on par with those in oil and gas, financial services, and other arenas where large-scale HPC systems are most common.

While there is little data available about the architecture of all of its machines (we can assume that there are some that are not publicized), a few things are clear: the company has clusters that are on par with some of the largest systems in the world, and they run mostly commercial codes. Of the known systems at P&G, there is at least one Cray/Appro system, in this case a 5,120-core InfiniBand-connected “Sandy Bridge” machine that was installed in 2012 without any accelerators.

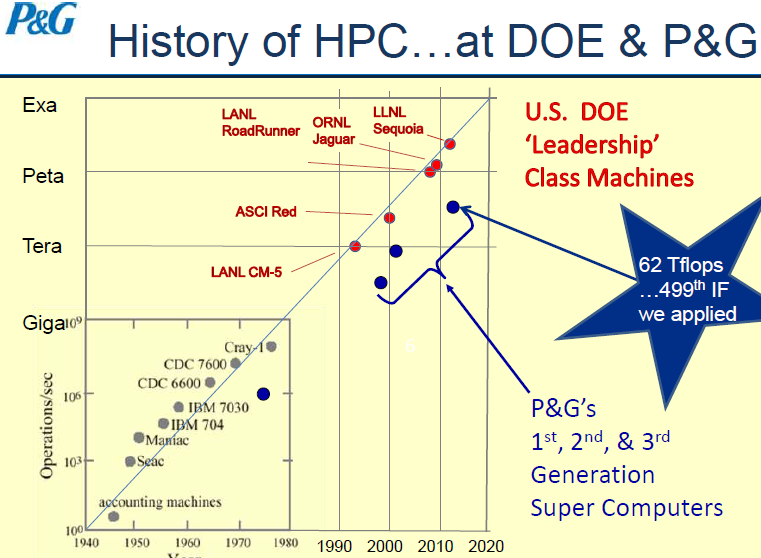

Although the chart shown here is a bit dated (three years), it does show the level of HPC investment at P&G in relation to other top-tier supercomputing sites. The company does not often run LINPACK to publicly list its machines on the Top 500 list of the fastest systems, but it is clear that it takes HPC seriously, even if it is using commercial modeling and simulation codes that do not tend to have massive scalability. The guess here is that P&G uses these systems for many different applications and thus tends to go for “vanilla” HPC infrastructure to suit as many commercial manufacturing and modeling codes as possible. This could be part of the reason why the company is only just dipping its toes into GPU computing waters, despite the fact that many of the top-tier systems are using some form of acceleration, either discrete GPUs or, increasingly, Xeon Phi.

As we found out today during a presentation at the GPU Technology Conference (GTC), however, P&G has been relatively slow to adopt GPUs, both in the select groups within the company that handle modeling and simulation of materials and on the larger systems that handle other HPC applications. This is despite the clear benefits of accelerating computational chemistry models with GPUs to improve the way products interact with the skin and change structurally when additives (perfumes, dyes, etc.) are integrated. That might be changing as more molecular dynamics and computational chemistry codes are ported to GPUs and still others are scaled to ever-increasing core counts.

This reluctance to push GPUs into a mainstream role at P&G stems from the fact that only a small number of groups within the company currently leverage GPU computing. Further, as Russell Devane, a senior scientist in the Corporate Functions Modeling and Simulation Group, said today at GTC, even though several commercial codes have been ported to GPUs, some that P&G uses extensively have only recently been moved to run on Nvidia’s accelerators.

“We’re a small computational chemistry group within P&G but we do have a huge computational engineering group and they do use a lot of commercial codes that have not been ported to GPUs. It’s hard to get the big HPC group in our company to commit to GPUs when only a small number of us are using them. But that is changing now that the commercial codes are being ported and in our larger company we won’t have many GPUs but we are going to have some coming up, which is a huge step forward,” Devane says.

Devane notes that a group the size of his could not have handled the code modifications needed to run the LAMMPS and NAMD molecular dynamics packages it currently uses on GPUs; that work was already done. However, he says that the upcoming systems at P&G will include some nodes that can take advantage of GPU acceleration, even though the number will be small to start.

The small group Devane works with models the lipids that make up many of P&G’s products, how these interact with additives, and how they penetrate the skin. While he did not provide details about GPU performance compared with traditional CPU-only systems or workstations, he did say that the work they are doing is proving a point to the larger HPC engineering groups at P&G. The big barrier, however, is simply a matter of code portability, even though many of the most common modeling and simulation codes are already primed for GPUs.

Ultimately, he says, the work that P&G does on the materials modeling and computational chemistry side is about making very small improvements. When one considers that each day there are 4 billion uses of a P&G product, quality is a matter of scale: incremental or even tiny improvements in how products are engineered (even at the molecular level) can have a net effect on the bottom line for P&G, which can then be matched with a better, safer product for consumers. If GPUs can enable this at scale, Devane says he is hopeful that GPU computing will add a new dimension to P&G’s modeling and simulation strategy in coming years. The company continues to inch toward investing in more accelerated nodes for his team and for others working with small models that, over the course of many millions of individual products, will make a big difference.
