Dell recently unveiled its datacenter liquid cooling technology under the codename of Triton. Dell’s Extreme Scale Infrastructure team originally designed and developed Triton as a proof of concept for eBay, leveraging Dell’s existing rack-scale infrastructure.

In addition to liquid-cooled cold plates that directly contact the CPUs, Triton embeds liquid-to-air heat exchangers to remove the airborne heat from a large number of tightly packed, hot processor nodes. Cooling the processors uses 80% of Triton’s cooling capacity, which leaves 20% as “overhead”. That overhead capacity cools the warm air that has pulled heat from the conventionally air-cooled components: the “airborne heat load”.

Dell’s Triton water cooling system uses a standard industrial water supply that meets ASHRAE specifications; no external filtering or conditioning is required. Inflow water can be as warm as 38°C / 100°F, which produces an ambient air temperature of 45°C / 113°F, the maximum input air temperature allowed for operating the servers. If Dell were running the Intel Xeon E5 processors in this pilot at a normal thermal load (they are not; much more detail below), the inflow water temperature could be as high as 65°C / 149°F.
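
The quoted figures imply roughly a 7°C approach between the inflow water and the air the chassis heat exchangers deliver (38°C water, 45°C air). The Python sketch below assumes that approach stays constant across inflow temperatures, which is our simplification rather than a Dell specification, and checks an inflow temperature against the 45°C server air-inlet limit. The function names are hypothetical.

```python
# Figures quoted for the Triton pilot; the constant-approach model is an assumption.
MAX_SERVER_INLET_AIR_C = 45.0   # maximum air temperature allowed into the servers
REFERENCE_WATER_C = 38.0        # maximum inflow water temperature quoted by Dell
APPROACH_C = MAX_SERVER_INLET_AIR_C - REFERENCE_WATER_C  # ~7 C water-to-air approach


def c_to_f(celsius: float) -> float:
    """Convert Celsius to Fahrenheit."""
    return celsius * 9.0 / 5.0 + 32.0


def estimated_air_temp_c(inflow_water_c: float) -> float:
    """Estimate chassis air temperature, assuming a fixed approach temperature."""
    return inflow_water_c + APPROACH_C


def inflow_within_limit(inflow_water_c: float) -> bool:
    """True if the estimated air temperature stays at or below the server limit."""
    return estimated_air_temp_c(inflow_water_c) <= MAX_SERVER_INLET_AIR_C


if __name__ == "__main__":
    for water_c in (30.0, 38.0, 40.0):
        air_c = estimated_air_temp_c(water_c)
        print(f"water {water_c:.0f}C ({c_to_f(water_c):.0f}F) -> air {air_c:.0f}C, "
              f"ok={inflow_within_limit(water_c)}")
```

This model only reflects the pilot’s high-power configuration; the 65°C figure for a normal thermal load implies a different relationship between water and air temperatures that the sketch does not capture.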

The net effect is that Triton effectively neutralizes all of the heat produced by the server rack. In practical terms, a Triton server rack can recirculate air inside an environmentally sealed box. All it needs is input water that meets spec and a reasonable ambient air temperature. If it is not in a sealed box, it can help cool adjacent equipment, or at least add no airborne heat load to the room.

At the core of Triton’s design was the requirement to stuff as many semi-custom Intel Xeon E5 processing nodes as possible into a standard-height rack. The demo rack we were shown was 30 inches wide and built specifically for one customer, but Triton can be implemented in a standard 24-inch-wide rack. The 30-inch rack enabled Dell to cram 96 high-performance 2P processor nodes (192 processor sockets) into a 48U-tall rack.

How did Dell pull this off? At the Dell Enterprise Innovation Day in June, Dell took a few members of the press and analysts behind the scenes to show how it accomplished this feat.

Chassis And Rack

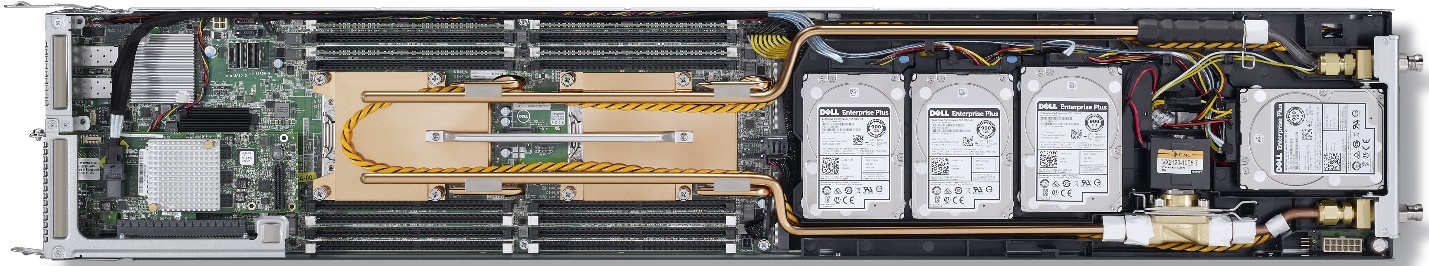

Instead of creating a new modular chassis for Triton, Dell’s system designers reused as much of their existing DSS 9000 rack-scale infrastructure as possible to keep costs low. As a result, Triton’s designers settled on one-third-width server sleds that are slightly taller than a normal 1U sled (50.4 mm, where 1U is 44.5 mm), and each DSS 9000 chassis holds twelve Triton sleds. With two processor sockets in each sled, each DSS 9000 chassis can host up to 24 processors.

Each Triton rack can support up to eight DSS 9000 chassis. A fully loaded rack with eight chassis has no vertical space left for a top-of-rack (TOR) switch at the actual top of the rack, so Dell’s designers mounted the TOR switch sideways just above the water filters on the left side of the customer-owned rack enclosure used for the demo (the TOR switch was removed for our demo, but see below for water filter placement). In a standard 24-inch-wide, 42U-high rack, TOR switches can be accommodated by installing only seven DSS 9000 chassis.
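
The density figures follow from simple multiplication. This Python sketch uses only the counts quoted above (twelve sleds per chassis, two sockets per sled); `rack_totals` is a hypothetical helper name, and the seven-chassis totals are our own extrapolation of the standard-rack option rather than a Dell-published figure.

```python
# Rack-density arithmetic for Triton, using the figures quoted in the article.
SLEDS_PER_CHASSIS = 12      # one-third-width sleds per DSS 9000 chassis
SOCKETS_PER_SLED = 2        # 2P compute nodes
SLED_HEIGHT_MM = 50.4       # Triton sled height
ONE_U_MM = 44.5             # nominal 1U height used in the article


def rack_totals(chassis: int) -> tuple[int, int]:
    """Return (sleds, processor sockets) for a rack holding `chassis` DSS 9000 chassis."""
    sleds = chassis * SLEDS_PER_CHASSIS
    return sleds, sleds * SOCKETS_PER_SLED


if __name__ == "__main__":
    print(f"sled height is {SLED_HEIGHT_MM / ONE_U_MM:.2f}U")
    for chassis in (8, 7):  # 8 = demo rack; 7 = standard rack with room for TOR switches
        sleds, sockets = rack_totals(chassis)
        print(f"{chassis} chassis: {sleds} sleds, {sockets} sockets")
```

Eight chassis reproduce the demo rack’s 96 sleds and 192 sockets; dropping to seven chassis to make room for TOR switches trades away 12 sleds (24 sockets).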

Power Delivery

Triton is designed to cool 192 semi-custom 200-watt Xeon E5 v4 20-core processors running at a constant turbo-mode speed of 3.2 GHz (most of the processor’s P-states have been set to 3.2 GHz; max turbo-mode with only one core active has been set to 3.3 GHz). As a baseline, Intel’s stock 135 watt Xeon E5-2698 v4 20-core processor with 50 MB cache runs at 2.2 GHz with all cores active, but with only one core active can run at 3.6 GHz. Increasing power consumption by 48 percent increases operating frequency by 45 percent, and it is also likely that these semi-custom Xeon processors have the larger 55 MB cache and perhaps some other tweaks.
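
The 48 and 45 percent figures can be checked directly. The short Python sketch below also shows that nominal power per GHz of all-core clock stays nearly flat between the two parts. This is back-of-envelope arithmetic on rated TDP and clock speed, not a measured efficiency comparison.

```python
def percent_increase(baseline: float, new: float) -> float:
    """Percentage increase from baseline to new."""
    return (new / baseline - 1.0) * 100.0


# Stock Xeon E5-2698 v4 (all-core 2.2 GHz, 135 W) vs. Triton's semi-custom part (3.2 GHz, 200 W)
power_gain = percent_increase(135.0, 200.0)    # ~48.1%
clock_gain = percent_increase(2.2, 3.2)        # ~45.5%
watts_per_ghz_stock = 135.0 / 2.2              # ~61.4 W per GHz
watts_per_ghz_triton = 200.0 / 3.2             # 62.5 W per GHz

print(f"power +{power_gain:.1f}%, clock +{clock_gain:.1f}%")
print(f"{watts_per_ghz_stock:.1f} W/GHz stock vs {watts_per_ghz_triton:.1f} W/GHz Triton")
```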

Each compute node also includes 16 DDR4 DIMM slots and four 2.5-inch SCSI hard disk drives (HDDs) or solid-state drives (SSDs). Only the processor sockets and their voltage regulators are directly cooled by water; the rest of the components are cooled by the DSS 9000’s largely standard airflow (with minor differences once the Triton components have been added).

Dell says that Triton can deliver up to 59 percent greater performance than the Xeon E5-2680 v4 at similar cost, through Triton’s higher clock speed, greater processor packing density, and full resource utilization.

However, delivering 200 watts to each processor socket in this demo requires 38.4 kilowatts of power for the processors alone. Add 16 DIMMs and four HDDs/SSDs per sled, along with networking and other support functions, and my calculations indicated 56 kilowatts of power delivered per rack. My estimate turned out to be close. Dell says that it is “delivering 55 kilowatts in 2N redundancy – so 110 kilowatts is being delivered to the rack with 55 kilowatts being the worst case maximum for IT power support.”
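
Working backward from Dell’s 55 kilowatt figure shows how little headroom is left once the processors are fed. This Python sketch uses only the numbers quoted above; spreading the non-processor budget evenly across the 96 sleds is our simplifying assumption.

```python
# Rack power budget from the figures quoted for the Triton demo rack.
SOCKETS = 192
WATTS_PER_SOCKET = 200
SLEDS = 96
IT_POWER_KW = 55.0          # Dell's worst-case IT power figure
REDUNDANCY_FACTOR = 2       # 2N power delivery

cpu_kw = SOCKETS * WATTS_PER_SOCKET / 1000           # 38.4 kW for processors alone
other_kw = IT_POWER_KW - cpu_kw                      # left for DIMMs, drives, networking, support
other_w_per_sled = other_kw * 1000 / SLEDS           # ~173 W per sled, if spread evenly
delivered_kw = IT_POWER_KW * REDUNDANCY_FACTOR       # 110 kW provisioned to the rack

print(f"CPUs: {cpu_kw:.1f} kW ({cpu_kw / IT_POWER_KW:.0%} of IT power)")
print(f"Everything else: {other_kw:.1f} kW, ~{other_w_per_sled:.0f} W per sled")
print(f"Provisioned with 2N redundancy: {delivered_kw:.0f} kW")
```

Roughly 70 percent of the rack’s IT power goes to the processors, leaving on the order of 170 watts per sled for 16 DIMMs, four drives and everything else.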

This very high power demand is why Dell had to invent a cooling solution that does not consume rack power. Because the rack consumes twice the power of many HPC racks, there is no “extra” power available for anything else in it. Dell’s goal was to support the highest component heat loads possible while also achieving the highest efficiency possible. Eliminating a secondary coolant loop with rack-level pumps was the innovation that made Triton possible.

Water Cooling Architecture

Dell’s Triton water cooling system is powered by the pressure of the water entering the rack. In its current form there must be a water pump in the data center water loop to generate the required rack inflow pressure. The pump is typically located in a mechanical room inside the data center building, but can be located outside the building next to the water cooling towers. The water pump’s power consumption was not mentioned because the pump will be sized to meet specific customer needs and its power supply is completely separate from rack level power delivery systems.

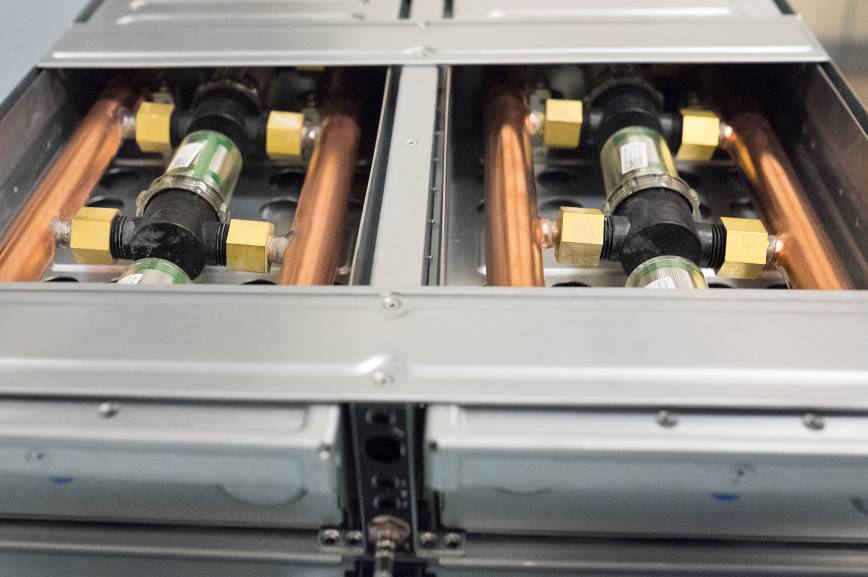

Water enters the rear of the rack through a splitter valve, which provides two independent feeds into the filters. The connectors marked with blue stripes are cool water inflow; those with red stripes are “warm” water outflow. Dell claims that Triton is unique in using standard ASHRAE spec facility water to cool servers without any additional water conditioning or cooling.

The two hot-pluggable water filters can be configured to operate in parallel to lessen their impact on water pressure, or in series for two-stage filtering where the inflow water is not ASHRAE compliant. In series mode, water flow stops when a filter is removed, but if it is replaced within a minute or so, there is no impact on cooling or performance.
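
Why parallel mode is gentler on water pressure can be sketched with a simple hydraulic model. The Python below assumes two identical filters whose pressure drop grows with the square of the flow through them, a common approximation for turbulent flow; the loss coefficient `k` and the flow value are made-up illustrative numbers, not Triton data.

```python
def filter_drop(k: float, flow: float) -> float:
    """Pressure drop across one filter, modeled as k * flow**2 (assumed quadratic loss)."""
    return k * flow ** 2


def parallel_drop(k: float, total_flow: float, filters: int = 2) -> float:
    """Identical filters in parallel split the flow evenly; the drop is that of one branch."""
    return filter_drop(k, total_flow / filters)


def serial_drop(k: float, total_flow: float, filters: int = 2) -> float:
    """Filters in series each carry the full flow, so their drops add."""
    return filters * filter_drop(k, total_flow)


if __name__ == "__main__":
    k = 0.05        # hypothetical loss coefficient, PSI per (GPM)^2
    flow = 10.0     # hypothetical rack flow, GPM
    print(f"parallel: {parallel_drop(k, flow):.2f} PSI")   # 1.25 PSI
    print(f"serial:   {serial_drop(k, flow):.2f} PSI")     # 10.00 PSI
```

Under this model two filters in series cost eight times the pressure drop of the same two filters in parallel, consistent with Dell offering parallel mode to lessen the pressure impact and reserving series mode for water that needs two-stage filtering.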

The standard filter removes particles 100 microns in diameter and larger. Two-stage filtering can take advantage of additional filters to remove corrosives and other hard-to-remove substances. Dell says that if data centers follow ASHRAE water quality guidelines, the standard filters are likely never to need replacing.

Flow-rate sensors and Triton’s management software evaluate the flow rate and the pressure differential across the filters to recommend filter replacement.
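
Pressure differential alone is ambiguous, because a clean filter also shows a larger drop at higher flow; normalizing by flow separates clogging from demand. This Python sketch assumes the same quadratic pressure-drop model as a simplification; `FilterReading`, `recommend_replacement` and the 1.5x threshold are hypothetical, not Dell’s management software or settings.

```python
from dataclasses import dataclass


@dataclass
class FilterReading:
    flow_gpm: float        # measured flow through the filter
    delta_p_psi: float     # measured pressure differential across the filter


def resistance(reading: FilterReading) -> float:
    """Flow-normalized resistance, assuming pressure drop ~ k * flow**2."""
    return reading.delta_p_psi / reading.flow_gpm ** 2


def recommend_replacement(baseline: FilterReading, current: FilterReading,
                          threshold: float = 1.5) -> bool:
    """Recommend replacement when resistance has grown past `threshold` x the clean baseline.

    The 1.5x threshold is a hypothetical placeholder, not a Dell setting.
    """
    if current.flow_gpm <= 0:
        return True  # no measurable flow: treat the filter as blocked
    return resistance(current) / resistance(baseline) >= threshold


if __name__ == "__main__":
    clean = FilterReading(flow_gpm=10.0, delta_p_psi=1.0)
    # Less flow for the same pressure drop means the filter is loading up.
    fouled = FilterReading(flow_gpm=8.0, delta_p_psi=1.0)
    print(recommend_replacement(clean, fouled))
```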

The base of each rack is a sealed tray to contain any rack-level leaks. Water sensors in the base are connected into the data center management network to report leaks. This containment and reporting strategy is a recurring theme at each level of Triton’s architecture.

DSS 9000 Heat Exchanger

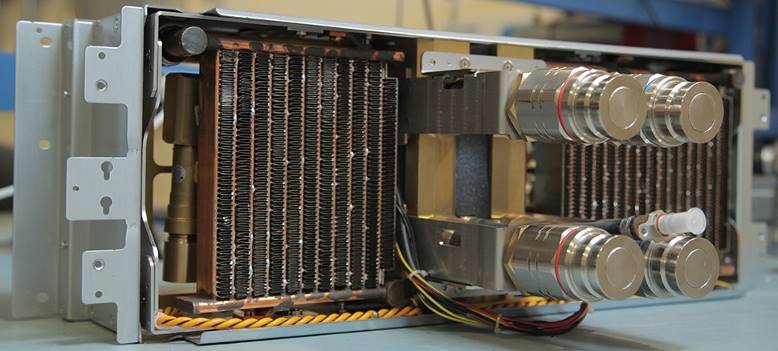

At Triton’s heart is the DSS 9000 chassis heat exchanger design. The heat exchanger is designed to fit into a stock DSS 9000 chassis (Dell says it will fit into any of its G5 chassis), leverage the stock chassis airflow, and provide a water connector ‘backplane’ for the hot-pluggable compute sleds. Dell’s DSS 9000 chassis incorporates built-in networking to simplify cabling and airflow, which also aids in routing water hoses.

The chassis heat exchanger fits between the rear fans and the sled compartments. It requires no extra physical space in the chassis. Behind the chassis, the water hoses connecting the chassis use the back-of-rack cabling space, so they don’t require extra rack space either.

The heat exchanger sits between the fans and the chassis’ sled connector backplane, and its water couplings take the place of the center four fans on the back of the chassis. It has integral leak detectors that also plug into the rack management system (the yellow wires shown in the photo are part of the leak detection system). There are no fans in the heat exchanger; it takes advantage of the airflow created by the remaining back-panel fans.

The front of the heat exchanger is designed as a “hot pluggable” water delivery backplane for the compute sleds. Each sled backs into inflow and outflow water plugs, as well as the electrical and signal plugs (not shown) located in front of the installed heat exchanger. They are hot pluggable because the plugs mechanically start and stop water delivery as a board is inserted or removed. Dell removed server sleds and other components and hoses during the operational demo; while there was a little moisture on the inside of the connectors, none of the connectors dripped water.

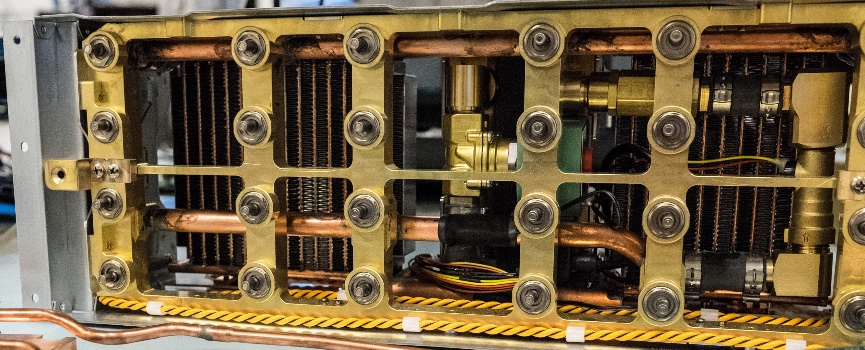

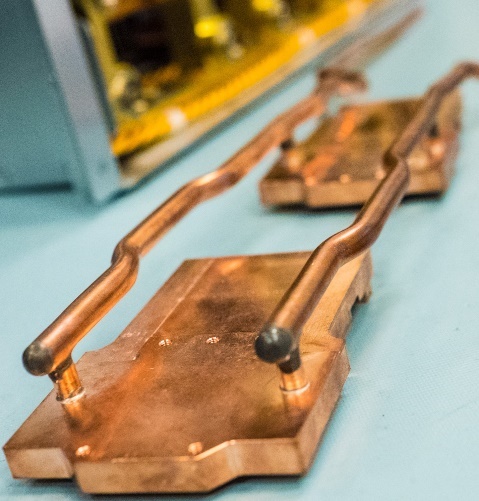

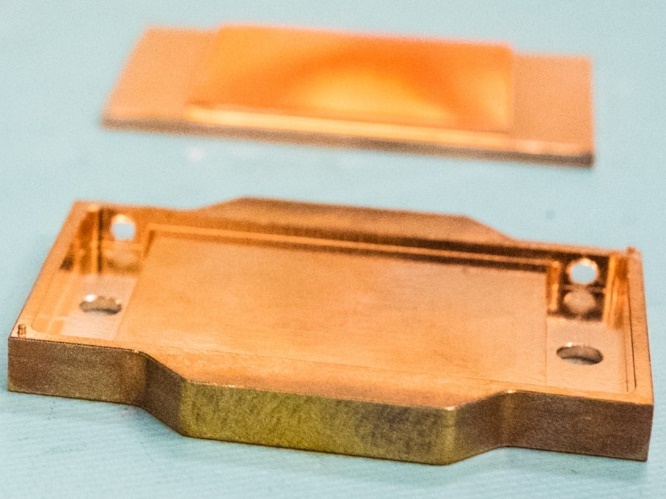

Compute Sled and Processor Heat Exchanger

Each Triton server sled has two water connectors at the back for inflow and outflow (shown on the right end of the sled in the photo below). Copper piping routes water through the processor and processor voltage regulator heat exchanger plates. The sled has a sealed bottom, so that leaks are contained inside the sled, and it also includes a leak detection sensor that is tied into Triton’s rack management system. If a leak is detected, an embedded solenoid in the sled cuts off both the sled’s water supply and its electrical connections. If the leak persists, the chassis leak detection circuitry cuts off the water supply to the chassis.
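
The sled-to-chassis escalation can be read as a small state machine. This Python sketch is illustrative only: the input flags, the `Action` names and the decision order are our assumptions, not Dell’s implementation.

```python
from enum import Enum, auto


class Action(Enum):
    NONE = auto()
    ISOLATE_SLED = auto()      # sled solenoid closes water and cuts sled power
    ISOLATE_CHASSIS = auto()   # chassis leak circuitry shuts off the chassis water supply


def leak_response(sled_leak: bool, sled_isolated: bool, leak_persists: bool) -> Action:
    """Escalate from the sled failure domain to the chassis failure domain.

    Inputs are hypothetical sensor/state flags chosen for illustration.
    """
    if not sled_leak:
        return Action.NONE
    if not sled_isolated:
        return Action.ISOLATE_SLED
    if leak_persists:
        return Action.ISOLATE_CHASSIS
    return Action.NONE  # sled already isolated and the leak has stopped


if __name__ == "__main__":
    print(leak_response(sled_leak=True, sled_isolated=False, leak_persists=False))
    print(leak_response(sled_leak=True, sled_isolated=True, leak_persists=True))
```

Acting on the smallest domain first keeps a single leaking sled from taking the other eleven sleds in its chassis offline.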

The tops of the processor and its voltage regulator are not coplanar, so each of the two heat exchanger plates (one for each processor) has offset surfaces. Inside the heat exchangers, small millimeter-sized fins, called “microchannels”, efficiently transfer heat from the copper plate to the water flowing through the processor heat exchanger.

Dell performs HALT (highly accelerated life testing) on the copper piping and fittings for the Triton server sleds at up to 350 pounds per square inch (PSI) of pressure. In normal use, the rack-level water cooling system operates at 60 to 70 PSI.

There are no heat exchanger plates on the HDDs, DIMMs, network processor, etc., so native airflow through the chassis and over the sled removes heat from these parts conventionally. The warm air from the sleds is pulled through the chassis heat exchanger, which cools the air and expels it through the back of the chassis. Not much airflow is needed to cool the rest of the system. The result is that an operational Triton rack has no net thermal footprint (provided there is a heat exchanger for the rack, row or data center located somewhere nearby).

Fitting Solutions For Water

Triton’s designers tested components so thoroughly, even putting them through a salt spray corrosion tank, that the most likely place for a leak is in the fittings: the connectors between subsystems. During our demonstration, plugging and unplugging water-filled hoses resulted in no leaks, but there was a little moisture on the plugs (seen on the right side fitting in the photo of the complete chassis hose, below).

Although the chassis’ water connections link the chassis in a rack together in series (each chassis is connected directly to the chassis above and below), each chassis contains a large bypass, so most of the inflow water supply goes on to the next chassis. Only a small portion of the water goes through an individual chassis’ heat exchanger. Water that has been heated while passing through a heat exchanger then exits the rack; it does not pass through another chassis’ heat exchanger. The result is that each chassis receives water at the rack inflow temperature.
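
The bypass matters because a true series loop would hand each chassis water that the chassis below it had already warmed. This Python sketch contrasts the two cases; the 1°C per-chassis rise is a made-up number chosen only to show the trend, and real rises would depend on flow and load.

```python
def inlet_temps_series(supply_c: float, rise_per_chassis_c: float, chassis: int) -> list[float]:
    """Inlet temperature of each chassis if all water flowed through every chassis in turn."""
    return [supply_c + i * rise_per_chassis_c for i in range(chassis)]


def inlet_temps_bypass(supply_c: float, chassis: int) -> list[float]:
    """Inlet temperature of each chassis with a bypass manifold.

    Each chassis draws a small share of supply-temperature water and sends its heated
    water to the return line, so every chassis sees the rack supply temperature.
    """
    return [supply_c] * chassis


if __name__ == "__main__":
    supply_c = 38.0   # maximum rack inflow temperature quoted for Triton
    rise_c = 1.0      # hypothetical per-chassis rise if the water were truly in series
    print("series:", inlet_temps_series(supply_c, rise_c, 8))
    print("bypass:", inlet_temps_bypass(supply_c, 8))
```

With eight chassis, even that small hypothetical rise would deliver 45°C water to the top chassis, well above the 38°C inflow ceiling, whereas the bypass keeps every chassis at the supply temperature.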

The straight sections of Triton’s back-of-rack water hoses are flexible. It’s not immediately obvious why, but as Dell explained, racked equipment is not installed within millimeter tolerances. Plus, it takes a lot of force to connect a hose to a chassis heat exchanger, and if the two ends of a hose are not aligned properly, it would be very difficult to connect a rigid hose. Flexible hoses make installation, administration and service easier.

Because connectors are the weakest point in the Triton system, Dell designed a water catchment and monitoring system for their back of rack chassis hoses. The plastic housings shown in the photos are designed to capture drips and spray from leaks, and through an innovative catchment system and sensors in each water cable housing box, leakage flow rate can be measured to assess leak severity. Leaked water cascades through the catchment system to the base of the rack.
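
Measuring how quickly water accumulates turns a wet/dry sensor into a severity signal. The Python sketch below assumes the catchment sensor can report accumulated volume over time; the function names, the mL/min units and the severity thresholds are hypothetical, since the article gives no values.

```python
def leak_rate_ml_per_min(volume_start_ml: float, volume_end_ml: float, minutes: float) -> float:
    """Average leak rate from two readings of water collected in a catchment."""
    if minutes <= 0:
        raise ValueError("interval must be positive")
    return (volume_end_ml - volume_start_ml) / minutes


def classify_leak(rate_ml_per_min: float) -> str:
    """Map a leak rate to a severity label (thresholds are hypothetical)."""
    if rate_ml_per_min <= 0.0:
        return "none"
    if rate_ml_per_min < 1.0:
        return "minor"      # e.g. residual moisture at a fitting: log and watch
    if rate_ml_per_min < 50.0:
        return "moderate"   # schedule service for the affected hose or fitting
    return "severe"         # isolate the affected failure domain now


if __name__ == "__main__":
    rate = leak_rate_ml_per_min(volume_start_ml=2.0, volume_end_ml=32.0, minutes=5.0)
    print(f"{rate:.1f} mL/min -> {classify_leak(rate)}")
```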

Failure domains

Dell’s Triton water cooling system can monitor performance and leaks at the base of the rack, at each chassis, at each sled in a chassis, and at the back of each chassis. Dell’s philosophy is “if you are going to use water, you have to plan for it to leak at some point.” So in addition to trying to build a 100 percent leak-proof system, Dell also built a reliable system that isolates subsystems into failure domains. Each failure domain operates both independently and in concert with the overall system.

Triton meters its water usage based on temperature and flow sensors throughout the system. Valves at each stage open and close dynamically, as needed. There are only a few gallons of water in a Triton rack’s pipes and hoses; if it were shipped full of water, it would not weigh significantly more. Nevertheless, Dell ships assembled Triton components filled with pressurized air instead of water.
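
Temperature and flow sensors are enough to meter heat: the heat a water stream carries away equals its mass flow times the specific heat of water times its temperature rise. This Python sketch assumes textbook water properties, that essentially all rack heat ends up in the water, and a hypothetical 10°C inflow-to-outflow rise (the article gives no outflow temperature). It estimates the flow a fully loaded 55 kW rack would need.

```python
WATER_CP_J_PER_KG_K = 4186.0   # specific heat of water (approximate)
WATER_DENSITY_KG_PER_L = 1.0   # approximation; warm water is slightly less dense
LITERS_PER_US_GALLON = 3.785411784


def heat_removed_kw(flow_l_per_min: float, inlet_c: float, outlet_c: float) -> float:
    """Heat carried away by the water: Q = m_dot * c_p * (T_out - T_in)."""
    mass_flow_kg_s = flow_l_per_min * WATER_DENSITY_KG_PER_L / 60.0
    return mass_flow_kg_s * WATER_CP_J_PER_KG_K * (outlet_c - inlet_c) / 1000.0


def flow_needed_l_per_min(heat_kw: float, delta_t_c: float) -> float:
    """Water flow required to absorb heat_kw with a delta_t_c temperature rise."""
    mass_flow_kg_s = heat_kw * 1000.0 / (WATER_CP_J_PER_KG_K * delta_t_c)
    return mass_flow_kg_s * 60.0 / WATER_DENSITY_KG_PER_L


if __name__ == "__main__":
    flow = flow_needed_l_per_min(heat_kw=55.0, delta_t_c=10.0)
    print(f"{flow:.1f} L/min ({flow / LITERS_PER_US_GALLON:.1f} US gal/min)")
```

At a 10°C rise this works out to roughly 79 L/min (about 21 US gallons per minute); halving the allowed rise doubles the required flow.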

End Note

We have seen a lot of innovative cooling systems, ranging from very expensive systems that heat whole buildings to much less expensive systems that enable smaller increases in density and performance. Triton strikes a balance: it adds architectural complexity but limits the impact on operational complexity.

Dell reports a power usage effectiveness (PUE) rating of less than 1.03 for its Triton pilot, including the facility pump’s power for pressurizing water into each Triton rack. This compares favorably with Facebook’s trailing twelve-month PUE ratings of 1.08 for its Forest City, North Carolina datacenter and 1.09 for its Prineville, Oregon datacenter. Moreover, a pump would not be required for an HPC installation of only a small number of Triton racks if the inflow water supply is above 60 PSI, as is typical of many municipal commercial water supplies.
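
PUE is total facility power divided by IT equipment power, so the non-IT overhead at a given IT load is the IT load times (PUE minus 1). This Python sketch applies the quoted PUE values to a single 55 kW Triton rack. Facebook’s figures are facility-wide twelve-month averages, so scaling them to one rack is only illustrative.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT equipment power."""
    return total_facility_kw / it_kw


def overhead_kw(it_kw: float, pue_value: float) -> float:
    """Non-IT power (cooling, pumps, power conversion) implied by a PUE at a given IT load."""
    return it_kw * (pue_value - 1.0)


if __name__ == "__main__":
    it_kw = 55.0  # Triton's worst-case IT power per rack
    for label, value in (("Triton pilot", 1.03), ("Forest City", 1.08), ("Prineville", 1.09)):
        print(f"{label}: PUE {value} -> {overhead_kw(it_kw, value):.2f} kW overhead")
```

At 55 kW of IT load, a PUE of 1.03 leaves at most about 1.65 kW for everything else, versus roughly 4.4 to 4.95 kW at Facebook’s reported ratios.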

Dell continually reminds us that pragmatic innovation can achieve dramatic breakthroughs.

About the Author

Paul Teich is an incorrigible technologist and a Principal Analyst at TIRIAS Research, covering clouds, data analysis, the Internet of Things and at-scale user experience. He is also a contributor to Forbes/Tech. Paul was previously CTO and Senior Analyst for Moor Insights & Strategy.

For three decades Paul immersed himself in IT design, development and marketing, including two decades at AMD in product marketing and management roles, finishing as a Marketing Fellow. Paul holds 12 US patents and earned a BSCS from Texas A&M and an MS in Technology Commercialization from the University of Texas’ McCombs School.
