Skyrocketing HBM Will Push Micron Through $45 Billion And Beyond

Micron Technology has not just filled in a capacity shortfall for more high bandwidth stacked DRAM to feed GPU and XPU accelerators for AI and HPC. …

There are lots of ways that we might build out the memory capacity and memory bandwidth of compute engines to drive AI and HPC workloads better than we have been able to do thus far. …

If you don’t like gut-wrenching, hair-raising, white-knuckling boom-bust cycles, then do not go into the memory business. …

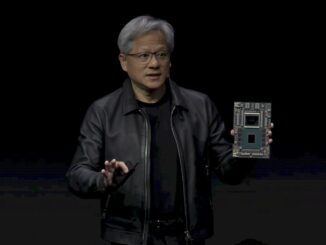

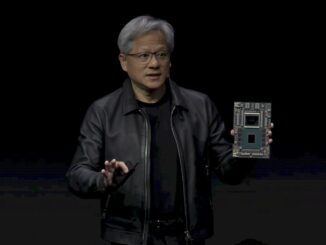

What is the most important factor that will drive the Nvidia datacenter GPU accelerator juggernaut in 2024? …

For very sound technical and economic reasons, processors of all kinds have been overprovisioned on compute and underprovisioned on memory bandwidth – and sometimes memory capacity depending on the device and depending on the workload – for decades. …

If large language models are the foundation of a new programming model, as Nvidia and many others believe they are, then the hybrid CPU-GPU compute engine is the new general purpose computing platform. …

All Content Copyright The Next Platform