Another Step Toward FPGAs in Supercomputing

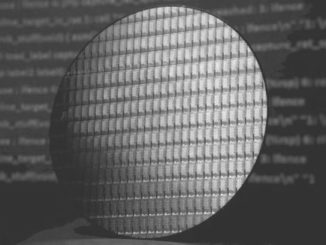

There has been plenty of talk about where FPGA acceleration might fit into high-performance computing, but only a few testbeds and purpose-built clusters are pushing this vision forward for scientific applications. …