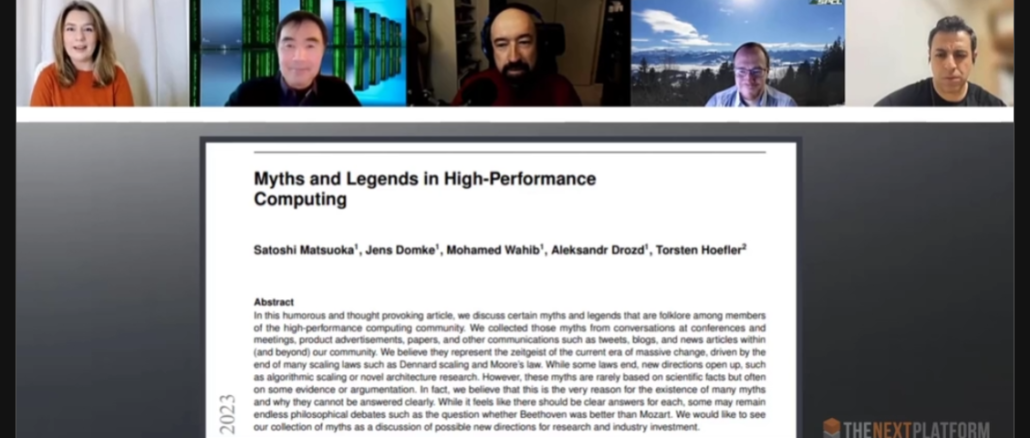

It’s rare in supercomputing to discover a paper that’s both insightful and amusing, but a distributed team led by Dr. Satoshi Matsuoka from RIKEN has managed to produce one.

“Myths and Legends in High Performance Computing” tackles the many angles of hype flying around HPC, from challenging the role AI and quantum computing might play in future large-scale systems to questioning how much hardware specialization will shape next-gen supercomputing.

We sat down with Dr. Matsuoka and his co-authors to pick apart some of the most pressing myths and legends. You can read them all in the recently published paper.

Our thanks to the authors for taking the time to hop on the line and keep things light without leaving these issues unexplored.

Superb interview and paper from the legendary five horsemen of HPCpocalypse mythology (including the invisible one)! In my opinion: Myth 13: RISC-V will imminently overtake HPC and every other computation, wiping the floor with all other CPU archs. False! Everyone knows that it will be RISC-VI instead … (encumbered by national security concerns; for the best given current geopolitics). Closing question: Will it ever be safe to open the RISC-VI ISA?

Thanks for this very nice video (with a few silly bits) and a quite sober (I think) article on hypes and realities in contemporary HPC. The many research axes, open questions, and how the “performance road” forks three ways (and you need a knife?, to quote TPM) are well summarized, with ample refs, like Haus (2021, e.g. slides 14-15) and Keyes (2022, 29th slide). I like how Figure 1 (page 4) illustrates compute- vs. bandwidth- vs. latency-bound workloads (as emphasized in Next Platform comments by Paul, I think), and how Figure 2, on efficiency, complements Keyes’ 8th slide and slide 12 in Haus. Clearly, a lot of solid work has been done, and is underway, to improve hardware and code for performance and efficiency. It stands to reason that if “old” algorithms favored computational simplicity (PCG?) and “new” CPUs/GPUs are fast relative to memory, then “new” HPC should benefit from more computationally intensive (“higher-order”, faster-converging) algorithms that re-tune kung-fu away from memory access (e.g. the Adaptive Mesh Refinement/AMR of Figure 3). In my mind, the bullets on p. 4 of Shaw et al. (2007) (Myth 4 section) add two interesting bits (from past Next Platform discussions): computational memory and autonomous DMA engines. Interesting because Intel SR Max chips have DSA (autonomous DMA), and if it helped Anton’s MD, it might be good for them too (esp. in biomolecular sims). Finally (leaving the demise of FP64 to a later comment), I think that, in the article, the words “stipulate” and “compostable” should be “stimulate” and “composable” instead (but I could be mis-exfoliating ;^})? All in all, very interesting and well-presented!

Positive aspects can run long as math. or myth.

In a thought-provoking, humorous, wedgie-styled, thong-in-cheek declaration, the researchers, pretending to yield to the powerful and most obscure force of high-pressure-hype emanating from ANN/ML sorcerers’ witchcraft, or Frankenstein (to misquote Rosenblatt ’69), proclaim the ritualistic evisceration of IEEE-754 DP (aka FP64) from future hardware (esp. in the video). There certainly exists, contemporarily, an undercurrent of this in paleo-luddite circles of retro-computing’s most puritan HPC sub-cults, where 8-bit is the pinnacle of modernity, 32-bit an orgasm too far, and FP64 evil incarnate. Such antediluvian, pre-Cro-Magnon, fossilized tunnel vision may be found so profoundly biodigested, deep in the bowels of computational stratigraphy, that it may well manifest as entropic hydrocarbons indeed, or gas. The monks of computational kung-fu, by contrast, meditate through all existing and future functional units of the latest chips of fury, to effortlessly (as viewed from the outside) levitate through the solution of the most complex problems, those of greatest benefit to humanity, polar opposites of cheatGPT, and for which FP64 hardware is like the tree to a monkey: Lotka-Volterra, Hindmarsh-Rose, Fisher-Kolmogorov, Turing patterns, Navier-Stokes, etc. (incidentally, 2022 was the 200th anniversary of Navier’s seminal 1822 paper, and of Fourier’s heat transfer paper, which later inspired Fick’s own 1855 diffusion paper; 2020 was the 100th anniv. of Lotka’s paper, 2028 will be that of Volterra’s; 2037 for Fisher and for Kolmogorov). Admittedly, I’ve let one rip here, from France as it were, where chatGPT is “le chat j’ai pété”, olfactorily undetectable after a short bout of turbulent diffusion (eh-eh-eh!) … while Fourier and Navier still thrive, 200 years on!