In case you haven’t figured it out yet, if you are not one of the hyperscalers or one of the biggest cloud builders, then you are a second class citizen, or maybe even third class, when it comes to the semiconductors that go into the different parts of the systems that run your organization.

In the old days – meaning less than ten years ago – it used to be that server CPU makers actually had announcement days that revealed their devices and opened the floodgates to general availability either at launch or soon thereafter.

Those days are gone, replaced by an endless trickle of feature reveals and by launch dates that really mark what has already been selling for one or two quarters, not what is just now for sale, all shiny and new.

To be fair, the largest HPC centers of the world used to be the only ones getting such royal treatment. We remember when the Intel “Sandy Bridge” Xeon E5 processors, which launched in March 2012, debuted first in supercomputers from Appro International the prior November at the SC11 supercomputing conference. Appro’s success at getting to the front of the line for Intel Xeon and AMD Opteron processors for many years was one of the reasons why Cray ate Appro in November 2012 for $25 million, giving it an instant commodity X86 cluster line to sell to customers that did not want – or need – its exotic interconnects.

Now, CPU makers are in a kind of tight loop with the hyperscalers and cloud builders, who not only get to help co-design the CPUs and their SKU stacks but also ensure they get the best (meaning lowest) pricing, the first availability, and as many vendor coffee cups as they need to make sure their server CPU competition knows the royal treatment they are getting. This shift from HPC to hyperscalers and clouds makes perfect sense given that HPC centers acquire a new machine every three years with several thousand nodes, a volume that is several orders of magnitude lower than what the hyperscalers and cloud builders buy each quarter.

In many ways, particularly when it comes to the overall profitability of a CPU line over time, it sucks to be a CPU maker. Everything is exactly backwards.

Now, at the front end of any cycle, the profits are relatively low because of the huge volume price discounts that the hyperscalers and cloud builders normally command – on the order of 50 percent over the years, depending on supply, which changes that equation in ways no one talks about publicly. And CPU makers have to rely on the other half of the volume ramp, with enterprises and telcos and other service providers, who buy in much lower volumes and therefore pay higher unit prices just as chip manufacturing costs are falling as yields improve.

In the old days, only the HPC centers and the most desperate large enterprises dove into a new CPU first, so volumes were relatively low and the price CPU makers could command was high and in direct proportion to the cost of the chip. As production ramped, the chip got cheaper at the same time customers started looking for deals, particularly in an earlier era when Xeons were competing against Opterons. The revenue and profit curves roughly tracked each other.

And now, they are inverted. The exception is when AMD has a little bit of leverage, as it most certainly does now, with Intel’s “Sapphire Rapids” Xeon SP processors only now ramping their volume and not expected to be formally launched until January 10 of next year. They are up against a “Milan” Epyc 7003 processor that has been in the field since March 2021 and a “Genoa” kicker with a lot more oomph due to be launched on November 10 of this year (and, of course, already shipping to the hyperscalers and cloud builders). This is the one time that being a CPU vendor in the datacenter most certainly does not suck.

Even with higher operating expenses as AMD pushes its Epyc CPU roadmap and covers the cost of ramping the 5 nanometer Genoa Epyc 9004s, operating income at the Data Center group rose faster than revenue. Part of that, we think, is that Milan is mature and still competitive with anything Intel can make and sell, so falling Milan manufacturing costs are translating into more profit as not just the hyperscalers and clouds but also the rest of the IT market adopt this processor.

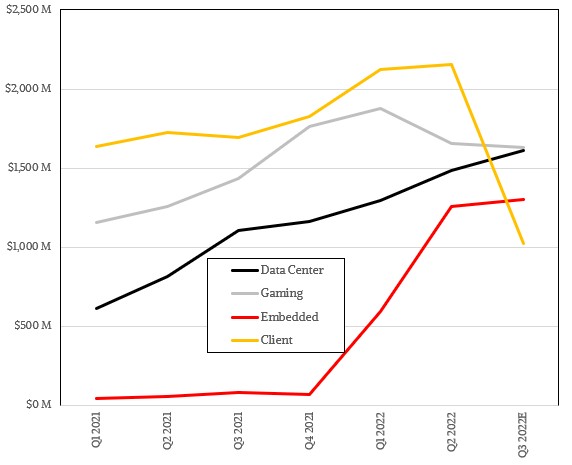

AMD has just posted final financial results for the third quarter – it put out preliminary results a month ago, when we made some specific estimates of sales and profits along with Wall Street – and as it turns out, the Gaming group is still a tiny bit larger than the datacenter business. But not for long.

When the beans were all finally counted up for the third quarter, AMD brought in $5.57 billion in overall sales, up 29 percent. But collapsing revenues in the PC chip market, a net amortization charge of $148 million for some of the assets acquired through the Xilinx and Pensando deals, higher research and development costs (up 62.9 percent to $1.28 billion), and higher sales costs (up 48.1 percent to $557 million) pushed AMD to an operating loss of $64 million. After an income tax benefit of $135 million, the company was able to book a net income of $66 million. This compares to $923 million in net income in the year ago quarter, when everything was booming and, as it turns out, the channel was starting to stuff itself with PC chips. Now that inventory has to burn down.
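The quoted figures do not quite close on their own: an operating loss of $64 million plus a $135 million tax benefit comes to $71 million, not the $66 million of net income. A minimal Python sketch of that bridge, using only the figures quoted above, shows the roughly $5 million gap. We assume that gap reflects interest and other non-operating items, which are not itemized here.

```python
# Q3 2022 figures quoted above, in millions of US dollars.
operating_income = -64.0     # operating loss
income_tax_benefit = 135.0   # a tax benefit adds to income
net_income = 66.0            # reported net income

# Net income = operating income + tax benefit + other items.
# "Other items" is an assumed residual; it is not broken out in the text.
pre_other = operating_income + income_tax_benefit
implied_other = net_income - pre_other

print(f"Operating income plus tax benefit: {pre_other:,.0f} million dollars")
print(f"Implied other items: {implied_other:+,.0f} million dollars")
```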

In the datacenter space, demand for high performance server CPUs remains high, and we think AMD can basically sell every chip it can get Taiwan Semiconductor Manufacturing Co to make.

AMD’s Data Center group figures bear this out. Sales were up 45.2 percent to $1.61 billion, and operating income was up an even faster 64 percent to $505 million. Sequentially, revenues were up 8.3 percent while costs rose only 7 percent. Operating profits in Q3 2022 were 31.4 percent of revenues, which is a little higher than the average across 2021 and 2022 thus far.
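The margin arithmetic is easy to reproduce. This Python sketch uses only the Data Center group figures quoted above and backs out the year-ago revenue and operating income implied by the rounded growth rates. Those implied year-ago numbers are therefore approximations, not AMD's reported Q3 2021 figures.

```python
# Data Center group figures for Q3 2022 as quoted above, in millions of US dollars.
revenue_q3_2022 = 1610.0
op_income_q3_2022 = 505.0

# Year-on-year growth rates quoted above (rounded, so back-outs are approximate).
revenue_growth = 0.452    # sales up 45.2 percent
op_income_growth = 0.64   # operating income up 64 percent


def operating_margin(op_income: float, revenue: float) -> float:
    """Operating income as a fraction of revenue."""
    return op_income / revenue


def year_ago(value: float, growth: float) -> float:
    """Back out the year-ago figure implied by a current value and a growth rate."""
    return value / (1.0 + growth)


margin_now = operating_margin(op_income_q3_2022, revenue_q3_2022)
revenue_then = year_ago(revenue_q3_2022, revenue_growth)
op_income_then = year_ago(op_income_q3_2022, op_income_growth)
margin_then = operating_margin(op_income_then, revenue_then)

print(f"Q3 2022 operating margin: {margin_now:.1%}")
print(f"Implied Q3 2021 revenue: about {revenue_then:,.0f} million dollars")
print(f"Implied Q3 2021 operating income: about {op_income_then:,.0f} million dollars")
print(f"Implied Q3 2021 operating margin: {margin_then:.1%}")
```

Because operating income grew faster than revenue, the implied year-ago margin comes out lower than the current 31.4 percent, which is the point the paragraph makes.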

Drilling into datacenter sales, AMD posted its 10th straight quarter of record server CPU sales, which was a mix of initial Genoa shipments and what we presume was a lot of Milan shipments. As a kind of leading indicator, Lisa Su, chief executive officer at AMD, said sales to the hyperscalers and cloud builders more than doubled year on year in Q3 and also increased sequentially from Q2, with more than 70 new instances based on AMD Epyc CPUs coming out from Amazon Web Services, Microsoft Azure, Tencent, and Baidu, which she called out by name, and from others, which she did not. Enterprise, service provider/telco, government, and academia accounts – which are all lumped into the same “enterprise” category – are being more cautious about spending at the moment, according to Su. She added that enterprise spending with the Data Center group in particular was down, which means sales of Epyc CPUs to enterprises were down.

Aaron Rakers, the semiconductor analyst at Wells Fargo, estimates that the hyperscalers and cloud builders account for at least 70 percent of Epyc CPU sales. If you do some math on that, extracting out some nominal GPU sales and even more nominal DPU sales, then we reckon a few things. To make cloud go up and enterprise go down, hyperscalers and cloud builders needed to account for at least 80 percent of Epyc sales in Q3 2022. That implies hyperscalers and clouds spent $1.23 billion on Epyc CPUs, up 105 percent year on year, and thus all other kinds of organizations together (again, that broader “enterprise” category) spent $307 million on Epyc CPUs, down only 2.6 percent over the period.
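The split above can be reproduced in a few lines of Python. This is a sketch that works backward from the two dollar figures we quoted, not a model of AMD's books. The residual between Data Center group revenue and total Epyc sales is simply attributed to the nominal GPU and DPU sales, and the year-ago figures are backed out from the rounded growth rates, so they are approximate.

```python
# Estimates quoted above, in millions of US dollars.
cloud_epyc = 1230.0          # hyperscalers and cloud builders, Q3 2022
enterprise_epyc = 307.0      # everyone else (the broad "enterprise" bucket), Q3 2022
datacenter_revenue = 1610.0  # Data Center group sales, Q3 2022

cloud_growth = 1.05          # up 105 percent year on year
enterprise_growth = -0.026   # down 2.6 percent year on year

total_epyc = cloud_epyc + enterprise_epyc
cloud_share = cloud_epyc / total_epyc

# Residual assumed to be the nominal GPU and DPU sales inside the Data Center group.
non_cpu = datacenter_revenue - total_epyc

# Year-ago figures implied by the (rounded) growth rates.
cloud_year_ago = cloud_epyc / (1.0 + cloud_growth)
enterprise_year_ago = enterprise_epyc / (1.0 + enterprise_growth)
cloud_share_year_ago = cloud_year_ago / (cloud_year_ago + enterprise_year_ago)

print(f"Total Epyc sales: {total_epyc:,.0f} million dollars")
print(f"Cloud share, Q3 2022: {cloud_share:.1%}")
print(f"Implied non-CPU residual: {non_cpu:,.0f} million dollars")
print(f"Implied cloud share, Q3 2021: {cloud_share_year_ago:.1%}")
```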

Su added that sales of Instinct datacenter GPU compute engines were “down significantly” compared to Q3 2021, when AMD was shipping the Instinct MI250X accelerators based on the “Aldebaran” GPU for the “Frontier” exascale-class supercomputer at Oak Ridge National Laboratory. Our model from last year, before Xilinx and Pensando were added to AMD, pegged datacenter GPU sales at $164 million in Q3 2021 and $148 million in Q4 2021, with sales returning to their normal run rate of around $70 million to $75 million in Q1 2022 and beyond. But this is just intelligent guesswork. We think that over time, as the AMD ROCm software ecosystem gets more traction, datacenter GPU sales will do better. But AMD is a long way from taking a big bite out of Nvidia in AI, even though its GPUs are certainly being adopted for very powerful supercomputers at HPC centers.

The Embedded group, which is dominated by the Xilinx FPGA business but which does not include the custom processors made for Sony and Microsoft game consoles (as the old Enterprise and Embedded group did at AMD before the recent reorganization), brought in $1.3 billion in sales and had an operating income of $635 million. In the year ago period when Xilinx was an independent company, it had sales of $936 million and a net income of $235 million, so being part of AMD has certainly helped Xilinx rise at about 2X the rate it was growing when it was independent.
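For a rough sense of scale, this Python sketch computes the year-on-year sales change and the two profit ratios from the figures quoted above. The comparison is loose by construction: the Embedded group is dominated by, but not limited to, the Xilinx business, and operating income is not the same measure as net income. Xilinx's own growth rate as an independent company is not given here, so the code does not try to check the 2X claim.

```python
# Figures quoted above, in millions of US dollars.
embedded_sales_q3_2022 = 1300.0
embedded_op_income_q3_2022 = 635.0
xilinx_sales_year_ago = 936.0
xilinx_net_income_year_ago = 235.0

# Sales change versus the year-ago independent Xilinx (not a like-for-like segment).
sales_growth = embedded_sales_q3_2022 / xilinx_sales_year_ago - 1

# Operating margin now versus net margin a year ago (different profit measures).
operating_margin = embedded_op_income_q3_2022 / embedded_sales_q3_2022
xilinx_net_margin = xilinx_net_income_year_ago / xilinx_sales_year_ago

print(f"Sales change vs. year-ago Xilinx: {sales_growth:+.1%}")
print(f"Embedded operating margin, Q3 2022: {operating_margin:.1%}")
print(f"Xilinx net margin, year ago: {xilinx_net_margin:.1%}")
```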

Looking ahead to Q4, AMD said that revenues are expected to be around $5.5 billion, plus or minus $300 million, which is around 14 percent growth year on year and flat sequentially. Assuming that the Data Center and Embedded groups will grow – they could be supply constrained, so be careful here – that would seem to mean the Client group will continue to ship products below the channel consumption level to burn off excess inventory created by the sharp decline in PC sales in the second half of this year.
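The guidance arithmetic can be checked in a few lines of Python. This sketch uses only the figures quoted above. The implied year-ago base is backed out from the rounded 14 percent growth rate, so it is an approximation rather than AMD's reported Q4 2021 revenue.

```python
# Q4 2022 guidance and Q3 2022 actuals quoted above, in millions of US dollars.
q3_actual = 5570.0
q4_midpoint = 5500.0
q4_band = 300.0                 # plus or minus
yoy_growth_at_midpoint = 0.14   # "around 14 percent"

q4_low, q4_high = q4_midpoint - q4_band, q4_midpoint + q4_band


def pct_change(new: float, old: float) -> float:
    """Fractional change from old to new."""
    return new / old - 1


seq_mid = pct_change(q4_midpoint, q3_actual)
seq_low = pct_change(q4_low, q3_actual)
seq_high = pct_change(q4_high, q3_actual)

# Year-ago revenue implied by the midpoint and the rounded growth rate.
implied_q4_2021 = q4_midpoint / (1 + yoy_growth_at_midpoint)

print(f"Guidance range: {q4_low:,.0f} to {q4_high:,.0f} million dollars")
print(f"Sequential change: {seq_low:+.1%} to {seq_high:+.1%} (midpoint {seq_mid:+.1%})")
print(f"Implied Q4 2021 revenue: about {implied_q4_2021:,.0f} million dollars")
```

A midpoint about 1.3 percent below Q3 is what "flat sequentially" means here, while the full guidance band spans a modest decline to modest growth.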
