Data movement is the king of all challenges for sites ranging from supercomputing centers to hyperscale datacenters. Maneuvering around these bottlenecks while ensuring security has given rise to Smart Network Interface Cards (SmartNICs), which provide high-speed connections to the network fabric and to multiple hardware elements (CPU, accelerators, NVMe, etc.).

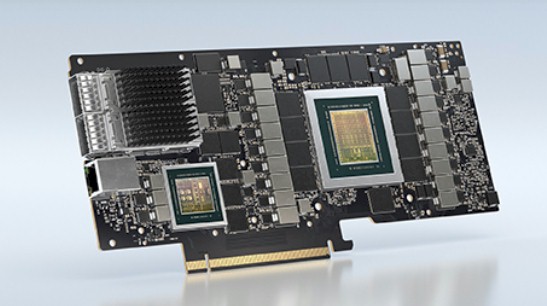

Part of what makes SmartNICs valuable is programmability, which gives the networking environment an application-specific edge. That means more efficient network functionality along with specialized acceleration and security. Not all SmartNICs are created equal. One that has received a great deal of attention is the BlueField-2 SmartNIC (formerly from Mellanox, now Nvidia).

To get a better handle on the performance limitations and opportunities of the BlueField-2 cards, a team from Sandia National Laboratories and UC Santa Cruz connected the SmartNICs to a 100Gb/s network in the NSF’s CloudLab with the BlueField software stack. The goal was to test the maximum processing headroom for applications when transmitting packet batches and to see which operations benefited most from the BlueField-2 network boost. Their tests revealed that it is quite possible to overrun the SmartNIC’s processing power for certain operations.

“While BlueField-2 provides a flexible means of processing data at the network’s edge, great care must be taken to not overwhelm the hardware,” the researchers conclude. “While the host can easily saturate the network link, the SmartNIC’s embedded processors may not have enough computing resources to sustain more than half the expected bandwidth when using kernel-space packet processing.”

That said, the bottleneck does not affect everything running through the SmartNIC, and many computational operations are untouched by it. “Encryption operations, memory operations under contention, and on-card IPC operations on the SmartNIC perform significantly better than the general-purpose servers” the team used for their comparisons.

The test got off to a rocky start after it crashed with packet sizes ranging from 128KB to 10KB in separated host mode. “The results for the BlueField-2 surprised us. While we knew the BlueField-2 card’s embedded processors would have trouble saturating the 100Gb/s network link, we expected that many worker threads generating large packets would be able to fill a significant portion of the available bandwidth.” They say that “at best, the card could only generate approximately 60% of the total bandwidth.”
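
To put the 60% figure in perspective, the following back-of-the-envelope sketch estimates how many packets per second a sender must generate to sustain a given bandwidth. It is simple arithmetic, not the researchers' benchmark: the payload sizes are illustrative assumptions, protocol header overhead is ignored, and 1 Gb is taken as 10^9 bits.

```python
# Back-of-the-envelope packet-rate arithmetic (not the study's benchmark).
# Assumptions: payload sizes below are illustrative, headers are ignored,
# and 1 Gb/s means 1e9 bits per second.

LINK_GBPS = 100           # link speed reported in the article
OBSERVED_FRACTION = 0.60  # "approximately 60%" quoted from the researchers


def packets_per_second(gbps: float, packet_bytes: int) -> float:
    """Packets per second needed to sustain `gbps` with fixed-size packets."""
    bits_per_packet = packet_bytes * 8
    return gbps * 1e9 / bits_per_packet


def ns_per_packet(gbps: float, packet_bytes: int) -> float:
    """Time budget per packet, in nanoseconds, at the given rate."""
    return 1e9 / packets_per_second(gbps, packet_bytes)


observed_gbps = LINK_GBPS * OBSERVED_FRACTION

if __name__ == "__main__":
    for size in (128, 1500, 10_000):  # hypothetical payload sizes in bytes
        full = packets_per_second(LINK_GBPS, size)
        budget = ns_per_packet(LINK_GBPS, size)
        print(f"{size:>6} B: {full:,.0f} pkt/s at line rate, "
              f"{budget:,.1f} ns per packet")
    print(f"Best observed rate from the card: about {observed_gbps:.0f} Gb/s")
```

The smaller the packet, the tighter the per-packet time budget, which is one way to see why embedded cores can fall behind a link that a host CPU saturates easily.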

Several configurations tested in the study are worth a closer look. No matter how they slice and dice it, the limitations are clear beyond certain basic operations. The conclusion, at least based on this assessment, is that “the advantage of the BlueField-2 is small.” They add that offloading functions to the SmartNIC requires careful consideration of the resource usage and operations of those functions to ensure the design is tailored to the given embedded environment. Their other conclusion is that this SmartNIC did not perform well at all with anything that was hitting local storage or file system-driven I/O, anything computationally (CPU) intensive, or operations that needed to pause for software or scheduler interference. For an HPC-tuned SmartNIC, that guidance doesn’t bode well.

Where this SmartNIC might fare better is in the more memory-intensive computing arena. As a concrete example, it would work particularly well with Apache Arrow (an in-memory columnar data format), which stores data as column vectors and uses an IPC mechanism to transfer those arrays between processes.
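
The idea of columnar vectors moved between processes can be illustrated with a minimal standard-library sketch. This is not Arrow's actual IPC format or API (Arrow defines its own schema, metadata, and alignment rules); the helper names `pack_columns` and `unpack_columns` and the wire layout are hypothetical, chosen only to show that shipping a column is mostly a bulk memory copy rather than per-value computation.

```python
import struct
from array import array


def pack_columns(columns):
    """Serialize {name: array} into one buffer: small header + raw column bytes.

    Hypothetical layout for illustration only; values use native byte order,
    so this is suitable for processes on the same machine.
    """
    parts = [struct.pack("<I", len(columns))]
    for name, col in columns.items():
        name_bytes = name.encode("utf-8")
        data = col.tobytes()  # the whole column is copied as one block
        parts.append(struct.pack("<H", len(name_bytes)))
        parts.append(name_bytes)
        parts.append(struct.pack("<cQ", col.typecode.encode("ascii"), len(data)))
        parts.append(data)
    return b"".join(parts)


def unpack_columns(buf):
    """Rebuild the {name: array} mapping from a buffer made by pack_columns."""
    view = memoryview(buf)
    (count,) = struct.unpack_from("<I", view, 0)
    offset = 4
    columns = {}
    for _ in range(count):
        (name_len,) = struct.unpack_from("<H", view, offset)
        offset += 2
        name = bytes(view[offset:offset + name_len]).decode("utf-8")
        offset += name_len
        typecode, data_len = struct.unpack_from("<cQ", view, offset)
        offset += 9  # 1 byte typecode + 8 byte length, no padding with "<"
        col = array(typecode.decode("ascii"))
        col.frombytes(view[offset:offset + data_len])
        offset += data_len
        columns[name] = col
    return columns


# Two columns of a tiny table: 64-bit integers and doubles.
table = {"id": array("q", [1, 2, 3]), "temp": array("d", [20.5, 21.0, 19.75])}
wire = pack_columns(table)
restored = unpack_columns(wire)
```

Because each column travels as a contiguous block, the work is dominated by memory bandwidth, which is the kind of workload the article suggests suits the card.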

The researchers speculate that this might be due to optimization of the Arm CPU. The real opportunity, however, is in encryption/decryption and compression/decompression. “Moving this function to the SmartNIC can significantly save CPU cycles for applications running on the host and at the same time, help reduce the function execution latency.”

A wealth of charts and analysis of the results can be found here.
