About 15 years ago, as Swami Sivasubramanian was making his way from grad school back into the working world, he saw that developers and builders at enterprises were being held back not by their skills or their ideas, but by their inability to access the technology needed to bring those ideas to the fore. Too often they were waiting for IT departments to procure and deploy the necessary hardware and software to build their applications.

After about four years at Amazon, Sivasubramanian made the jump to the Amazon Web Services subsidiary, the world’s largest public cloud provider, where he saw that the tools being deployed on the company’s cloud architecture – technologies like S3 storage and DynamoDB NoSQL database services – were giving developers easier, faster, and more cost-efficient access to the capabilities they were looking for. Such benefits derived from cloud computing have helped established businesses create and deploy new products and services and have helped startups launch themselves into the public market.

Now Sivasubramanian is vice president of artificial intelligence at AWS and he sees the same story playing out with artificial intelligence, and particularly its subset, machine learning.

“Until recently, it was only accessible to the big tech firms and cool startups that have the resources needed to hire experts to build sophisticated ML models,” Sivasubramanian said this week during the ongoing virtual re:Invent 2020 event being hosted by AWS. “But freedom to invent requires that builders of all skill levels can reap the benefits of revolutionary technologies. The technologies themselves allow for experimentation, failures and limitless possibilities. So today we are enabling all builders, irrespective of the size of their company or their skill level, to unlock the power of machine learning. Through feedback from our customers and our own experience implementing machine learning at Amazon, we have learned a lot about what it takes to create an environment that promotes boundless innovation.”

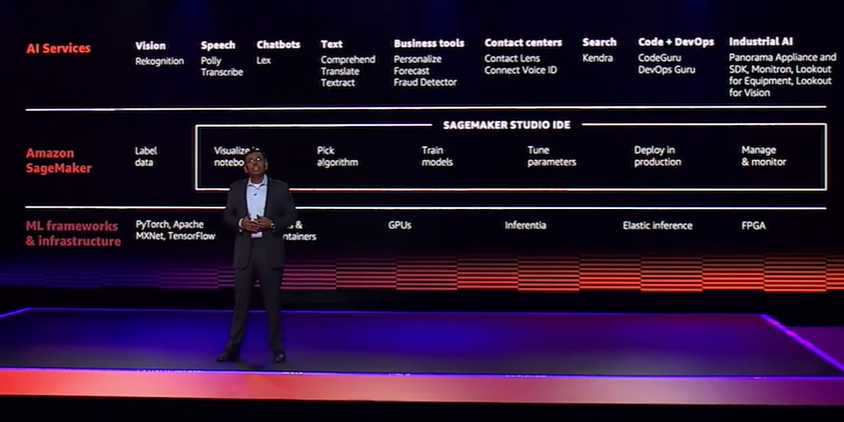

AWS and other big cloud providers – such as Microsoft Azure and Google Cloud – have been on a tear over the past few years building out the machine learning-based services they can offer organizations. For AWS, that has included everything from delivering the architecture – like CPUs, GPUs, FPGAs, containers, AI inference capabilities and support for ML frameworks like TensorFlow, PyTorch and MXNet – to services like Rekognition (computer vision), Comprehend, Translate and Textract (text recognition and extraction), Kendra (search) and CodeGuru and DevOps Guru (developers).

Foundational to much of this is SageMaker, the cloud-based machine learning platform that AWS launched three years ago. Through SageMaker and its SageMaker Studio IDE, developers have the tools to create, train and deploy machine learning models in the cloud and on edge devices. The platform also has been the focus of many of the AI-focused innovations by AWS in recent years, including during the first two weeks of this year’s re:Invent.

The massive amounts of data being generated – IDC is forecasting 175 zettabytes in 2025 – are helping to drive the embrace of AI and machine learning among organizations that need the advanced technologies to analyze it all (when developing machine learning models, the more data the better) to derive actionable business information. But machine learning and its various subsets, like deep learning and neural networks, demand a lot of compute from systems powered by various combinations of CPUs and GPU accelerators, which are often beyond what enterprises can afford. This is where cloud architectures are crucial, giving organizations access to the compute power they need without having to invest in the infrastructure themselves, and letting them pay for it through consumption models.

As we wrote, AWS over the first three weeks in December is rolling out one wave after another of new products and services, enhancements, upgrades and future plans all designed to ensure that in the rapidly expanding world of public clouds, it will be able to offer enterprises and other organizations all the services they need as more of their workloads, data and development happen outside of core datacenters. In the realm of AI and machine learning, that included Trainium, an AWS-designed custom chip for ML training, and EC2 instances powered by Gaudi neural network accelerators, fruit from Intel’s $2 billion acquisition a year ago of Habana Labs and its programmable deep learning accelerators.

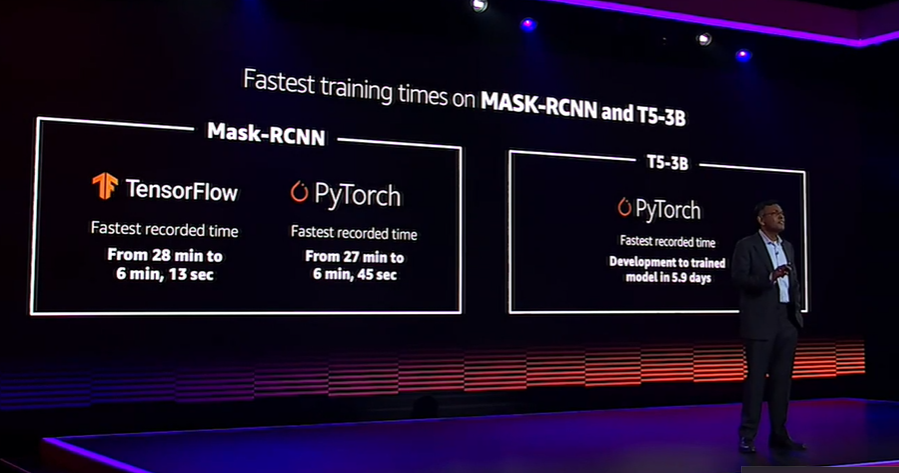

The cloud provider this week went beyond the hardware, delving into the software stack and frameworks. That includes new capabilities in SageMaker, including faster training times for the Mask R-CNN and T5 deep learning models. Training models like these is typically distributed across multiple GPUs, but even then there are challenges that can add time to the task. However, SageMaker now – through the SageMaker Data Parallelism library – has capabilities that enable organizations to train models faster by adding a few more lines of code to TensorFlow and PyTorch scripts and more fully leveraging AWS’ P3 and P4 GPU instances.
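To make the idea of data-parallel training concrete, here is a minimal conceptual sketch in plain Python. It is not the SageMaker Data Parallelism library or its API; the function names and the toy model are hypothetical and only illustrate the general technique, in which each worker computes a gradient on its own shard of the batch and the gradients are averaged before a single shared update.

```python
# Conceptual sketch of data-parallel training in plain Python.
# NOT the SageMaker Data Parallelism library; all names here are hypothetical.

def gradient(w, shard):
    """Gradient of mean squared error for the toy model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def split(data, num_workers):
    """Split the batch into equal-sized shards, one per simulated worker."""
    size = len(data) // num_workers
    return [data[i * size:(i + 1) * size] for i in range(num_workers)]

def data_parallel_step(w, data, num_workers, lr=0.01):
    """Each worker computes a gradient on its shard; the results are averaged
    (the role an all-reduce plays on real hardware) before one shared update."""
    shards = split(data, num_workers)
    grads = [gradient(w, shard) for shard in shards]  # would run concurrently on GPUs
    avg_grad = sum(grads) / len(grads)
    return w - lr * avg_grad

# Toy data generated from y = 3x, so training should move w toward 3.
data = [(float(x), 3.0 * x) for x in range(1, 9)]

w = 0.0
for _ in range(200):
    w = data_parallel_step(w, data, num_workers=4)
print(round(w, 3))
```

With equal-sized shards, the averaged gradient matches the gradient over the full batch, which is why splitting the work does not change the result. On real clusters the averaging step requires communication between GPUs, which is one of the overheads that can add time to distributed training.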

SageMaker also has other new capabilities. SageMaker Data Wrangler, which is integrated into SageMaker Studio, brings a visual interface that makes it easier and faster for developers and data scientists to prepare data for machine learning. It includes more than 300 built-in transformers, such as finding and replacing data and scaling numerical values, that can be selected from a pull-down list. SageMaker Feature Store is a central repository for storing and discovering curated data for training and prediction workflows. The features stored are organized in groups and tagged with metadata for easy discovery.
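For readers unfamiliar with these kinds of data preparation steps, the two transforms named above can be sketched in a few lines of plain Python. This is only an illustration of what such transforms do, not Data Wrangler's implementation or API; the function names and sample values are made up.

```python
# Hypothetical stand-ins for two kinds of transforms; not Data Wrangler's API.

def find_and_replace(values, old, new):
    """Replace every occurrence of `old` with `new` in a column of strings."""
    return [v.replace(old, new) for v in values]

def min_max_scale(values):
    """Rescale numbers linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: avoid division by zero
    return [(v - lo) / (hi - lo) for v in values]

cities = find_and_replace(["NYC", "SF", "NYC"], "NYC", "New York")
ages = min_max_scale([20, 30, 40])
print(cities)  # ['New York', 'SF', 'New York']
print(ages)    # [0.0, 0.5, 1.0]
```

Scaling numerical columns this way keeps features with large ranges from dominating features with small ones during training.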

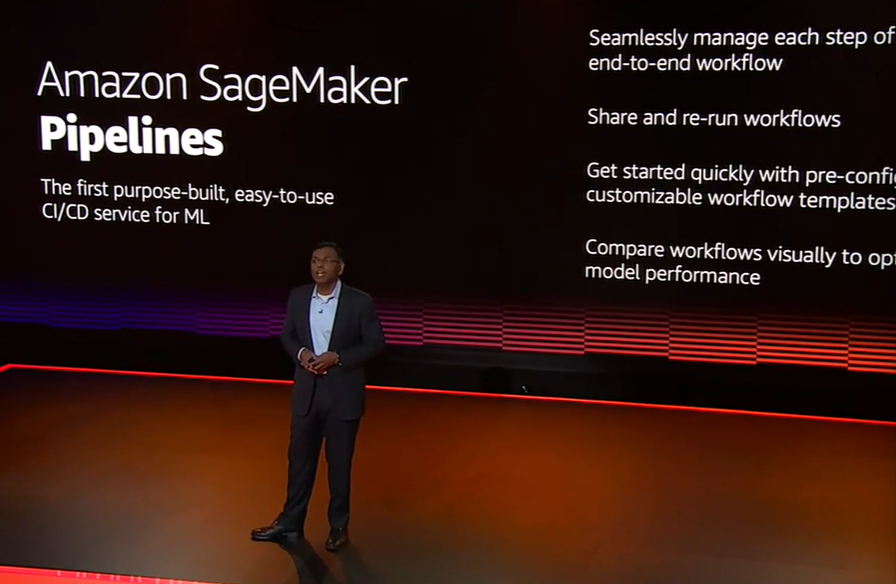

Developers also get a continuous integration and continuous delivery (CI/CD) service for machine learning in SageMaker Pipelines and, in SageMaker Clarify, a way to limit bias in their machine learning models – in everything from datasets to measuring – and to explain predictions.
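One simple way to check a dataset for bias is to compare how often a positive label appears in different groups. The sketch below shows that idea in plain Python. It is an assumption-laden illustration, not the SageMaker Clarify API, and the records, field names and helper functions are invented for the example.

```python
# Hypothetical sketch of a dataset bias check; not the SageMaker Clarify API.

def positive_rate(labels):
    """Fraction of records with a positive (1) label."""
    return sum(labels) / len(labels)

def label_rate_difference(records, group_key, label_key, group_a, group_b):
    """Difference in positive-label rates between two groups.
    A value near 0 suggests the labels are balanced across the groups."""
    a = [r[label_key] for r in records if r[group_key] == group_a]
    b = [r[label_key] for r in records if r[group_key] == group_b]
    return positive_rate(a) - positive_rate(b)

# Made-up loan records, for illustration only.
records = [
    {"group": "A", "approved": 1},
    {"group": "A", "approved": 1},
    {"group": "A", "approved": 0},
    {"group": "A", "approved": 1},
    {"group": "B", "approved": 1},
    {"group": "B", "approved": 0},
    {"group": "B", "approved": 0},
    {"group": "B", "approved": 0},
]
gap = label_rate_difference(records, "group", "approved", "A", "B")
print(gap)  # 0.5
```

A large gap like this one would be a signal to look more closely at how the data was collected before training a model on it.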

SageMaker Debugger now offers deep profiling of machine learning models to help ensure training performance, while SageMaker Edge Manager handles machine learning model monitoring and management at the fast-growing edge, using the same tools for both cloud and edge environments. SageMaker JumpStart is a developer portal for pre-trained models and pre-built workflows. It includes more than 15 solutions for common machine learning use cases like fraud detection and predictive maintenance, more than 150 models from the TensorFlow Hub and PyTorch Hub for computer vision and natural language processing, and sample notebooks for the built-in algorithms available in SageMaker.
