The Department of Energy has formed a partnership among Lawrence Livermore National Laboratory (LLNL), Los Alamos National Laboratory (LANL), and AI chip startup SambaNova to deliver systems with acceleration for AI and HPC workloads. The new box from SambaNova is currently attached to the Corona supercomputer and is already primed and chewing on early workloads.

Recall that LLNL in particular has been out front with adoption of some notable AI acceleration architectures, including the wafer-scale hardware from Cerebras, which we discussed in detail here. The Cerebras-accelerated machine is attached to the Lassen supercomputer at LLNL and has been shown to be useful for more general-purpose workloads in HPC as well as its intended AI purpose, as described in the linked article from May.

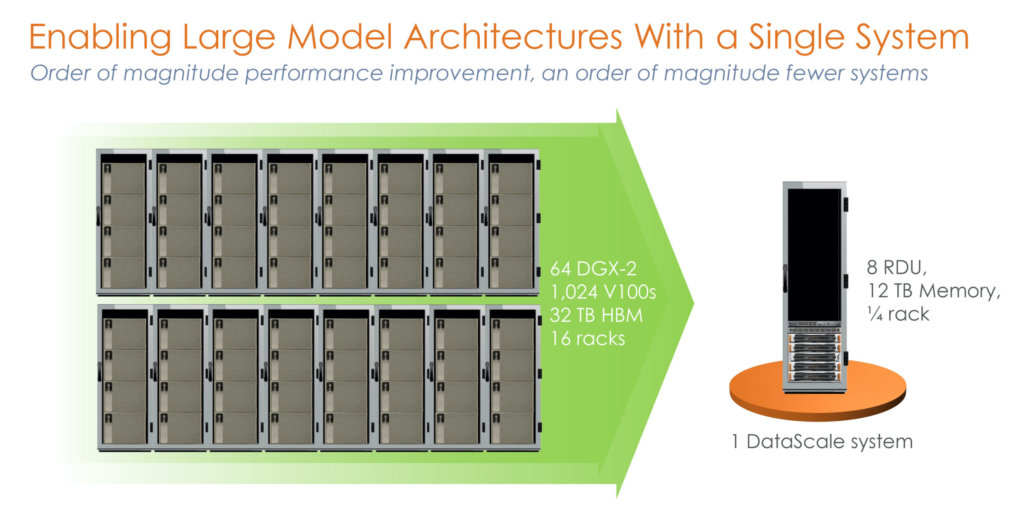

One of the hardware-centric leads for the new DataScale system is Ian Karlin, a computer scientist at LLNL. He tells us his team had been talking with SambaNova for two years before they made the commitment to deliver a machine at the end of the summer. “It was brought on site in September, we turned it on and the same day we ran some tests, acceptance testing over the weekend, and it was fully running by Monday. We’ve been putting it through the paces and have evolving data that’s showing promising results at the high level.” Of particular interest, Karlin says early tests show the SambaNova system performing 5X or better when normalized against Nvidia V100 GPUs.

The original plan had been to add the SambaNova machine to Lassen for a true apples-to-apples comparison among Nvidia V100 GPUs, Cerebras, and the new SambaNova acceleration engines. But as Karlin notes, two things pushed the decision to supplement Corona instead: the expanded CARES Act funding for the Corona super, and the need to start investigating how the all-AMD CPU and GPU stacks might interface with new accelerators ahead of the future AMD-based El Capitan supercomputer. The move will also give teams a chance to keep expanding their homegrown Flux resource management framework to flip and schedule between compute resource types.

Only a limited number of AI chip startups have stuck it out and continued to focus on the datacenter. While hardware performance is paramount (and gets all the attention), for the LLNL team it is SambaNova’s software environment that appears to have made the difference in the competitive bid. The team couldn’t tell us who else responded to the initial RFP for AI accelerators that could snap into HPC systems, but given how much the conversation turned to software, we have to figure it was far and away the differentiating factor over other startups, Groq for instance, which has long asserted that it excels at small batch sizes.

As Brian Van Essen, LLNL Informatics Group Leader and a computer scientist who has been toiling away on the software side with both the Cerebras and SambaNova systems, tells us, “We selected SambaNova for this procurement because one of the key features is they have the ability to do training and inference on small batch sizes. Inference at small scales is key, training on small batches is important for retraining and fine-tuning models. That’s something we’ll be doing.” He adds that key to the two-year engagement they’ve had with SambaNova so far has been the “maturity of the programming model and the team’s expertise with the software stack.”

Karlin added that the programming model is solid and has good integration opportunities, especially for some of the “in the loop” use cases (taking high fidelity physics and replacing it with high-quality data-driven ML models for tight inference at fast rates while directly coupled with multiphysics packages). “One thing we’ve seen is that to offload the physics you don’t just have a single model you’re calling into; it’s an ensemble of models trained to represent different types of physics or those of different atoms or key characteristic elements in the simulations. So the ability to host multiple models on the SambaNova system and be able to farm off individual requests and take advantage of the very low latency, especially at small batch sizes, is one of the differentiating features over something like a GPU architecture.”
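To make the ensemble idea concrete, here is a minimal Python sketch of the dispatch pattern Karlin describes: several surrogate models hosted side by side, with a simulation loop farming out individual batch-size-1 requests to whichever model covers the physics in question. Everything in it is a hypothetical stand-in for illustration (the `EnsembleHost` class, the `opacity` and `eos` model names, and the toy arithmetic inside each surrogate); it does not use or represent SambaNova's software stack or LLNL's multiphysics codes.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# A surrogate maps one cell's state vector to a predicted quantity.
# In practice this would be a trained ML model; here it is a plain function.
Surrogate = Callable[[List[float]], List[float]]


@dataclass
class EnsembleHost:
    """Hypothetical host holding one surrogate per kind of physics."""
    models: Dict[str, Surrogate]

    def infer(self, physics_kind: str, state: List[float]) -> List[float]:
        # Route a single (batch size 1) request to the matching model.
        if physics_kind not in self.models:
            raise KeyError(f"no surrogate registered for {physics_kind!r}")
        return self.models[physics_kind](state)


# Toy stand-ins for trained models (assumed names and arithmetic).
def opacity_surrogate(state: List[float]) -> List[float]:
    return [0.5 * x for x in state]


def eos_surrogate(state: List[float]) -> List[float]:
    return [x + 1.0 for x in state]


host = EnsembleHost(models={"opacity": opacity_surrogate, "eos": eos_surrogate})


def simulation_step(
    host: EnsembleHost, cells: List[Tuple[str, List[float]]]
) -> List[List[float]]:
    # Each cell asks for a different kind of physics, so requests are
    # dispatched one at a time instead of being gathered into a large batch.
    return [host.infer(kind, state) for kind, state in cells]


results = simulation_step(host, [("eos", [1.0]), ("opacity", [2.0, 4.0])])
```

The point of the sketch is the shape of the workload: many small, heterogeneous requests arriving from inside the simulation loop, which is where low latency at small batch sizes matters more than peak throughput on large batches.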

There are some unexpected angles to the software stack that the LLNL team found compelling, especially as they look to integration with future systems. “The ability to support many different models with a strong resource management perspective is something we’re looking to exploit and explore with SambaNova. They have a lot of cloud-like features and more of our HPC environment is moving toward taking advantage of some cloud technologies. The ability to virtualize and share resources on a disaggregated accelerator was a key evaluation point,” Karlin explains.

Aside from the software stack, SambaNova’s ability to do both training and inference, and to fit into LLNL’s vision for disaggregated infrastructure, was at the heart of the win. “We wanted a fully capable system that could do both training and inference so we can learn what is best for our workloads and how to optimize future systems,” Karlin says. Asked about Groq and whether the team evaluated its offerings, given its emphasis on batch size 1 and variable-precision capabilities, he says, “We could model away a lot of things that Groq would have given us, especially in terms of inference only acceleration. We can use both CPUs or GPUs or even SambaNova to figure out how much speedup we need from inference only and what makes sense.” He adds that they do not want separate training and inference accelerators right now. They’re still learning and thinking about how to build systems with AI components, evaluating the maturity of software stacks, and working out what is possible in terms of flexible designs that mesh with their vision for disaggregated resources.

When asked how well a SambaNova machine might handle more general-purpose HPC workloads that don’t require double precision, Van Essen points to the work already happening on the Corona supercomputer. “Some of the COVID work is nicely suited because a lot is done in single or mixed single-double precision already. We don’t have to worry about a lack of double-precision for a lot of the molecular dynamics or docking calculations.” Overall, he says they have ideas about a lot of things that might be well-suited to the AI architecture that aren’t deep learning specifically, but these will all take a lot of work, especially since much of the SambaNova stack is geared toward PyTorch and TensorFlow.

Looking Ahead to Future Systems, Integration of AI Hardware

One thing is for certain as we look at the few academic and government sites that have made investments in AI accelerators to do double duty with HPC applications: there is no desire to have separate clusters for training and inference. The software overhead is too high, for starters.

“What we’re trying to do [with Cerebras and SambaNova] now is to figure out how AI accelerators fit into our system design,” Karlin explains. “We’re building disaggregated heterogeneous system architectures because each of the building blocks is a full computer. You could, in theory, stick SambaNova on the side and do great ML and potentially HPC on its own, standalone. Corona is a great HPC machine that can also do machine learning as well as be used as a standalone machine.”

LLNL began some of this work on Lassen with Cerebras, targeting a couple of different concepts. We already described the in the loop calculations where AI has a fit, but there is also an “around the loop” use case that could be where AI accelerators shine in HPC and take a broader role. At the heart of these use cases, models learn from and refine on data generated by simulation campaigns, and can then be brought back with some computational steering and moved to where in the loop inference might be prevalent.

“Our desire to integrate the SambaNova system into Corona was driven by the same desires from our previous platform: we wanted to couple this accelerator tightly to an HPC system where we’re able to do these multiphysics simulations and see how the offload might work,” Van Essen says.

He adds, “the opportunity for AI accelerators is a beautiful convergence between the rise of deep learning as a key application that can act as a domain specific language for targeting these types of architectures…When we look at architectures like SambaNova we can see a clear path for how they accelerate AI workloads, low precision in particular, but with a high probability they’re not going to generalize to the entire HPC software space. There will be spaces they do well and others where GPUs will keep ruling the roost. But this is all why we are focused on this idea of a heterogeneous disaggregated platform.”

Karlin says that what we’re seeing now is akin to where GPUs were 15 or even 20 years ago. “Will they take over the HPC space eventually? Maybe. But that’s not going to happen for a decade. It will start where GPUs did, being successful running a few simple things and building over time. They’ll need new features and it might not even be economically feasible to have HPC SKUs.”
