The field programmable gate array has always been a different sort of animal in the semiconductor market. FPGAs evolved from simple collections of logic gates that can emulate other hardware, and can therefore run software. Since then they have followed their own evolutionary path while also making use of technologies developed for general purpose CPUs and custom ASICs.

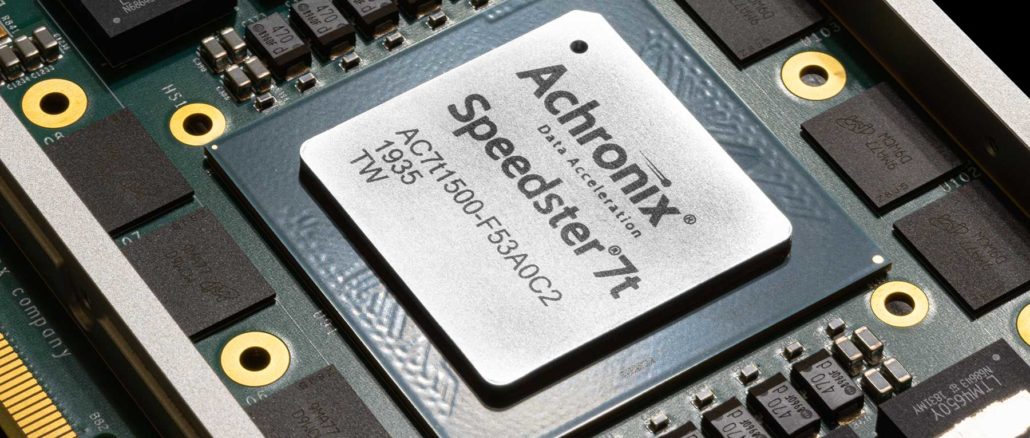

This will be one of the topics of conversation when we have a chat with Manoj Roge, vice president of product planning and business development at Achronix, at The Next FPGA Platform event at the Glass House in San Jose on January 22. (Registration for the event is open, and we hope to see you there.) Achronix, established in 2004, is a relative newcomer to the FPGA market that is starting to gain some traction against its much bigger rivals.

Roge is no stranger to the products and strategies of the key FPGA makers. From the mid-1990s through the mid-2000s, Roge worked at Cypress Semiconductor as a product line manager, covering a range of product lines in several different management roles. In 2005, Roge became senior manager of product planning at Altera, steering the company’s 65 nanometer and 40 nanometer products to market. (This was just after Achronix was founded.) Five years later, Roge became director of product planning at Xilinx, driving the company’s roadmap and ecosystem, and four years after that he became director of its planning and marketing efforts for the datacenter and communications product lines. In the summer of 2017, Roge took over his current job at Achronix, driving the strategy and roadmap for yet another FPGA maker. This experience gives him a unique view, and rather than being jaded, Roge is jazzed.

“There are several reasons why there is so much excitement,” Roge tells The Next Platform. “The number one reason is that all of the key workloads in the datacenter are changing at a rapid pace, and new ones, like machine learning, are coming of age. Everybody is looking for an order of magnitude reduction in power and improvement in cost efficiency. You just cannot scale datacenters by deploying more and more CPUs, and there is, I think, now general agreement in the industry that you need to have heterogeneous accelerators. An FPGA is one option, and there are many benefits of deploying FPGAs for future proofing your datacenters.”

This malleability is, of course, one of the key features of the FPGA. We have heard more than a few people refer to FPGAs as “dynamic ASICs,” and we would go further and call them “dynamic virtual ASICs,” inasmuch as they often present what amounts to a virtual CPU with a programmable virtual instruction set. Whenever workloads are changing fast, but not too fast, and low latency and parallel computing are required, an FPGA is something that has to be considered.

The way that Roge looks at it, there have been three eras of FPGAs, and we are still in the third, which began only a few years ago.

“The FPGA 1.0 phase was about glue logic, running from the 1980s through to the mid-1990s,” explains Roge. “This era included low-end CPLD and PLD devices that customers used for some kind of glue logic or some kind of programmable I/O, and that represented a $1 billion to $2 billion total addressable market, roughly speaking. From the mid-1990s to about 2017 was the FPGA 2.0 era, which we call the connectivity wave, when FPGAs were used to implement interfaces for networking or memory. There were so many flavors of Ethernet or DDR memory, and as FPGAs grew in density and performance, they were used for some of the complex functions and not just as glue logic. This expanded the TAM to about $5 billion.”

Depending on how you count it up, the major FPGA suppliers (Xilinx, Altera/Intel, Lattice Semiconductor, and Achronix) account for about $6.5 billion in sales, and they are, of course, chasing a much larger TAM. Just how much larger is a subject of debate, and that debate is one of the reasons we are hosting an event centered on FPGAs.

“Starting around 2017, we entered the FPGA 3.0 era, which is all about data acceleration. Now, FPGAs are not just used for glue logic or prototyping, but are becoming compute engines in their own right, and they are getting deployed in high volumes inside datacenters at Microsoft Azure and Amazon Web Services, too.” The TAM for FPGAs in this era is conservatively $10 billion, and possibly two or three times that, says Roge.

The question now is how to turn that TAM into actual sales, and that takes both hardware and software engineering.

“Whether you are building systems like HPC clusters or analytics for hyperscale datacenters, it all boils down to these three elements: very efficient compute, memory hierarchy and bandwidth, and efficient data transfer,” says Roge. “And we have taken a really careful look at how to optimize these things. We are using 7 nanometer processes from Taiwan Semiconductor Manufacturing Corp, so we will get the benefit of that and have a level playing field with Xilinx; Intel is at 10 nanometers with its current Agilex FPGAs, which is about the same. But our differentiation is with architecture and some of the features that we picked. We decided to focus on a few workloads, and we wanted to do the best job of delivering performance per watt per dollar. We really thought through some of these aspects to make it easier for architects to pick us over Xilinx or Intel. We were not as concerned with direct feature-to-feature comparisons of logic elements, DSP blocks, memory blocks, and so forth. Teraops is a marketing number; it really doesn’t mean anything. What matters is how you can accelerate the whole application. And because we have the right memory bandwidth and efficient machine learning computation, we can, for instance, deliver good end-to-end application performance for image recognition.”

We would say that memory bandwidth, I/O bandwidth, and teraops (or teraflops) are table stakes for any device, and that what you do with them, in whatever unique combination of hardware and software, is what ultimately matters. That is another way of saying that we think FPGAs will find a lasting but ever-changing home in the datacenter.
