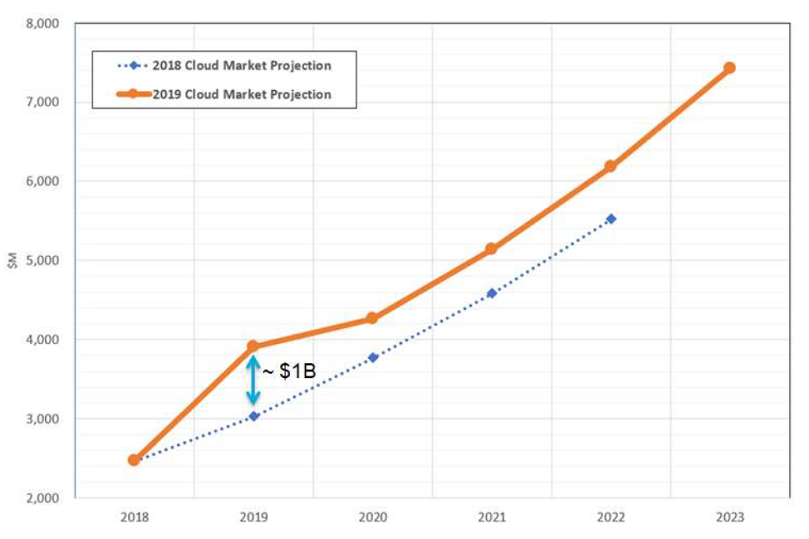

Hyperion Research has declared 2019 as the year that high performance computing in the cloud hit a “tipping point.” Cloud spending for HPC work is projected to jump from just under $2.5 billion in 2018 to approximately $4 billion by the end of 2019. That’s a 60 percent jump.

Hyperion’s five-year forecast would put HPC cloud revenue at $7.4 billion in 2023, reflecting a compound annual growth rate (CAGR) of 24.6 percent. The increase represents a significant departure from the market research firm’s previous forecast, which had HPC cloud spending pegged at just $3 billion in 2019.
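For readers who want to check the arithmetic, here is a minimal Python sketch of the two growth calculations. It assumes the five-year span runs from 2018 to 2023 and rounds the 2018 base to $2.5 billion (the article says "just under"), so the results are approximate; the function names are illustrative and not taken from Hyperion.

```python
def growth_rate(start: float, end: float) -> float:
    """Simple growth from start to end, as a fraction."""
    return end / start - 1.0


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate over the given number of years."""
    return (end / start) ** (1.0 / years) - 1.0


# Spending figures in billions of dollars, as quoted in the article.
# Assumption: the 2018 base is rounded up to 2.5 ("just under $2.5 billion").
spend_2018 = 2.5
spend_2019 = 4.0
spend_2023 = 7.4

print(f"2018 -> 2019 growth: {growth_rate(spend_2018, spend_2019):.1%}")
print(f"2018 -> 2023 CAGR:   {cagr(spend_2018, spend_2023, 5):.1%}")
```

With the rounded base, the CAGR comes out slightly below the reported 24.6 percent. That gap is consistent with the actual 2018 figure being a little under $2.5 billion, since a smaller starting value yields a higher growth rate.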

The 24.6 percent CAGR over the next five years is relatively consistent across application domains, although Hyperion is forecasting somewhat faster growth in areas like geosciences (27.3 percent), electronic design automation (26.0 percent), and biosciences (25.6 percent). The breakdown also shows relatively slower cloud growth (21.3 percent) for HPC performed in university/academic settings.

Actual cloud spending for these workloads is going to be somewhat higher than those projections indicate, however, since the Hyperion numbers include revenue only for HPC users that currently perform some of their work on-premises. Users without any in-house capability, who rely exclusively on cloud resources for their work, are not counted. Also, note that Hyperion is tracking spending in clouds composed of third-party hosted resources. These may include public clouds, private clouds, or hybrids of the two.

The big bump in cloud spending was not entirely unexpected. Hyperion has been closely tracking this trend for some time to determine how user behavior is shifting. One recent survey showed that for HPC users currently using the cloud in some fashion, 33 percent of their workloads now run there. According to Hyperion VP Steve Conway, that means approximately 20 percent of all HPC workloads are now cloud-based.

“That is a very big jump from 18 to 24 months ago, when that number stood at just under 10 percent,” said Conway. “And it had stayed at just under 10 percent for the better part of a decade.”

About 40 percent of these same users reported that all of their workloads could technically be run in the cloud. (Of course, that doesn’t mean they will.) The other 60 percent, however, say at least some of their workloads are not cloud-appropriate. There are a variety of reasons for this, including the fact that some applications are deemed too mission-critical or have special security requirements that cannot be met by external clouds. (Don’t expect to see the NNSA’s nuclear weapons simulations running on AWS anytime soon.)

But according to Conway, the biggest remaining obstacle has to do with data locality. He’s referring to applications that are dependent on large volumes of data that need to be in close proximity to supercomputing resources. In these cases, it’s impractical from a time and cost perspective to move that data to a cloud center. Conway said this is particularly important for global corporations that have large datasets at multiple locations around the world.

So why are we seeing this big shift of HPC workloads to the cloud?

One reason is that the HPC and enterprise markets are converging, and both seem to be doing so in the cloud. There’s a real dichotomy of application types here. Whereas traditional HPC is associated with batch-oriented upstream R&D, enterprise HPC is part of real-time business operations. In both cases though, the cloud has become an important platform. When Hyperion surveyed traditional and enterprise HPC users, it found that 19 percent of all users employed an external cloud and an even greater number, 29.3 percent, used more than one.

Perhaps the biggest thing fueling HPC cloud growth comes from the supply side. Cloud service providers are now offering the type of infrastructure that is much better suited to high performance computing than ever before. That includes things like faster, lower latency interconnects (Ethernet and InfiniBand), GPU acceleration, more bare metal offerings, and, in the case of Azure, even entire Cray supercomputers.

According to Conway, this is being driven by two major market trends. First, traditional HPC has become a sizable market on its own, and second, because of its intersection with AI, the opportunity has become even larger. In particular, high performance computing is seen as a driving force for a number of economically important AI use cases, including precision medicine, automated driving, business intelligence, fraud and anomaly detection, and affinity marketing, among others. “In every one of those use cases, high performance computing is indispensable at the forefront of research and development,” explained Conway.

AI, which encompasses machine and deep learning, is without a doubt the fastest growing cloud workload category. That’s according to both traditional cloud providers and system vendors offering their own cloud services. As a result, both now perceive high performance computing as key to their AI ambitions.

“Cloud service providers are now paying attention to HPC,” said Conway.
