The apocryphal Chinese curse – “May you live in interesting times” – certainly applies to the datacenter of the early 21st century. Never before have the demands on compute, storage, and networking been so high, and never before have we come up against so many limits of physics that we are not quite sure how to proceed. To be fair, the barriers separating the present from the future have often seemed insurmountable, and it always seems like the current time is worse.

Perhaps we are right this time and these really are barriers. Or perhaps we will get lucky again. But we would say we get clever and innovative, not lucky, because real luck, as baseball great Branch Rickey said, is the residue of design.

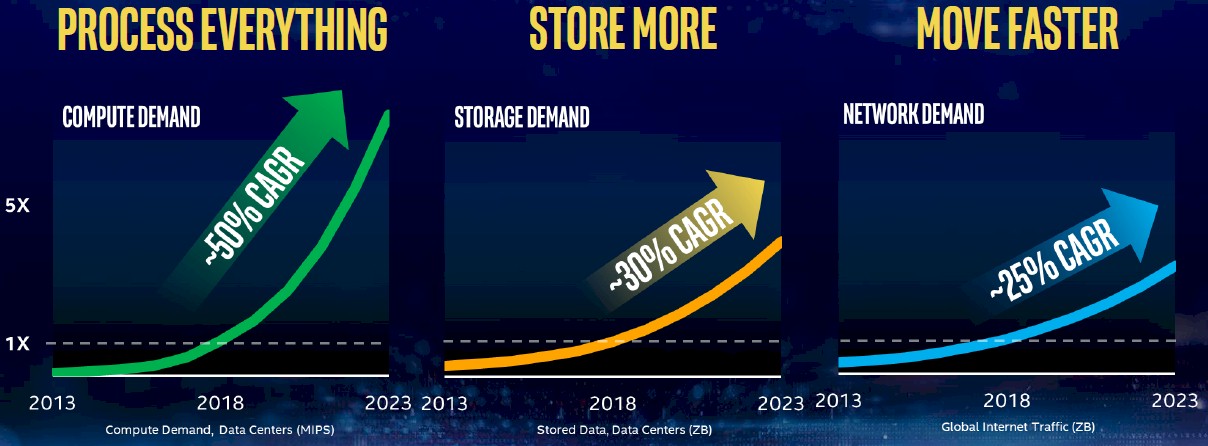

Regardless of the philosophical considerations, the fact remains that the massive data collection that organizations the world over are engaged in is a vicious cycle as well as a virtuous one. It stresses storage systems, the interconnects inside systems, and the networks linking those systems together. Workloads are diversifying and the types of compute are proliferating to try to optimize for them, and we are now at a point where the industry realizes we need a step-function improvement in I/O or we are going to hit a massive bottleneck that gums up the works.

We are gearing up to host our Next I/O Platform event in San Jose on September 24, and the keynote for the second part of the day, which is dedicated to datacenter networking, will be given by Mike Zeile, a vice president in Intel’s Data Center Group who leads the strategy office for the Connectivity Group. Zeile will explain how the chip maker, which drives datacenter compute and has increasing influence in networking and storage, sees the networking landscape and what it intends to do to create balanced distributed systems that can process the mountains of information we all keep creating in the hope of deriving value from them.

“The I/O requirements are hitting us everywhere,” says Zeile. “It’s node to node on distributed compute and storage, it’s within the node to memory, to storage, and out to the network. Every one of these links has hit a point where we need more than the incremental vectors we are used to getting every couple of years. Think about it. We went from parallel interfaces to 1 Gb/sec SERDES through 10 Gb/sec SERDES to 25 Gb/sec SERDES, and then we started layering on top of those I/Os multi-level signaling so you can get more data down the same lane. So now we have 50 Gb/sec with PAM-4 and then 100 Gb/sec with PAM-4. The bandwidth is going up, but we’re getting shorter and shorter distances, we’re getting higher and higher power, we’re getting a bigger footprint in silicon to support these super-sophisticated SERDES technologies. And we are getting to a place where you just can’t incrementally improve that electrical interface. It’s a challenge at every interface around and inside a platform.”
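The arithmetic behind multi-level signaling is simple enough to sketch. Assuming nominal symbol rates and ignoring the forward error correction and line-coding overhead that real links carry, a lane’s raw bit rate is its symbol (baud) rate times log2 of the number of signal levels. Two-level NRZ signaling carries one bit per symbol, while four-level PAM-4 carries two, which is how the same lane gets more data down it. The function name and the baud rates below are illustrative assumptions, not figures from Intel.

```python
import math


def lane_bit_rate_gbps(baud_gbd: float, levels: int) -> float:
    """Nominal lane bit rate in Gb/sec: symbol rate times bits per symbol.

    Illustrative only: real links add FEC and coding overhead, so actual
    signaling rates run slightly above these nominal figures.
    """
    bits_per_symbol = math.log2(levels)  # NRZ (2 levels) = 1 bit, PAM-4 = 2 bits
    return baud_gbd * bits_per_symbol


# Hypothetical nominal symbol rates for each generation of lane.
for name, baud, levels in [
    ("25G NRZ", 25, 2),
    ("50G PAM-4", 25, 4),
    ("100G PAM-4", 50, 4),
]:
    print(f"{name}: {lane_bit_rate_gbps(baud, levels):.0f} Gb/sec")
```

Note that the jump from 50 Gb/sec to 100 Gb/sec in this sketch comes from doubling the symbol rate, not from adding levels, and that is exactly where the distance, power, and silicon-footprint penalties Zeile describes start to bite.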

Intel is not new to networking, of course, and has developed its own technologies as well as acquiring many others. The company created its own Ethernet controllers for processors as well as its own interface cards for servers, and in the earlier part of this decade, Intel acquired Fulcrum Microsystems for its Ethernet switch ASICs, the InfiniBand business from QLogic, and the “Gemini” XT and “Aries” XC supercomputer interconnects from Cray. And just this year, Intel acquired switch ASIC startup Barefoot Networks and has, of course, done foundational work in silicon photonics and in system interconnects like Compute Express Link (CXL). The question is, what is Intel going to do now?

“It took a little bit of time and energy for everybody around Intel to really appreciate and understand the importance of connectivity to compute,” Zeile concedes. “To continue to scale compute and storage – and to do so at high performance – that’s a hard problem, and it is getting harder as datacenters get bigger and bigger. Just getting across the datacenter now, because of the limitations of the physics of electrical and optical signaling, is microseconds. This is one of the reasons why we are investing heavily in silicon photonics, which I would say is going to be an important discontinuity for the industry and for Intel. Photonics gives you the distance and the throughput per lane that you will need in the future, but it is an interesting challenge because when you stack up today’s version of photonics against the best case SERDES we have, the photonics technology is bigger, draws more power, and has higher cost per lane or interface. But we are getting to a place where we can’t get to the next step in the current SERDES technology – no one thinks we can go higher than 100 Gb/sec signaling – and we have to face that discontinuity and ride that inflection and realize that photonics is continuing to dramatically improve on all of these different vectors with each generation that is being developed.”
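To put a rough number on why crossing a datacenter takes microseconds, here is a minimal sketch of one-way propagation delay through optical fiber. It assumes a group index of roughly 1.47 for standard single-mode fiber (light travels at about two-thirds of its vacuum speed in glass) and ignores switch hops, SERDES, and queuing latency, all of which add to the total. The constant and function names are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # speed of light in vacuum
FIBER_GROUP_INDEX = 1.47  # assumed typical value for single-mode fiber


def fiber_delay_us(distance_m: float, group_index: float = FIBER_GROUP_INDEX) -> float:
    """One-way propagation delay through fiber, in microseconds.

    Covers time of flight in the glass only; switch and SERDES latency
    are not included.
    """
    return distance_m * group_index / SPEED_OF_LIGHT_M_PER_S * 1e6


# Hypothetical cable runs across a large datacenter.
for meters in (100, 500, 1000):
    print(f"{meters} m: {fiber_delay_us(meters):.2f} us")
```

At about 5 nanoseconds per meter, a few hundred meters of fiber alone costs a couple of microseconds each way before any switching is counted.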

Hardware designers always have faith in the future and their ability to engineer their way out of a tough spot, and that is a lot more useful than just giving up. And it also tends to work, so there is that. Right now, the focus of silicon photonics research and development is on getting the photonics down onto the processor or very close to it in the package.

“This is certainly the investment we are making and the bet we are taking,” says Zeile. “And to be fair, the networking industry is also reasonably aligned with this bet even if there is a debate about the timing. We’ve got 100 Gb/sec modules in the market today, which use 25 Gb/sec lanes, and we have 400 Gb/sec modules that use 100 Gb/sec lanes sampling to customers. Intel and the industry are investing heavily in proofs of concept with this next level of integration to the processor and the switch. Along the way we’ve had to do things like reinvent the modulators to get to a smaller footprint and higher performance. We had to deal with thermal management because you know lasers have historically been somewhat sensitive to thermals. But a key element of differentiation for Intel is that we have lasers in silicon already, while almost everybody else has an external laser that gets coupled in. So for us it’s rather straightforward to integrate lasers into a package, whether it is a chip or a multichip module. In any event, these are the sorts of things that we’re all investing in so that we can get to a breakthrough to make silicon photonics practical. We think we can get it into customer hands in a couple of years, while others would argue it’s more like five.”

Even if we can switch over to silicon photonics within the next few years to keep bandwidth growing, distances stretching, and latency dropping in interconnects, there is a funny thing about the current situation with compute, storage, and networking in the datacenter. Zeile cites a statistic we have seen from time to time in Intel presentations: over half of the world’s data was created in the past two years, and only 2 percent of that data has been analyzed. But the situation is a little more subtle than that, according to Zeile.

“Even if all of that data has not been analyzed, it still has to be moved and I think that point is lost sometimes,” says Zeile. “This data movement puts a tremendous amount of pressure on the networking infrastructure, starting at the edge and moving back up into the datacenter. The fact that all of it has to be moved gives us this crazy inflection of I/O requirements, and the irony is that while you may move the data, you may not be able to store it all. And so a lot of it ends up being on the floor.”

Once the network bottlenecks are dealt with, it looks like storage costs are going to have to come down to catch that data before it hits the floor. But that is a problem for a different division of Intel and the storage industry as a whole.
