Of the three pillars of the datacenter – compute, storage, and networking – the one that still consistently commands some margin and yet does not dominate the overall system budget is networking. While these elements affect each other, they are still largely standalone realms, with their own specialized devices and suppliers. And so it is important to track the technology trends in each of them.

Until fairly recently, box counters like IDC and Gartner had been pretty secretive about the data they have on the networking business. But IDC has gradually been giving a little more flavor than just saying that Cisco Systems dominates and others are trying to get a piece of the action. In fact, the stiff competition that Cisco has faced in datacenter networking over the past several years is the reason there are interesting statistics to look at. So we took some time to gather up and organize the past several years of publicly available IDC data on Ethernet switching and routing, so we could look at the trends that are not obvious from the quarterly reports it puts out.

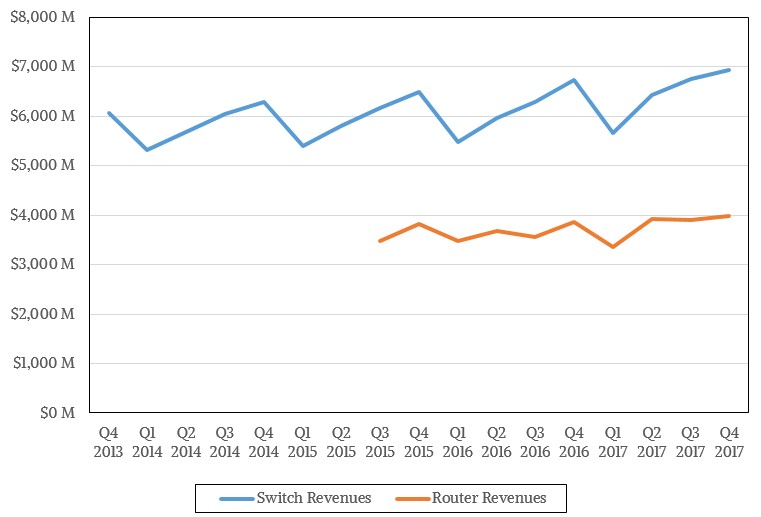

Let’s start with the latest statistics, in this case for the fourth quarter of 2017. Across all vendors worldwide, sales of Ethernet switches that operate at Layer 2 and Layer 3 of the network (which these days include some routing functions that blur the lines with routers) came to some $6.94 billion, up a modest 3.2 percent compared to the year-ago period. We were able to cast the switching revenues back to the fourth quarter of 2013 using the statements put out by IDC, and we paired them with the router sales figures, which do not go back as far. (IDC has such data, to be sure, but it isn’t free.) Here is what the trend looks like:

In the final quarter of last year, router sales to enterprises and service providers kissed $4 billion in revenues, up only 2.4 percent year on year. The relatively modest growth in sales of Ethernet switches and routers stands in stark contrast to the explosive growth in server sales during the same period, and it masks the huge amount of innovation going into network devices to reduce application latency on distributed systems and to provide the ever-increasing bandwidth they need. Just as a reminder, server revenues rose by 26.4 percent to $20.65 billion in Q4 2017 on a shipment rise of 10.8 percent to 2.84 million units, the best quarter in history for servers.
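The year-ago baselines behind these growth rates are not stated, but they can be backed out from the quoted figures. Here is a minimal Python sketch, assuming the rounded numbers above are exact; `year_ago` is a hypothetical helper, and its outputs are derived estimates, not published figures:

```python
# Back out year-ago (Q4 2016) values from the Q4 2017 figures and the
# year-on-year growth rates quoted in the text. Derived estimates only:
# the inputs are rounded, so the outputs are approximate.

def year_ago(current: float, growth_pct: float) -> float:
    """Prior-year value implied by a current value and its YoY growth in percent."""
    return current / (1 + growth_pct / 100)

# name -> (Q4 2017 value, YoY growth in percent)
FIGURES = {
    "Ethernet switch revenue ($B)": (6.94, 3.2),
    "Router revenue ($B)": (4.00, 2.4),
    "Server revenue ($B)": (20.65, 26.4),
    "Server shipments (M units)": (2.84, 10.8),
}

for name, (now, growth) in FIGURES.items():
    print(f"{name}: Q4 2016 ~{year_ago(now, growth):.2f}, Q4 2017 {now:.2f}")

# Average selling price per server, in dollars (revenue / units shipped)
asp_2017 = 20.65e9 / 2.84e6
asp_2016 = year_ago(20.65, 26.4) * 1e9 / (year_ago(2.84, 10.8) * 1e6)
print(f"Server ASP: ~${asp_2016:,.0f} in Q4 2016 vs ~${asp_2017:,.0f} in Q4 2017")
```

Under these assumptions, the average server price climbed about 14 percent (1.264 / 1.108), to roughly $7,270, which is how server revenue could grow so much faster than shipments.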

Routers are under pressure for a lot of reasons, but mainly because hyperscalers are building monstrous datacenters with extremely flat leaf/spine Clos networks that have enough pipes and bandwidth to cross-connect 100,000 servers. The routing functions they need are often in the Layer 3 switch devices, running as software functions, alleviating the need for so many routers. This has curtailed router sales, to be sure, but don’t get confused. Router sales are pretty steady even though Cisco, Juniper Networks, and Huawei Technologies are fighting hard for share. (Cisco dominates but is slipping, as is Juniper, as Huawei gets more aggressive and is having more success at home in China and abroad.)
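To see why 100,000 servers stretches even a flat Clos fabric, here is a back-of-the-envelope sketch in Python. It assumes the textbook model (identical k-port switches, non-blocking, with half of each lower-tier switch’s ports facing down), which is our simplification; real hyperscale fabrics mix switch radixes and oversubscribe links. The function names are hypothetical:

```python
# Server capacity of idealized, non-blocking Clos fabrics built from
# identical k-port switches. Simplified textbook model, not any specific
# hyperscaler design.

def leaf_spine_hosts(k: int) -> int:
    """Two tiers: k/2 spines with k ports each can serve k leaves,
    and each leaf gives k/2 ports to servers and k/2 to the spines."""
    return k * (k // 2)

def fat_tree_hosts(k: int) -> int:
    """Three tiers (k-ary fat tree): k pods of k/2 edge switches,
    each edge switch serving k/2 servers, for k**3 / 4 in total."""
    return k ** 3 // 4

for radix in (32, 64, 128):
    print(f"{radix}-port switches: {leaf_spine_hosts(radix):>7,} servers in two tiers, "
          f"{fat_tree_hosts(radix):>9,} in three")
```

With 64-port switches, the two-tier fabric stops at 2,048 servers while the three-tier version reaches 65,536; getting to 100,000 and beyond in this model takes higher-radix ASICs, a third tier, or oversubscribed links.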

With switching being so vital to distributed systems – it is what makes scale-out possible, after all – we are more concerned with it, and that is why we follow Ethernet, InfiniBand, and other interconnects so closely here at The Next Platform. Switching is not just in the datacenter, of course; it is also out on the campus and in small and medium businesses. It is the upper echelon of networking that we are most concerned with, and frankly, no one is deploying anything faster than 10 Gb/sec Ethernet on the campus or in SMBs.

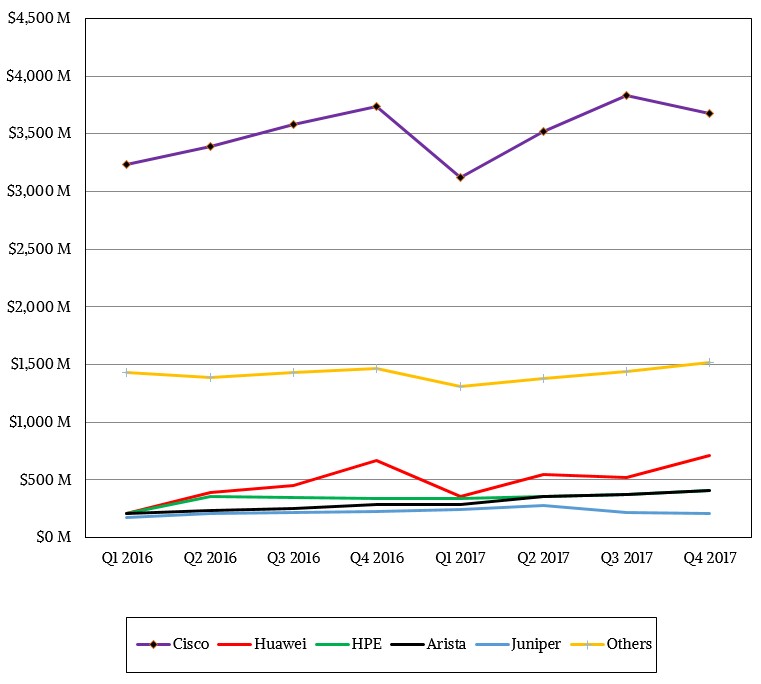

Here is a look at Ethernet switch sales by vendor:

Cisco has always bragged that it doesn’t play in markets where it doesn’t command a 65 percent market share and a 65 percent operating margin, but you won’t hear the new top brass at Cisco saying that. The reason is simple: That ain’t ever gonna happen again. When enterprises and service providers (telecommunications firms as well as other hosting and Internet service providers) were the main customers buying switches and routers, the margins were easy because there was not the kind of demanding buyer that the hyperscalers and the cloud builders represent these days. When networking went from 10 percent to 15 percent of the total cluster budget, then pushed up into 20 percent and on its way to 25 percent at the hyperscalers – reflective, by the way, of the overall split of compute, storage, and networking in the IT market back then – the hyperscalers put their foot down and demanded a faster pace of innovation for switch ASICs, copper and optical transceivers and cables, and network interface cards. They demanded more bandwidth and more consistent latency because this helps their cause, and 40 Gb/sec Ethernet with four lanes of 10 Gb/sec signaling and 100 Gb/sec Ethernet with four lanes of 25 Gb/sec signaling were largely created because these customers demanded it.

These and other innovations, starting with the 10 Gb/sec Ethernet ramp a decade ago, are why Huawei, Hewlett Packard Enterprise (if you include the Aruba Networks wireless Ethernet business, as IDC does), and Arista Networks, which were relatively small and in a three-way tie back in early 2016, have grown so fast: Huawei is now 3.5X bigger, and HPE and Arista are each twice as big. They are also one reason why Juniper is not two or three times larger.

And we think they will demand 400 Gb/sec Ethernet with four lanes of 100 Gb/sec signaling using PAM4 encoding, too, pushing the technology ahead. The beauty of this is that everyone – meaning the customers – benefits. But companies like Cisco can no longer sit back, slow the pace of innovation, and milk their technologies for an extra year or two to extract the maximum profits from them.
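The lane arithmetic behind these port speeds is just multiplication, with PAM4 carrying two bits per symbol where NRZ signaling carries one. Here is a small Python sketch, using nominal rates and ignoring line-coding and FEC overhead (which push real symbol rates somewhat higher); the 800 Gb/sec entry assumes eight 100 Gb/sec PAM4 lanes, which is one way that speed is built:

```python
# Port speed = lanes x per-lane rate. NRZ sends 1 bit per symbol and
# PAM4 sends 2, so PAM4 needs half the symbol rate for the same lane speed.
# Nominal figures only: encoding and FEC overhead are ignored.

from dataclasses import dataclass

BITS_PER_SYMBOL = {"NRZ": 1, "PAM4": 2}

@dataclass(frozen=True)
class PortType:
    name: str
    lanes: int
    lane_gbps: int
    modulation: str

    @property
    def port_gbps(self) -> int:
        """Aggregate port bandwidth in Gb/s."""
        return self.lanes * self.lane_gbps

    @property
    def lane_gbaud(self) -> float:
        """Nominal symbol rate per lane in gigabaud."""
        return self.lane_gbps / BITS_PER_SYMBOL[self.modulation]

PORTS = [
    PortType("40 Gb/sec", 4, 10, "NRZ"),
    PortType("100 Gb/sec", 4, 25, "NRZ"),
    PortType("400 Gb/sec", 4, 100, "PAM4"),
    PortType("800 Gb/sec", 8, 100, "PAM4"),
]

for p in PORTS:
    print(f"{p.name}: {p.lanes} x {p.lane_gbps} Gb/s {p.modulation}, "
          f"~{p.lane_gbaud:.0f} GBd per lane")
```

The point of PAM4 shows up in the last column: a 100 Gb/sec lane runs at a nominal 50 GBd, only twice the symbol rate of a 25 Gb/sec NRZ lane despite carrying four times the bits.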

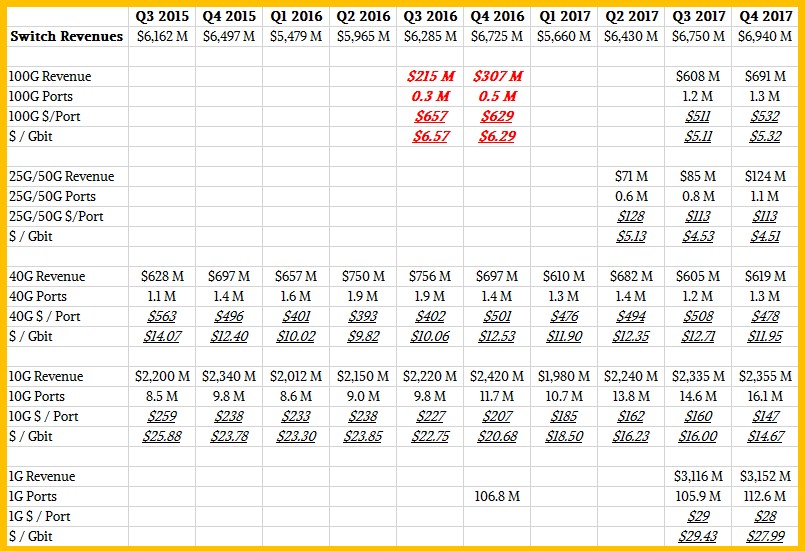

The benefits of this faster pace of change are obvious in the numbers if you do a little math on them, as we have done here:

If you want to see the larger version of this table that goes back to Q4 2013, click here.

Obviously, there are gaps in this data, and intentionally so, because IDC has to make a living, too. The ramp of 100 Gb/sec Ethernet is finally under way, and in the final quarter of last year it represented almost a tenth of the revenues even though it comprised only about one point of share of the ports. That just goes to show you how many 1 Gb/sec ports (and presumably IDC is lumping in the 2.5 Gb/sec ports used in campus networks here) are still sold in the world. Precision in the port speed data for these low-end switches is not important for distributed computing anyway. The point that needs to be made is that nearly half of the revenues in the Ethernet switch market are for such low-end gear. We only care about the top half. But we use the bottom half as the baseline for reckoning price/performance improvements, because two decades ago, Gigabit Ethernet was the hot stuff.
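The gap between revenue share and port share says something about price per port. Here is a short Python sketch plugging in the approximate 100 Gb/sec shares quoted above, taking "almost a tenth" as 10 percent and "about one point" as 1 percent (our rounding); the helper name is hypothetical:

```python
# If a port speed takes revenue_share of switch revenue but only port_share
# of ports shipped, then
#   (R_i / R) / (N_i / N) = (R_i / N_i) / (R / N),
# i.e. its average price per port relative to the all-speed average.

def relative_port_price(revenue_share: float, port_share: float) -> float:
    """Average price of one port type's ports, as a multiple of the overall average."""
    if not 0 < port_share <= 1:
        raise ValueError("port_share must be in (0, 1]")
    return revenue_share / port_share

ratio = relative_port_price(revenue_share=0.10, port_share=0.01)
print(f"100 Gb/sec ports sell for roughly {ratio:.0f}X the average port price")
```

Because the shares are rounded, the factor of ten is only indicative, but it shows how heavily the cheap, low-speed ports weigh on the market average.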

Even at today’s prices, and after decades of pushing the cost of this technology down, the cost of providing a gigabit of bandwidth per port on Gigabit Ethernet switches is hovering at just under $30. Back in 2013, after considerable efforts to drive down the cost of a port and of each bit it carries, using faster lanes driven by Moore’s Law, a 10 Gb/sec port cost on the order of $400, so a gigabit of bandwidth on that port cost $40. That has come down steadily over the past several years, to more like $15 per gigabit per port as 2017 came to a close. With 40 Gb/sec devices, which ganged up four 10 Gb/sec lanes, improving performance and thermal efficiency and driving down costs, the cost per gigabit per port was half that of 10 Gb/sec devices by 2015, but the gap between the two has since narrowed because there is limited innovation in 10 Gb/sec or 40 Gb/sec Ethernet these days. So 40 Gb/sec is hovering around $12 per gigabit per port, or around $500 per port, about 3X the cost of a 10 Gb/sec port.
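The per-gigabit figures here are simply port price divided by port speed. Here is a minimal Python sketch using the approximate prices above; the roughly $150 price for a 10 Gb/sec port in late 2017 is our own back-calculation from the $15 per gigabit figure, not a number IDC reports:

```python
# Cost per gigabit of port bandwidth = price per port / port speed in Gb/s.
# Prices are the approximate figures from the text, not exact IDC values.

def cost_per_gb(port_price_usd: float, port_gbps: float) -> float:
    """Dollars per Gb/s of bandwidth on one switch port."""
    return port_price_usd / port_gbps

def implied_port_price(usd_per_gb: float, port_gbps: float) -> float:
    """Inverse: the per-port price implied by a dollars-per-gigabit figure."""
    return usd_per_gb * port_gbps

ten_g_2013 = cost_per_gb(400, 10)              # ~$400 port in 2013
ten_g_2017_port = implied_port_price(15, 10)   # from ~$15 per gigabit in late 2017
forty_g_2017 = cost_per_gb(500, 40)            # ~$500 port in late 2017
forty_vs_ten = 500 / ten_g_2017_port           # per-port price ratio

print(f"10 Gb/sec, 2013: ${ten_g_2013:.2f} per gigabit")
print(f"10 Gb/sec, 2017: ~${ten_g_2017_port:.0f} per port")
print(f"40 Gb/sec, 2017: ${forty_g_2017:.2f} per gigabit, "
      f"{forty_vs_ten:.1f}X a 10 Gb/sec port")
```

The $12.50 result is the "around $12" per gigabit for 40 Gb/sec, and the 3.3X per-port ratio is the "about 3X".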

Now look at the limited data – only three quarters – that we can see for the 25 Gb/sec Ethernet standard, which uses a single lane of 25 Gb/sec signaling, or two lanes for 50 Gb/sec of bandwidth per port. The number of ports is rising quickly, faster than revenues, and the cost per port is approaching $100, putting the cost of a gigabit of bandwidth on a port below $5. That is a factor of 2.5X lower than for 40 Gb/sec Ethernet, just as the hyperscalers and cloud builders demanded when the 25G Ethernet Consortium was founded back in 2014. And thanks to the innovation with 25G, which puts four lanes together to make a 100 Gb/sec port, the cost of a 100 Gb/sec port is approaching that of a 40 Gb/sec port, and its cost per gigabit per port is also closing in on $5.
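The same division applied to the newer speeds shows where the 2.5X comes from. Here is a Python sketch under stated assumptions: a 25 Gb/sec port at about $100, and a 100 Gb/sec port at about $500, which is our stand-in for "approaching that of a 40 Gb/sec port":

```python
# Compare cost per gigabit of newer port speeds against 40 Gb/sec Ethernet.
# Port prices are approximations from the text; the 100 Gb/sec price of
# ~$500 is an assumption standing in for "approaching a 40 Gb/sec port".

def cost_per_gb(port_price_usd: float, port_gbps: float) -> float:
    """Dollars per Gb/s of bandwidth on one switch port."""
    return port_price_usd / port_gbps

forty_g = cost_per_gb(500, 40)   # ~$12.50 per gigabit

newer = {
    "25 Gb/sec": cost_per_gb(100, 25),
    "100 Gb/sec": cost_per_gb(500, 100),
}

for name, usd in newer.items():
    print(f"{name}: ${usd:.2f} per gigabit, {forty_g / usd:.1f}X lower than 40 Gb/sec")
```

At $5 per gigabit, the 100 Gb/sec case, the advantage over 40 Gb/sec works out to exactly 2.5X; at the $4 per gigabit implied by a $100 port, the 25 Gb/sec gap is closer to 3X.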

Again, this was absolutely intentional driving of the technology by the hyperscalers and cloud builders, and everyone else is going to benefit from it, either directly by using their services or indirectly by being able to buy similar gear. But, of course, not with anywhere near the same volume discounts.
