It has taken nearly four years for the low end, workhorse machines in IBM’s Power Systems line to be updated, and the long awaited Power9 processors and the shiny new “ZZ” systems have been unveiled. We have learned quite a bit about these machines, many of which are not really intended for the kinds of IT organizations that The Next Platform is focused on. But several of the machines are aimed at large enterprises, service providers, and even cloud builders who want something with a little more oomph on a lot of fronts than an X86 server can deliver in the same form factor.

You have to pay for everything in this world, and the ZZ systems, code-named after the rock band ZZ Top if you were wondering, pay for it with the amount of heat they dissipate when they are running. But IBM’s Power chips have always run a little hotter than the Xeon and Opteron competition, and they did so because they were crammed with a lot more features and, generally speaking, delivered a lot more memory and I/O bandwidth and therefore did more work for the heat generated and the higher cost.

With the Power9 machines, IBM wants to take on Intel’s hegemony in the datacenter, and that means attacking the midrange and high end of the “Skylake” Xeon SP lineup and also taking on the new “Naples” Epyc processors from AMD for certain jobs. We will see how well or poorly IBM does at this when some performance benchmarks start coming out around the end of February and on into March, when IBM is hosting its Think 2018 event in Las Vegas and is making its new Power Systems iron the star of the show. IBM has done a lot to make Power Systems more mainstream, including lowering prices for memory, disk, flash, and I/O adapters and, importantly, moving to unbuffered, industry standard DDR4 main memory. IBM is also extending the Power architecture with features not seen on Xeon, Epyc, or ARM architectures, including the first PCI-Express 4.0 peripheral controllers, which interface with PCI-Express switches to offer legacy PCI-Express 3.0 support in some of the ZZ systems. IBM is also offering its “Bluelink” 25 Gb/sec ports (which are rejiggered to provide NVLinks out to GPUs in certain machines, such as the “Newell” Power AC922 that was announced in December) for very fast links to peripherals and supporting its OpenCAPI protocol. The prior generations of coherence protocol, CAPI 1.0 and CAPI 2.0, run atop PCI-Express networking. All of them offer a means of providing memory coherence between the Power9 chip’s caches and main memory and the memory or storage-class memory on external devices, such as GPUs and, when networked correctly, NVM-Express flash.

We are not going to review all of the features of the Power9 chip, which we went into great detail about back in August 2016 when Big Blue revealed them. We talked generally about the Power9 ZZ machines earlier this week, and gave a sense of the rest of the rollout that will occur this year to complete the Power9 line. We already detailed the Power AC922 and its initial benchmarks on HPC and AI workloads. In this story we are going to focus on the feeds and speeds of the Power Systems ZZ iron, looking at the guts of the systems and what IBM is charging for them. Eventually, when more information is available, we will be able to do what we have been wanting to do for a long time: see how they stack up to the Xeon and Epyc iron for clusters running modern software for various kinds of data processing and storage.

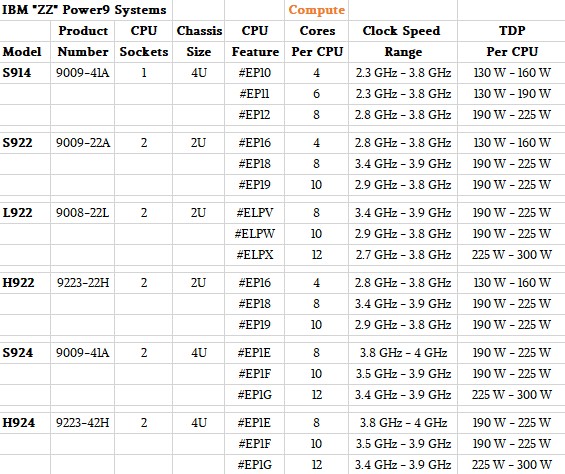

While IBM has launched six distinct flavors of the ZZ systems, in reality there are only two physical machines, with some variations in packaging and pricing to distinguish them.

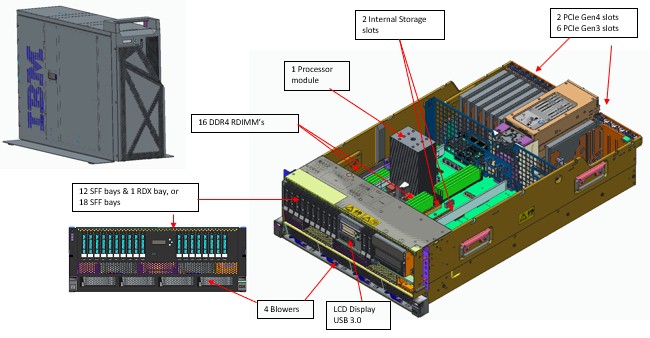

The entry machine in the ZZ line is the Power S914, which is really aimed at small and midrange IBM i and AIX shops that use it to run their core databases and applications and that, frankly, do not have huge performance requirements for their transaction processing and analytical workloads, at least not by comparison to large enterprises or hyperscalers or HPC centers. Here is the block diagram of this single-socket Power S914 machine:

As you can see, the Power S914 has one Power9 processor and sixteen DDR4 memory slots hanging off of the processor. The chip has four PCI-Express 4.0 controllers, two of which are used to implement PCI-Express 4.0 slots with two x16 connections and two others that are used to link to PCI-Express 3.0 switches on the board that in turn implement a slew of legacy PCI-Express 3.0 slots. There are also two PCI-Express 3.0 x8 storage controller slots that hang off this pair of switches, and they can have RAID controllers or two M.2 form factor NVM-Express flash boot drives plugged into them. The I/O backplane can be split for redundancy and further RAID 10 protection across the split (a pair of RAID 5 arrays mirrored, basically). Eventually, perhaps with the Power9+ and maybe with the Power10 chips, it will no longer be necessary to have this legacy PCI-Express 3.0 support and the switches won’t be necessary. We shall see. PCI-Express 5.0 is due sometime in 2019, so by then, PCI-Express 4.0 might be the legacy.

The Power S914 machine comes in a 4U form factor, as the name suggests: that’s S for scale out, 9 for Power9, 1 for one socket, and 4 for a 4U chassis. That chassis can be mounted in a rack or tipped up on its side and put into a tower case, like this:

As you can see, there is a space on the right hand front of the system board where there are two internal storage slots, and this is where the Power S924, and its related Power H924 variant designed to run SAP HANA in-memory databases and their applications, puts a second processor. The extra space in the back is used for the extra peripherals that hang off of that second Power9 processor. Take a look and see:

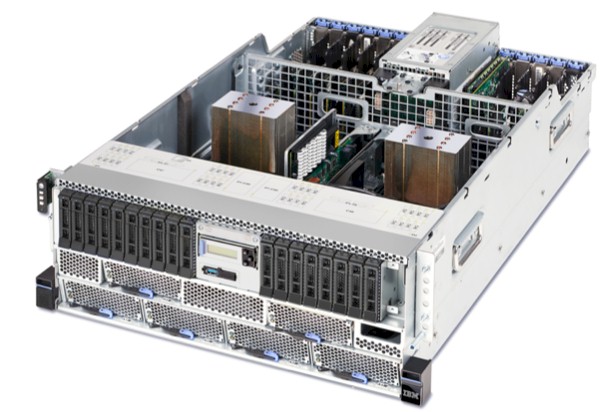

And this is what the Power S924 actually looks like implemented in steel with its covers off:

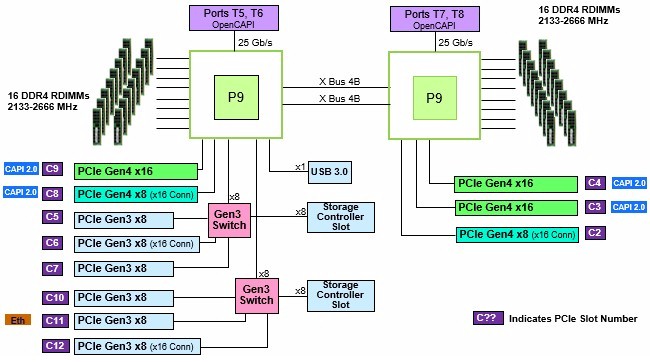

For the sake of completeness, here is the block diagram of the system board in the Power S924:

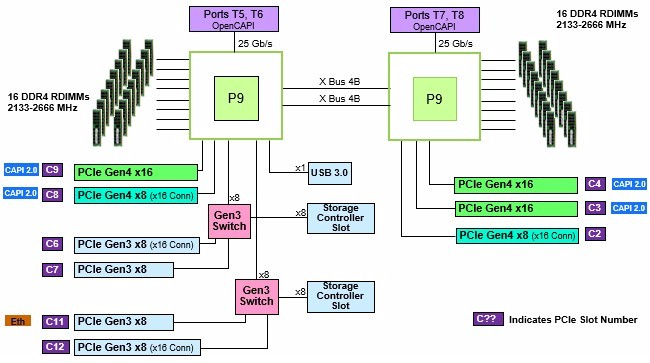

On the two-socket Power S924, and in the Power S922 and its H922 and L922 variants we will talk about in a second, the two sockets are linked to each other in a glueless fashion using NUMA interconnects, in this case based on a pair of X Bus links that are running at 16 Gb/sec. Yes, you read that right. The buses for many of the external interconnects used for OpenCAPI and NVLink are running faster than the NUMA interconnects between the processors.

That leaves the more dense 2U version of the Power9 ZZ system, which is implemented as the Power S922 for AIX, Linux, and IBM i; the Power L922 for Linux-only machines; and the Power H922 for SAP HANA nodes that can, if needed, support some IBM i and AIX workloads so long as they do not take up more than 25 percent of the aggregate computing capacity.
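For readers who want to keep the model names straight, here is a minimal Python sketch of the naming scheme as this story describes it: a letter for the variant, then a digit each for the Power generation, the socket count, and the chassis height in rack units. The `decode_model` function and the `Model` tuple are our own illustrative names, not anything IBM ships, and applying the same digit pattern to the L and H variants is an inference from the models named here rather than an official rule.

```python
import re
from typing import NamedTuple

# Variant letters as described in this article; the mapping is illustrative.
VARIANTS = {
    "S": "scale out",
    "L": "Linux-only",
    "H": "SAP HANA",
}


class Model(NamedTuple):
    variant: str      # meaning of the leading letter
    generation: int   # 9 for Power9
    sockets: int      # number of processor sockets
    rack_units: int   # chassis height in U


def decode_model(name: str) -> Model:
    """Decode a designation such as 'Power S914' or 'L922' (hypothetical helper)."""
    match = re.fullmatch(r"(?:Power\s+)?([SLH])(\d)(\d)(\d)", name.strip())
    if match is None:
        raise ValueError(f"unrecognized model designation: {name!r}")
    letter, generation, sockets, rack_units = match.groups()
    return Model(VARIANTS[letter], int(generation), int(sockets), int(rack_units))


if __name__ == "__main__":
    for designation in ("Power S914", "Power S924", "Power H922", "L922"):
        print(designation, "->", decode_model(designation))
```

Under those assumptions, the Power S914 decodes as a one-socket, 4U scale-out machine and the Power L922 as a two-socket, 2U Linux-only machine, which matches the descriptions above.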

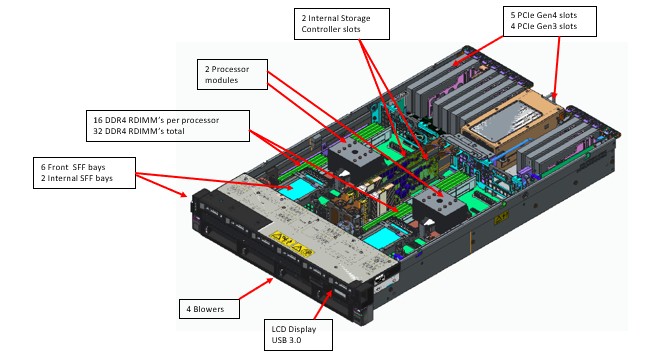

Here is the mechanical drawing of the Power S922 machine and its derivatives:

And here is the system board block diagram for these machines:

If you compare the Power S924 and Power S922 machines, you will see that the big difference is form factor: by squeezing down from 4U to 2U, IBM had to cut back on the local storage and also on the PCI-Express slots. Specifically, two of the legacy PCI-Express 3.0 x8 slots are sacrificed. That is not much to give up for a form factor that takes up a lot less space. The smaller machine has only eight 2.5-inch (Small Form Factor, or SFF) peripheral bays, compared to a maximum of 18 for the bigger machine. These are the main differences.

For those of you not familiar with IBM’s product naming conventions, a machine has a model designation (like Power S924) and a product number (like 9009-41A) as well as feature codes for each and every possible thing that can be part of that system, including memory sticks, disk and flash drives, I/O adapters of every kind, cables, and anything else. The table below shows the models, product numbers, and processor feature cards with the salient characteristics of the Power9 chips in each feature card. IBM is offering three different Power9 processor feature cards for each of the six machines. We have shown their base and top clock frequencies, which IBM gives as the typical ranges of their operation using dynamic clock frequency scaling. We have taken our best guess at matching the thermal design points IBM has for various Power9 processors to the core counts and clock speeds available. (They are good guesses, mind you.)

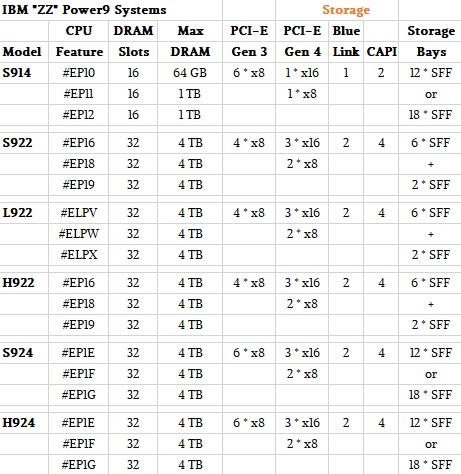

The next table shows the memory, peripheral expansion, and in-chassis storage options for each machine; IBM allows for storage bays to be added to the processor complex over the PCI-Express buses for further expansion beyond this.

One thing is not obvious from the table. While IBM is offering DDR4 memory speeds of 2.13 GHz, 2.4 GHz, and 2.67 GHz, and in capacities of 16 GB, 32 GB, 64 GB, and 128 GB, you cannot just pick any capacity and any speed and put them in these machines. On any machine that has memory slots 10 through 16 populated, the only thing you can do is run 2.13 GHz memory, regardless of the capacity of the stick chosen. By doing this, you get the maximum 170 GB/sec of peak memory bandwidth. If you want to run faster memory, then it can only be used in machines with eight or fewer memory slots populated. And the fastest 2.67 GHz memory is only available for 16 GB sticks. Yes, this is a little weird. And since there are only four different memory cards but eight different speed/capacity combinations, it looks to us like IBM is not shipping different memory speeds but rather gearing down 2.67 GHz or 2.4 GHz memory sticks to 2.13 GHz speeds when the memory slots are more fully populated.

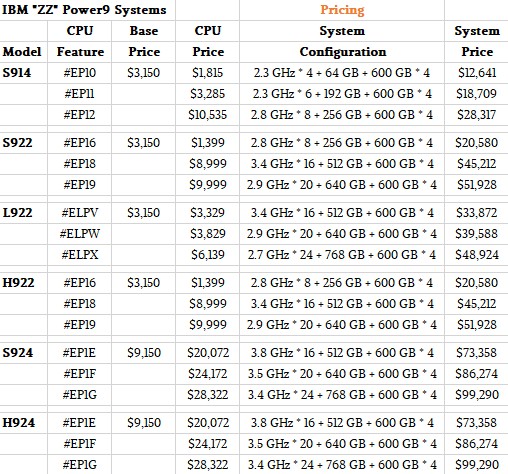

Those are the basic feeds and speeds of these machines. That leaves the last, and perhaps most important, factor in the long run, and that is pricing. The way IBM’s pricing works, there is a price for the base system, and then a price for the processor feature cards. In the past, the processor feature card and memory capacity on it had two distinct prices, and then you had to activate cores and 1 GB memory chunks separately for an additional fee. With the Power9 machines, you buy the processor card and the memory and it is activated entirely. As for memory pricing, IBM is charging $619 for the 16 GB sticks; $1,179 for the 32 GB sticks; $2,699 for the 64 GB sticks; and $9,880 for the 128 GB sticks.
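To put those memory prices on a common footing, here is a small Python sketch that divides each stick price quoted above by its capacity. The `STICK_PRICES` table and the `price_per_gb` helper are our own illustrative names; the only inputs are the four list prices cited in this story.

```python
# List prices per DDR4 memory stick as quoted in this article, in US dollars.
# Keys are stick capacities in GB.
STICK_PRICES = {16: 619, 32: 1179, 64: 2699, 128: 9880}


def price_per_gb(prices):
    """Return a dict mapping stick capacity (GB) to dollars per GB."""
    return {capacity: price / capacity for capacity, price in prices.items()}


if __name__ == "__main__":
    for capacity, per_gb in price_per_gb(STICK_PRICES).items():
        print(f"{capacity:>3} GB stick: ${per_gb:.2f} per GB")
```

By that arithmetic, the 32 GB stick is the cheapest per gigabyte at a little under $37, the 16 GB and 64 GB sticks land at roughly $39 and $42, and the 128 GB stick costs about $77 per gigabyte, or roughly twice the rate of the 32 GB stick.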

In the table below, the base chassis price is shown, and next to it is shown the cost of each processor card. The system configuration pricing shows the cost of adding 32 GB per core to the system plus four 600 GB SAS disk drives. A number of needed features, such as cables and backplane options, are not included in this basic system configuration price; we are trying to give a sense of what the core compute, memory, and storage cost for these machines. Operating systems are not included.

Coming up next, we will do our best to make comparisons to the Xeon and Epyc server lines to see how these Power9 machines stack up, and ponder what the future “Boston” two-socket and “Zeppelin” four-socket Power9 machines might hold in terms of competition.
