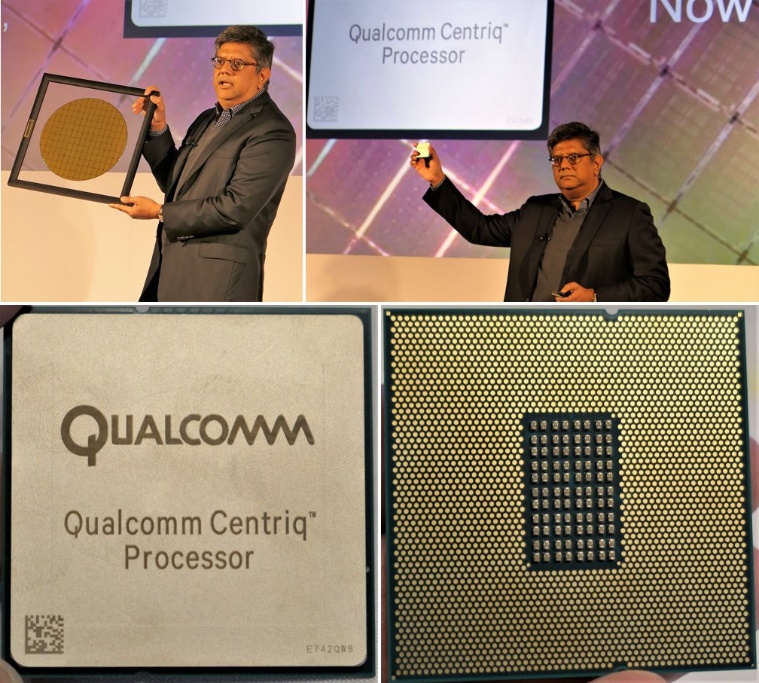

Qualcomm launched its Centriq server system-on-chip (SoC) a few weeks ago. The event filled in Centriq’s tech specs and pricing, and disclosed a wide range of ecosystem partners and customers. I wrote about Samsung’s process and customer testimonials for Centriq elsewhere.

Although Qualcomm was launching its Centriq 2400 processor, it chose to focus the launch event on ecosystem development, with a strong emphasis on software workloads and partnerships, rather than on a roster of hardware partners building systems from its reference design. Because so much of today’s cloud workload mix is based on runtime environments (containers, interpreted languages, and the like), launching a new chip demands far more ecosystem support than just operating systems and compilers.

We believe that Qualcomm’s strategy of focusing its ecosystem efforts on high-value workloads and applications is sound. More 64-bit Arm datacenter workloads were demonstrated at the Centriq 2400 launch than we have ever seen gathered in one place.

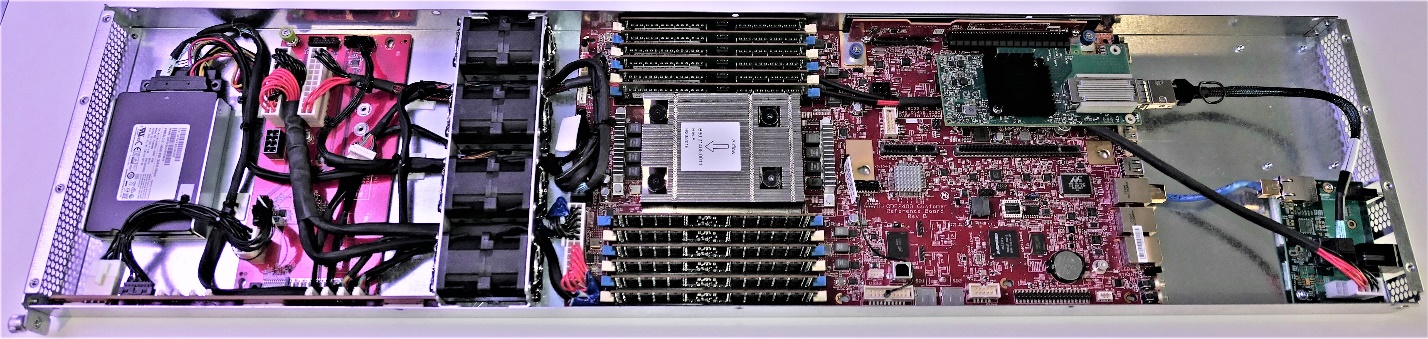

To be fair, there was also a lot of hardware at Qualcomm’s Centriq launch event. All the demos were powered by onsite servers based on Qualcomm’s Centriq reference design or cloud-based servers hosted primarily by Packet.net. We will concentrate here on the technical readiness of systems, software, and solutions that Qualcomm hosted at its launch event and at the SC17 supercomputing conference the following week.

Reference Motherboard And Platform Enable System Ecosystem

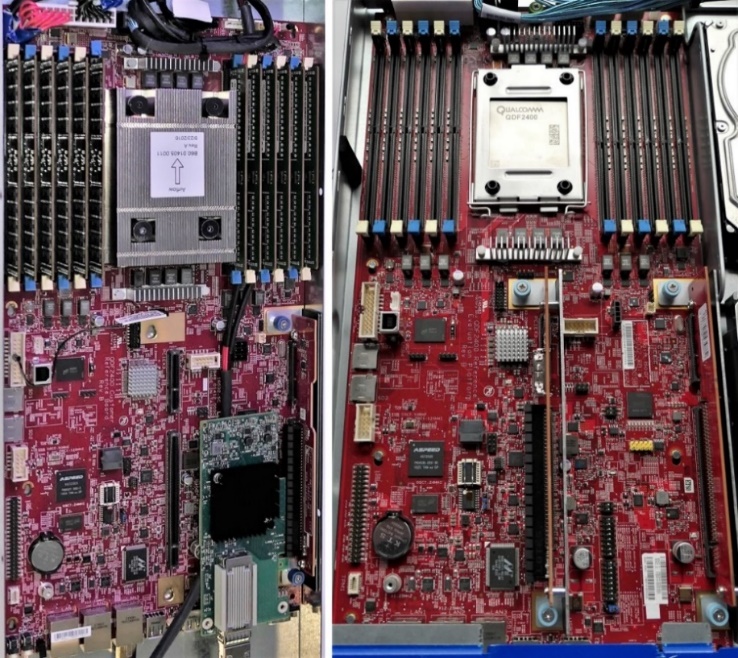

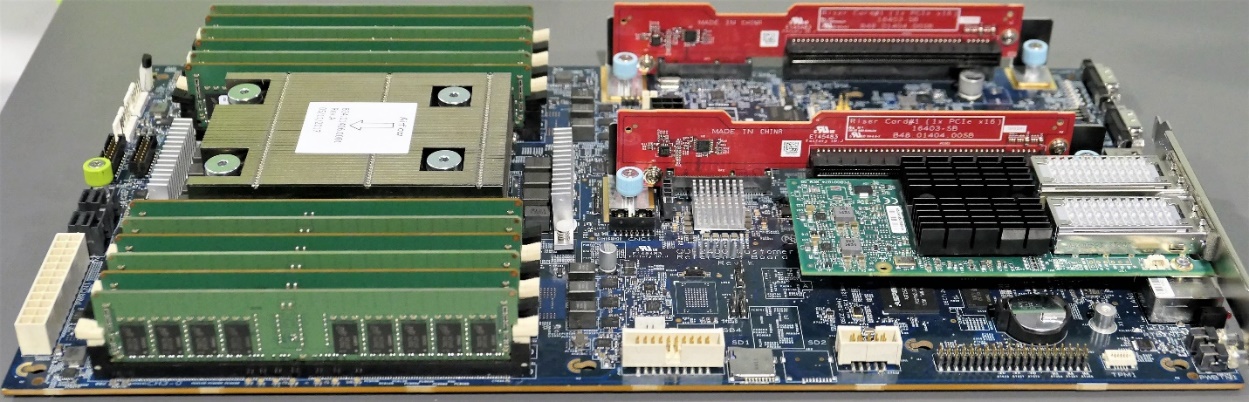

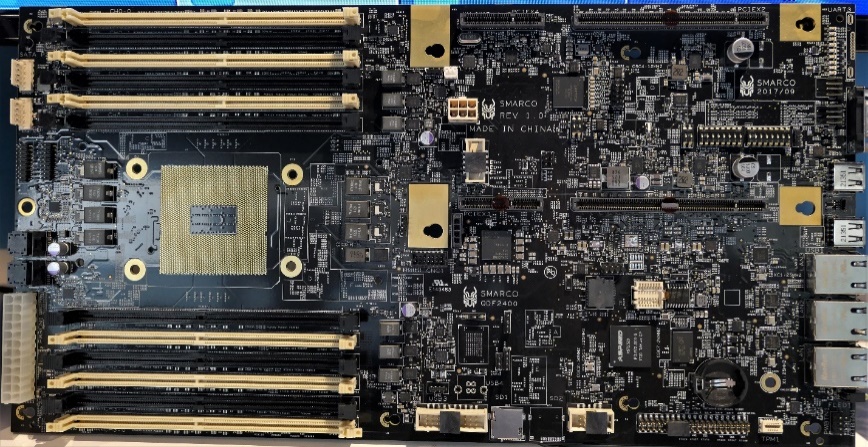

Qualcomm’s Centriq reference motherboard is a half-width board designed to fit into a wide range of 1U bent-metal sleds. The reference design is also built to comply with the Open Compute Project (OCP) Project Olympus spec contributed by Microsoft Azure.

Qualcomm’s reference platform ships the reference motherboard in a full-width chassis so that partners and customers can evaluate dual-motherboard configurations as well as storage-rich configurations.

We saw several versions of Qualcomm’s reference platform, but only one unique third-party design.

Also, Yuval Bachar, president and chairman of the board of the Open19 Foundation, chose Qualcomm’s Centriq launch to show the first Open19 sled that we have seen publicly. We did see one other, competing Open19 compute sled at SC17, but it was an ODM design shown with little fanfare. We imagine more will pop up in the coming months.

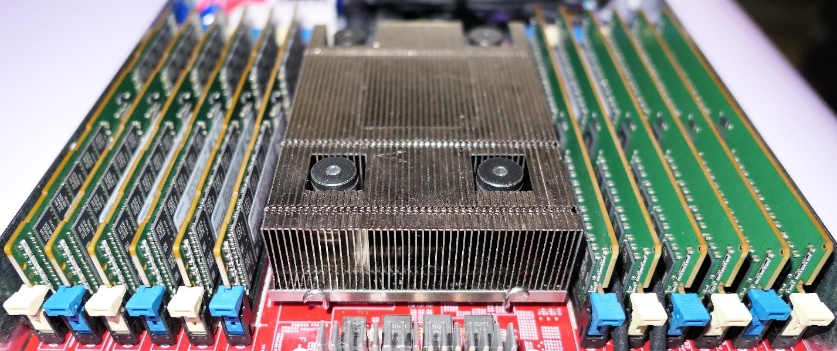

One of the most important aspects of Centriq seems mundane: with 8W idle power consumption and 120W peak consumption, Centriq 2400 does not require liquid cooling to achieve high compute densities. We saw production air-cooled Centriq 2400 heat sinks both at the launch event and at SC17.

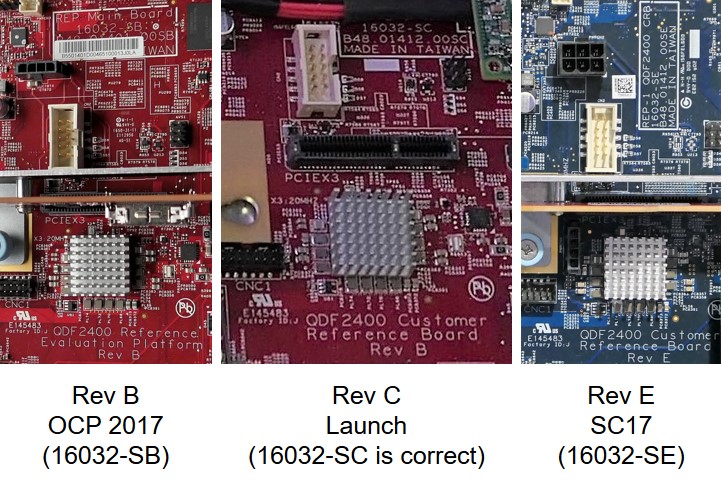

Qualcomm showed Rev B of its reference motherboard at OCP Summit in March. We saw a Rev C board at launch, and Qualcomm showed a Rev E board in Arm’s SC17 booth. A board designer forgot to change the text to Rev C in the photo below, but the board number shows that it is, indeed, Rev C.

Board revisions are important to show progress toward production-ready systems. Note the change in board color from red (Rev B and C) to blue (Rev E). The color change indicates that customers will evaluate and possibly deploy Rev E.

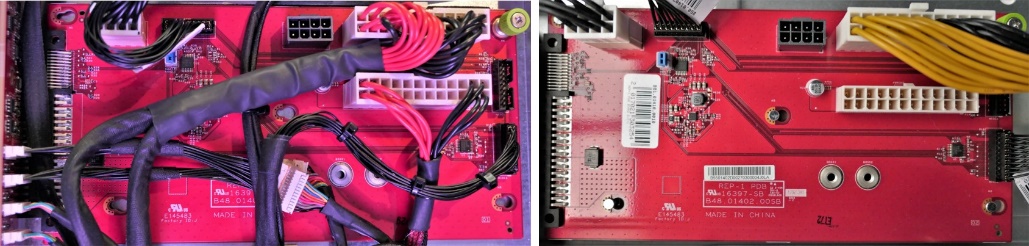

The reference platform includes a flexible power distribution board that we spotted in a couple of Centriq reference designs at launch and SC17.

Risers Enable Add-In Ecosystem

Qualcomm designed its Centriq reference platform to host OCP Olympus-compatible risers, which give the Olympus-compatible Centriq motherboard a lot of configuration options in a 1U chassis.

The 1U tall PCI-Express x16 risers are designed to support a wide variety of PCI-Express add-in cards in several physical configurations within a 1U chassis. None of the other three Project Olympus motherboard specs define as many riser types as the Centriq 2400 spec.

- Type 1: Connects a single PCI-Express x16 electrical and mechanical add-in card above the motherboard with the primary component side facing up, away from the motherboard.

- Type 2: Connects a single PCI-Express x8 electrical and mechanical add-in card above the motherboard with the primary component side facing up, away from the motherboard, and adds a single M.2 slot on the opposite side of the riser from the PCI-Express x8 connector.

- Type 3: Connects a single PCI-Express x8 electrical with x16 mechanical add-in card above the motherboard with the primary component side facing up, away from the motherboard. In place of type 2’s M.2 slot, this riser supports a x8 OcuLink cable connector to extend PCI-Express via cable within the chassis.

- Type 4: Connects two PCI-Express x8 electrical with x16 mechanical add-in cards, one on each side of the riser, both facing up, away from the motherboard.

- Type 5: Connects a single PCI-Express x16 electrical and mechanical add-in card away from the motherboard with the primary component side facing down, leaving maximum air volume between the chassis bottom and the add-in board. This riser is specifically designed to host Qualcomm’s “MegaCard” NVM-Express storage mezzanine. The riser includes a PCI-Express x1 control line to the PCI-Express switch chip on the MegaCard.

- Type 6: Connects a single PCI-Express x16 electrical and mechanical add-in card away from the motherboard with the primary component side facing down, leaving maximum chassis volume to house a full-sized, full-power (300 watt) GPU or FPGA accelerator board next to the Centriq motherboard.
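The six riser types differ along a few dimensions (lane widths per card slot, which way the card faces, and extra connectors), so they can be summarized as a small data structure. The sketch below is a hypothetical encoding, not anything taken from the Project Olympus specification: the `Riser` class, its field names, and the `check` rule are illustrative assumptions, and the M.2 slot’s lane width is left out because the article does not give it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Riser:
    """Hypothetical summary of one Centriq Olympus riser type (not from the spec)."""
    riser_type: int
    slots: tuple        # one (electrical_lanes, mechanical_lanes) pair per add-in card slot
    faces: str          # which way the card's primary component side faces: "up" or "down"
    extras: tuple = ()  # other connectors or purposes mentioned in the article


RISERS = (
    Riser(1, ((16, 16),), "up"),
    Riser(2, ((8, 8),), "up", ("M.2 slot",)),
    Riser(3, ((8, 16),), "up", ("x8 OcuLink cable connector",)),
    Riser(4, ((8, 16), (8, 16)), "up"),
    Riser(5, ((16, 16),), "down", ("x1 control line to MegaCard switch",)),
    Riser(6, ((16, 16),), "down", ("space for a 300 W GPU or FPGA board",)),
)


def card_lanes(riser: Riser) -> int:
    """Total electrical PCI-Express lanes routed to add-in card slots."""
    return sum(electrical for electrical, _ in riser.slots)


def check(riser: Riser) -> bool:
    """Each slot's electrical width must fit its mechanical connector, and the
    card slots together must not exceed the riser's x16 link. The type 5 x1
    control line is recorded in extras and is not counted here."""
    fits = all(electrical <= mechanical for electrical, mechanical in riser.slots)
    return fits and card_lanes(riser) <= 16


if __name__ == "__main__":
    for r in RISERS:
        print(r.riser_type, card_lanes(r), r.faces, check(r))
```

Encoding the types this way makes the article’s distinctions easy to query, for example which risers mount their cards facing down (types 5 and 6).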

Both the type 1 riser cards and the power distribution boards shown in the last few weeks were Rev B evaluation prototypes.

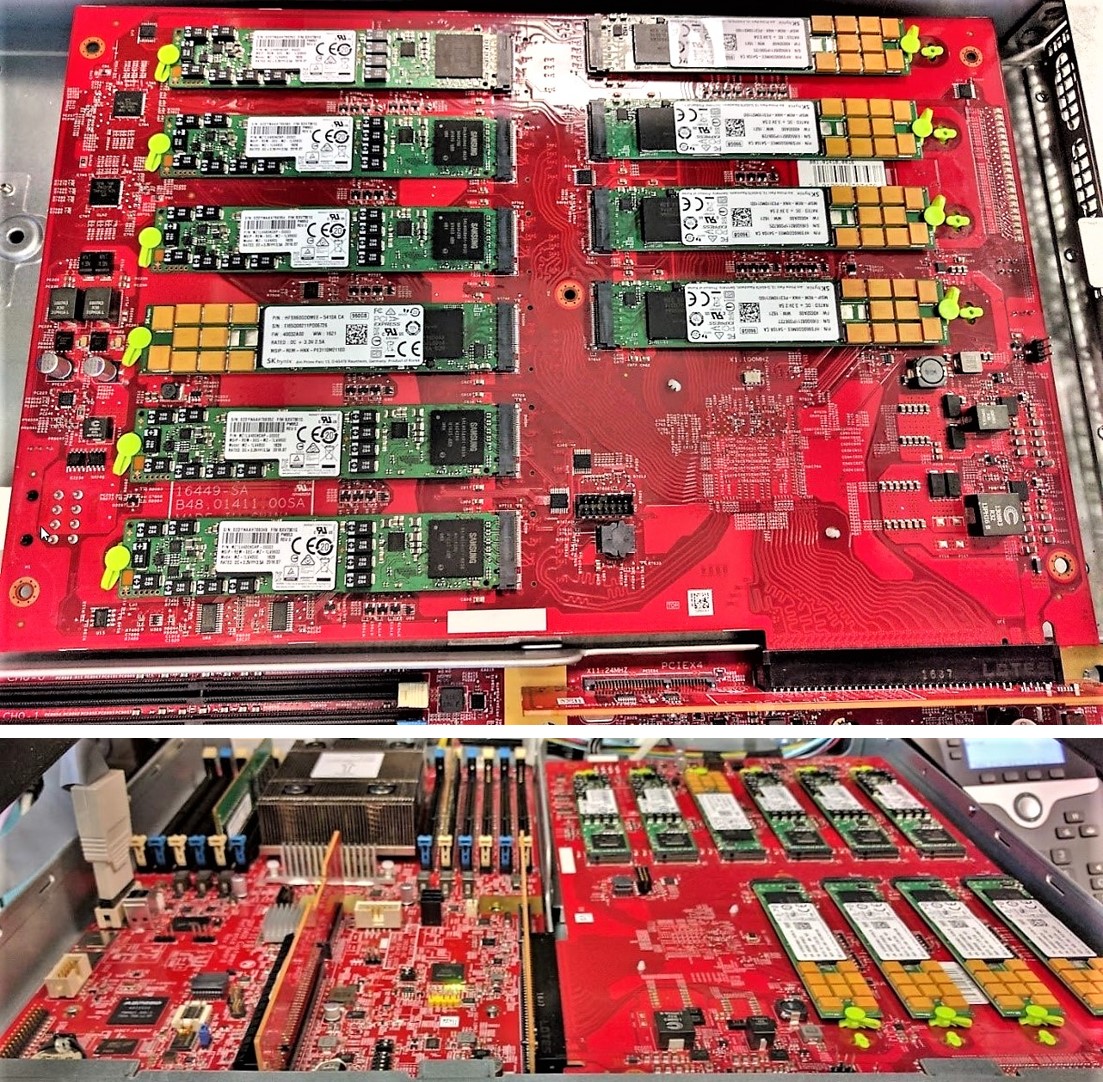

Two types of add-in cards were shown using risers at the launch event and SC17: a Mellanox network add-in card (in several systems above and below) and Qualcomm’s MegaCard.

Qualcomm’s MegaCard hosts twenty M.2 NVM-Express storage cards, ten on each side of the MegaCard. The NVM-Express cards attach to a Microsemi PM8536 PCI-Express 3.0 switch, which connects to the Qualcomm Centriq motherboard via the PCI-Express x16 type 5 riser (above). Because of the board area needed to host ten M.2 cards per side, the MegaCard takes the place of a second Centriq 2400 motherboard in a full-width 1U chassis; its PCI-Express connector is on the opposite side of the riser from the type 1 card, and is mounted higher on the riser. High-end M.2 NVM-Express drives hold 4 TB each, so with twenty drives Qualcomm’s MegaCard can house 80 TB of PCI-Express 3.0 NVM-Express storage.
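The MegaCard numbers can be worked through in a few lines. This is a sketch under one loud assumption the article does not state: that each M.2 drive links to the switch at PCI-Express 3.0 x4, which is typical for NVM-Express M.2 drives. Under that assumption the twenty drives present 80 downstream lanes against the x16 uplink, a 5:1 lane oversubscription, and the uplink tops out near 15.75 GB/s per direction after PCI-Express 3.0’s 128b/130b encoding (before packet protocol overhead). `megacard_summary` is a hypothetical helper.

```python
# Assumption (not from the article): each M.2 NVMe drive uses a PCIe 3.0 x4 link.
PCIE3_GT_PER_LANE = 8.0      # PCIe 3.0 raw signaling rate, gigatransfers/s per lane
PCIE3_ENCODING = 128 / 130   # 128b/130b line-encoding efficiency


def lane_gb_per_s() -> float:
    """PCIe 3.0 bandwidth per lane, per direction, in GB/s after line encoding."""
    return PCIE3_GT_PER_LANE * PCIE3_ENCODING / 8


def megacard_summary(drives: int = 20, tb_per_drive: int = 4,
                     lanes_per_drive: int = 4, uplink_lanes: int = 16) -> dict:
    """Capacity and lane budget for a MegaCard-style switch mezzanine."""
    downstream = drives * lanes_per_drive
    return {
        "capacity_tb": drives * tb_per_drive,
        "downstream_lanes": downstream,
        "switch_lanes_used": downstream + uplink_lanes,
        "oversubscription": downstream / uplink_lanes,
        "uplink_gb_per_s": uplink_lanes * lane_gb_per_s(),
    }


if __name__ == "__main__":
    for key, value in megacard_summary().items():
        print(f"{key}: {value:.2f}" if isinstance(value, float) else f"{key}: {value}")
```

The oversubscription matters less than it looks for capacity-oriented storage, since not all drives are typically saturated at once, but it does mean the x16 riser, not the drives, sets the card’s peak sequential throughput.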

At the Centriq launch event, Qualcomm reinforced the importance of applications and workloads. Qualcomm and its partners set up about two dozen demo stations for launch.

Qualcomm is targeting highly threaded workloads that scale out well. Its high-thread-count, high-memory-bandwidth architecture is well suited to containerized, microservices-based applications such as search, content delivery networks, and memory-intensive data analytics.

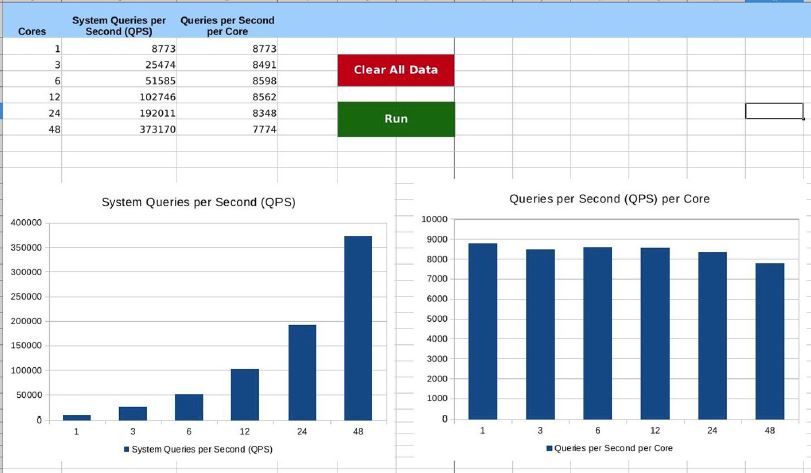

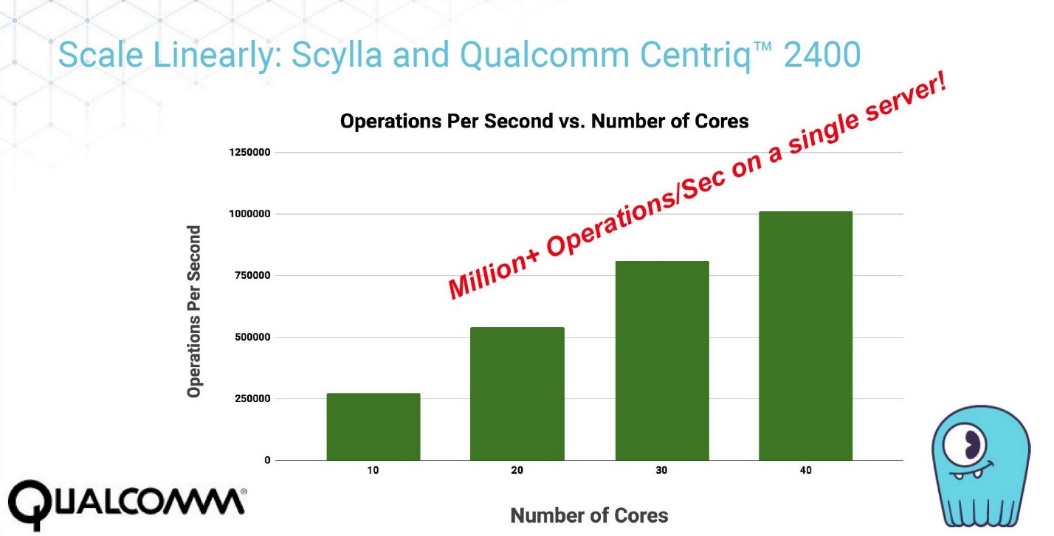

Two database companies showed performance scaling data running on Qualcomm Centriq 2400: MariaDB and ScyllaDB.

MariaDB is an open source SQL (relational) online transaction processing (OLTP) database. Centriq 2400’s high core count lets MariaDB’s one-thread-per-connection model scale well; MariaDB’s internal testing shows near-linear scaling up to 46 concurrent database sessions on a 48-core Centriq 2400 processor. The remaining two cores are dedicated to housekeeping tasks.
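To show what a one-thread-per-connection model means structurally, here is a toy Python sketch (not MariaDB code): every session gets its own dedicated thread, and the session cap of 46 mirrors the 48 cores minus the two left for housekeeping. `serve_sessions`, `MAX_SESSIONS`, and the two-core reservation constant are illustrative assumptions. Because of CPython’s global interpreter lock, this toy demonstrates the threading structure only, not CPU scaling.

```python
import threading

TOTAL_CORES = 48
HOUSEKEEPING_CORES = 2                           # 48 cores minus 46 sessions
MAX_SESSIONS = TOTAL_CORES - HOUSEKEEPING_CORES  # one session per remaining core


def serve_sessions(n_sessions: int) -> int:
    """Toy thread-per-connection server: start one dedicated thread per session.

    Returns the number of distinct threads that handled sessions.
    """
    if not 0 < n_sessions <= MAX_SESSIONS:
        raise ValueError(f"sessions must be in 1..{MAX_SESSIONS}")

    # The barrier keeps every session thread alive until all have started,
    # so no thread identifier can be reused by a later session.
    barrier = threading.Barrier(n_sessions)
    lock = threading.Lock()
    idents = []

    def session() -> None:
        # A real server would read queries from this session's socket here.
        with lock:
            idents.append(threading.get_ident())
        barrier.wait()

    threads = [threading.Thread(target=session) for _ in range(n_sessions)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return len(set(idents))


if __name__ == "__main__":
    print(serve_sessions(MAX_SESSIONS))
```

The design choice the article highlights follows directly: with one thread per connection, concurrency is bounded by how many threads the hardware can run at once, so a high core count translates into more simultaneously active sessions.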

MariaDB Server 10.2 for Centriq 2400 server processor is generally available now.

ScyllaDB performed a benchmark study of its database running on three Centriq 2400 server nodes. The number of active cores per node was varied from 10 to 40 via boot settings. The study showed that performance across the three systems scaled to exceed one million IOPS (read/write operations per node) and scaled linearly as the number of cores per node increased to 40.
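“Scaled linearly” has a simple quantitative meaning: throughput grows in proportion to cores, so the speedup divided by the increase in cores stays near 1.0. The helper below (`scaling_efficiency`, a hypothetical name) computes that ratio; the numbers in the usage line are made up for illustration and are not ScyllaDB’s published results.

```python
def scaling_efficiency(base_cores: int, base_ops: float, cores: int, ops: float) -> float:
    """Return speedup divided by the increase in cores.

    1.0 means perfectly linear scaling; values below 1.0 mean sublinear scaling.
    """
    if base_cores <= 0 or base_ops <= 0 or cores <= 0:
        raise ValueError("core counts and baseline throughput must be positive")
    speedup = ops / base_ops
    core_ratio = cores / base_cores
    return speedup / core_ratio


if __name__ == "__main__":
    # Hypothetical numbers, NOT ScyllaDB's results: 4x the cores, 3.6x the throughput.
    print(round(scaling_efficiency(10, 100.0, 40, 360.0), 2))  # 0.9
```

Going from 10 to 40 cores per node, as in the study, a result close to 1.0 at every step is what “linear” claims.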

Excelero, an NVM-Express block storage server startup which we have covered before, announced strategic funding from Qualcomm Ventures and demonstrated its product running on Centriq. The MegaCard looks like a good match for Excelero’s application, and might accelerate databases like MariaDB and ScyllaDB.

Leendert van Doorn, distinguished engineer for Microsoft Azure, also mentioned during his presentation that Azure values Centriq 2400’s throughput processing for serving search results and for implementing large in-memory databases. Van Doorn pointed out that for queries with no locality of reference, where each query is likely to access a different part of the database in what he described as “random walks through the database,” lots of cores that each keep requests outstanding to the memory system extract higher aggregate performance from that memory system.
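Van Doorn’s argument is essentially Little’s law applied to memory: sustained throughput equals the number of requests in flight divided by the latency of each request. When accesses are random, caches do not help much, so the only way to raise throughput is to keep more misses in flight, and more cores means more misses in flight. The sketch below makes this concrete; the 64-byte line size, 100 ns latency, per-core outstanding-miss counts, and peak cap are illustrative assumptions, not Centriq 2400 specifications, and `memory_throughput_gb_s` is a hypothetical helper.

```python
from typing import Optional


def memory_throughput_gb_s(cores: int, outstanding_per_core: int,
                           line_bytes: int = 64, latency_ns: float = 100.0,
                           peak_gb_s: Optional[float] = None) -> float:
    """Little's law: throughput = requests in flight / latency.

    Bytes per nanosecond equals GB/s (10**9 bytes per second). The optional
    peak_gb_s caps the result at the memory system's maximum bandwidth.
    """
    in_flight = cores * outstanding_per_core
    achieved = in_flight * line_bytes / latency_ns
    if peak_gb_s is not None:
        achieved = min(achieved, peak_gb_s)
    return achieved


if __name__ == "__main__":
    # Illustrative only: one core vs. many cores, one miss in flight each.
    print(memory_throughput_gb_s(1, 1))
    print(memory_throughput_gb_s(48, 1))
```

With these assumed numbers, one core with a single miss in flight sustains 0.64 GB/s, while 48 such cores sustain about 30.7 GB/s; the benefit stops once the memory system’s peak bandwidth is reached, which is what the optional cap models.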

Synopsys demonstrated its VCS verification simulation and design package running on Centriq 2400. It was a demonstration only. Michael Sanie, VP of Marketing for Synopsys, said that VCS was easy to prepare for the demo and runs comfortably on the Centriq 2400. Sanie was careful not to call the demo a “port” of VCS, because porting implies commitment to debugging and quality assurance (QA). He said that Synopsys runs “thousands upon thousands” of regression testing cycles for a port. He did say that he does not see any issues with doing a 64-bit Arm port and that Synopsys is looking at the feasibility of porting its entire platform to 64-bit Arm processors. Synopsys and Qualcomm have a longstanding relationship, and I can envision Synopsys eventually supporting a “Qualcomm runs on Qualcomm” initiative, much as AMD implemented in the 2000s with its Opteron product line.

Cadence announced it is shipping its Xcelium design simulation and verification software application for Arm 64-bit processors and demonstrated Xcelium running on Centriq 2400. Xcelium runs on the SUSE Linux Enterprise operating system.

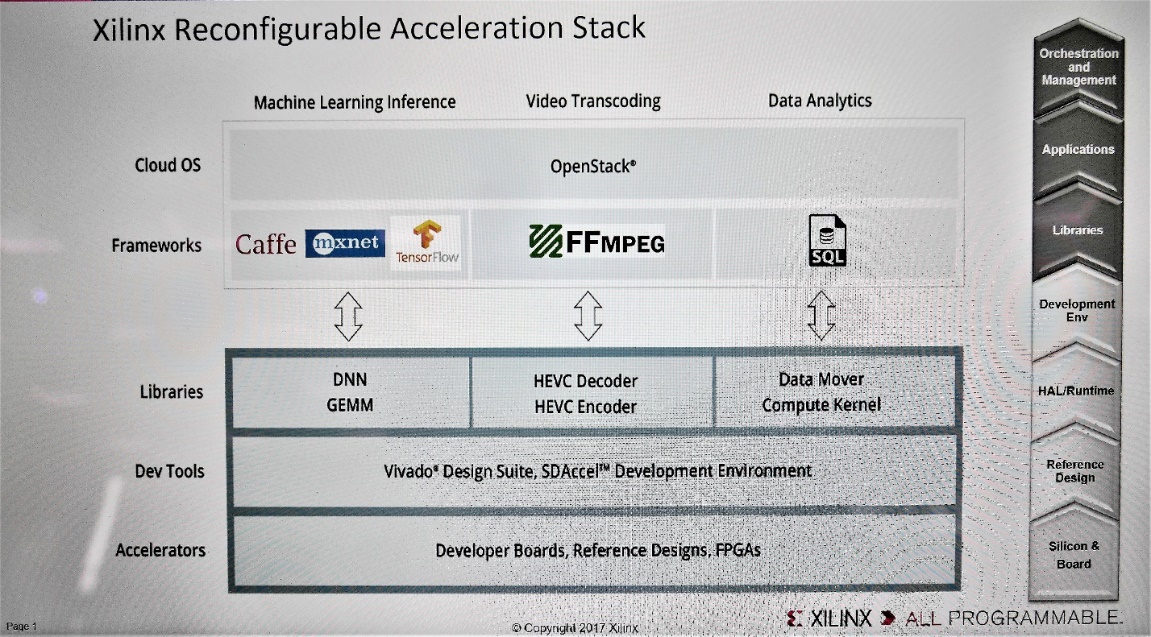

Xilinx demonstrated a machine learning inference acceleration stack based on its Virtex UltraScale+ FPGAs running on Centriq 2400. ML inference is sensitive to both throughput and latency. Qualcomm and Xilinx have collaborated for years, and are working together on CCIX and other technologies beyond the datacenter. Likewise, FPGA acceleration would be a key component for running Qualcomm’s chip design efforts on Qualcomm datacenter infrastructure.

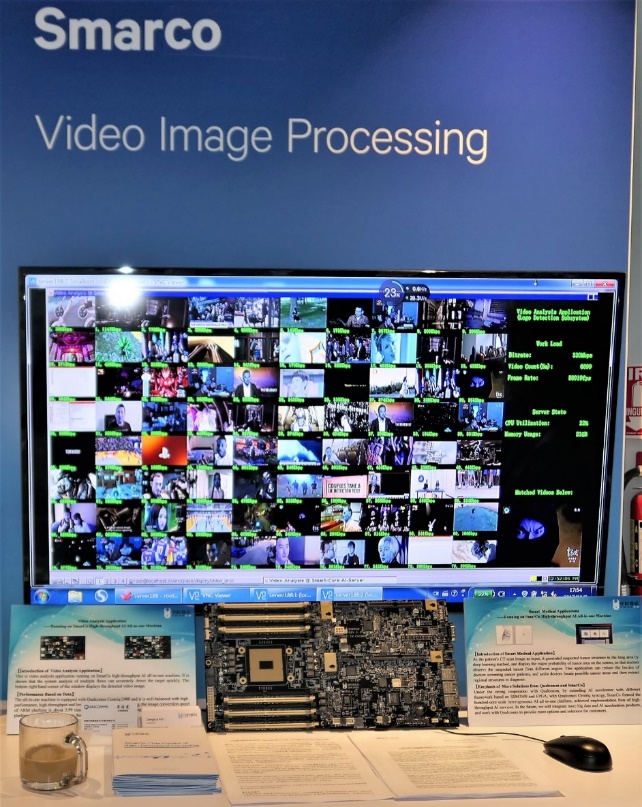

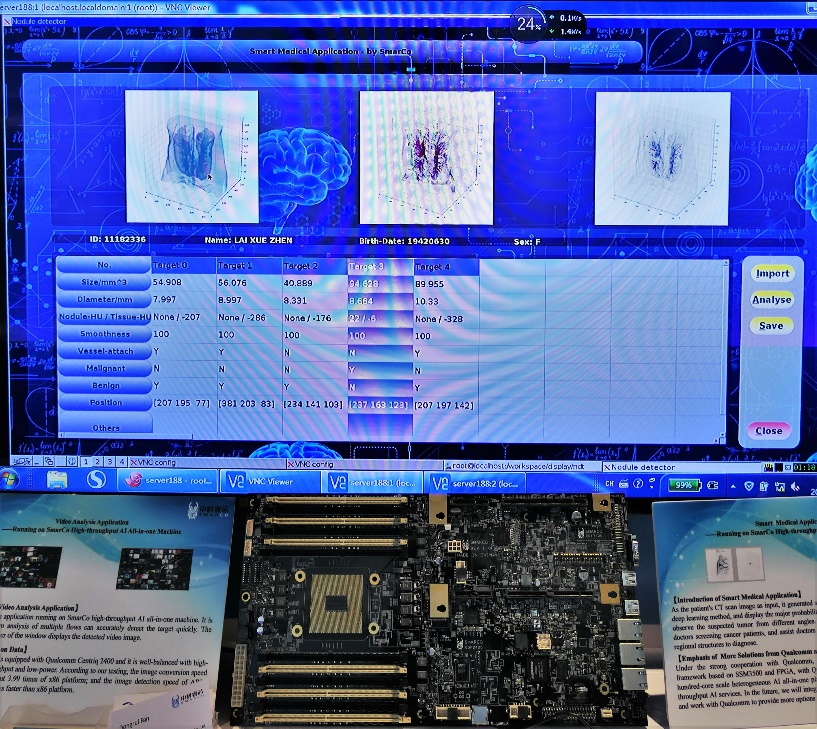

SmarCo showed its “all in one” machine learning platform, based on an in-house variation of Qualcomm’s Centriq 2400 reference motherboard design. SmarCo performs high-throughput video image processing using PCI-Express-based FPGA accelerator cards, and video conversion using its proprietary SSM3500 PCI-Express cards built around its own SmarCo-2 video processing chip. SmarCo claims that image conversion on a Centriq 2400-based platform is 4X faster than on a comparable x86 platform, and that image detection is about 1.5X faster.

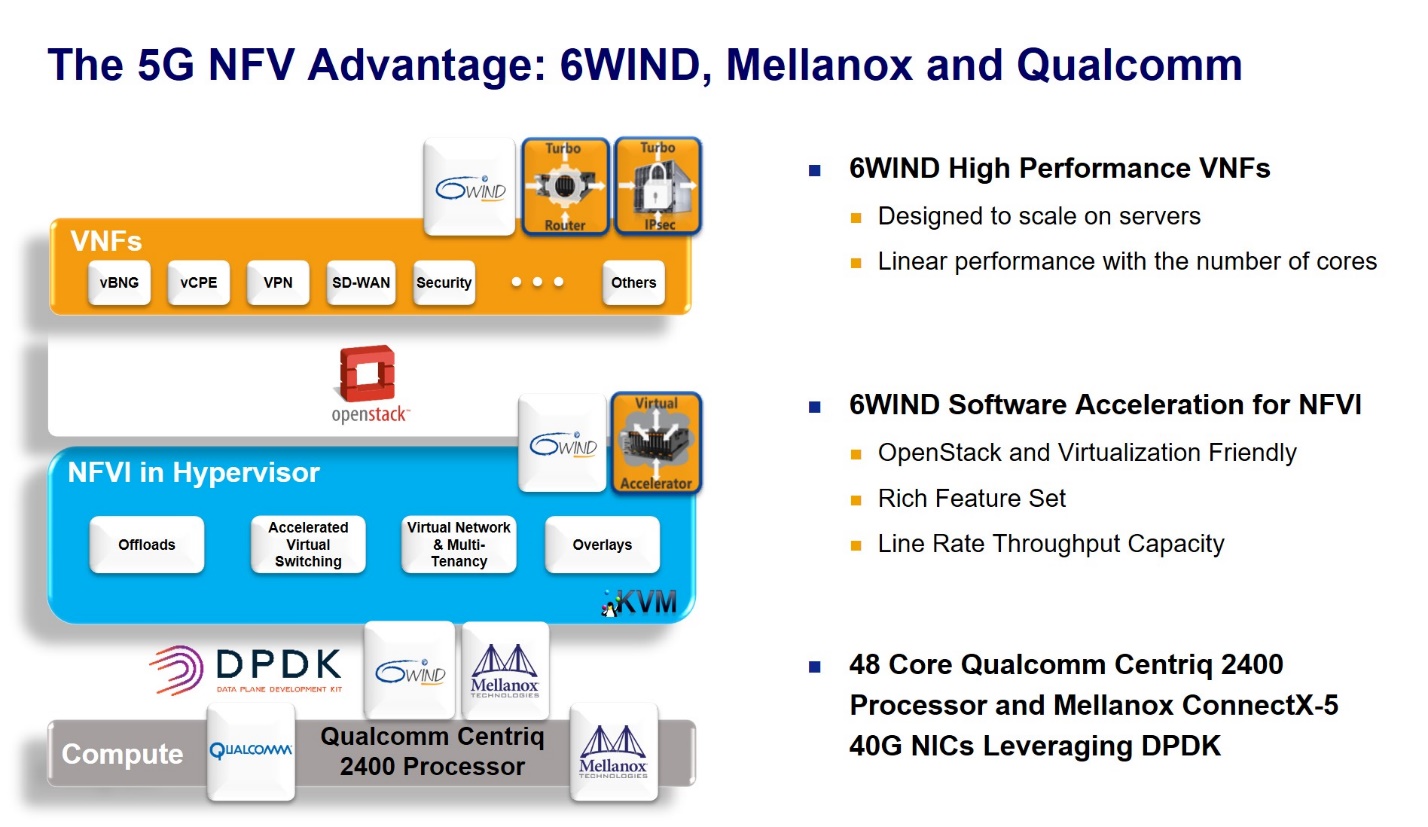

Mellanox Technologies and 6Wind demonstrated a network security gateway assembled from a combination of the Centriq reference platform, Mellanox’s current generation ConnectX-5 2×40 Gb/sec NICs, and 6Wind’s DPDK-based virtual network function (VNF) software. 6Wind announced support for Arm 64-bit processors only a few weeks before Qualcomm’s Centriq launch.

Solarflare is using its XtremeScale SDN network interface cards and Centriq 2400 server nodes (two nodes per 1U chassis) to form what it calls “neural class networks” for large-scale distributed computing environments. Each NIC supports up to 2048 virtual LAN connections, supporting 76 Centriq 2400 processors in a single rack (that’s 3,648 cores). Solarflare is running the NGINX app delivery platform on Centriq 2400 and states that it will support web hosting and big data analytics as well. Solarflare is already deploying Centriq-based neural class networks.
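Solarflare’s rack figures can be checked with a little arithmetic. A minimal sketch, assuming one Centriq 2400 per node (with two nodes per 1U, as stated) and using the 48-core count and the 8W idle / 120W peak SoC power figures quoted earlier in this article. The power totals cover the SoCs only, not memory, NICs, storage, or fans, and `rack_summary` is a hypothetical helper.

```python
CORES_PER_SOC = 48   # Centriq 2400 core count
NODES_PER_1U = 2     # two Centriq nodes per 1U chassis
IDLE_W = 8           # SoC idle power, watts
PEAK_W = 120         # SoC peak power, watts


def rack_summary(socs: int = 76) -> dict:
    """Cores, rack space, and SoC-only power for a rack of Centriq nodes.

    Assumes one Centriq 2400 SoC per node.
    """
    return {
        "cores": socs * CORES_PER_SOC,
        "rack_units": -(-socs // NODES_PER_1U),  # ceiling division
        "soc_idle_w": socs * IDLE_W,
        "soc_peak_w": socs * PEAK_W,
    }


if __name__ == "__main__":
    print(rack_summary())
```

For 76 processors this gives 3,648 cores in 38U, with roughly 608W of SoC power at idle and 9,120W at peak, a level that, per the article, air-cooled heat sinks can handle without liquid cooling.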

Chelsio announced that its T6 Unified Wire networking solutions are available for the Centriq 2400 reference platform and demonstrated one of its Ethernet adaptors from Qualcomm’s Approved Vendor List (AVL).

Netronome announced that its Agilio SmartNICs and software are available for Centriq 2400. Agilio SmartNICs offload virtual switch and router datapath processing for networking functions such as overlays, security, load balancing, and telemetry. Netronome is aiming its Agilio with Centriq solution at cloud service providers, including telcos.

Packet Networks used an Amazon Alexa front-end to demonstrate a full range of its tools running on Centriq 2400 reference platforms in Packet’s datacenter. Packet is already providing Arm-based bare metal servers to its software developer base of Infrastructure-as-a-Service (IaaS) customers. Bare metal customers care about the specific hardware they are using, because bare metal machines are single tenant instances; developers must know what they are paying for to get the most performant infrastructure for their needs. Packet Networks is already hosting Centriq 2400 reference platforms for its customers, although the platforms are not yet on Packet’s bare metal price list.

In addition, Illumina demonstrated its high throughput bioinformatics running on a Centriq 2400 platform. Canonical demonstrated its OpenStack platform, while Red Hat showed its Enterprise Linux for ARM running on Centriq 2400. Qualcomm also showed its own internally designed demos of MongoDB with Varnish serving web apps, the HHVM web server, and Spark in-memory social graphs.

Where To From Here?

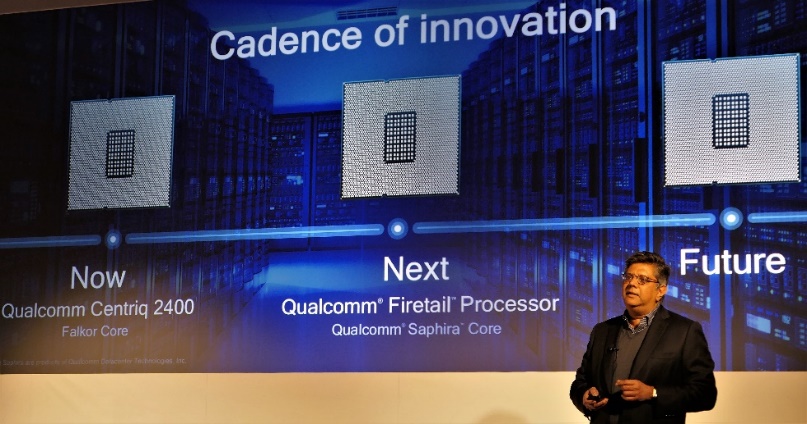

Anand Chandrasekher closed Qualcomm’s presentation with a glimpse into the future of Centriq. In keeping with Qualcomm’s execute-first approach, he didn’t say very much. Chandrasekher disclosed only the code names for the next-generation Centriq core and processor, “Saphira” and “Firetail,” respectively, and confirmed that a fourth generation is under development. (Remember that Centriq 2400 is actually Qualcomm’s second-generation SoC design.)

Qualcomm’s strategy for bringing an ecosystem to Centriq launch was sound. No one else in the server ecosystem has demonstrated so many parts of the Arm 64-bit datacenter ecosystem in one place and at such an advanced stage of development.

Paul Teich is an incorrigible technologist and a principal analyst at TIRIAS Research, covering clouds, data analysis, the Internet of Things and at-scale user experience. He is also a contributor to Forbes/Tech. Teich was previously CTO and senior analyst for Moor Insights & Strategy. For three decades, Teich immersed himself in IT design, development and marketing, including two decades at AMD in product marketing and management roles, finishing as a Marketing Fellow. Paul holds 12 US patents and earned a BSCS from Texas A&M and an MS in Technology Commercialization from the University of Texas McCombs School.
