In public sector high performance computing, dollars follow teraflops, and now petaflops. This is especially true in the datacenters of academia, where cutting-edge computational research projects funded by large grants seek the most powerful supercomputers in the region.

Institutions with limited budgets are getting creative about funding their supercomputing needs, forming collectives that pool funds and share computing resources. Some institutions, such as Iowa State University, are doing this internally, with various departments pitching in to buy a single, large HPC cluster, as with its Condo supercomputer.

In Japan, the University of Tokyo (U Tokyo) and University of Tsukuba (U Tsukuba) created an entirely new entity to fund, define, and manage their new supercomputer, called Oakforest-PACS. “The Joint Center for Advanced HPC (JCAHPC) was established in 2013,” says professor Toshihiro Hanawa of U Tokyo’s Supercomputing Research Division, Information Technology Center. “Together, we designed Oakforest-PACS to be the next-generation supercomputer in Japan. Ten faculty from the two universities share operation and management of it.” Their efforts have paid off. Installed in the third quarter of 2016, Oakforest-PACS, with 25 petaflops of peak double precision floating point performance, became the fastest supercomputer in Japan, outpacing the K supercomputer, which is many years old. Oakforest-PACS placed at number seven on the June 2017 Top500 list, with 13.5 petaflops of sustained Linpack performance, one position ahead of K.

The 10 petaflops variant of the K machine, which is a massively parallel Sparc64 machine, was launched in 2012, and its successor (the post-K computer) will be deployed around 2020, according to professor Taisuke Boku of U Tsukuba’s Center for Computational Science. “Oakforest-PACS was built to meet two goals,” explains Boku, “to bridge the gap between the K computer and post-K computer and to support next generation, general purpose computing compatible with an X86 architecture.”

Built On Knights And Next Generation Fabric

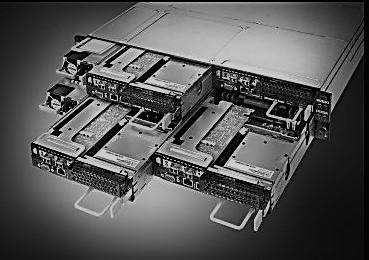

Fujitsu built the Oakforest-PACS machine, and was the vendor of the K machine as well. To meet the performance requirements defined by JCAHPC, Fujitsu engineers designed Oakforest-PACS around Intel’s “Knights” Many Integrated Core (MIC) architecture. The massively parallel computing resources in each chip were a good match for the kinds of codes that Oakforest-PACS runs. With 8,208 nodes built on Intel Xeon Phi processors, it is the second largest deployment of Intel’s MIC processor in the world, and the largest installation with Intel Omni-Path Architecture (Intel OPA). “This processor with Intel’s fabric is an ideal combination for high throughput of MPI codes, which are common in science and engineering,” comments Katsumi Yazawa of Intel.

When defining their needs, U Tsukuba and U Tokyo focused on a machine with very high peak performance that would be compatible with the X86 instruction set. “Our codes are written for general purpose computing based on the X86 instruction set,” said Hanawa. “It’s the world’s most widely used instruction set. But, we didn’t describe an accelerated solution, nor require the Intel Xeon Phi processor.”

“We specified the performance. We wanted the performance of a MIC solution,” adds Boku, “but based on a general purpose X86 processor architecture, not GPUs.”

JCAHPC was well aware of the performance from MIC architecture and the vector performance enhancements that such processors offered. “For several years, developers and computational scientists at the universities have been developing and testing parallel codes using Intel Advanced Vector Extensions-512 (AVX-512) to run on clusters located at both universities,” states Boku.

“We also have experience with MIC architecture,” Hanawa explains. While the Intel Xeon Phi processor 7250 has 68 cores, they are much simpler cores that require slightly different code optimizations than those used for the monolithic Intel Xeon processor family. To help tune codes and make production runs before Oakforest-PACS, U Tsukuba introduced Japan’s largest cluster equipped with Intel’s earlier MIC coprocessor as an accelerator, delivering 1 petaflops of performance. U Tokyo also introduced a small cluster, mainly for system-side research and performance tuning. Both universities have good experience with these systems, where developers and scientists have been optimizing code for MIC. “It is very good for us to have had Intel Xeon Phi coprocessor-based systems before Intel Xeon Phi processors. We learned the approach to performance tuning in both numerical floating-point performance and memory performance on this architecture. One of the big codes we have been working on was developed on our Intel Xeon Phi coprocessor cluster. We used the native mode of the processor, making it easy to port to Oakforest-PACS.”

“So, we understand the work we have to do to take advantage of the smaller cores of the Intel Xeon Phi processor in order to achieve maximum performance from the system,” Boku says. “We also realize that the memory architectures of the Intel Xeon Phi coprocessors and Intel Xeon Phi processors are different, but we have good experience with this architecture.”

When considering the fabric of Oakforest-PACS, Boku and Hanawa targeted both InfiniBand EDR and Intel OPA. “We didn’t prefer one or the other. We just specified performance,” says Boku. “We selected several application and micro-benchmarks for the network and CPU tests, and used an MPI benchmark for the network that wasn’t based on Performance Scaled Messaging (PSM).” According to Hanawa, the vendors responding to the Request for Proposal (RFP) chose their own solution and ran the benchmarks, reporting the results back to JCAHPC. Fujitsu chose Intel OPA, and eventually was awarded the build of the new supercomputer.

Early Results

Oakforest-PACS was released into production in December 2016. Early research is returning good performance on the system. “We have been running Lattice Quantum Chromodynamics (QCD) codes and some first-order optical material science simulations using Ab-initio Real-Time Electron Dynamics Simulator (ARTED) on Oakforest-PACS,” says Boku. U Tsukuba is at the heart of Lattice QCD work in Japan, with more than 25 years of experience. The university hosts an Intel Parallel Computing Center, which targets Lattice QCD optimization. “These are very quantum-level calculations and simulations. With its hundreds of mesh points, the QCD code demands a lot of memory bandwidth rather than CPU performance. We are seeing the benefits of Intel Xeon Phi Processor’s multi-channel DRAM (MCDRAM) for running Lattice QCD,” he added.

“Sustained performance from ARTED has reached 25 percent of theoretical peak,” says Hanawa. “That’s about 700 gigaflops per chip, which is much faster than what the standard Intel Xeon processor can sustain.” At U Tokyo, early users are performing atmosphere and ocean coupling and earthquake simulations using GAMERA/GOJIRA codes. Both universities expect to have published research from Oakforest-PACS later in 2017.
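The peak and sustained figures quoted above can be sanity-checked with simple arithmetic. The following is a minimal sketch, not a statement of how JCAHPC or Intel derive their numbers. The core count and node count come from the article; the 1.4 GHz clock, the 32 double precision flops per core per cycle, and the one-processor-per-node layout are assumptions introduced here for illustration.

```python
"""Back-of-envelope peak and sustained performance for a Knights Landing system."""

CORES_PER_CHIP = 68      # from the article: Intel Xeon Phi processor 7250
NODES = 8208             # from the article; one processor per node is assumed
CLOCK_GHZ = 1.4          # assumption: nominal clock, not stated in the article
FLOPS_PER_CYCLE = 32     # assumption: 2 AVX-512 units x 8 doubles x 2 (fused multiply-add)


def chip_peak_gflops(cores=CORES_PER_CHIP, clock_ghz=CLOCK_GHZ,
                     flops_per_cycle=FLOPS_PER_CYCLE):
    """Theoretical double precision peak of one chip, in gigaflops."""
    return cores * clock_ghz * flops_per_cycle


def system_peak_pflops(nodes=NODES):
    """Theoretical peak of the whole machine, in petaflops."""
    return nodes * chip_peak_gflops() / 1e6  # 1 petaflops = 1e6 gigaflops


def sustained_gflops(fraction_of_peak):
    """Per-chip sustained rate for a code running at a given fraction of peak."""
    return fraction_of_peak * chip_peak_gflops()


if __name__ == "__main__":
    print(f"per-chip peak:    {chip_peak_gflops():.1f} gigaflops")
    print(f"system peak:      {system_peak_pflops():.1f} petaflops")
    print(f"25% of chip peak: {sustained_gflops(0.25):.1f} gigaflops")
```

Under these assumptions the system peak works out to roughly 25 petaflops, in line with the figure quoted for Oakforest-PACS, and 25 percent of per-chip peak lands in the same range as the roughly 700 gigaflops Hanawa cites. A lower effective clock under heavy vector load would pull the per-chip number down.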

Ken Strandberg is a technical story teller. He writes articles, white papers, seminars, web-based training, video and animation scripts, and technical marketing and interactive collateral for emerging technology companies, Fortune 100 enterprises, and multi-national corporations. He can be reached at ken@catlowcommunications.com.

Oakforest is an interesting machine, as not too many KNL supers are out there. However, like all the other machines that are ahead of K in the Top500 rankings, this more conventional and low cost machine falls behind the K architecture, and the newer PrimeHPC FX100 and even FX10, in terms of its HPCG performance. Much more telling is its HPCG % of peak, which is 5.3% for K and only 1.5% for Oakforest.

The computational efficiency of K has still yet to be matched by anything outside of the PrimeHPC family, with 93% for K and only about 55% for Oakforest. K is much like the original Earth Simulator was: an architecture that was not truly outdone for a long time, even after it was no longer at the top of the Top500. Not surprising, since Dr. Tadashi Watanabe had a hand in both machines.
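For readers who want to check the efficiency figures, computational efficiency here is just sustained performance divided by theoretical peak. The sketch below is illustrative only. It assumes that the 13.5 petaflops Top500 figure mentioned in the article is the sustained Linpack result for Oakforest-PACS and that its peak is 25 petaflops; the function names are hypothetical.

```python
def efficiency(sustained_pflops, peak_pflops):
    """Fraction of theoretical peak that a benchmark actually sustains."""
    if peak_pflops <= 0:
        raise ValueError("peak must be positive")
    return sustained_pflops / peak_pflops


def implied_sustained_pflops(fraction_of_peak, peak_pflops):
    """Invert the ratio: sustained performance implied by a percent-of-peak figure."""
    return fraction_of_peak * peak_pflops


OAKFOREST_PEAK_PFLOPS = 25.0     # from the article
OAKFOREST_LINPACK_PFLOPS = 13.5  # assumption: the Top500 figure is Oakforest's Linpack result

if __name__ == "__main__":
    linpack_eff = efficiency(OAKFOREST_LINPACK_PFLOPS, OAKFOREST_PEAK_PFLOPS)
    print(f"Linpack efficiency: {linpack_eff:.0%}")

    # An HPCG result at 1.5% of peak implies well under half a petaflops sustained.
    hpcg = implied_sustained_pflops(0.015, OAKFOREST_PEAK_PFLOPS)
    print(f"implied HPCG: {hpcg:.3f} petaflops")
```

With those inputs the Linpack ratio comes out at 54 percent, consistent with the "about 55%" quoted above.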

The only thing that’s likely to truly outpace K in the hardest areas, such as computational efficiency and interconnect-taxing workloads like those the HPCG benchmark simulates, is Post K. Interestingly, it sounds like Aurora might be morphing into an Intel-driven, Post K style architecture.