The US Department of Energy – and the hardware vendors it partners with – are set to enliven the exascale effort with nearly a half billion dollars in research, development, and deployment investments. The push is led by the DoE’s Exascale Computing Project and its extended PathForward program, which was announced today.

The future of exascale computing in the United States has been subjected to several changes, some public, some still in question (although we received a bit more clarification, which we will get to in a moment). The timeline for delivering an exascale capability system has also shifted, with the most recent projections landing us in the 2021-2022 timeframe with “at least one” system. This roadmap was confirmed today with a DoE announcement that backs six HPC companies as they create the elements for next-generation systems.

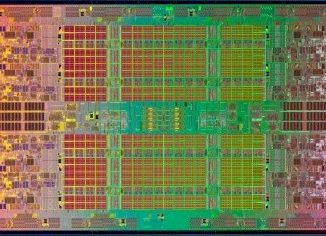

Despite lingering questions about the US exascale effort, one thing is now clear: which companies are set to receive exascale research and development funding, ideally culminating in the system components needed to reach sustained exaflop performance on real-world applications. The vendors on this list are Intel, Nvidia, Cray, IBM, AMD, and Hewlett Packard Enterprise, a list we will pick apart with deeper dives into each of the vendors and their current exascale-oriented technologies.

Broadly, there will be $258 million in funding allocated over a three-year contract period for this PathForward project spread across all of these six companies. There is no indication that the funds are evenly split six ways across those winning the awards. The R&D funding is set along “work package” guidelines wherein the companies deliver reports to the DoE exascale groups (including software and application developers) to check the pace along the way. According to Argonne National Lab’s Paul Messina, who heads the Exascale Computing Project that will provide guidance to the PathForward groups, “It’s not like we spend the money and then wait three years to get an answer.”

“This $258 million in funding over three years will be supplemented with the companies providing additional funding amounting to at least 40 percent of their total project cost, bringing the total investment to at least $430 million”

As the technical lead for HPE’s “The Machine” architecture, Paolo Faraboschi clarifies for The Next Platform, “This is an R&D acceleration contract. At the end of PathForward, the DoE is not expecting a product. They’re hoping for the acceleration of these projects so they become product ready. Typically the partners in these kinds of projects assume a 40% cost share, so for every ten dollars the DoE puts in, the company is expected to put in four dollars so the total amount of work is fourteen dollars. The procurement of the systems will come in the second stage and this is not funded by DoE centralized but the national labs; they have the budget, site, they send out a tender for a specific installation. This will happen toward the end of PathForward, so the expectation is the companies that have been awarded PathForward will continue the productization phase so when the facilities are ready to issue an RFP they can respond with a technology that matches. The second phase is a different, far larger funding pool. The individual machines will be the size of the entire grant at least at that scale.”
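The two cost-share descriptions quoted above do not produce the same totals, and a quick calculation shows which one lines up with the DoE’s figure of at least $430 million. The sketch below is ours, not the DoE’s. It assumes the 40 percent is measured against total project cost, as the DoE statement says, and treats the published minimums as exact values. The function and variable names are purely illustrative.

```python
from fractions import Fraction


def total_from_doe(doe_millions, company_share_of_total):
    """Total project cost when the company covers a given share of the TOTAL.

    If the company pays share s of the total, the DoE pays (1 - s),
    so total = doe / (1 - s).
    """
    return doe_millions / (1 - company_share_of_total)


doe = Fraction(258)  # DoE funding in millions of dollars, from the announcement

# Reading 1: companies cover 40 percent of total project cost.
total = total_from_doe(doe, Fraction(40, 100))
company = total - doe

# Reading 2: "ten dollars from the DoE, four from the company".
ten_plus_four_total = doe * Fraction(14, 10)
ten_plus_four_share = Fraction(4, 14)  # company share of the total

print(f"40% of total: total ${float(total):.1f}M, company ${float(company):.1f}M")
print(f"10 + 4 split: total ${float(ten_plus_four_total):.1f}M, "
      f"company share {float(ten_plus_four_share):.1%}")
```

Under the first reading the total comes to $430 million with $172 million from the vendors, which matches the announcement. The ten-plus-four split would total about $361 million and put the vendor share near 29 percent, so the quote is best read as a loose paraphrase of a six-to-four split.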

“The Department of Energy plans to deliver at least two systems – at least one in 2021 and perhaps two or others later,” Messina explained. “The systems will be purchased not by the exascale project but the facilities that house the leading computing resources at our labs.”

We have been listening closely to any word from Messina because Argonne was supposed to be the site of an Intel-based supercomputer, called Aurora and based on the chip maker’s future “Knights Hill” Xeon Phi processor. A few weeks ago, we started hearing some high-credential rumors that there might be some architectural or timeline changes with Aurora. To this, Messina confirms, albeit with no real detail: “The Aurora system contract is being reviewed for potential changes that would result in a subsequent system in a different timeframe from the original system. But since these are early negotiations we can’t be more specific.”

We have also been tuned into what Messina says because as lead of the Exascale Computing Project, it was his push to have one of the exascale architectures fall into the category of a novel architecture as one of the 2021-2022 target machines. As we noted a few weeks ago, the language at ECP has very recently shifted away from the use of “novel” over to the less exotic-sounding “advanced” and as we can see from the R&D funding listed here, there is no company other than HPE with The Machine that has something that could fit into the “novel” category. When the novel architecture emphasis first arose, we projected that the most conservative fit (meaning manufacturable at scale as well as broadly programmable) would be The Machine and we might be on the right track considering the only truly novel, producible architecture – quantum computing via the D-Wave systems – is not on the list of R&D investments.

Having this research and development funding on the front-end is good news for the exascale effort, but the question is really whether this is all too little and, more importantly, too late. We are in the middle of 2017 and while this funding is generous, if it takes three years to research and develop and another two years to productize, well, you see the problem. That’s 2022 to get it installed and up to another year for full production.

Meanwhile, in China and Japan, for instance, there is a very clear extreme scale computing roadmap in terms of both applications and architectures. These countries set their minds to particular sets of applications and built the architecture around those with what appears to be extraordinary focus. The fact that roadmaps for exascale exist that are not altered frequently speaks volumes about a sense of direction. The DoE has produced its own exascale roadmaps, but the timelines for big supercomputer projects shift, as do the architectures tied to them. For instance, IBM pulled the plug on its Power7-based Blue Waters system and Cray won a deal to build a hybrid CPU-GPU system at the National Center for Supercomputing Applications several years back.

What this R&D funding means, coming as it does in mid-2017, is that there is still a lack of clarity about architectures for systems with definitive timelines attached. This is not a negative statement; workloads are changing with the introduction of machine learning (which the Japanese are building around with their next-gen AI supercomputer designed for both deep learning and traditional simulations) and it is important to get it right.

The PathForward funding will give the six vendors an opportunity to dedicate more resources to their next generation architectures with sound guidance from the HPC application and systems teams that will use them. The risk, as it would be anyway, is that these architectures fail to be a fit—and it is on the vendors to bear more burden for that system development than it might have been in past times (read this for historical perspective of funding trickle-down).

Here’s another way to think about this R&D investment. $258 million in funding across six companies to develop something that might be able to stand in as the correct architecture eventually. We were told this year it took $3 billion for Nvidia to develop its “Volta” GPU. Other architectures require similar billion-dollar scale investments. Unlike government investments in days gone by (those made of DARPA and DoE funds), the actual manufacture and rollout of the products generated from this R&D front-end provided by PathForward will fall to the vendors.

“The work funded by PathForward will include development of innovative memory architectures, higher-speed interconnects, improved reliability systems, and approaches for increasing computing power without prohibitive increases in energy demand,” Messina says. Of the vendors, he adds that it is essential they “play a role in this work going forward.”

Mr. Faraboschi is wrong when saying “projects assume a 40% cost share, so for every ten dollars the DoE puts in, the company is expected to put in four dollars so the total amount of work is fourteen dollars.”

His assertion of a ten plus four funding plan is not mathematically correct. A 40% cost share implies for every six dollars DoE puts in, the company is expected to put in four dollars. The numbers below confirm the six to four ratio.

As stated in the article, $258M in contracts comes from the DoE. That is 60% of total funding, with an additional 40% contribution of $172M from companies to bring the total to $430M.

Please fix the article with an editorial clarification. Thanks.

The nice part about all this government-funded exascale R&D work is that whatever is developed will definitely make its way down into the consumer computer market. So for AMD, Intel, Nvidia, and IBM and its partners, there will be developed IP working its way down into some consumer SKUs (maybe not so much for IBM, but perhaps for some OpenPower Power/Power9 licensees that may produce consumer SKUs). I’m very excited about getting a laptop with much better integrated graphics in the form of an interposer-based APU from AMD that also includes HBM2, or whatever the latest version of HBM memory is at the time, and a GPU die that provides at least 24 Vega or Navi CUs (Compute Units). The whole interposer-based APU would be wired up, Zen cores die to Vega/Navi GPU die and HBM2 or newer die stacks, with thousands of parallel interposer-etched traces to allow for massive amounts of raw effective bandwidth at much lower connection fabric clock speeds (a power saving for exascale and mobile as well).

This NextPlatform article shows what an earlier round of this government exascale funding has produced from AMD:

“AMD Researchers Eye APUs For Exascale”

https://www.nextplatform.com/2017/02/28/amd-researchers-eye-apus-exascale/

This NextPlatform article links to the research PDF below, which is a very good read for those interested in what will be coming on the interposer for APUs in the future. This will be great for the laptop market, as this R&D funding comes in the form of government grants that more than match the private investment for exascale computing systems.

http://www.computermachines.org/joe/publications/pdfs/hpca2017_exascale_apu.pdf

Have to agree with the author, this looks more like a fail in my book. China will definitely be first, no doubt about this anymore. And of all the six who received money, I would say only HPE is justified; at least with The Machine they are trying something different. Also, everyone knows that memory is the real bottleneck in future HPC.

Although they might have to go to Intel to get that memory technology for the Machine to get really rolling. Giving IBM any funds seems to be a total waste of money.

IBM’s research and development is world class, and the new 5nm GAAFET (Gate All Around Field Effect Transistor) process that IBM, Samsung, and GlobalFoundries are working on as part of their IP sharing group is nothing to disregard. There will even be licensing from Micron, maker of XPoint memory, as Micron appears not to want to develop its QuantX (XPoint) on its own. AMD’s Exascale APU (see the PDF in the post above) may be the way to go, with HBM# memory stacked right on the GPU “chiplets” that reside next to the CPU “chiplets” on several levels of interposers (active interposers, not just passive interposers that host only traces). Certainly IBM’s chip fab research, shared with its GF and Samsung partners, will benefit the entire customer base of GF and Samsung, including AMD and others, so IBM getting its share of that exascale funding makes good sense.

No one needs to go to Intel for memory technology: Micron is willing to partner with others and has its own XPoint IP, and IBM has plenty of memory IP of its own, ditto for Samsung, Micron, and others.

Intel will see its cash cow server/HPC business (fat margins) greatly disrupted over the next few quarters by AMD (x86) and IBM/OpenPower. And just wait for AMD’s interposer-based professional APUs for workstations and servers to come online fully in 2018, after this first round of CPU-only EPYC server SKUs begins arriving in a few weeks’ time.

That government exascale funding needs to be increased to at least the billion US dollar range and shared with IBM, AMD, Nvidia, and others, including Intel, simply because that exascale-developed IP will work its way down into the consumer market very quickly and make a quick return on the US government’s small investment, with increased economic activity in the PC market as well as the business/server and HPC markets. The consumer PC market is surely becoming more interesting with some renewed x86 competition, and exascale-funded R&D and IP working its way down means great improvements for a PC market that has been stagnant for too many business quarters.