Exascale computing promises to bring significant changes to the high-performance computing space and, eventually, to enterprise datacenter infrastructures.

The systems, which are being developed in multiple countries around the globe, promise 50 times the performance of the 20 petaflop-capable systems that are now among the fastest in the world, along with corresponding improvements in such areas as energy efficiency and physical footprint. The systems need to be powerful enough to run the increasingly complex applications being used by engineers and scientists, but they can’t be so expensive to acquire or run that only a handful of organizations can use them.

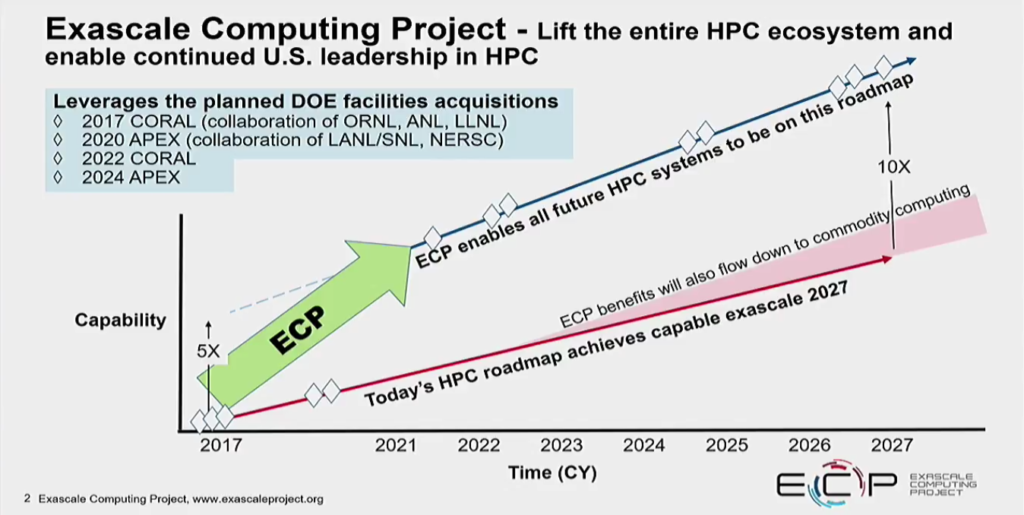

At the same time, the emergence of high-level data analytics and machine learning is forcing some changes in the exascale efforts in the United States, changes that play a role in everything from the software stacks being developed for the systems to the competition with Chinese companies that are also aggressively pursuing exascale computing. During a talk last week at the OpenFabrics Workshop in Austin, Texas, Al Geist, from the Oak Ridge National Laboratory and CTO of the Exascale Computing Project (ECP), outlined the work the ECP is doing to develop exascale-capable systems within the next few years. Throughout his talk, Geist also mentioned that over the past 18 months, the emergence of data analytics and machine learning in the mainstream has expanded the scientists’ thoughts on what exascale computing will entail, for both HPC and enterprises.

“In the future, there will be more and more drive to be able to have a machine that can solve a wider breadth of problems … that would require machine learning to be able to do the analysis on the fly inside the computer rather than having it be written out to disk and analyzed later,” Geist said.

The amount of data being generated continues to increase on a massive scale, driven by everything from the proliferation of mobile devices to the rapid adoption of cloud computing. HPC organizations and enterprises are looking for ways to collect, store and analyze this data in near real-time to be able to make immediate research and business decisions. Machine learning and artificial intelligence (AI) are increasingly being used to help accelerate the collection and analysis of the data. In addition, AI and machine learning are at the heart of many emerging fields of technology, from new cybersecurity techniques to such systems as self-driving cars.

The ECP is taking the growing role of data analytics and machine learning into consideration in the work it is doing, Geist said. That growing role can be seen in the applications being developed for exascale computing. For example, the application development effort touches on a range of areas, from climate and chemistry to genomics, seismology and cosmology. There also is a project underway to develop applications for cancer research and prevention, and increasingly that work includes the use of data sciences and machine learning.

In addition, ECP – which was launched in 2016 – originally had created four application development co-design centers with relatively narrow focuses, such as efficient exascale discretizations and particle applications. One of them, focused on online data analysis and reduction, touched on the rising data sciences effort in the tech industry. In recent months, the program added a fifth co-design center, this one targeting graph and combinatorial methods for enabling exascale applications. The new center was created more specifically to deal with the challenges presented by data analytics and machine learning, he said.

In a more general sense, both of those emerging technologies also have an impact on the types of systems that the ECP is seeking from vendors like IBM, Intel and Nvidia, and on the growing competition with China in the exascale race. The ECP is looking for systems that not only are capable of exascale-level computing, but also can be used by a wide range of organizations, with the idea that the innovations developed for and used in HPC environments will cascade down into the enterprise and commodity machines. Geist said the systems should be usable by a broad array of users, not just a small number of “hero programmers.” Given that, the ECP is looking for vendors to develop systems that can meet the various requirements laid out in the program, such as enabling extreme parallelism, creating new memory and storage technologies that can handle the scaling, providing high reliability, and consuming 20 to 30 megawatts of power.

However, that doesn’t necessarily mean creating radical designs or highly advanced or novel architectures. If the vendors can create exascale-capable systems without resorting to radical solutions, “that would be fine,” Geist said. “In fact, we’d probably prefer that.” At the same time, ECP officials understand that developing the first exascale-capable computer by 2021, as planned, will take some novel approaches to design and architecture. The hope is that by the time the next such systems follow in 2023, such radical approaches won’t be necessary.

“We’re not trying to build a stunt machine,” Geist said, adding that the goal for these systems is not just how many FLOPs they can produce, but how much science they can produce. “We want to build something that’s going to be useful for the nation and for science.”

Such demands are a key differentiator in how the United States and China are approaching exascale computing. China already has three exascale projects underway and a prototype called the Tianhe-3 that is scheduled to be ready by 2018. Much of the talk about China’s efforts has been about the amount of money the Chinese government is investing in the projects. At the same time, China is not as limited by legacy technologies as is the United States, Geist said.

“They can build a chip that is very much geared toward very low energy and high performance without having to worry about legacy apps, or does it run in a smartphone or does it run in a server market,” he said. “They can build a one-off chip.”

U.S. vendors, by contrast, have to build systems that can run a broad range of new and legacy applications, and components that can be used in other systems. Those systems have to be able to handle myriad workloads, which is why the ECP expanded its efforts in the areas of data analytics and machine learning. These are increasingly important technologies that will have wide application in HPC and enterprise computing as well as consumer devices, he said. Vendors know that, and they have to take it into account when thinking about innovations around exascale computing.

The application of emerging technologies will be critical in a wide range of technology areas, so innovations in architectures need to be able to address the needs of both the supercomputing and enterprise worlds. For example, in the HPC space, organizations are turning to machine learning to accelerate the simulation workloads they’re running for such tasks as quality assurance, Geist said.

“In the United States, it’s really important to the health of the ecosystem,” Geist said. “If you’re only going to sell two machines a year or three machines a year, you can’t make a business out of that and you’ll go out of business. Therefore, those chips and those technologies need to be expanded into new markets. This is what the U.S. has to struggle with, to make sure we can meet the requirements of this high-performance level that we want to get to while still meeting the needs of all the other areas. This is why we see this expansion into data analytics and machine learning, which seems to have a much bigger market outside of just the HPC world.”
