The future of Moore’s Law has become a topic of hot debate in recent years, as the challenge of continually shrinking transistors and other components has grown.

Intel, AMD, IBM, and others continue to drive the development of smaller electronic components as a way of advancing compute performance while lowering the cost of that compute. Processors from Intel and others are now moving from 14 nanometer processes down to 10 nanometers, with plans to continue on to 7 nanometers and smaller.

For more than a decade, Intel relied on a tick-tock manufacturing schedule to keep up with Moore’s Law, the observation made by Intel co-founder Gordon Moore in 1965 that, as commonly paraphrased, says the number of transistors in a processor doubles every 18 to 24 months, and that has driven the IT industry ever since. For decades, Moore’s Law circuit shrinks drove the industry in a tick-tick-tick fashion. The tick-tock methodology, created by Pat Gelsinger, the former head of Intel’s enterprise group (and now VMware CEO), alternated between a process shrink (the tick) and microarchitecture innovation (the tock) to drive the cycles of innovation.
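To make the doubling cadence concrete, here is a minimal sketch of the compound growth it implies. It assumes an idealized exponential with a fixed doubling period; the function name `transistor_growth` is hypothetical and used only for illustration.

```python
def transistor_growth(years: float, doubling_months: float) -> float:
    """Growth factor in transistor count after `years`, assuming the count
    doubles once every `doubling_months` months (idealized exponential)."""
    if doubling_months <= 0:
        raise ValueError("doubling_months must be positive")
    doublings = years * 12.0 / doubling_months
    return 2.0 ** doublings


if __name__ == "__main__":
    # Compare the two ends of the commonly quoted 18-to-24-month range.
    for months in (18, 24):
        factor = transistor_growth(10, months)
        print(f"doubling every {months} months: x{factor:.1f} over 10 years")
```

Over a decade, the 18-month cadence gives roughly a 100-fold increase and the 24-month cadence a 32-fold increase, which is why even a modest slip in cadence matters.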

However, the continued shrinking of the circuitry on the chip has posed challenges, with some questioning how much longer the miniaturization can continue, whether the end of Moore’s Law is nigh and, if so, what will replace it. Those challenges have been exemplified by changes in Intel’s manufacturing schedule. In the summer of 2015, Intel said it was moving away from the tick-tock schedule in the chip maker’s transition from 14 nanometers by pushing back by a year the introduction of its first 10 nanometer processor. A year later, Intel said it would continue rolling out new microarchitectures for its Xeon server and Core PC chips, but on a cadence closer to every two-and-a-half years than every two.

In a recent study of Moore’s Law, two researchers from Columbia University and Stanford University noted its various limitations, ranging from the physics of the traditional CMOS transistor switching architecture, to fast-rising capital costs for chip manufacturing that are outpacing revenues, to the shrinking return on investment in building new generations of smaller, denser devices and components.

“For the three decades prior to 2005, clock frequency, integration density, and cost per device all improved exponentially with each technology generation, while active and passive power were contained within economically acceptable bounds,” Thomas Theis of Columbia and H.S. Philip Wong of Stanford wrote in their paper, titled The End of Moore’s Law: A New Beginning for Information Technology. “Since 2005, integration density and cost per device have continued to improve, and manufacturers have emphasized the increasing number of processors (cores) and the amount of memory they can place on a single die. However, with clock frequencies stagnant, the resulting performance gains have been muted.”

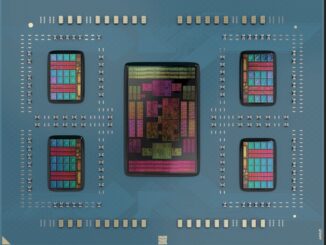

In their research, Theis and Wong outlined the myriad challenges to continued miniaturization of components and new ways of thinking in a broad range of areas, from logic to computing architecture. They also turned their attention to new technologies around memory, a part of the compute equation that is seeing significant changes not only due to miniaturization efforts but also because of changing workloads that come with increasingly loosely structured or unstructured data and that call for more access to memory across large address spaces. Traditional memory devices like DRAM, SRAM, and flash were created to address workloads that had a more established data locality, they said. However, “such data movement [across large address spaces] is expensive in latency as well as energy consumption, especially when data need to come from off-chip memory through a data bus with limited bandwidth,” they wrote. “Off-chip memory access can account for as much as 90 percent of energy and commensurate execution time in today’s computing systems running data-intensive algorithms.”
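The 90 percent figure implies a simple bound on what cheaper memory access could buy. The sketch below is an Amdahl-style estimate added here for illustration, not a calculation from the paper. It assumes that only the off-chip memory share of energy improves and that everything else stays constant, and the function name `system_energy_gain` is hypothetical.

```python
def system_energy_gain(memory_fraction: float, memory_gain: float) -> float:
    """Overall energy reduction factor when only the off-chip memory share
    of total energy is cut by `memory_gain`; the rest is left unchanged."""
    if not 0.0 <= memory_fraction <= 1.0:
        raise ValueError("memory_fraction must be between 0 and 1")
    if memory_gain <= 0:
        raise ValueError("memory_gain must be positive")
    # Energy left over, as a fraction of the original total.
    remaining = (1.0 - memory_fraction) + memory_fraction / memory_gain
    return 1.0 / remaining


if __name__ == "__main__":
    # Worst case quoted in the article: 90 percent of energy in memory access.
    for gain in (2, 10, 100):
        overall = system_energy_gain(0.9, gain)
        print(f"memory access {gain}x cheaper -> system {overall:.2f}x cheaper")
```

Under these assumptions, a 10x cut in memory access energy yields a bit more than a 5x system-level saving, and no improvement in memory alone can exceed 10x, because the remaining 10 percent is untouched.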

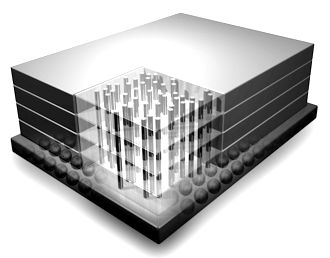

Emerging memory devices could prove easier to shrink and could enable the integration of large amounts of memory with logic, which could vastly improve the efficiency of compute systems. Currently, only SRAM can be easily integrated onto the same chip as the processor cores; doing so with DRAM or flash can be difficult and costly. Among the newer memory technologies being developed are spin transfer torque magnetic RAM (STT-MRAM), ferroelectric RAM (FeRAM), conductive bridge RAM (CBRAM), resistive RAM (ReRAM, also written RRAM), and phase change memory (PCM). All of these devices are nonvolatile, and the cells of the memory array can be read without destroying stored data and written without having to first erase the stored bit. They also offer read/write characteristics that are found throughout the memory hierarchy and can be fabricated at temperatures below those needed for manufacturing the interconnections, which can lead to improved integration of memory and logic.

At the same time, they also have different – and complementary – traits in such areas as read/write speed, power and energy consumption, retention and endurance, and device density.

“The tradeoffs vary greatly among the various types of memory,” the authors wrote. “The physics of magnetic switching makes STT-MRAM singularly fast and thus well suited to be placed close to processor cores. However, one of its present limitations is the high write energy and resulting energy consumption. The write energy for STT-MRAM (and also for PCM) is proportional to the memory cell area because a certain current density is required to switch cell resistance. In contrast, RRAM and CBRAM write energy shows no dependency on the memory cell area because of the filamentary nature and very small cross-sectional area of the conduction path. Continued miniaturization should reduce the energy consumption of STT-MRAM and PCM to acceptable levels. Continued advances in materials and device physics could further reduce the energy consumption of these memories.”
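The area dependence the authors describe can be turned into a rough scaling estimate. The sketch below takes their proportionality at face value and adds one assumption of its own: that cell area scales with the square of the feature size. The feature sizes, the function name `relative_write_energy`, and the two groupings are illustrative, not values or terms from the paper.

```python
# Groupings follow the quoted passage: write energy tracks cell area for
# STT-MRAM and PCM, and is independent of cell area for RRAM and CBRAM.
AREA_DEPENDENT = {"STT-MRAM", "PCM"}
FILAMENTARY = {"RRAM", "CBRAM"}


def relative_write_energy(memory_type: str,
                          old_feature_nm: float,
                          new_feature_nm: float) -> float:
    """Write energy after a shrink, relative to before the shrink.

    Assumes cell area is proportional to feature size squared (an
    illustrative assumption, not a figure from the paper)."""
    if old_feature_nm <= 0 or new_feature_nm <= 0:
        raise ValueError("feature sizes must be positive")
    if memory_type in FILAMENTARY:
        return 1.0  # conduction filament, not cell area, sets the energy
    if memory_type in AREA_DEPENDENT:
        return (new_feature_nm / old_feature_nm) ** 2
    raise ValueError(f"unknown memory type: {memory_type}")


if __name__ == "__main__":
    # Hypothetical shrink that halves the feature size.
    for kind in ("STT-MRAM", "PCM", "RRAM", "CBRAM"):
        print(kind, relative_write_energy(kind, 14, 7))
```

Under these assumptions, halving the feature size cuts STT-MRAM and PCM write energy to a quarter while leaving RRAM and CBRAM unchanged, which is the sense in which miniaturization helps the first pair more.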

A key for computing architectures going forward is the development of efficient ways of integrating memory and logic functions in a single device, and much of the research focus here is on magnetism. Magnetism is at the heart of such storage devices as hard-disk drives and STT-MRAM, and as magnets continue to shrink, they need less power to switch polarization. With researchers recently developing new energy-efficient switching mechanisms, scientists are now looking into how the new physics of nanomagnetism can be used in digital device logic.

Traditionally, the development of magnetic logic was hampered by the fact that there was no simple way to get the magnetic state of one device to switch that of another device. There has been some advancement – think All-Spin Logic, a device and circuitry that addresses the issue – as well as research into voltage-controlled magnetism, which would make new devices faster and more efficient than current devices like STT-MRAM.

“Such devices [with combined logic and memory functions] could eliminate the need to save the state of a computation to memory before the power is turned off,” Theis and Wong wrote. “This capability would be of immediate value in power-starved systems dependent on intermittent power sources, and in the longer term, it could profoundly change computer architecture.”
