For the first time, access to cutting-edge quantum computing is open to the public, free of charge, over the web. In May, Big Blue launched its IBM Quantum Experience website, where enthusiasts and professionals alike can program a prototype quantum processor chip and work within a simulation environment. Users, who are approved by IBM over email, are given a straightforward ‘composer’ interface, much like a musical note chart, to run a program and test the output. In just over a month, more than 25,000 users have signed up.

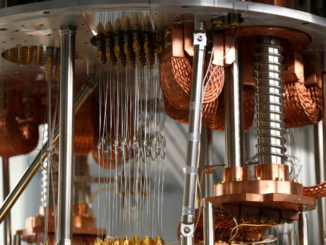

The quantum chip itself combines five superconducting quantum bits (qubits) operating at a cool minus 273.135531 degrees Celsius. “There are other refrigeration systems that can still get colder, but we don’t have a need for that right now,” said Jerry Chow, manager of IBM Research’s Experimental Quantum Computing Group.

“The number of users signing up is still going up each day. Interesting responses have been about the stability and usability of the site and academics have enjoyed the introductory tutorial material. There have now been four papers posted to the arXiv for preprint that have made use of the Quantum Experience.”

There is no research paper specifically about IBM’s publicly accessible qubit chip, but research on a similar experiment is available on the arXiv preprint server. On the IBM Quantum Experience website, users can follow the chip’s performance through a series of calibration values posted on the main composer page. The calibration is repeated twice daily to give a sense of the errors and noise within the physical system.

“The researchers at IBM are also respected scientists. I think that the IBM machine is a genuine quantum system and it has a few relatively well-working physical quantum bits that can be used controllably for running small-scale quantum algorithms,” said Mikko Möttönen, who was not involved in the work, and is leader of the Quantum Computing and Devices Labs at Aalto University, Finland.

The idea of putting qubits on the cloud had been floating around for a while among Jerry Chow, Jay Gambetta and Matthias Steffen at IBM.

“We built up to this by understanding the community’s perspective through our conference (ThinkQ 2015) back in the late fall where we invited academics, industry, VCs and government labs to join in on a discussion and symposium on what could we do with a small quantum computer of 100 qubits,” said Chow.

A future computer with the right algorithm that takes full advantage of quantum physics principles, with hundreds or thousands of logical qubits, could compute the energy transport in novel drug molecules in much less time than the billions of years it takes on today’s supercomputers.

Until that day IBM’s online qubit experience is primarily open to students, teachers, developers, quantum physicists and general science enthusiasts. The goal is to build a community platform to advance classroom teaching and research for a universal quantum computing model. Users gain units to queue up executions on the quantum processor and can access a quantum composer, quantum simulator, scores and results.

Today the only commercial quantum computer is the system built by Canada-based D-Wave. The latest version, the D-Wave 2X, has a processor with far more qubits than any research lab’s device: its 1,000 qubits use a technique called quantum annealing, which seeks the optimal, or a near-optimal, solution to a computational problem in as short a time as possible.

“You can imagine this as a landscape of mountains and valleys with the lowest points in the valleys representing optimal or near-optimal solutions to the problem. As the couplings take form the ‘wave function’ tunnels through this landscape exploiting superposition and entanglement to gain efficiency that is not feasible classically. The more complex the couplings, the more complex the mountain and valley landscape represented by the problem,” said Jeremy Hilton, D-Wave’s VP of Processor Development.
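The landscape picture Hilton describes can be sketched on an ordinary computer with classical simulated annealing, a thermal analogue that hops over hills rather than tunnelling through them. The snippet below is only an illustration under stated assumptions: the six-spin problem, the coupling and field values, the cooling schedule and every function name are invented for this example, and none of it represents D-Wave’s hardware or software.

```python
import itertools
import math
import random

# Hypothetical toy problem: six spins (+1 or -1) with pairwise couplings
# and per-spin fields. These numbers are made up for illustration.
COUPLINGS = {(0, 1): 1, (1, 2): -1, (2, 3): 1, (3, 4): -1,
             (4, 5): 1, (0, 5): 1, (1, 4): -1}
FIELDS = [0, 0, 1, 0, 0, -1]


def ising_energy(spins, couplings, fields):
    """Height of the landscape at one spin configuration (lower is better)."""
    pair_term = sum(j * spins[a] * spins[b] for (a, b), j in couplings.items())
    field_term = sum(h * s for h, s in zip(fields, spins))
    return pair_term + field_term


def simulated_annealing(couplings, fields, steps=5000,
                        t_start=3.0, t_end=0.05, seed=0):
    """Classical thermal annealing: flip one spin at a time while cooling."""
    rng = random.Random(seed)
    n = len(fields)
    spins = [rng.choice((-1, 1)) for _ in range(n)]
    energy = ising_energy(spins, couplings, fields)
    best_spins, best_energy = spins[:], energy
    for step in range(steps):
        # Geometric cooling from t_start down to t_end.
        temperature = t_start * (t_end / t_start) ** (step / (steps - 1))
        i = rng.randrange(n)
        spins[i] = -spins[i]  # propose a single spin flip
        new_energy = ising_energy(spins, couplings, fields)
        delta = new_energy - energy
        # Always accept downhill moves; accept uphill moves with a
        # probability that shrinks as the temperature falls.
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            energy = new_energy
            if energy < best_energy:
                best_spins, best_energy = spins[:], energy
        else:
            spins[i] = -spins[i]  # reject: undo the flip
    return best_spins, best_energy


def brute_force_minimum(couplings, fields):
    """Exhaustively check all 2**n configurations (only feasible for tiny n)."""
    n = len(fields)
    return min(ising_energy(c, couplings, fields)
               for c in itertools.product((-1, 1), repeat=n))


if __name__ == "__main__":
    spins, energy = simulated_annealing(COUPLINGS, FIELDS)
    print("annealed configuration:", spins, "energy:", energy)
    print("true minimum energy:", brute_force_minimum(COUPLINGS, FIELDS))
```

With six spins the exhaustive check is instant, but the number of configurations doubles with every added spin, which is why heuristic searches of the landscape, classical or quantum, become attractive for larger problems.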

Quantum annealing originated in the early 2000s with Hidetoshi Nishimori of the Tokyo Institute of Technology, Japan, and independently with Seth Lloyd, Eddie Farhi and others at MIT in the U.S.

D-Wave’s latest system works at a lower temperature than the previous generation, which improves the accuracy of solving problems.

“D-Wave technology is the only quantum computing platform that is built upon a scalable architecture to directly tackle problems which are shown to be exponentially hard for conventional computers to solve,” said Hilton.

Today D-Wave’s notable customers include Lockheed Martin, Google, NASA Ames and the Los Alamos National Laboratory. But the quality of an algorithm’s output is the measuring stick of how quantum systems will prove their mettle to the wider community.

In January 2016 Jean-Philippe Aumasson, a cryptography researcher at Kudelski Security, gave a talk arguing that D-Wave’s machines are faster only for one very specific algorithm. He added that they cannot simulate a universal quantum computer or execute Shor’s algorithm, which finds the prime factors of an integer, and that the design is not scalable, not fault-tolerant and not a universal quantum computer.
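For readers unfamiliar with Shor’s algorithm, its structure can be sketched classically: factoring an integer is reduced to finding the multiplicative order of a randomly chosen base, and only that order-finding step is handed to the quantum computer. In the illustrative snippet below the order is found by brute force, which is exactly the part that becomes infeasible for large numbers; the function names are hypothetical and nothing here is quantum code.

```python
from math import gcd


def multiplicative_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    In Shor's algorithm this is the step a quantum computer would perform.
    Assumes gcd(a, n) == 1, otherwise no such r exists.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_via_order(n, a):
    """Try to split n using base a (assumes 1 < a < n).

    Returns a pair of factors, or None if this base is unlucky
    and another one should be tried.
    """
    g = gcd(a, n)
    if g != 1:
        return g, n // g  # the base already shares a factor with n
    r = multiplicative_order(a, n)
    if r % 2 == 1:
        return None  # an odd order gives no square root to work with
    half = pow(a, r // 2, n)  # a square root of 1 modulo n
    if half == n - 1:
        return None  # trivial square root, no information gained
    p = gcd(half - 1, n)
    return p, n // p


if __name__ == "__main__":
    print(factor_via_order(15, 7))   # (3, 5)
    print(factor_via_order(15, 14))  # None: unlucky base
```

The classical bookkeeping around the order is cheap; the interest in universal quantum computers comes from their expected ability to find that order efficiently for numbers far too large for the loop above.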

Responding to that criticism, Hilton said the results Google made public last year actually emphasize the strong potential of D-Wave’s quantum annealing-based processors. In August 2015, D-Wave also published work indicating that the quantum processor returns good solutions to native problems up to 600 times faster than highly tuned and optimized classical algorithms.

“We have built to a problem-type that was designed to represent what hard real-world problems look like. This result can be extended to any type of hard problem with similar characteristics – so the work was quite important,” said Hilton.

IBM’s superconducting chip has a fifth (ancilla) qubit, whose error-checking function is to determine whether errors have occurred on the four surrounding (code) qubits. The D-Wave 2X’s qubits do not face the same rigorous error-correction requirements as IBM’s approach, known as ‘gate model’ quantum computing.
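The role of an ancilla qubit can be illustrated with a toy parity check. The sketch below assumes one simple scheme, in which a CNOT gate from each code qubit onto the ancilla leaves the ancilla holding the parity of the four code qubits; it is not a description of IBM’s actual circuit or calibration. The state representation (a dictionary of basis states and amplitudes), the example encoded state and all function names are invented for this illustration.

```python
from math import sqrt

ANCILLA = 4  # qubits 0-3 are the code qubits, qubit 4 is the ancilla


def apply_x(state, target):
    """Bit-flip (X) on one qubit: flips that bit in every basis state."""
    new_state = {}
    for bits, amplitude in state.items():
        flipped = list(bits)
        flipped[target] ^= 1
        new_state[tuple(flipped)] = amplitude
    return new_state


def apply_cnot(state, control, target):
    """CNOT: flips the target bit wherever the control bit is 1."""
    new_state = {}
    for bits, amplitude in state.items():
        out = list(bits)
        if out[control] == 1:
            out[target] ^= 1
        new_state[tuple(out)] = amplitude
    return new_state


def parity_check(state):
    """Copy the parity of the four code qubits onto the ancilla."""
    for code_qubit in range(4):
        state = apply_cnot(state, code_qubit, ANCILLA)
    return state


def ancilla_one_probability(state):
    """Probability that measuring the ancilla gives 1 (an error flag)."""
    return sum(abs(amplitude) ** 2
               for bits, amplitude in state.items() if bits[ANCILLA] == 1)


def encoded_state():
    """Hypothetical even-parity code state (|0000> + |1111>)/sqrt(2), ancilla 0."""
    a = 1 / sqrt(2)
    return {(0, 0, 0, 0, 0): a, (1, 1, 1, 1, 0): a}


if __name__ == "__main__":
    clean = parity_check(encoded_state())
    faulty = parity_check(apply_x(encoded_state(), 2))  # flip code qubit 2
    print("error flag without fault:", ancilla_one_probability(clean))
    print("error flag with one bit flip:", ancilla_one_probability(faulty))
```

In this toy model the ancilla reads 0 for the undisturbed state and 1 after a single bit flip, while the superposition on the code qubits is left intact because only the ancilla would be measured.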

On the D-Wave 2X, problems can be encoded and solved directly on the quantum annealing processor without any quantum error correction, thanks to a ‘natural immunity’ to noise. According to Hilton, its performance matches or even beats classical computers for certain algorithms. But he admits quantum error correction will most likely benefit quantum annealing at larger scales in the future.

Möttönen is healthily skeptical about whether quantum annealing can be shown to be the most efficient way, at present, to solve commercially relevant problems.

“What is clear is that D-Wave’s services are already useful for some companies,” said Möttönen. “However, it is still not clear to me that the D-Wave annealer can beat the best possible algorithm in these cases. It seems very likely that with the increasing memory and precision, the D-Wave annealer will do this in the future. Thus I really support what they are doing and it would be great to also be part of this effort.”

Various hardware designs are being developed: research groups at Google, IBM and Microsoft, as well as many scientific labs around the world, are designing, optimizing, patenting and testing the next big architectures for a scalable quantum supercomputer. It is not clear which design, or designs, will win out. The universal quantum computer is currently the hardest architecture to build.

Many of these quantum systems are closely guarded commercial secrets, known only to the few users who work with them. But no single company or research lab can solve these quantum architecture problems alone.

In Finland, the government is making large cuts to research. However, Möttönen’s research efforts, supported by a European Research Council Starting Grant, have resulted in the design, production and patenting of a new microwave detector with the potential to be integrated into a qubit chip or other quantum-optic photonic technologies. The detector is fabricated using techniques similar to those used for current chips, so it is scalable.

In a superconducting quantum computer (currently seen in academia as the most promising path for a universally scalable architecture), this detector could be used as an on-chip component. Because the design is scalable, a single detector chip would be very cheap to make, although the fabrication process itself is rather expensive right now. The microwave detector can currently detect packets of around 200 photons at a typical qubit frequency, a 14-fold improvement over previous thermal detectors.

In the future, this detector could be used to measure single quantum states of flying photonic qubits in a superconducting quantum computer. For qubit error correction, the microwave detector could be used in conjunction with a fifth qubit to read its state to know whether errors occur or not.

“Ultimately we want to have a single-photon resolution at high fidelity,” said Möttönen. “Scaling up the detector is very simple. Our samples are very reproducible and robust. Thus if we can make one, we can make a million almost with the same effort.”

In a year or two the fabrication process should also be improved, and within five years the plan is to reach single-photon resolution. “I am actually looking now for funding to make this detector a commercial product. So if there are any investors or supporters of quantum technology out there, I am open for discussions,” Möttönen added.

D-Wave is watching these academic developments closely.

“Efficient high-frequency (above 5 GHz) signal-detection is a critical part of many quantum computing hardware candidates as well as for D-Wave. Research like Möttönen’s work will no doubt bring about key enabling elements for quantum computing systems, and D-Wave incorporates developments like this wherever available and needed,” said Hilton. Chow also agrees that the qubit state-detection field is ripe, yet it’s still uncertain what is the best scalable option moving forward.

Research labs that do not end up partnering with commercial providers could turn to other funders. For those outside the U.S., a recent announcement by the European Commission marked the start of a new $1.11 billion (€1 billion) commitment to boost quantum computing technologies, which could speed up advancements.

In order to lure the best and brightest, funding could be used to create a new international and independent center. Its focus would be to develop the research abilities of all the partners involved towards a common goal. This hub could attract more technology companies, enabling a faster route from basic research to commercial products and practical applications within our lifetimes.

“The head of the board, who will most strongly decide how the money should be spent, should have strong academic merits, but also a strong drive to industrial applications. This person should not have too much at stake for his or her own research and should really think about the big picture objectively,” said Möttönen.

“The big center should also give out scholarships to researchers all over Europe to participate in the research if they wish. Thus there would be very little permanent staff in the center but it would be a hub for researchers in the field. I think that the center’s board should first pinpoint the most revolutionary goal and reserve major funds for it to take place. In addition, a handful of smaller scale goals should be set. All this should be tied together with a support and education network.”

But in order for this to be the engine for faster progress, finding the right balance in dividing funds fairly between projects with strong goals is essential. Also the countries with the best quantum technologies should collaborate regularly for the best chances of success.
