If money were no object, then arguably the major nations of the world that always invest heavily in supercomputing would have already put an exascale-class system into the field. But money always matters, and ultimately supercomputers have to justify their very existence by enabling scientific breakthroughs and enhancing national security.

This, perhaps, is why the Exascale Computing Project established by the US government last summer is taking such a measured pace in fostering the technologies that will ultimately result in bringing three exascale-class systems with two different architectures into the field after the turn of the next decade. The hardware, as always, is the easy part. Getting software to scale across these enormous machines is another matter, and one that will take considerable time even though the exascale software stack will be an evolutionary one based on the systems and applications software running on petascale systems today.

The US government labs that do the bulk of the capability-class supercomputing in the country have always wanted to have options, and that is why in years gone by the Department of Energy and the Defense Advanced Research Projects Agency collaborated to have at least two and sometimes three or four different kinds of capability-class supercomputers available from system makers. But DARPA curtailed its investments in massively scaled supercomputers several years ago, effectively removing about half of the funding that was used to create these machines. There is simply not enough money left to fund science-project approaches that may or may not pan out as practical systems for running real-world applications, as opposed to stunt machines in a race to show that the US can compete with Europe, China, and Japan in fielding an exascale-class system in the 2020 timeframe.

In fact, the US is taking a very measured and calculated approach, and as we discussed earlier this year, has pushed out the delivery of a working exascale system to early 2023. That is three years later than many had expected the US to deliver its first exascale iron (or hoped it might), and the systems being developed under the auspices of the Exascale Computing Project will be behind other national efforts to approach exascale performance – if China and Japan can stick to their current timelines, that is. During the recent ISC16 supercomputing conference in Frankfurt, China committed to delivering the Tianhe-3 supercomputer, possibly based on its homegrown Matrix2000 DSP accelerators or a variant of the massively multicore Shenwei processor used in the recently announced Sunway TaihuLight system that is at the top of the Top 500 charts. (Like the US, China will probably have two different architectures for its exascale systems.) The Japanese government is funding the Post-K supercomputer, which will be based on an HPC-centric ARM chip developed by Fujitsu and which is expected to be installed in 2019 if all goes well.

With such competition, it is reasonable to wonder if the US government will want to accelerate the schedule of the Exascale Computing Project and the consequent awards for the construction of the three exascale machines in 2023. Paul Messina, director of the Exascale Computing Project and a distinguished fellow at Argonne National Laboratory, gave an update on the effort at the ISC16 conference, and at least for now, the project is staying on its current schedule.

“I honestly don’t have an answer to that question and at this point I have no new information,” Messina said when asked if the delivery dates for the exascale systems in China and Japan had pulled the ECP plan forward. “It could, perhaps, but at this point we have our plan and our timeline and I am not aware of any changes at this time.”

A lot, we suspect, will depend on how the next US president and Congress, which will be elected in the fall, feel about supercomputing in general and staying competitive with China, Japan, and Europe.

Software Is Always The Stickler

There is little question that there is demand for exascale-class machines, or that they hold the potential for scientific breakthroughs. That work will be funded through the ECP in collaboration with the part of the IT industry that participates in the supercomputing sector, as well as the government labs and universities that run their applications on the largest machines in the field at any given time.

“As you might expect, scientists can always identify advances that they could make if they just had more computing, more I/O, more data, or more power to the arithmetic,” Messina said. “So the demand is there – no big surprise – and the demand is very broad. On the other hand, as has been remarked many times, it isn’t business as usual any more in terms of getting the extra power from the computers that we use because of technology issues – physics, basically. We can no longer count on the transistors being faster based on the technologies that are being used now.”

What we are all counting on, as has been made abundantly clear in exascale coverage here at The Next Platform, is a huge increase in the parallelism in systems. We are talking about billions of threads that software will have to learn to span, which is no mean feat and, we think, one of the reasons why the ECP has a much longer development window than these other exascale projects. It seems like the US wants to get its software house in order through a co-design effort that will bring together the hardware architects, the systems software designers, and the application developers in such a way that code will be as ready as possible for the exascale machines as they are turned on.

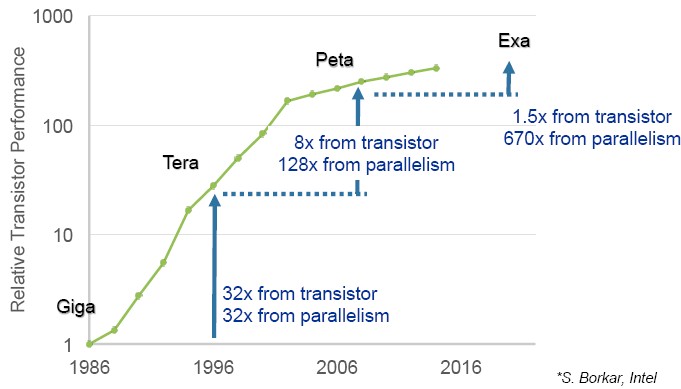

To make his point, Messina pulled out a chart that Shekhar Borkar, a now-retired Intel fellow, presented at ISC15 last summer when discussing his ideas about exascale systems. That chart not only shows how transistor speed and instruction per clock increases have petered out, but just what a mighty leap in parallelism we face moving from petascale to exascale machines:

“The estimation is that for exascale, it is all going to come from parallelism, and what that means is for applications, we have quite a challenge,” said Messina. Addressing that challenge – and not just slapping together some hardware that can run Linpack at exascale – is the point of the ECP effort.

“We don’t want applications going off on their own without paying attention to what the hardware will be like. And the same thing for the software technology, and the hardware can’t just be pursuing some interesting ideas, [but] at least to the extent possible must include features that the applications need to be more effective.”

This being a formal project also means the ECP effort has phases of development and milestones that have to be met, with hardware development, systems software, and application software all being co-developed (or modified from existing petascale systems) at the same time so the rubber will hit the road when the exascale machines are fired up in 2023 at the three national labs that will get them: Argonne National Laboratory, Oak Ridge National Laboratory, and Lawrence Livermore National Laboratory.

“This is not a case of let’s see what we can think up that is interesting over the next few years, write a few papers, and decide what to do,” Messina explained. “It is very much focused research and development with milestones and time-critical activities.”

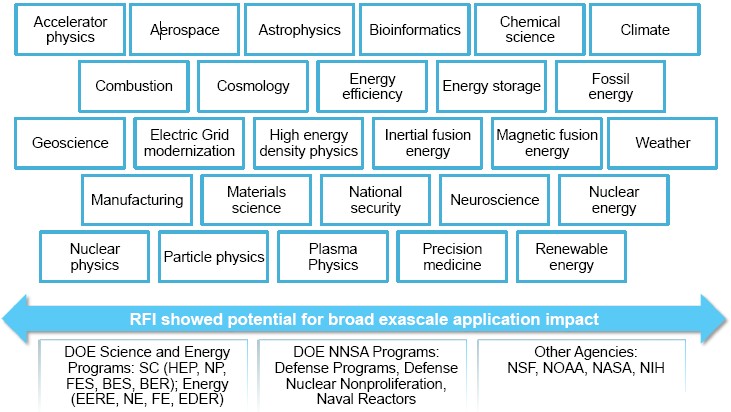

To make sure everyone is on the same page with the ECP effort, last summer researchers at all 17 of the DOE labs plus researchers at the National Science Foundation and the National Institutes of Health were asked how they would make use of an exascale-class machine. Over 200 papers were submitted, and they show a wide range of potential uses – pretty much what you would expect.

“We can’t fund all of them – that would be nice – but we do intend to fund a reasonable fraction of the domains that are represented,” Messina said. “The funding will come with strings, and we will fund application development teams and they will be required to identify the one or two problems they are aiming to solve as soon as there is hardware in place at the exascale. It can’t just be they want to do better fluid dynamics, but it has to be some kind of quantifiable problem that someone cares about. And we want them to follow modern software development practices so the software can be maintained, and typically it will be open sourced so others can take advantage of it. And they will have to execute milestones, often jointly with the software activities, and the way to ensure co-design is to have people from the software development team spend time with the application team and vice versa.”

No one is being terribly specific about the total funding level for the three exascale systems that the US will build, and no one is talking about how much of that bill will be dedicated to software, but the experts that we talked to at ISC16 said that of the total system costs, a very big portion, perhaps as much as 50 percent, should be dedicated to software. (With power consumption possibly representing 50 percent of the cost of the machine over its usable lifetime, it looks like the hardware will have to be free. . . . Yes, that was a joke. It is probably more likely that software will represent a quarter, hardware a quarter, and power and cooling a half.)

To help get the software ball rolling, ECP will set up co-design centers that focus on underlying programming techniques, not on specific industry or research domains as DOE and NSF have done in the past.

The thing that Messina stressed again and again is that software plays the central role in these future exascale systems.

“Everything in this project will be challenging and essential and has to be integrated, but software has a particularly difficult task in meeting those needs,” he said. “And it has to be ready at the right time. These high-end systems only have a lifetime of four to five years, and if you take the first year or year and a half before the entire software stack is ready, there will be inefficiencies and a waste of time for a large fraction of the usable life of the system.”
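To put rough numbers on that point, here is a minimal back-of-the-envelope sketch in Python. It uses only the figures in Messina's remark (a lifetime of four to five years and a year to a year and a half of waiting on software). The function name `fraction_lost` is purely illustrative, and the calculation assumes the machine does no useful work at all until the stack is ready, which is a simplification.

```python
def fraction_lost(delay_years: float, lifetime_years: float) -> float:
    """Share of a system's usable life spent waiting on the software stack.

    Simplifying assumption: no useful science gets done during the delay.
    """
    if lifetime_years <= 0:
        raise ValueError("lifetime_years must be positive")
    return delay_years / lifetime_years


# Shortest delay on the longest-lived machine, and the reverse.
best_case = fraction_lost(1.0, 5.0)
worst_case = fraction_lost(1.5, 4.0)

print(f"best case:  {best_case:.1%}")   # 20.0%
print(f"worst case: {worst_case:.1%}")  # 37.5%
```

Under those assumptions, somewhere between a fifth and more than a third of the machine's working life would be spent waiting, which is why the timing of the software matters as much as its quality.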

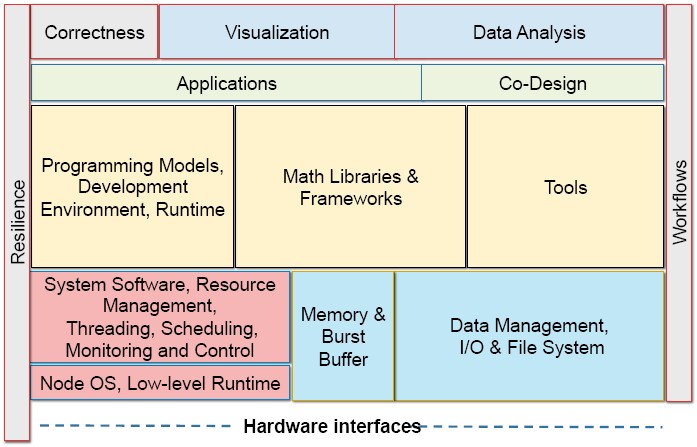

The stack will be complex and rich, as it needs to be to support the diverse set of workloads that are expected to run on these systems. Further complicating things, this software will have to span two completely different architectures, and it is reasonable to assume that they will be follow-ons to the Power/Tesla/InfiniBand combination used in the “Summit” system being built by IBM, Nvidia, and Mellanox Technologies for Oak Ridge in 2017 and the Knights/Omni-Path combination used in the “Aurora” system being built by Intel and Cray for Argonne in 2018.

Resilience applies to all aspects of the software stack, including the applications, the runtimes, and the operating systems, because there will be errors and the software has to be able to recover from them without restarting a simulation from scratch. Workflows are also important. With experiments streaming inbound data to the exascale system in real time, something has to partition the machine and manage that data. Correctness and uncertainty quantification are another key concern of the ECP effort, so that researchers know they can trust the results of their simulations, and they are as important as the visualization and data analytics that will help people understand the output from the applications. Memories in these future exascale systems will be more complicated, so the software stack will have to help manage them and decide which memory is used when.
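The resilience requirement, recovering from an error without starting a simulation over, is commonly met today with checkpoint/restart. The toy sketch below illustrates that general idea in Python and is not a description of anything the ECP has specified. The function `run_simulation`, its `fail_at` parameter (which fakes a node failure), and the trivial "physics" update are all hypothetical stand-ins.

```python
import json
import os
import tempfile


def run_simulation(total_steps, checkpoint_path, checkpoint_every=10, fail_at=None):
    """Advance a toy simulation, resuming from a checkpoint if one exists."""
    # Resume from the last saved state instead of starting from scratch.
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            state = json.load(f)
    else:
        state = {"step": 0, "value": 0.0}

    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError(f"simulated node failure at step {fail_at}")

        # Hypothetical stand-in for the real computation of one timestep.
        state["value"] += 0.5 * state["step"]
        state["step"] += 1

        if state["step"] % checkpoint_every == 0:
            # Write to a temporary file, then rename it over the old
            # checkpoint, so a crash mid-write cannot corrupt the saved state.
            tmp_path = checkpoint_path + ".tmp"
            with open(tmp_path, "w") as f:
                json.dump(state, f)
            os.replace(tmp_path, checkpoint_path)

    return state


with tempfile.TemporaryDirectory() as workdir:
    path = os.path.join(workdir, "checkpoint.json")
    try:
        run_simulation(100, path, fail_at=57)  # dies partway through
    except RuntimeError as err:
        print(err)
    final = run_simulation(100, path)  # picks up from step 50, not step 0
    print(final["step"], final["value"])  # 100 2475.0
```

The second call repeats only the seven steps lost since the last checkpoint rather than all 57. On a real machine the trade-off is between how often state is saved, which costs I/O time, and how much work is redone after a failure.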

As for who is creating this software stack, Messina says that some of it will be provided by the vendors of the systems that will be purchased, but following history, some of it will be developed by the labs and universities, as has been the practice for decades.

“Much of the investment will be on things that already work now and we expect to work in the future, but in every case they will need a lot of evolution because of the changing hardware and the applications that are changing their requirements as well,” Messina said. “I hope there will be inventions of new programming models that will make programming easier and more adapted to the new architectures that are coming, but I am convinced that the current programming models, by and large, will continue to be used. They need to be improved, and it really requires a substantial investment in MPI, OpenMP, and related things. The applications may more and more not need to be aware of them, but these will be lurking under there as support for some of the higher level abstractions in my opinion.”

In terms of the phases, between 2016 and 2019, IT vendors are doing research and development on future exascale node designs, and software developers will be using pre-exascale systems to do their own experiments to test out ideas of how to scale further. In 2019, insights gained from the ECP will be used to put together RFPs by the DOE and the National Nuclear Security Administration (NNSA) for those three exascale systems and two architectures, and between 2019, when the RFPs are put out, and 2023, when the systems are installed, system and application software will be tweaked for the specific architectures chosen. Eventually, the technologies developed will be productized and trickle down to smaller HPC centers and enterprises.

“The goal is to have two different architectures, and we feel that this is important for a number of reasons,” Messina said, not the least of which being that one hardware architecture does not fit all applications and the US government does not want to put all of its HPC eggs in one basket regardless. “And to the extent that we can, we also hope to help HPC adoption in industry by trying to make sure that the architectures and the software that goes into our exascale systems can be very useful to industry, typically in much smaller configurations but without having to dramatically change the software environment or the underlying hardware.”

The question now is whether or not Intel, with its Knights/Omni-Path combo, or IBM with its Power chips and Nvidia and Mellanox partners, can accelerate their hardware roadmaps to try to get the hardware defined and into the field earlier. A three or four year gap between when the US has exascale machines in the field and when China, Japan, and possibly Europe can make theirs and fire them up may be too much for scientists and politicians alike to let slide. We will know more after the November elections in the United States.
