We don’t have a Moore’s Law problem so much as we have a materials science or alchemy problem. If you believe in materials science, what seems abundantly clear in listening to so many discussions about the end of scale for chip manufacturing processes is that, for whatever reason, the industry as a whole has not done enough investing to discover the new materials that will allow us to enhance or move beyond CMOS chip technology.

The only logical conclusion is that people must actually believe in alchemy, that some kind of magic is going to save the day and allow transistors to scale down below – pick a number – 7 nanometers or 5 nanometers or 4 nanometers, therefore allowing us to make ever more complex chips at the same cost and within the same thermal envelope, or the same circuits for proportionately less power and less money.

Joshua Fryman, system architect for extreme scale research and development at Intel, does not appear to believe in alchemy, but in a panel session concerning the end of Moore’s Law scaling – shorthand for a number of approaches to making computers more powerful with successive generations – he lamented the difficult position that the industry is in but also offered some hope for how current CMOS technologies will have to be extended until we create follow-ons.

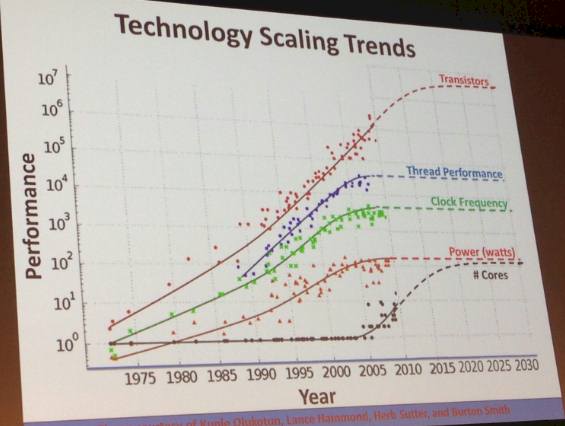

To review, Moore’s Law, an observation made by Intel co-founder Gordon Moore back in 1965, says that every two years or so we double the number of devices, whether they are electronic relays or vacuum tubes or transistors, and generally that allows devices to scale up performance by 40 percent and reduce energy by 65 percent at constant system power for the same cost. This is accompanied by Dennard scaling, which means shrinking the dimensions of the device by 30 percent and lowering its supply voltage by 30 percent with each generation.
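The 40 percent and 65 percent figures fall out of the 30 percent shrink with some simple arithmetic. The sketch below is a minimal illustration in Python, assuming the textbook idealization of Dennard scaling: capacitance scales with the linear dimension, switching energy is proportional to capacitance times voltage squared, and frequency is inversely proportional to the linear dimension. The function name `dennard_step` and its dictionary keys are made up for this example and do not come from Fryman’s presentation.

```python
def dennard_step(k=0.7):
    """Relative change after one idealized Dennard scaling step.

    k is the scaling factor applied to both the linear dimensions and the
    supply voltage (0.7 means a 30 percent reduction). All returned values
    are ratios against the previous generation. This is an idealized
    model, not measured data.
    """
    area = k * k                        # device area shrinks with k squared
    density = 1.0 / area                # devices per unit area
    frequency = 1.0 / k                 # shorter gates switch faster
    capacitance = k                     # capacitance tracks linear dimension
    voltage = k                         # supply voltage scaled by the same k
    energy_per_switch = capacitance * voltage ** 2   # E ~ C * V^2
    power_per_device = energy_per_switch * frequency
    power_density = power_per_device * density       # power per unit area
    return {
        "density": density,
        "frequency": frequency,
        "energy_per_switch": energy_per_switch,
        "power_density": power_density,
    }


if __name__ == "__main__":
    for name, ratio in dennard_step(0.7).items():
        print(f"{name}: {ratio:.3f}x")
```

Under these assumptions, a factor of 0.7 roughly doubles the device count in the same area, raises frequency by a bit over 40 percent, cuts switching energy by about 65 percent, and leaves power per unit area unchanged, which is the “constant system power” part of the recipe.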

“Classically, the recipe for engineers to achieve Moore’s Law scaling with transistors has been scale your dimensions, scale your supply, and you are done,” Fryman said during his presentation at the International Supercomputing Conference in Frankfurt, Germany this week, part of a panel that looked at quantum computing and neuromorphic computing as follow-ons to traditional Von Neumann computers as well as at the end of Moore’s Law scaling for chips. “You just keep turning the crank on this over and over again. The running joke is that many years ago in the fabs, we used a handful of elements in the periodic table, and today we use almost all of the elements in the periodic table except for a handful of them to get the same job done. It is still just a recipe. But what happens when the recipe fails – that is, the sky is falling, there is no more Moore’s Law.”

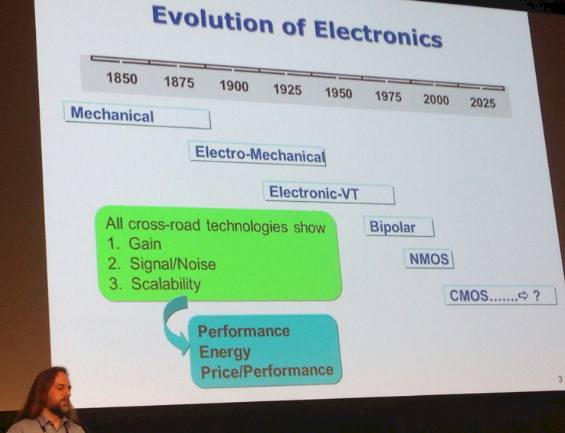

The answer, says Fryman, is to stop and think. But he wants everyone to understand that Moore’s Law is a business statement, not an engineering statement. We have had many different types of technologies over the past two centuries of computing in its many forms, as outlined below:

For each of these technologies, the first requirement for doing computing was gain, or the ability to take an input signal, apply energy to it, and amplify it as an output. The second is being able to keep noise from drowning out that amplified signal. The third is some sort of scale, which can mean shrinking devices or making the devices more complex over time to do more functions. The fourth tenet is that whatever technology you choose, you need to be able to manufacture it in high volume in a cost-effective manner so it can actually be employed.

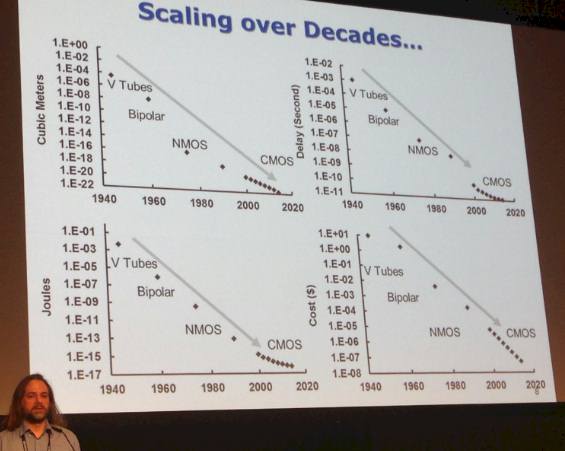

There have been many different technologies deployed over the years in electronics, and the scaling of those technologies has been fairly linear. Here are the most recent ones during the age of digital computing:

We would stress the “fairly” in fairly linear, having come into the IT market just as bipolar technology hit its scaling wall, where getting chips to run faster made them exponentially hotter and linearly more expensive to bring to market. We would also point out that there was, for high-end systems at least, a considerable gap between when bipolar chips ran out of scaling and when CMOS chips could scale their performance high enough to meet and then exceed that of bipolar chips.

It was energy consumption that derailed bipolar chips, and we can see a similar issue for CMOS in recent years in the chart above as it is starting to flatten out, which Fryman says is feeding the hysteria a little. But costs, thanks to the prowess of the major fabs, have continued to scale.

IBM’s mainframe chips, the last of the bipolar holdouts, absolutely ran out of gas in 1990 and the cost per unit of performance went up at a staggering rate because of manufacturing costs, absolutely violating the tenets of Moore’s Law. And it was no coincidence that the rise of RISC processors and CMOS technology coincided with this. But IBM mainframes took another five to six years before they had adapted fully to CMOS. We think that general purpose processors will see a similar gap in the coming years, and unavoidably so as chip manufacturing processes for CMOS hit their limits when we try to press beyond 7 nanometers or so. We shall see.

So what is on tap after CMOS? Fryman does not know, actually.

“Research needs to continue because we need to find The One, the Neo, of the next generation,” he explained. “But once you find it, and once you find the techniques, you still have a long haul to make it something we can use, something that is viable for mass production. Until then, what are we going to do? The short answer is that CMOS is going to continue. It is not because it is the best technology or because we like it, it is because we have no choice and we have to do it to keep everything moving forward.”

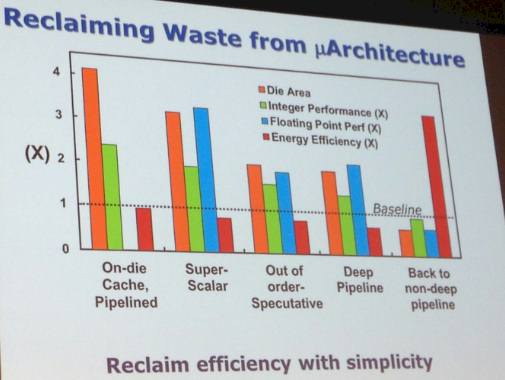

The trick to keeping Moore’s Law trending, and to bending up at least some of the curves in that first chart at the top of this story, is to remove the waste from processors, says Fryman. The other trick is to embrace known techniques that have been avoided for one reason or another – they were too hard to program, too hard to design, too hard in any kind of metric.

As an example of reclaiming waste, Fryman pulled out a graph made by his predecessor, retired Intel Fellow Shekhar Borkar, that showed how Intel has wrestled with the issues of microarchitecture waste over the years:

In the first set of columns, the characteristics of the 80386 processor are compared to those of the 80486 processor. The second set of columns shows the change in the move from the 80486 to the Pentium. Out of order speculative execution comes with the transition to the Pentium Pro architecture, and the deep pipeline comes with the P4 family of cores, which you will remember were supposed to scale up to 10 GHz. (We can hardly get above 3 GHz in a decent power envelope.) The transition to shallower pipelines with the Core architecture has dominated more recent Intel chips. “The big win here is energy efficiency,” says Fryman. “By choosing to simplify the design, to roll back the clock on the Pentium 4, if you pardon the pun, and not target 10 GHz but 2 GHz to 3 GHz, the energy efficiency shot back up and it brought some other factors back under control.”

As for known techniques, Fryman trotted out near threshold voltage operation and fine grain power management as two that Intel has experimented with but which it has not commercialized. Silicon will get a little more unreliable as transistors get smaller, but if you create a system that assumes a certain amount of failure or error inside of a device and deals with it proactively rather than reactively – as we currently do with flash memory, for instance – then you can make CMOS work a little longer.
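One familiar way to deal with errors proactively is to store redundant bits alongside the data so that a fault can be corrected when the data is read back. The sketch below uses a Hamming(7,4) code as a simple stand-in for that idea. It is an assumption chosen for brevity, not something Fryman described, and production flash controllers use considerably stronger codes. The function names `hamming74_encode` and `hamming74_decode` are hypothetical.

```python
def hamming74_encode(data_bits):
    """Encode 4 data bits into a 7-bit codeword.

    Codeword positions 1..7 hold: p1, p2, d1, p4, d2, d3, d4.
    Each parity bit covers the positions whose index has that bit set.
    """
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4   # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4   # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct up to one flipped bit and return (data_bits, error_position).

    error_position is 0 when no error was detected, otherwise the 1-based
    position of the bit that was flipped back.
    """
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s4   # binary index of the faulty position
    if syndrome:
        c[syndrome - 1] ^= 1          # repair the single-bit fault
    return [c[2], c[4], c[5], c[6]], syndrome
```

Encoding four bits, flipping any one of the seven stored bits, and decoding returns the original four bits along with the position that was repaired. The cost is three extra bits for every four, which is the kind of trade a designer makes when the underlying device is assumed to be slightly unreliable.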

Fryman makes Intel’s position clear, and also makes clear that this affects all chip designers and manufacturers – we have lots of the former and fewer and fewer of the latter.

“There is nothing on the horizon today to replace CMOS,” he says. “Nothing is maturing, nothing is ready today. We will continue with CMOS. We have no choice. Not just Intel, but everybody. If you want this industry to grow, then we need to be doing things with CMOS and we need to reclaim efficiencies. And to do that we are going to have to start thinking outside of the box and go back to those techniques and ask ourselves do we really need cache coherency across an exascale machine? No, you don’t. Do we really need cache coherency across a hundred cores or a thousand cores on a die? Probably not. Are you going to take the complexity in software for a more scalable, simpler hardware? Going forward, we really need to be taking our heads out of the sand, pardon the pun, and rethink what we are doing.”
