Gannett, the $3.2 billion media giant that owns USA Today and 92 other newspapers in the United States, is undergoing massive changes in its business, and over the past few years it has been completely overhauling its technology platform to make it more nimble.

Like other news organizations trying to make a living in print and digital media, Gannett has a number of challenges. In a sense, Gannett’s systems are its business, and the backend publishing, advertising, and reader monitoring systems that it is evolving are as important as the content that its team of over 2,700 journalists – the largest number employed by any news organization in the world right now – are churning out for local newspapers like the Detroit Free Press or The Des Moines Register as well as for the flagship USA Today national paper.

Another interesting twist is that the Gannett newspaper operations have just been spun off from its television and other digital media operations, which include the CareerBuilder.com job search and Cars.com auto selling sites. The television and digital media company is now called TEGNA, and it shares the Tysons Corner, Virginia headquarters outside of Washington DC with the new Gannett. Like other media conglomerates, Gannett wanted to separate its newspapers from its other assets to “unlock shareholder value,” as the saying goes. The spinoff was completed at the end of June, and the resulting Gannett publishing company has 19,600 employees in the United States and the United Kingdom – it owns more than 200 newspapers, magazines, and trade publications through the Newsquest group – is virtually debt free, has access to $500 million in capital, and is looking to cut costs and improve operations on every front to drive revenues and profits.

This includes the IT department, as you might expect, given how central systems are to any media company these days. Alon Motro, manager of content and platform services at Gannett, and Kristopher Alexander, platform architecture lead at the company, spoke to The Next Platform about one interesting set of changes that Gannett is making over the next year and a half to improve its publishing operations and to cut costs. In a nutshell, Gannett is shifting away from Microsoft’s .NET programming languages and SQL Server databases as the back-end of its Presto content management system and media web sites and towards Python and Node.js and the Couchbase NoSQL data store.

At the same time, the company is also moving its systems out into the cloud and is putting together a plan to create a cross-cloud hybrid model for its compute and storage that will also include a touch of on-premises cloud.

Behind Many Papers, One Presto

The big news at Gannett for the past several years, predating the media company spinoff, has been the new Presto content management system, which was designed to bring all of those 93 newspapers (and the web sites for the several dozen now spun-out television stations that Gannett controlled) under the same publishing tools. This was a herculean effort, and one that was talked about in great detail in this case study. Before Presto, the fleet of newspapers and web sites affiliated with them had a collection of content management systems of various vintages and capabilities and often with hooks into mobile and broadcast TV systems. Now, all of the Gannett properties have Presto on the back end, with Adobe AudienceManager monitoring readers, Google DoubleClick serving up advertisements, and comScore and Moat doing analytics on the whole shebang.

Now, the back-end for Presto and the web sites as well as the front end for Gannett’s online newspaper sites are getting tweaked not only to make them run faster and scale better, but to make the resulting system portable across public clouds and a private cloud that Gannett will be building in its own datacenter in the future.

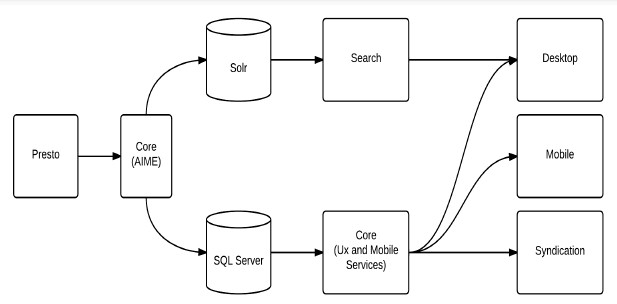

At the moment, the Presto CMS is backed by a set of 24 four-socket Xeon E7 servers running Microsoft Windows Server and its SQL Server relational database, explains Alexander. Here is what the architecture of the system looks like:

Like most software engineers, neither Motro nor Alexander knew the precise configurations of the Xeon E7 servers underpinning the SQL Server instances, but they did say the machines were “fairly hefty,” which was a requirement to store the 70 million documents that make up the archive for the Gannett online properties and print publications. The servers are also backed by Violin Memory’s all-flash arrays to give them a boost. The databases handle the hundreds of thousands of concurrent users that hit the Gannett sites as well as the several hundred concurrent users on the editorial staff who are adding content to those sites.

“The replacement of SQL Server with Couchbase is really just a part of an overhaul of our complete architecture,” Motro tells The Next Platform. “We had replication issues with SQL Server and outages because of network issues, and our architectural change is meant to solve many problems.”

Gannett started looking for alternatives to its SQL Server databases for a number of reasons. First, a lot of the business logic linking its CMS to its sites was coded in stored procedures, which caused a significant delay between the time something was published in the system and was presented out on the web site. Moreover, the company wanted to move to the cloud and at the time, when this plan was first put together, Microsoft’s Azure compute cloud and online SQL Server variant had not yet matured. But more importantly, Gannett wants its infrastructure to be portable across public clouds, so it does not want to be tied to any particular implementation of a database as a service.

“The big thing is that we need to be able to support higher read and write speeds,” explains Alexander. “Some of the SQL Server machines that we had were effectively read-only instances, driving the desktop and mobile user interfaces. We have read/write servers that replicate into those servers and spread the load across those nodes. We have replaced the read-only traffic with Couchbase so we can handle the scale of reads we need. Our original architecture was a replication-centric one, where everything got dumped into one database and then replicated out to the other ones. With Couchbase, we are using its push publishing feature to push data out to all of the Couchbase nodes at the same time and in parallel.”
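As a rough illustration of the difference Alexander describes, here is a minimal Python sketch that contrasts copying a document outward from one primary with pushing it to every node at once. It does not use the Couchbase SDK and says nothing about how Couchbase or Gannett's publishing pipeline is actually implemented; `Node`, `replicate_from_primary`, and `push_in_parallel` are hypothetical names, and each node is just an in-memory dictionary.

```python
from concurrent.futures import ThreadPoolExecutor


class Node:
    """Hypothetical stand-in for one data store node (a plain in-memory dict)."""

    def __init__(self, name):
        self.name = name
        self.docs = {}

    def put(self, key, doc):
        self.docs[key] = doc
        return self.name  # acknowledge the write with the node's name


def replicate_from_primary(primary, replicas, key, doc):
    """Replication-centric model: write once, then copy out one replica at a time."""
    primary.put(key, doc)
    return [replica.put(key, primary.docs[key]) for replica in replicas]


def push_in_parallel(nodes, key, doc):
    """Push model: send the same document to every node concurrently."""
    with ThreadPoolExecutor(max_workers=len(nodes)) as pool:
        # pool.map returns acknowledgements in the same order as `nodes`.
        return list(pool.map(lambda node: node.put(key, doc), nodes))


nodes = [Node("node-a"), Node("node-b"), Node("node-c")]
acks = push_in_parallel(nodes, "article:1", {"headline": "Hypothetical story"})
print(acks)
```

In the sequential version, the last replica cannot see a document until every copy ahead of it has finished; in the parallel version, the delay is bounded by the slowest single write.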

During normal operations, the SQL Server cluster had trouble handling the 500 writes per second from Presto and the 3,000 reads per second that the Memcached caching layer between the SQL Server databases and the web servers requires. (Those figures are across the cluster of 24 SQL Server machines, not for a single node.) So Gannett took a look around, and considered various NoSQL data store alternatives, including MongoDB, Cassandra, Riak, and Couchbase. “We actually narrowed it down to Riak and Couchbase from our initial analysis of the architecture of these stores,” says Alexander. “We wanted a fast key-value store and they had similar approaches in terms of how they manage buckets and documents, but it really came down to the ‘memory first’ architecture of Couchbase, which will allow us to consolidate caching down into this layer.”
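To make the "memory first" idea concrete, here is a toy Python sketch of a key-value store in which memory is the primary tier and disk is written lazily, which is why such a store can double as a caching layer. This is an illustration under stated assumptions, not the Couchbase API or its internals: `MemoryFirstStore` and all of its methods are hypothetical, and the "disk" is simply a second dictionary.

```python
from collections import deque


class MemoryFirstStore:
    """Toy key-value store: memory is the primary tier, disk is written lazily."""

    def __init__(self):
        self.memory = {}        # serves reads, doubling as the cache
        self.disk = {}          # stand-in for persistent storage
        self.pending = deque()  # keys waiting to be persisted

    def set(self, key, value):
        # Acknowledge the write once it is in memory; persistence happens later.
        self.memory[key] = value
        self.pending.append(key)

    def get(self, key):
        # Serve from memory when possible; fall back to disk and re-cache.
        if key not in self.memory:
            self.memory[key] = self.disk[key]  # raises KeyError if unknown
        return self.memory[key]

    def flush(self):
        # Drain the queue to disk; returns how many queued writes were processed.
        count = 0
        while self.pending:
            key = self.pending.popleft()
            self.disk[key] = self.memory[key]
            count += 1
        return count

    def evict(self, key):
        # Only drop a value from memory once it is safely on disk.
        if key in self.pending or key not in self.disk:
            return False
        self.memory.pop(key, None)
        return True


store = MemoryFirstStore()
store.set("article:1", {"headline": "Hypothetical story"})
print(store.get("article:1"))    # served from memory before any disk write
print(store.flush())             # persist queued writes
print(store.evict("article:1"))  # safe to evict once persisted
print(store.get("article:1"))    # reloaded from disk into memory
```

Because reads are answered from memory and writes are acknowledged before they reach disk, a separate cache in front of the store becomes less necessary, which is the consolidation Alexander refers to.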

The fact that Couchbase was already tweaked and tuned to run on the Amazon Web Services cloud didn’t hurt, either.

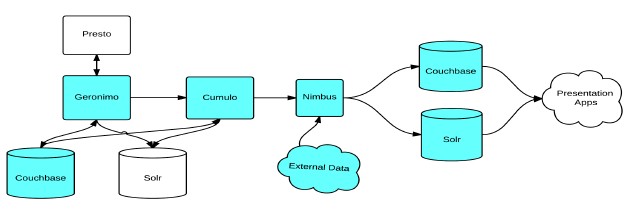

To make sure Couchbase could handle the load, Gannett’s techies ran some tests using its publishing system, and found that it could drive 10,000 writes per second and 30,000 reads per second sustained on a cluster of eight EC2 r3.8xlarge nodes on AWS, each of which had 32 vCPUs and 244 GB of memory allocated to them. (The r3 instances are memory-optimized instances.) This virtual Couchbase cluster of eight EC2 instances could handle spikes of up to 300,000 reads per second, making it an effective caching layer as well as a data store. In fact, the plan is to replace the five Memcached servers that currently sit between SQL Server and the hundreds of instances on AWS that run the Gannett web server farm with a memory-heavy Couchbase cluster. Couchbase will also replace the MongoDB database that is used in the Presto CMS to hold application settings, user preferences, and workflow. Here is what the new Gannett publishing system architecture will look like:

One big change that comes with moving to this new architecture for the publishing backend is that Gannett will save a bunch of money. Alexander estimates that the company pays close to $1 million a year for its enterprise licenses for the SQL Server software stack on those 24 machines, but that its bill for Couchbase software licensing will be on the order of $240,000 per year. That savings alone can buy a lot of EC2 instances; if you do the math, it is just under four years’ worth of those eight EC2 r3.8xlarge instances for reserved capacity on AWS. That savings will no doubt be used to pay for the EBS block storage that will be underpinning the Couchbase cluster out on AWS.
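The figures quoted so far can be checked with a few lines of arithmetic. The short Python sketch below uses only numbers from the article; the variable names are ours, and the last two values rest on an assumption: they read "just under four years' worth" as one year of license savings covering four years of the eight reserved instances, so they are a rough lower bound implied by the text, not an AWS price.

```python
# Throughput figures quoted in the article (per second, across each cluster).
sql_writes, sql_reads = 500, 3_000
couchbase_writes, couchbase_reads = 10_000, 30_000

# Test cluster quoted in the article: eight EC2 r3.8xlarge nodes.
nodes, vcpus_per_node, memory_gb_per_node = 8, 32, 244

# Approximate annual license costs quoted in the article, in dollars.
sql_server_license = 1_000_000
couchbase_license = 240_000

write_gain = couchbase_writes / sql_writes      # sustained write multiple
read_gain = couchbase_reads / sql_reads         # sustained read multiple
total_vcpus = nodes * vcpus_per_node
total_memory_gb = nodes * memory_gb_per_node
annual_savings = sql_server_license - couchbase_license

# Assumption: one year of savings buys four years of the eight instances.
# "Just under four years" means the real annual cost is slightly higher.
implied_cluster_cost_per_year = annual_savings / 4
implied_instance_cost_per_year = implied_cluster_cost_per_year / nodes

print(f"Write gain: {write_gain:.0f}x, read gain: {read_gain:.0f}x")
print(f"Cluster: {total_vcpus} vCPUs, {total_memory_gb} GB of memory")
print(f"Annual license savings: ${annual_savings:,}")
print(f"Implied floor per instance-year: ${implied_instance_cost_per_year:,.0f}")
```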

Gannett is not moving away from SQL Server in one fell swoop, but is taking a measured approach as it shifts its web sites and syndication customers from the existing set of APIs that front its SQL Server databases and applications to a new set that rides atop Couchbase. Alexander says the full transition could take as long as 18 months, which is roughly timed to coincide with the software engineering teams transitioning away from .NET programming languages on Windows to Python and Node.js running on Linux. This programming transition will take time, too, because Gannett has hundreds of applications to port over.

Spanning Many Clouds

In the middle of all of this, Gannett is also implementing a hybrid cloud strategy that will see the company not being tied to any particular public cloud provider and building its own private cloud for selected workloads that have security sensitivities or other reasons to not be out anywhere else but inside of Gannett’s own datacenters. The idea, says Alexander, is to not get too tightly tied to any particular public cloud’s platform services, but rather to pick and choose a set of infrastructure and platform software that Gannett can install across AWS, Microsoft Azure, Google Compute Engine, and other clouds in a uniform way.

“We want to be able to take an app and run it in multiple clouds at once,” explains Alexander. “Each of the clouds are more available in certain parts of the country than in others, and because we are a very large national platform, we need to be able to get the best availability for our applications. So, for example, it may make more sense to use Google in the Northwest than Amazon, or Azure in the Midwest.”

Gannett is already a big user of the Chef configuration management tool, and this will be a unifying element of its cross-cloud strategy. Gannett has also chosen the Scalr Hybrid Cloud Framework to provide an abstraction layer above these various public clouds, helping to ensure application portability. As for its internal cloud, which it is in the process of building now, Gannett has chosen the combination of the OpenStack cloud controller and the KVM hypervisor as its substrate, with Chef hooks for configuring the software inside of VMs much as it does on the clouds. The company will be using RabbitMQ message queuing software internally and across the clouds as well.
