“Machine learning connects supercomputing to consumers; its impact will be felt everywhere.”

So began Pradeep Dubey, an Intel Fellow and director of the Intel Parallel Computing Lab, as he outlined key facets of deep learning, a special form of machine learning, and how new technology from the chipmaker is aimed at enabling a brilliant future for new applications that span the full gamut of HPC, enterprise, and consumer end users.

Pradeep explains that “training the model is everything”. Data scientists have been using machine learning since the 1980s to create models that solve complex pattern recognition problems. The recent surge of interest in machine learning, especially deep learning, is the result of a convergence of technology innovations that now provide sufficient compute capability, large amounts of data, and algorithmic advances. Together these innovations enabled supervised training of deep neural networks such that useful predictions can now be made in image or speech recognition tasks at attractive levels of accuracy, often as good as or even better than what humans can achieve. “The impact is going to be felt everywhere”, he said.

For the layperson, deep learning involves a subset of the techniques available in the broad subfield of computer science known as machine learning. The majority of machine learning techniques today involve only one or two hidden layers of processing between the input data and the output prediction layer. Deep learning, on the other hand, refers to the subset of machine learning techniques with multiple hidden layers of processing between the input and output layers, hence the name ‘deep learning’.
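To make the distinction concrete, here is a minimal sketch in plain Python of a "shallow" and a "deep" fully connected network. The layer sizes, the tanh activation, and the helper names (`build_network`, `forward`, `hidden_layer_count`) are illustrative assumptions, not anything described in the talk.

```python
import math
import random

def make_layer(n_in, n_out, rng):
    """Return (weights, biases) for one fully connected layer (small random weights)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def build_network(sizes, seed=0):
    """Build one layer per consecutive pair in sizes, e.g. [4, 8, 3] gives two layers."""
    rng = random.Random(seed)
    return [make_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

def forward(layers, x):
    """Pass input x through every layer in order, applying tanh after each one."""
    activation = x
    for weights, biases in layers:
        activation = [
            math.tanh(sum(w * a for w, a in zip(row, activation)) + b)
            for row, b in zip(weights, biases)
        ]
    return activation

def hidden_layer_count(layers):
    """Every layer except the final (output) one produces a hidden representation."""
    return len(layers) - 1

# Hypothetical sizes: 4 inputs, 3 outputs, hidden layers of width 8.
shallow = build_network([4, 8, 3])           # one hidden layer
deep = build_network([4, 8, 8, 8, 8, 3])     # four hidden layers

prediction = forward(deep, [0.1, 0.2, 0.3, 0.4])
```

The only structural difference between the two networks is the number of hidden layers the input passes through before reaching the output layer; the forward pass itself is the same loop.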

The recent revolutionary impact of deep learning is the result of a convergence of technology innovations that provide sufficient, affordable compute capability and access to large amounts of data.

The use of many layers in deep learning mimics the structure of what goes on in the biological brains of animals – like humans – where increasingly deeper layers (as seen from the perspective of the input data) deal with increasingly more abstract representations. These deep hierarchical abstractions, Pradeep observed, give deep learning technology the ability to still recognize a bent spoon as a spoon and manage other really challenging transformations (or non-affine transforms in technical parlance) while staying within affordable levels of compute requirement.

The magic of machine learning technology was made clear to the masses in 2011 when IBM’s Watson computer won against two of Jeopardy’s greatest human champions.

Pradeep echoes the excitement permeating the industry over the potential of deep learning technology – especially when he speaks about how Intel is making this technology affordable to everyone. “It will be magic!” he said, and followed up by predicting, “This is where success will be defined!” In one example, he described a path to success where people access the cloud to train a deep learning model that can solve a complex, real-world financial, medical, or other problem. “The power of training” he said, “is that it can potentially create a model that is compact enough to reside and run even on a phone, and thereby enabling the handheld computing device to do a recognition task hitherto far beyond its compute capability”. The ability to run a cloud- or supercomputer-trained deep learning model on a phone (or embedded system) means the power of deep learning will be available to everyone. To make the impact of this statement clear, Pradeep noted that the natural language processing performed by Watson, the image recognition that we now see on the web, and other hard deep learning problems are “all related at the core”.

The biggest challenge in deep learning is that the training framework must be accurate, efficient, and able to scale to process extremely large amounts of data. From a performance perspective, he quantifies training in terms of the time it takes for the machine to be trained to an acceptable level of accuracy, or, succinctly, time-to-train.

For anyone who wishes to “just get going”, Pradeep emphasized that Intel has the building blocks that can take machine learning to the masses. For example, Intel is enhancing its widely used MKL (Math Kernel Library) with deep learning specific functions, and has positioned the recently announced DAAL (Data Analytics Acceleration Library) to provide distributed machine learning building blocks that are well optimized for IA-based hardware platforms. Both libraries have free options via the “community licensing.” On that note, this year Intel also announced a timeline for Caffe Optimized Integration for Intel Xeon and Intel Xeon Phi processors. Caffe is Berkeley’s popular deep learning framework.

Since DAAL fits on top of the Intel Math Kernel Library (MKL), efficiency on CPUs and the Intel Xeon Phi family of products is assured. As Pradeep said, “use the libraries, avoid the hard work.” Of course, this approach means that users can leverage high-level interfaces and the Intel tools to capitalize on everything from Intel Xeon server processors to the upcoming Intel Xeon Phi processor “Knights Landing” product. Being a good scientist, Pradeep acknowledges that the theory of deep learning is still being developed and, further, that deep learning is an emerging area where “the theoretical foundations of deep learning and the computational challenges associated with scaling the training to run on large distributed platforms are still being addressed by industry and academia”.

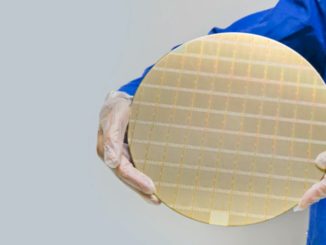

Pradeep had to be more cautious when disclosing some of the reasons for his excitement about the latest generation Intel technology – namely the forthcoming Intel Omni-Path Architecture (Intel OPA) and the Knights Landing generation of Xeon Phi processors. He did note that Intel OPA “allows building machine-learning friendly network topologies”.

Without going into too much technical detail, all deep learning algorithms need to calculate the cumulative error the model makes over all examples during each and every step of the training algorithm. This cumulative error is used by the optimization method to determine the next set of model parameters. Unfortunately, the cumulative error can be very expensive to compute for large data sets or complex machine learning models. Stay tuned, as Pradeep also teased us with a new smart method being developed by his group to scale training of deep neural networks to a large number of processing nodes, thus significantly reducing the time-to-train relative to the current state of the art. He could not say more at this time as the work is in preparation for publication. However, he was happy to share with us the specific performance achieved so far by his team in reducing the time-to-train for deep neural network topologies, which exceeds any comparable published result. More specifically, using the OverFeat-FAST deep neural network topology, his team is able to reduce the time-to-train to just about 8 hours on 64 nodes of the current generation Intel Xeon processor E5-2697, with the upcoming Knights Landing platforms reducing this to just 3-4 hours.
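The reason the cumulative error lends itself to scaling across nodes can be sketched in a few lines of plain Python. This is a generic data-parallel illustration, not the unpublished method Pradeep alluded to: the one-parameter linear model, the squared-error measure, and the function names are all assumptions chosen for brevity, and the "nodes" are simulated by list slices on a single machine.

```python
def squared_error(w, b, x, y):
    """Error of a hypothetical linear model (w * x + b) on one example."""
    prediction = w * x + b
    return (prediction - y) ** 2

def cumulative_error(w, b, examples):
    """Sum the error over every example, as one training step requires."""
    return sum(squared_error(w, b, x, y) for x, y in examples)

def split_into_shards(examples, n_nodes):
    """Deal the examples out round-robin, one shard per simulated node."""
    return [examples[i::n_nodes] for i in range(n_nodes)]

def distributed_cumulative_error(w, b, examples, n_nodes):
    """Each node sums the error on its own shard; the partial sums are then combined."""
    shards = split_into_shards(examples, n_nodes)
    partial_sums = [cumulative_error(w, b, shard) for shard in shards]
    return sum(partial_sums)

# Toy data generated from y = 2x + 1, so (w=2, b=1) gives zero error.
examples = [(float(x), 2.0 * x + 1.0) for x in range(100)]

single_node = cumulative_error(1.5, 0.0, examples)
many_nodes = distributed_cumulative_error(1.5, 0.0, examples, n_nodes=8)
```

Because the cumulative error is a plain sum over examples, each node can work on its own shard independently. The only communication needed is the final combination of partial sums, which is where network topology and interconnect performance come into play on a real cluster.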

Pradeep described current Intel technology for prediction (aka scoring) using the model derived from deep learning training, which is where the rubber meets the road for consumer-based deep learning applications. Pradeep noted that scoring is inherently a much more compute-friendly task than training and that, as a result, Intel platforms offer very good architectural efficiency, throughput, and energy efficiency for prediction tasks. He also noted the potential for FPGAs to further improve the energy efficiency of certain deep learning prediction tasks.

These claims are backed up by Microsoft, which has observed similar power advantages when using FPGAs in its data centers.

In summary, Pradeep sees a brilliant future for deep learning and the application of Intel technology. He notes that this is an emerging area where Intel has positioned itself nicely to meet the needs of the market, and that Intel provides the hardware and tools that can be incorporated into high-level frameworks like the Berkeley Caffe deep learning framework, bringing deep learning to the masses. Users can then capitalize on everything from mainstream Intel Xeon processors to the latest generation Intel Xeon Phi Knights Landing processors and leadership class supercomputers, and can leverage the availability of labeled data sets ranging from small to very, very large, with the end result that everyone can start doing affordable deep learning.

There is only one major flaw in his assumption: you cannot just take a trained neural network and put it in another device at the moment, as the device needs the same amount of processing power to build the same deep layer structure.

That’s why large-scale neural networks will stay, now and probably for the foreseeable future, in the hands of the few powerful enterprises that have the computational resources, the big data sources required for training, and the manpower to label those data sources. They also have no interest in giving up this monopoly.

Training the neural network is significantly more compute intensive than having it classify once trained; these are vastly different in terms of workload. Consider that training an image classifier takes hours or even weeks, whereas once it is trained you can run a classification in < 1 second. Supporting information can be found at: http://image-net.org/challenges/LSVRC/2015/
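A rough back-of-the-envelope sketch of that workload gap, in Python. All the numbers here are assumptions for illustration only: the layer sizes, the 60,000 training examples, the 10 epochs, and the rule of thumb that the backward pass costs about twice the forward pass. It counts multiply-accumulate operations for fully connected layers and ignores memory footprint entirely.

```python
def forward_macs(layer_sizes):
    """Multiply-accumulates for one forward pass through fully connected layers."""
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

def training_macs(layer_sizes, n_examples, n_epochs, backward_factor=2):
    """Estimate training cost: every example needs a forward and a backward pass,
    repeated once per epoch. backward_factor is an assumed rule of thumb."""
    per_example = forward_macs(layer_sizes) * (1 + backward_factor)
    return per_example * n_examples * n_epochs

# Hypothetical small network and data set.
sizes = [784, 256, 256, 10]
score_cost = forward_macs(sizes)   # cost of classifying one input
train_cost = training_macs(sizes, n_examples=60_000, n_epochs=10)
ratio = train_cost // score_cost
```

Under these assumed numbers the ratio works out to 3 × 60,000 × 10 = 1.8 million forward-pass equivalents for training versus one for a single classification. Note that this says nothing about whether the weights fit on a given device, which is a separate question.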

That’s true, but you still need the same infrastructure, and some of the weight matrices of those very deep networks are very large and not likely to fit in a small device. The power it would consume and the heat it would generate, even for just the sampling state, would be enormous.

Unless, of course, you go the special silicon route like these guys are doing:

http://www.ceva-dsp.com/CDNN

The article is not clear, but it is likely that deep learning training is the first step, followed by training a classifier using a machine learning method, and that the resulting model is deployed on mobile devices. If the model is relatively small, then it should work on the device. If not, then a conventional device-server/cloud architecture will work.

But that’s exactly the problem: with a DNN, even the simple ones (like CNNs) and certainly the more complex RNNs, you do not end up with a simple classifier, unlike traditional ML methods like SVM, AdaBoost, or decision trees/forests, which you can then take and use to classify things very fast.

You can’t just take the weight matrix out of the network and say this is your classifier; you still need the full network layer structure even for sampling. Besides, those weight matrices tend to be quite large.

It’s the same as how you can’t just take a bit out of your brain and say, okay, this is now going to work in general.