The last thing that server virtualization juggernaut VMware wants to do is become a hardware vendor. Frankly, the company is literally and figuratively above that. Hardware is a hassle to manufacture and ship, and has a nasty habit of breaking every once in a while, requiring a human being to physically replace components.

But software has to run on something, and if VMware doesn’t build its own hardware, it has to partner so it can tell the same ease-of-use and integration story that its rivals in hyperconverged, cloudy infrastructure are boasting about. This is what its EVO:RAIL midrange clusters were designed to do for relatively modest workloads, and what the EVO:RACK systems that the company is unveiling this week at the VMworld extravaganza in San Francisco are aimed at. But EVO:RACK, now known as EVO SDDC (no colon), supports a more diverse set of hardware and much greater scale, says VMware.

The EVO is short for evolution, as if companies need to evolve from building their own clusters from piece parts to just buying preconfigured machines that are essentially sealed boxes, like an iPhone. Given the diversity of workloads out there in the datacenter, we think it is dangerous to expect a single cluster to do all kinds of work, or to expect acceptable performance without knowing much about the underlying hardware. What we see is that the organizations that spend the time and understand the hardware best get the best results. This is not a surprise. If anything, the EVO setups could make purchasing clusters for specific workloads or scale easier, but customers still have to do their due diligence and make sure the iron fits the software and performance requirements.

Old Time RACK and RAIL

The EVO:RAIL machines, which were announced a year ago and which started shipping this year, span a mere sixteen server nodes and have very precise configurations for running the ESXi hypervisor, its vSphere extensions, and the Virtual SAN (VSAN) clustered storage area network software that VMware has been peddling against Nutanix, SimpliVity, EMC, Hewlett-Packard, Pivot3, and Scale Computing, all of which offer server-storage hybrids of their own. There was a flurry of rumors ahead of the launch of the EVO:RAIL appliances last August that VMware was building its own hardware under an effort called “Project Marvin,” but this turned out to be nonsense. VMware was working with partners to create a server-storage hybrid, and the initial clusters were aimed mostly at virtual desktop infrastructure (VDI) or generic virtual server farms. With sixteen two-socket Xeon nodes and a total of 78 TB of capacity, the EVO:RAIL machines could host about 400 server-class VMs and about 1,000 VDI-class VMs. This is not exactly big iron, although there is nothing stopping any large enterprise or cloud builder from podding up multiple EVO:RAIL machines and distributing workloads across them. (The management and fault domains would still be set at sixteen nodes, however.)

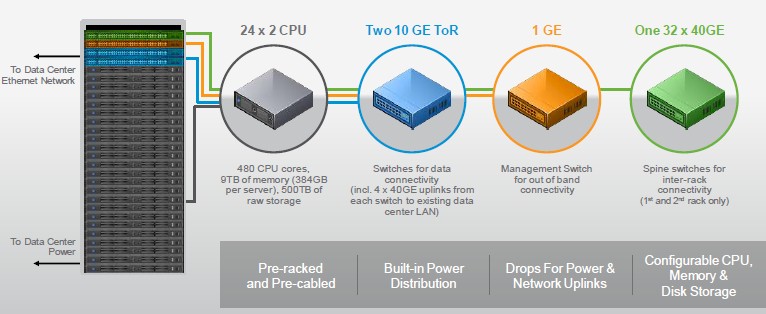

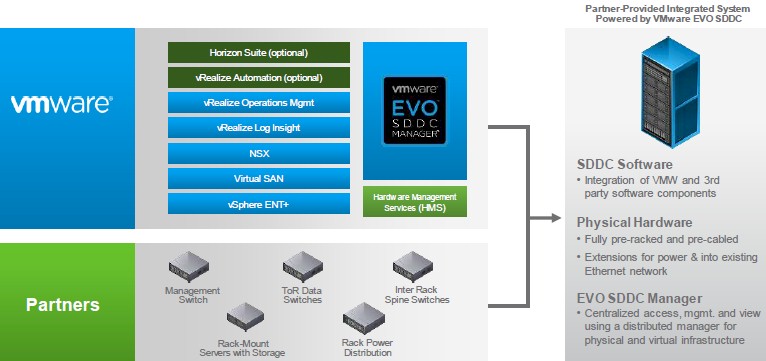

With EVO SDDC, VMware is busting out the big iron, or more precisely, it has worked with selected hardware partners Dell, Quanta Cloud Technology, and the VCE server unit of EMC (which resells the Unified Computing System platform from Cisco Systems) to get its entire virtualization and orchestration stack deployable on rack-scale systems. (To make things a bit more confusing, VMware has changed the name of the product to EVO SDDC from EVO:RACK, in line with the software-defined datacenter technology and sales pitch that VMware has been using for a number of years. Before that, VMware tried on the terms “21st century software mainframe” and “virtual datacenter operating system” to describe its complete virtualization and automation stack.)

The EVO SDDC rack-scale appliances have been a long time coming, and it is a wonder why it has taken so long for VMware to round up hardware partners and create a complete software stack with all of its wares. But these things apparently take time, particularly when Dell has forged tight links with hyperconverged storage provider and self-described physical SAN killer Nutanix, which is leading this nascent market, and Hewlett-Packard very much wants to sell its own StoreVirtual virtual SAN software in conjunction with hypervisors and ProLiant servers. Quanta was on hand showing off prototype EVO:RACK clusters at VMworld last year when this concept was unveiled, and in this case it built appliances based on its Open Compute-compliant servers and racks. VCE is a strong partner with VMware, which was one of its original founders before Cisco backed away and EMC took it over. VCE probably will have the most enthusiasm for the machines that are now called EVO SDDC, and HP might even join Dell and Quanta peddling the racks, as could Supermicro, Lenovo, Fujitsu, and a few others who are trying to tap into the 500,000-strong ESXi and vSphere customer base that VMware has built up. While demand for EVO SDDC might be large in terms of numbers of aggregate nodes, the customer base could be relatively small. And as we have reported already, hyperconvergence has not exactly taken large enterprises by storm.

As best as we can figure, that installed base has something on the order of 50 million virtual machines running on somewhere on the order of 6 million physical servers, according to people familiar with the base who used to work for VMware. If you do the averages across the 500,000 customers, that is 12 servers and 100 VMs per customer. These are averages, we are aware. Server count should, roughly speaking, scale with revenues at large enterprises (not counting the hyperscalers, cloud builders, and telcos who make running IT systems and applications their actual business). So we are back to EVO SDDC being aimed mostly at the 50,000 or so large enterprises and government organizations in the world. These are the same entities that embraced VMware’s server virtualization early on, and they are dabbling a bit with Virtual SAN virtual storage and NSX virtual networking from VMware. But they have not rapidly embraced either, certainly not with the appetite with which ESXi and vSphere were consumed during the Great Recession.
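The averages above are simple division, and a minimal Python sketch makes them easy to re-run with different estimates. The customer, VM, and server counts are the rough figures quoted in this article; the function and variable names are made up for illustration, and the VMs-per-server ratio is a derived number that the article does not state.

```python
# Rough installed-base figures quoted in the article (order-of-magnitude estimates).
CUSTOMERS = 500_000             # ESXi and vSphere customer base
VIRTUAL_MACHINES = 50_000_000   # estimated VMs across the base
PHYSICAL_SERVERS = 6_000_000    # estimated physical servers across the base


def per_customer_averages(customers, servers, vms):
    """Return (servers per customer, VMs per customer, VMs per server)."""
    return servers / customers, vms / customers, vms / servers


servers_each, vms_each, vms_per_server = per_customer_averages(
    CUSTOMERS, PHYSICAL_SERVERS, VIRTUAL_MACHINES
)

print(f"{servers_each:.0f} servers and {vms_each:.0f} VMs per customer")
print(f"roughly {vms_per_server:.1f} VMs per physical server")
```

The per-server ratio is only as good as the two estimates behind it, so it should be read as a sanity check rather than a measured consolidation ratio.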

Server virtualization solved a real – and large – capacity utilization problem and helped a lot of companies slam on the brakes on server spending during the economic downturn. Even though an SDDC approach – virtualizing and orchestrating servers, storage, and networking – can save companies something on the order of 30 percent to 50 percent on capital expenses, says John Gilmartin, vice president and general manager of VMware’s Integrated Systems business unit, citing a report from Taneja Group, VMware knows it has to be a lot more flexible with hardware configurations with EVO SDDC than it was with EVO:RAIL.

“This is different from the appliance market, which tends to use one cluster for one workload,” Gilmartin explains to The Next Platform. “But enterprises have more diverse workloads and they therefore have different needs.” That said, they want their infrastructure to be as homogeneous as possible without sacrificing flexibility.

That Taneja Group comparison is interesting. It pits Cisco’s ACI hardware-based network virtualization against VMware’s software-based NSX network virtualization, and stacks up real SANs against Virtual SANs, accounting for a lot of the difference in the cost. The difference between using Cisco’s UCS servers for the “hardware-defined data center” setup and Supermicro SuperServers for the SDDC setup’s compute and storage is also a factor.

In the hardware-defined setup, supporting 2,500 VMs costs $4.33 million for the network, $2.59 million for the compute, $1.9 million for the vSphere virtualization and vCloud orchestration tools, and $1.83 million for the storage. That comes out to a total of $10.6 million for the hardware-based virtualized network and storage setup. With the SDDC setup, the compute is cheaper at $1.66 million, the network hardware is cheaper at $1.38 million, and the storage hardware is cheaper at $1.3 million. The vSphere and vCloud software costs are about the same at $2 million, while NSX adds another $615,120 and Virtual SAN adds $264,470. Add it all up, and the SDDC stack costs $7.24 million, or 32 percent lower. If you do the math, the HDDC way costs $4,254 per VM and the SDDC way costs $2,897 per VM.
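For readers who want to check the comparison, here is a minimal Python sketch that sums the component costs quoted above and derives the savings and the cost per VM. The dictionary keys and function names are labels chosen for this sketch, not terms from the Taneja Group report, and the SDDC software line uses the rounded "about $2 million" figure. Because the inputs are rounded, the totals and per-VM numbers it prints land close to, but not exactly on, the figures quoted in the text.

```python
# Component costs in millions of US dollars, as quoted in the article.
HDDC = {
    "network": 4.33,
    "compute": 2.59,
    "vsphere_vcloud": 1.90,
    "storage": 1.83,
}
SDDC = {
    "compute": 1.66,
    "network": 1.38,
    "storage": 1.30,
    "vsphere_vcloud": 2.00,   # "about the same at $2 million" (rounded)
    "nsx": 0.61512,
    "virtual_san": 0.26447,
}
VMS = 2_500  # size of the hypothetical deployment


def total_millions(parts):
    """Sum the component costs, in millions of dollars."""
    return sum(parts.values())


def cost_per_vm(total_in_millions, vms):
    """Convert a total in millions of dollars to dollars per VM."""
    return total_in_millions * 1_000_000 / vms


hddc_total = total_millions(HDDC)
sddc_total = total_millions(SDDC)
savings = 1 - sddc_total / hddc_total

print(f"HDDC: ${hddc_total:.2f}M total, ${cost_per_vm(hddc_total, VMS):,.0f} per VM")
print(f"SDDC: ${sddc_total:.2f}M total, ${cost_per_vm(sddc_total, VMS):,.0f} per VM")
print(f"SDDC savings: {savings:.0%}")
```

The savings come out at about 32 percent either way, which suggests the small differences from the quoted totals are down to rounding in the published component figures.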

This hypothetical setup is on the scale that VMware expects customers to buy as they roll out greenfield applications onto fully virtualized EVO SDDC iron.

The EVO SDDC setup is designed to span from one to eight racks, delivering about 1,000 server-class VMs and about 2,000 VDI-class VMs per rack. So the SDDC setup above is around two and a half racks and should cost on the order of $2,500 to $3,000 per VM, all in, depending on hardware options, based on the Taneja Group report cited above. Discounting for scale seems appropriate, but assuming linear pricing and that the Taneja Group pricing is representative of what EVO SDDC sellers will charge, an eight-rack setup with 192 server nodes, capable of supporting around 8,000 server-class VMs, will run around $23.2 million, including storage and networking appropriate for supporting real workloads. EVO SDDC systems will start at a third of a rack (that’s eight servers) and scale up from there in single-node increments.
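The sizing and pricing logic in the paragraph above can be sketched in a few lines of Python. This is a rough estimate under stated assumptions, not a VMware sizing tool: it assumes 24 nodes per rack (192 nodes over eight racks), VMs spread evenly across nodes, and the linear $2,897 per VM from the Taneja Group comparison with no volume discount. The function name `size_cluster` is hypothetical.

```python
NODES_PER_RACK = 24          # 192 nodes across eight racks
SERVER_VMS_PER_RACK = 1_000  # server-class VMs per rack, per the article
MAX_RACKS = 8                # limit set by the certified spine switches
MIN_NODES = 8                # smallest configuration: a third of a rack
COST_PER_VM = 2_897          # assumed linear SDDC price per VM, in dollars


def size_cluster(server_vms):
    """Estimate (nodes, racks, cost in dollars) for a server-class VM count.

    Assumes VMs are spread evenly over nodes and pricing is linear per VM.
    """
    # Integer ceiling division avoids floating-point rounding surprises.
    nodes = -(-(server_vms * NODES_PER_RACK) // SERVER_VMS_PER_RACK)
    nodes = max(MIN_NODES, nodes)
    if nodes > NODES_PER_RACK * MAX_RACKS:
        raise ValueError("exceeds the eight-rack limit described in the article")
    racks = nodes / NODES_PER_RACK
    cost = server_vms * COST_PER_VM
    return nodes, racks, cost


for vms in (2_500, 8_000):
    nodes, racks, cost = size_cluster(vms)
    print(f"{vms:,} VMs: {nodes} nodes, {racks:.1f} racks, ${cost / 1e6:.1f}M")
```

With these assumptions, 2,500 VMs works out to 60 nodes, or two and a half racks, and 8,000 VMs fills all eight racks at roughly $23.2 million, matching the estimate in the text.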

“This is really important for the service providers, who are trying to match costs against the revenue they are bringing in,” says Gilmartin.

While the EVO SDDC setup can have up to 192 nodes, the vCenter management console at the heart of vSphere and the Virtual SAN storage will still be limited to scaling to 64 nodes. That eight-rack limit, by the way, is one imposed by the heftiness of the spine switches that are expected to be certified to work with EVO SDDC systems. If fatter spines are used, the racks can scale out more.

The important thing for VMware is to have a vehicle by which large organizations can easily consume its entire software stack. Everything in blue in the chart above is part of the EVO SDDC stack. The vRealize Automation tools, part of what used to be called vCloud Suite, are an optional add-on, as is the Horizon Suite VDI stack. While the chart does not show this, EVO SDDC will eventually also support the company’s VMware Integrated OpenStack (VIO), a variant of the OpenStack cloud controller that plugs into the compute, networking, and storage portions of the VMware stack instead of the open source components that OpenStack usually hooks into. It is not clear when VIO support will come.

The new bit about EVO SDDC is called EVO SDDC Manager, and as the name suggests, this is used to automate the configuration of the entire VMware stack on the hardware that partners use to create their racks. Gilmartin says that hardware partners will integrate their BIOS and hardware patching with the updating software in EVO SDDC to better automate all of these processes, but that rather than just do it automatically and remotely (as Oracle does with its engineered systems), customers will be the ones pulling the trigger to do updates.

The first EVO SDDC rack-scale appliances are expected to come to market in the first half of 2016, and our guess is that this is timed to the launch of Intel’s “Broadwell” Xeon E5 processors and the availability of 25G Ethernet switches, which will be ramping at that time, too. This seems like a long time to wait, but VMware has waited this long, so what is another six months or so? In the meantime, there is absolutely nothing that prevents companies from deploying all of these pieces themselves and then migrating to EVO SDDC by transferring their software licenses and getting the additional benefits of EVO SDDC Manager. EVO SDDC Manager will not be sold as a separate product, however. At least for now.
