Hyperscalers like Apple and Facebook helped flash vendors like Fusion-io, now part of SanDisk, get off the ground in such a big way that they could then attack the broader enterprise market. But not every lesson about how to make the best use of a new technology comes from on high. Some of it comes from the enterprise trenches, and such is the case of flash-accelerated virtual machines.

Only a few years ago, when enterprise-quality flash was a lot more expensive than it is today, upstart disk array makers started adding flash to their boxes as a tier for storing the very hottest of their data, and the ratio of flash to disk was proportionally smaller. With flash capacity costs dropping much faster than disk capacity costs – the analysts at Wikibon have just put together a pricing analysis of the market that runs from 2012 through 2026, which we reported on here – it has become cost effective not only to offer bigger chunks of flash on hybrid arrays but also, thanks to de-duplication, compression, and other data reduction techniques, to deliver all-flash arrays.

There is no shortage of all-flash array upstarts, with Pure Storage, EMC XtremIO, and IBM being the biggest revenue generators and Hewlett-Packard, SolidFire, Violin Memory, Tegile Systems, NetApp, Kaminario, and now Tintri offering all-flash products. (We covered the latest Pure Storage announcements here, SanDisk there, and DataDirect Networks here.) This is by no means an exhaustive list, and keeping track of all of the hybrid and flash array vendors would be a full-time job in itself. When we discuss these storage vendors, we are looking for lessons that can be applied across vendors, and we report on those lessons as much as we report on any specific product.

Tintri, which is launching its first all-flash arrays, is interesting as a foundational component of a platform because from the get-go, when the company was created in 2008, it set out specifically to deliver storage tuned for server virtualization environments. This may not sound like a big deal, but at the time, when server virtualization was gaining steam, “the I/O blender effect” was just starting to be felt. Simply put, individual servers have reasonably predictable and somewhat spiky data access patterns when they hit storage, but when you virtualize many servers and lay them on top of hypervisors, their I/O gets blended across those virtual machines and becomes not only much less predictable, but also more intense.
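To make the blender effect concrete, here is a minimal Python sketch. It assumes an idealized hypervisor that interleaves requests from each VM in strict round-robin order, and the stream count, stream length, and block addresses are invented for illustration; real I/O schedulers are far messier than this.

```python
def vm_stream(base_block, length):
    """One VM reading `length` consecutive blocks starting at `base_block`."""
    return [base_block + i for i in range(length)]


def blend(streams):
    """Interleave equal-length streams round-robin (assumed hypervisor behavior)."""
    return [block for group in zip(*streams) for block in group]


def sequential_fraction(requests):
    """Fraction of requests that target the block right after the previous one."""
    if len(requests) < 2:
        return 0.0
    hits = sum(1 for prev, cur in zip(requests, requests[1:]) if cur == prev + 1)
    return hits / (len(requests) - 1)


# Eight hypothetical VMs, each with its own region of the array.
streams = [vm_stream(vm * 1_000_000, 100) for vm in range(8)]

single_vm = sequential_fraction(streams[0])
blended = sequential_fraction(blend(streams))
print(f"one VM alone: {single_vm:.0%} sequential")
print(f"eight VMs blended: {blended:.0%} sequential")
```

Each VM on its own is perfectly sequential, but once the streams are interleaved the array sees no two adjacent requests for neighboring blocks, which is the pattern that punishes spinning disks.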

So flash memory helps a lot. With a normal server virtualization cluster or a cloud (if it has orchestration layers on top), explains Chuck Dubuque, senior director of product marketing at Tintri, the company's hybrid arrays can serve about 99 percent of their I/O requests from flash and only put data onto disks when it gets cold. The arrays know all about the different hypervisors and their operating systems, and watch applications as well to learn which pieces will be hot and cold and make sure the hottest data is moved to flash. With such a high flash hit rate on a hybrid array, you might be wondering why Tintri has joined the all-flash party.

“What we have seen as we have worked with customers over time and delivered the tenth or the twentieth of our hybrid arrays to them is that there are concentrations of workloads that do benefit from having an all-flash array,” Dubuque tells The Next Platform. This could be databases that range in size between 5 TB and 25 TB that are in a very active database farm or that are underpinning a complex analytics workload. Persistent virtual desktop farms are another example where all-flash can help, particularly if it is a farm dedicated to developers who have an entire stack, including a database, to create and test their applications. “A hybrid array is always bound by how much flash it has and how good the algorithms are at making flash hits, and we do a better job with VMs because we know what VMs look like, and our arrays have more flash relative to disk than most of our competitors, but you do eventually start hitting disk instead of flash and that affects performance and latency.”
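A rough way to see why the last few percent of flash hits matter is a weighted-average latency model. The Python sketch below is illustrative only: the 0.5 ms flash and 10 ms disk service times are assumed round numbers, not Tintri measurements, and the model ignores queuing and tail-latency spikes entirely.

```python
def average_latency_ms(flash_hit_rate, flash_ms=0.5, disk_ms=10.0):
    """Mean I/O latency for a hybrid array, given the fraction served from flash.

    flash_ms and disk_ms are assumed service times, chosen for illustration.
    """
    if not 0.0 <= flash_hit_rate <= 1.0:
        raise ValueError("flash_hit_rate must be between 0 and 1")
    return flash_hit_rate * flash_ms + (1.0 - flash_hit_rate) * disk_ms


for hit_rate in (0.99, 0.95, 0.90):
    latency = average_latency_ms(hit_rate)
    print(f"{hit_rate:.0%} flash hits -> {latency:.3f} ms average")
```

Under these assumed service times, sliding from a 99 percent to a 90 percent hit rate more than doubles the average latency, which is the effect Dubuque describes when a hot workload outgrows the flash tier.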

In this case, says Dubuque, it is more economical to have an all-flash array for the high I/O work than it is to buy a bunch of hybrid arrays and then try to spread the work out across them. That said, Dubuque says that Tintri expects most customers to buy its VMstore hybrid arrays for the foreseeable future and then only use all-flash arrays for the corner cases. The point is, the arrays all run the same storage operating system and use the same management tools.

Higher IOPS And Consistent Low Latency

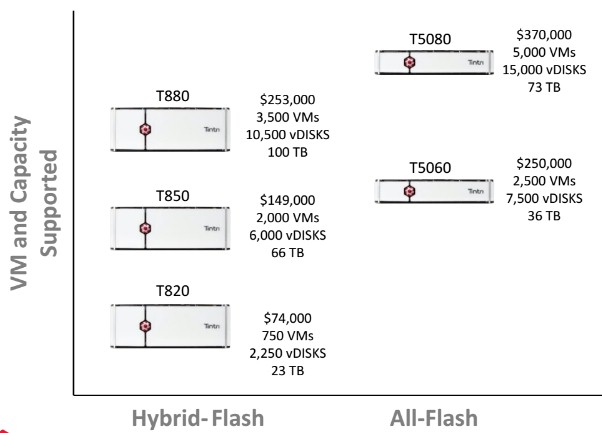

We hear the same thing over and over when we talk to customers in just about any industry, and that is that consistent performance that offers reasonable latency is much more desirable than lower latency that is subject to crazy and maddeningly hard to debug spikes where latency goes through the roof. The new VMstore T5000 series all-flash arrays from Tintri aim to jack up the IOPS enough for the I/O-bound VMs discussed above, but also to support a higher number of VMs by making better use of that I/O, such that the cost of the array, on a per VM basis, is about the same as on a hybrid array. This is a neat trick. Here is how the all-flash arrays stack up to the VMstore T800 series, the most recent hybrid arrays from the company:

If you compare the hybrid arrays on the left to the all-flash arrays on the right and do the math, then in terms of capacity the all-flash arrays cost about twice as much as the hybrid arrays do. But if you look at it in terms of raw IOPS and therefore the number of VMs that you can support on the machines, then the T5000s have the same cost per VM as the T800s, generally speaking. They are also considerably more compact. While we hear hybrid vendors say that their hybrid products will continue to sell and even make up the bulk of their sales, this kind of argument cuts against that. Who doesn’t want to cram more VMs onto fewer and smaller machines?

One thing to note in the comparison above: the T800 series arrays have flash, but Tintri does not count it in their capacity because it is essentially used as a hot cache for the VMs that are encapsulated by that storage. The low-end T820 has 1.7 TB of flash, and its disk drives, after RAID 6 overhead is taken out, deliver 23 TB of effective capacity in a 4U chassis; it can support around 750 VMs and about 2,250 vDisks. The larger T880 has 8.8 TB of flash and 100 TB of effective capacity, and can support 3,500 VMs and about 10,000 vDisks. The controller on the T800 series has a pair of Xeon processors as its main brains, and uses 10 Gb/sec Ethernet links to keep them in sync.

On the all-flash arrays, Tintri has upgraded to zippier Xeon processors and has switched from Ethernet links between the nodes to direct non-transparent bridging links over PCI-Express ports on the controller nodes. This delivers 64 Gb/sec of bandwidth between the nodes, says Dubuque, which was a necessary upgrade because of the high I/O rates for flash. The flash in the T5000s is implemented in NVDIMMs – Tintri is not at liberty to say who its supplier is – rather than the NVRAM that was used in the T800s. The top-end T5080 has 23 TB of flash and delivers an effective capacity of 72 TB after data reduction techniques are employed. This machine can feed as many as 5,000 VMs and as many as 15,000 vDisks. The T5060 has half the flash capacity and all of the other metrics get cut in half, too, excepting the form factor, which remains the same at 2U. A rack of T5000s would weigh in at 100,000 VMs and about 1.4 PB of usable capacity and, working from the list pricing above, would cost $7.77 million.
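The rack-level figures follow directly from the per-array numbers quoted above, and the short Python sketch below retraces the arithmetic. The inputs are the figures in the text; the derived values (data reduction ratio, implied price per array, cost per VM) are our own back-of-the-envelope results rather than anything Tintri has published, and the variable names are ours.

```python
# Figures quoted in the text for the top-end T5080 and a rack of them.
T5080_FLASH_TB = 23
T5080_EFFECTIVE_TB = 72
T5080_MAX_VMS = 5_000
RACK_VMS = 100_000
RACK_LIST_PRICE_USD = 7_770_000

# Derived values (back-of-the-envelope, not vendor-published).
data_reduction_ratio = T5080_EFFECTIVE_TB / T5080_FLASH_TB
arrays_per_rack = RACK_VMS // T5080_MAX_VMS
rack_effective_tb = arrays_per_rack * T5080_EFFECTIVE_TB
implied_price_per_array = RACK_LIST_PRICE_USD / arrays_per_rack
cost_per_vm = RACK_LIST_PRICE_USD / RACK_VMS
cost_per_effective_tb = RACK_LIST_PRICE_USD / rack_effective_tb

print(f"data reduction ratio: {data_reduction_ratio:.2f}x")
print(f"arrays per rack: {arrays_per_rack} ({arrays_per_rack * 2}U)")
print(f"rack effective capacity: {rack_effective_tb / 1000:.2f} PB")
print(f"implied price per array: ${implied_price_per_array:,.0f}")
print(f"cost per VM: ${cost_per_vm:,.2f}")
print(f"cost per effective TB: ${cost_per_effective_tb:,.0f}")
```

The 100,000 VM rack works out to 20 of the 2U T5080s, and the effective capacity lands at 1.44 PB, consistent with the "about 1.4 PB" figure.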

Here’s a fun mental experiment. How many servers would it take to choke that rack of T5080 all-flash arrays? Let’s use two-socket “Haswell” Xeon E5 machines with processors that normal companies might deploy, and we think the 14-core Xeon E5-2697 v3 is the most capacious processor in the Intel lineup that anyone besides the very rich would splurge on. Because the storage is external, we will pick a skinny ProLiant DL360 Gen9 machine rather than the workhorse DL380 that a lot of enterprises buy for their local storage inside their compute. With two of those Xeon E5-2697 v3 processors and 256 GB of pretty fast main memory, this machine costs $13,195 at list price. Assuming one core per VM, you would need something on the order of 4,167 servers to support 100,000 VMs, which is just shy of $55 million. We are not saying that this configuration is the best one for a private cloud hosting 100,000 VMs, but we are pointing out that against that cost of raw compute, maybe the all-flash arrays don’t look so expensive. There is a reason hyperscalers build their own gear. And even at 50 percent off, that would still make the servers around three times as expensive as the flash storage driving the servers.
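For readers who want to rerun this thought experiment with their own assumptions, here is a minimal Python sketch of the arithmetic. The server count, list price, and storage cost are the figures quoted above; the variable names and the alternative 28-VMs-per-server case are ours, included only to show how sensitive the total is to the VMs-per-server assumption.

```python
import math

VMS = 100_000
SERVER_LIST_PRICE_USD = 13_195
SERVERS_QUOTED = 4_167              # server count as stated in the text
STORAGE_RACK_USD = 7_770_000        # rack of T5080s at list price
CORES_PER_SERVER = 2 * 14           # two 14-core processors

# Compute bill at list price, and the VM density the quoted count implies.
compute_cost = SERVERS_QUOTED * SERVER_LIST_PRICE_USD
implied_vms_per_server = VMS / SERVERS_QUOTED

# Ratio of compute to storage spend if the servers were half price.
discounted_ratio = 0.5 * compute_cost / STORAGE_RACK_USD

# Alternative (our assumption): every one of the 28 cores hosts a VM.
servers_at_28 = math.ceil(VMS / CORES_PER_SERVER)
compute_cost_at_28 = servers_at_28 * SERVER_LIST_PRICE_USD

print(f"compute at list: ${compute_cost:,}")
print(f"implied VMs per server: {implied_vms_per_server:.1f}")
print(f"servers at 50% off vs storage: {discounted_ratio:.1f}x")
print(f"servers if all 28 cores host VMs: {servers_at_28:,} (${compute_cost_at_28:,})")
```

The quoted 4,167 servers corresponds to roughly 24 VMs per machine; packing one VM onto each of the 28 cores would trim the server count, but the compute bill would still dwarf the storage bill.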

Who Is Buying Tintri Kit?

The TintriOS storage operating system that runs on all Tintri storage appliances and that delivers the VM-level management and quality of service guarantees can speak to just about any hypervisor you want. VMware’s ESXi 4, 5, and 6 hypervisors are supported, and so are Microsoft’s Hyper-V 2012 and Red Hat Enterprise Virtualization (the commercial version of KVM) at the 3.3, 3.4, and 3.5 levels. Raw, unsupervised hypervisors like the freebie ESXi or the open source KVM are not supported.

Like other hybrid and all-flash array makers, Tintri has a relatively modest installed base, with around 800 customers worldwide and over 2,000 VMstore arrays sold to date. The largest Tintri customer has 50 of its hybrid arrays with roughly 3 PB of capacity under management, which is a pretty big installation by enterprise standards. (About 15 of those arrays at this customer are used for a private cloud exclusively for application developers who need high I/O performance. And these are all hybrid arrays, not all-flash arrays.)

One of the key differentiators that Tintri claims stems from its focus on the VM level of The Next Platform stack. Because system administrators do not have to mess around with file systems or disk logical units (LUNs), but rather work at the abstraction layer of VMs, there are a whole bunch of things that they do not have to do any more. Dubuque says that the average storage admin manages something like 300 TB of storage, which seems pretty small if you are used to hyperscaling, but with the Tintri arrays, a single admin is averaging something on the order of 1 PB of storage and some can push as high as 5 PB or 6 PB. (Granted, this is against a relatively modest base, and it is not across all customers in the Tintri base, just the big ones.) Across those 2,000-plus VMstore arrays that it has sold so far, the company reckons that its customers are supporting more than 400,000 VMs in aggregate.
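The installed-base numbers can be turned into a few rough averages, sketched in Python below. The inputs are the rounded figures quoted in the text ("around 800", "over 2,000", "more than 400,000"), so the results are ballpark values at best, and the variable names are ours.

```python
# Rounded installed-base figures quoted in the text.
CUSTOMERS = 800
ARRAYS_SOLD = 2_000
VMS_SUPPORTED = 400_000
TYPICAL_ADMIN_TB = 300       # storage per admin, industry average per Dubuque
TINTRI_ADMIN_TB = 1_000      # roughly 1 PB per admin on Tintri arrays

# Largest customer: 50 hybrid arrays, roughly 3 PB under management.
LARGEST_ARRAYS = 50
LARGEST_TB = 3_000

vms_per_array = VMS_SUPPORTED / ARRAYS_SOLD
arrays_per_customer = ARRAYS_SOLD / CUSTOMERS
admin_leverage = TINTRI_ADMIN_TB / TYPICAL_ADMIN_TB
largest_tb_per_array = LARGEST_TB / LARGEST_ARRAYS

print(f"average VMs per array: {vms_per_array:.0f}")
print(f"average arrays per customer: {arrays_per_customer:.1f}")
print(f"storage per admin vs typical: {admin_leverage:.1f}x")
print(f"largest customer, TB per array: {largest_tb_per_array:.0f}")
```

On these rounded inputs the average array is hosting a couple of hundred VMs, well below the stated ceilings of even the low-end T820, which suggests that most customers still have plenty of headroom.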
