The 25 Gb/sec Ethernet effort that was launched by Google and Microsoft a little more than a year ago, along with switch and adapter chip makers Broadcom and Mellanox Technologies and switch maker Arista Networks, continues to gather steam. Broadcom is ramping up production of its “Tomahawk” switch ASICs, and Mellanox has recently announced its Spectrum ASICs as well as adapter cards to support 25 Gb/sec, 50 Gb/sec, and 100 Gb/sec speeds on servers. Now, Broadcom is rounding out its 25G offering as it debuts its next generation of NetXtreme C-Series server adapter cards, which will offer a significant performance and price/performance advantage over 10 Gb/sec and 40 Gb/sec Ethernet adapters.

People tend to talk about the switching side of a server-switch pairing because it is the big ticket item in the networking stack. Ethernet-style datacenter networking is around a $25 billion business, but discrete adapters account for only about $1.5 billion of it. (So many Ethernet ports are on the CPU these days or welded to the server motherboard by default, and the economic value of those ports is not counted in the numbers cited above.) But the server adapter card is a key element in getting the full performance out of the switching that companies install.

Even if companies are not ready to move to faster Ethernet switches today, they might do well to upgrade their adapters first and therefore be ready when they do make the bandwidth jump on the switches. In this way, they can stretch the life of their servers out a bit longer, particularly if their workloads are constrained on I/O bandwidth or memory rather than on raw compute. (A lot of workloads fit this profile.) Obviously, the best possible scenario is to upgrade the servers, their Ethernet adapters, and their switches all at the same time, building a cluster that is balanced for the particular workloads at hand. Either way, the important thing is to not neglect the adapter side of the network equation.

To that end, Broadcom is launching its NetXtreme C-Series chips and adapters based on them. As with prior generations, Broadcom is making the chips available for others to purchase to build adapters as well as making its own adapters for sale.

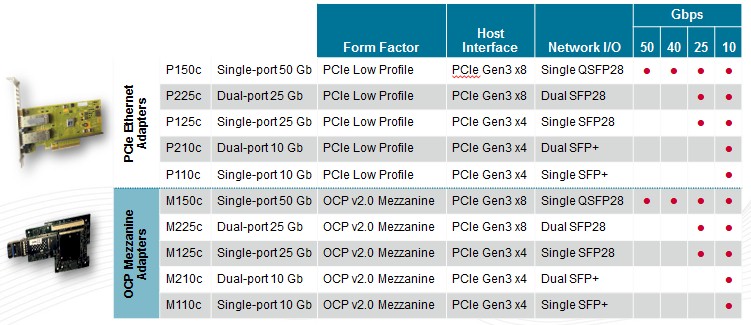

Jim Dworkin, director of product marketing for controllers at Broadcom, tells The Next Platform that the company will be offering NetXtreme cards with 25 Gb/sec and 50 Gb/sec ports in both standard PCI-Express 3.0 form factors and in special versions that plug into mezzanine slots on systems compatible with Open Compute designs created by Facebook and its hardware friends.

Broadcom does not play in the white box server market, but rather focuses on getting its adapters adopted by the tier one server makers – including Hewlett-Packard, Dell, Lenovo, IBM, and others – and within that space, Broadcom has a 65 percent to 70 percent market share on adapters and adapter chips. This is similar to the share that Broadcom’s “Trident” and “Dune” families of Ethernet switch ASICs have in datacenter L2/L3 switching today, and both are good reasons for Avago Technologies to be spending a whopping $37 billion to acquire Broadcom and take its name. (Avago wants to take on Intel in the datacenter, including server processors based on the ARM architecture that are currently being developed by Broadcom.)

Broadcom doesn’t talk about it, but it no doubt ships silicon to the handful of hyperscalers that all of the 25G players are courting as they launch switches and adapters based on a technology that the IEEE initially rejected. Then Google and Microsoft made it clear there were sound technical and economic reasons to gear down the technology created for 100 Gb/sec networking to support 25 Gb/sec and 50 Gb/sec links on servers and 50 Gb/sec and 100 Gb/sec ports on switches.

To sum it up simply, 10 Gb/sec and 40 Gb/sec switching is based on 10 Gb/sec switching lanes, while the 25G standard is based on 25 Gb/sec lanes. Products are not yet in the field, and pricing has not yet been set for either switches or adapters, but generally speaking, a 25 Gb/sec port will offer 2.5X the bandwidth of a 10 Gb/sec port (whether on the server or the switch) at around 1.5X the cost in half the power envelope per port. With hyperscalers being both space and power constrained, you can see why they were not enthusiastic about 40 Gb/sec server adapters when their 10 Gb/sec adapters ran out of gas. They don’t want multiple adapters, which add cost and heat and complexity. They want faster adapters that cost less per bit.
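The per-bit economics implied by those rough ratios can be worked out in a few lines. This is a back-of-the-envelope sketch rather than anyone's actual pricing: it assumes the 2.5X bandwidth, 1.5X cost, and half-power figures quoted above all apply per port, and the function name is our own.

```python
def relative_per_bit(bandwidth_ratio, cost_ratio, power_ratio):
    """Return (cost per bit, power per bit) relative to a 10 Gb/sec port."""
    return cost_ratio / bandwidth_ratio, power_ratio / bandwidth_ratio


# Ratios quoted in the text for a 25 Gb/sec port versus a 10 Gb/sec port.
# These are general expectations, not published prices or power specs.
cost_per_bit, power_per_bit = relative_per_bit(
    bandwidth_ratio=2.5, cost_ratio=1.5, power_ratio=0.5
)

print(f"cost per bit:  {cost_per_bit:.2f}x")
print(f"power per bit: {power_per_bit:.2f}x")
```

Under those assumptions, a 25 Gb/sec port moves each bit for roughly 60 percent of the cost and 20 percent of the power of a 10 Gb/sec port, which is the kind of math that matters to a space and power constrained hyperscaler.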

As we have pointed out in the past, the adoption of 10 Gb/sec Ethernet in the enterprise has been slower than many expected and certainly slower than anyone wants, but we think that, if the price is right, adoption of 25 Gb/sec and 50 Gb/sec on the server and 50 Gb/sec and maybe even 100 Gb/sec on the switch could be faster than many expect. (Dworkin says he knows of no hyperscale or cloud customers who are contemplating 40 Gb/sec switching in their future.) If the pricing is right for hyperscalers, it will no doubt be right for enterprises looking to get the lowest cost per bit transmitted. Broadcom concurs with this theory, even if some of its own customers, who are building switches, do not think this way.

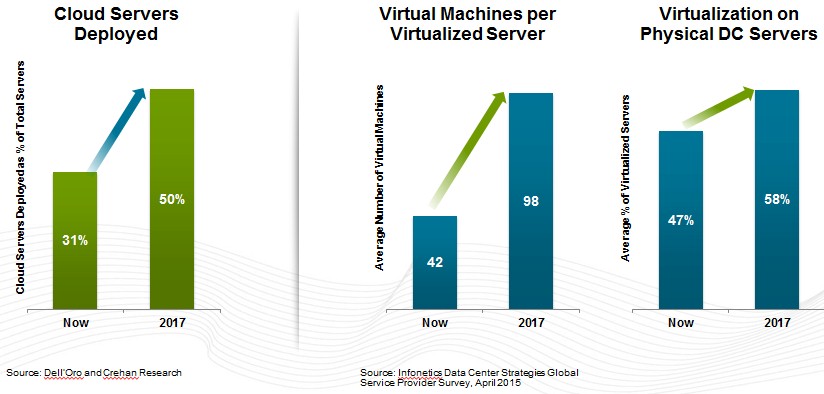

“There is a waterfall effect going on,” says Dworkin. “The mega-scale datacenter operators are creating such a rapid maturity in the ecosystem around scale-out and efficiency that is now cutting over to the enterprise space. Azure is a public cloud offering, but Microsoft has a private cloud stack, too, around Windows Server. There is a natural overlap between cloud and enterprise, and what I am seeing is not only is hyperscale growing really fast, but requirements are cascading down to enterprises that can now take advantage of it.”

We have suggested that certain price-sensitive HPC shops might be looking to 25G Ethernet instead of InfiniBand or 10 Gb/sec Ethernet. Anyone who was thinking about 40 Gb/sec Ethernet on the port or switch has been eagerly awaiting 50 Gb/sec Ethernet for more than a year, and if they can hold out until products start shipping later this year or early next, they are going to end up with better value on all fronts.

Outside of the hyperscalers, cloud builders, and potential HPC shops, Broadcom expects that blade server architectures will move to 25G switching fairly quickly, because 25G takes a lot fewer lanes in the midplane of the blade server chassis to deliver the same networking bandwidth and a lot less power, too.
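The lane savings are easy to quantify. The sketch below is our own simplification: it just divides a target bandwidth by the per-lane rate and rounds up, ignoring encoding overhead and the fixed lane groupings that real port standards use.

```python
import math


def lanes_needed(target_gbps, lane_gbps):
    """Number of SERDES lanes required to carry target_gbps (rounded up)."""
    return math.ceil(target_gbps / lane_gbps)


# Compare 10 Gb/sec lanes against 25 Gb/sec lanes for two target bandwidths.
for target in (50, 100):
    old = lanes_needed(target, 10)
    new = lanes_needed(target, 25)
    print(f"{target} Gb/sec: {old} lanes at 10G vs {new} lanes at 25G")
```

By this rough count, 100 Gb/sec of bandwidth through a midplane takes four 25G lanes instead of ten 10G lanes.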

Broadcom is also anticipating that four-socket servers, which are often used for heavy virtualization workloads as well as for databases, will move to 25 Gb/sec on the server side fairly quickly. Such machines can swamp a 10 Gb/sec adapter today, and again, companies have to double up the cards to get enough I/O to balance against the increasing number of cores and threads in the box.

Take A Look Inside the NetXtreme Chip

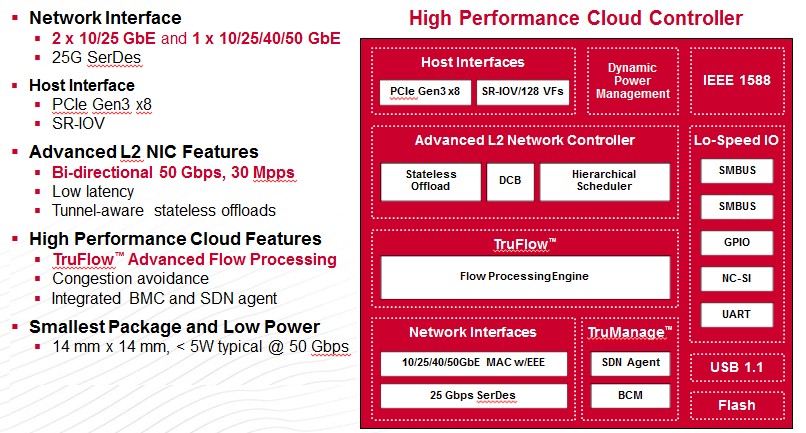

The NetXtreme adapter chip is technically known as the BCM57300, and it has some interesting features aside from just delivering 25 Gb/sec and 50 Gb/sec links out of servers into the network. But the basic feeds and speeds are interesting, too.

The NetXtreme C-Series Ethernet adapter chip is etched using the same 28 nanometer process at Taiwan Semiconductor Manufacturing Corp that Broadcom uses to create its companion “Tomahawk” switch chips. In fact, the same 25 Gb/sec SERDES circuits are used on both the switch and adapter ASICs. The chip can support two ports running at 25 Gb/sec speeds (with a fallback to 10 Gb/sec for backwards compatibility) or one port running at 50 Gb/sec speeds with a gear down to 25 Gb/sec or a fallback to 10 Gb/sec or 40 Gb/sec if it links to switch ports running at those speeds.

The chip can be implemented on PCI-Express 3.0 adapters with x8 slots, and it supports Single Root I/O Virtualization (SR-IOV), which allows a hypervisor to carve up a physical network interface into multiple virtual interfaces for its virtual machines. The chip supports up to 128 virtual functions with this iteration of SR-IOV. With small packet sizes (64 byte), the chip can push 30 million packets per second, according to Dworkin, and the round trip latency – going from the CPU through the adapter, out across the network to another adapter, up to an adjacent CPU, and then back through the stack in reverse back to the original CPU – is about 1.5 microseconds. Without naming names, Dworkin says that the latest NetXtreme is considerably smaller than competitive adapter chips that support 40 Gb/sec, 25 Gb/sec, or 50 Gb/sec protocols and that, at around 4 watts pushing data at the full 50 Gb/sec, it consumes around 25 percent less power. This matters to hyperscalers, who have tens of thousands of servers in a datacenter. Every watt adds up.
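To put the 30 million packets per second figure in context, it helps to compare it with the theoretical small-packet line rate at each link speed. The sketch below is our own arithmetic, not a Broadcom benchmark: it uses the standard Ethernet per-frame wire overhead (preamble, start-of-frame delimiter, and minimum inter-frame gap), and the text does not say which port configuration the quoted figure was measured on.

```python
# Standard Ethernet per-frame overhead on the wire: 7-byte preamble,
# 1-byte start-of-frame delimiter, and 12-byte minimum inter-frame gap.
WIRE_OVERHEAD_BYTES = 7 + 1 + 12


def line_rate_pps(link_gbps, frame_bytes=64):
    """Theoretical maximum frames per second at a given link speed."""
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_gbps * 1e9 / bits_per_frame


def wire_gbps(pps, frame_bytes=64):
    """Link bandwidth consumed on the wire by a given frame rate."""
    return pps * (frame_bytes + WIRE_OVERHEAD_BYTES) * 8 / 1e9


quoted_pps = 30e6  # figure attributed to Dworkin in the text

print(f"30 Mpps of 64-byte frames uses {wire_gbps(quoted_pps):.2f} Gb/sec on the wire")
for speed in (10, 25, 50):
    print(f"{speed} Gb/sec line rate: {line_rate_pps(speed) / 1e6:.2f} Mpps")
```

By this math, 30 million 64-byte packets per second is about twice what a 10 Gb/sec link can carry at all, and it occupies roughly 20 Gb/sec of wire bandwidth.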

Perhaps the most interesting new feature on the NetXtreme adapter chip is hardware acceleration for virtual switches, which is one of a number of features that are embedded in the TruFlow flow processing engine at the heart of the chip.

With modern virtualized infrastructure, the servers are configured not only with a hypervisor, which abstracts compute and memory, but also with a virtual switch, which as the name suggests provides a virtual switching layer to link virtual machines and physical switches that are out on the network. Back in the days when virtualization was young and live migration of VMs was not yet available, there was no need for virtual switches and the networking overhead was modest. On a Xeon-class machine of 2005 with Gigabit Ethernet networking, something on the order of 15 percent of the server’s CPU cycles were dedicated to networking overhead. By 2010, with more sophisticated “Nehalem” Xeons and a move towards virtual switching, that overhead rose to 25 percent of total CPU capacity on the machine. Broadcom reckons that with current “Haswell” Xeon machines matched to 25G adapters, the overhead of the networking portions of virtualization will be around 33 percent. More appallingly, with the “Skylake” Xeons coming out two generations from now paired with 50G adapters, the overhead will reach 45 percent of CPU.

Some of this overhead just comes from having to deal with much more bandwidth and having packets come at the server much faster. No matter the cause, this overhead is obviously unacceptable, and the TruFlow engine can take the dataplane of the virtual switches running on the servers and move it onto the adapter card, thus freeing up that CPU capacity to get back to doing real work. Broadcom will support the Open vSwitch virtual switch when the NetXtreme adapters first come out and is working with Microsoft, VMware, and Cisco Systems to support their respective virtual switches. The bits of code that Broadcom created to support Open vSwitch offload will be contributed back to the open source community, by the way.

The TruFlow engine also has congestion detection and avoidance features, which sync up with Broadcom’s Tomahawk and “Dune” switch chips. (The Dune chips, which are aimed at slightly different kinds of aggregation and core switches in the datacenter, were updated by Broadcom back in March.) Broadcom says these congestion avoidance circuits are up to 5,000 times faster than congestion routing algorithms implemented in software on switches.

Depending on the nature of the workload, this virtual switch offload and other offload features of the NetXtreme chip can improve the performance of server workloads by anywhere from 33 percent to 50 percent, says Dworkin.
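That range lines up with the CPU overhead figures cited earlier. The sketch below is our own arithmetic, not Broadcom's stated method: it makes the simplifying assumption that offload hands all of the networking overhead back to the workload, which is the best case.

```python
def gain_from_offload(overhead_fraction):
    """Relative increase in CPU available for workloads if the networking
    overhead is removed from the host CPU entirely (best-case assumption)."""
    return 1.0 / (1.0 - overhead_fraction) - 1.0


# Overhead figures quoted in the text, keyed by the scenario they describe.
overheads = {
    "Nehalem, virtual switching": 0.25,
    "Haswell, 25G adapters": 0.33,
    "Skylake, 50G adapters": 0.45,
}

for scenario, overhead in overheads.items():
    print(f"{scenario}: {gain_from_offload(overhead):.0%} more CPU for workloads")
```

Reclaiming a 25 percent overhead yields about a third more usable CPU, and reclaiming 33 percent yields just under half, which brackets the 33 percent to 50 percent range Dworkin cites.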

The trick for Broadcom will be pricing its adapters and switch ASICs so as to not stymie the move to 10 Gb/sec in the enterprise market that it is chasing with its just-updated Trident-II+ chips – most enterprises are still poking along with Gigabit Ethernet, although that is hard to believe – but at the same time keeping 25G products cheap enough to encourage widespread and rapid adoption. Competition between chip makers and pressure from customers will determine those price points, and no one really knows for sure what is going to happen. It will be fun to watch. Our money is on 25G for the large enterprises, HPC centers, hyperscalers, and cloud builders that we care about here at The Next Platform.

SR-IOV is _Single_ Root Input/Output Virtualization (not _Serial_), FWIW

Yup. Just a brain crossed circuit. Thanks for the catch.

I do not agree that https://www.wildcardcorp.com/blog/is-it-worth-investing-more-money-in-your-network-card-new

– Cheree