Intel has spent a considerable sum of money acquiring switching technologies over the past several years as well as doing a whole bunch of its own engineering so it can branch out in a serious way into the $25 billion networking business and keep its flagship Data Center Group growing and profitable. Networking can account for as much as a third of the cost of a cluster, so there is a lot of revenue and profit to chase.

The first fruits of Intel’s networking acquisitions and labors – the company’s Omni-Path architecture – will come to market later this year, but details are emerging ahead of the launch as they always do.

As is the case with any technology vendor looking at a long time horizon between when a project starts and when a product launches, Intel has been providing information in dribs and drabs about both its “Knights” family of massively parallel processors and co-processors and its Omni-Path interconnect, both of which are key components of the chip maker’s high performance computing stack and which will no doubt see broader deployment outside of supercomputing centers as enterprises and governments wrestle with complex analytics, simulations, and models. At the ISC15 supercomputing conference in Frankfurt this week, Intel is disclosing some information about the Omni-Path switches, adapter cards and ports that will eventually be etched onto its Xeon and Xeon Phi processors. We have also been doing some digging of our own and have some additional insight into what Omni-Path is and what it isn’t.

When Intel first disclosed that it was working on Omni-Path at last year’s ISC event, the company did not provide a lot of detail on the technology, which was actually being called Omni Scale Fabric at the time and which had its brand changed a few months later. What Intel said a year ago was that Omni-Path was a complete next-generation fabric and that it would be making PCI-Express adapter cards, edge switches, and director switches that supported the new networking scheme as well as perfecting its silicon photonics to interconnect switches.

Intel said that Omni-Path was based on technology it got from its Cray and QLogic acquisitions, but when pressed about it, Charles Wuischpard, who had just came on board to be vice president and general manager of the Workstations and High Performance Computing division in the Data Center Group, did not say much more about it except that it was not InfiniBand and it was not, as some had been expecting, based on a variant of Cray’s “Aries” interconnect. Wuischpard said that Intel was providing customers using its 40 Gb/sec True Scale InfiniBand switches and adapters an upgrade path, and said that Omni-Path was going to allow applications tuned to run on QLogic InfiniBand to run atop Omni-Path.

That sounded like a neat trick to us, but it is also one that its supercomputing partner mastered a long time ago with its Cray Linux Environment, and specifically with a feature called Cluster Compatibility Mode for Cray’s “SeaStar” interconnect used on its XT family of supercomputers. Back in 2010, with this compatibility mode, Cray added some code to its software drivers for its proprietary interconnect that made it look like it is a normal Ethernet driver for running the TCP/IP stack on a SUSE Linux machine. What that means is that applications running on a normal Linux cluster could be dropped onto an XT machine and actually work without having to modify the software to understand the eccentricities of the SeaStar interconnect. A year later, Cluster Compatibility Mode was offered for the OpenFabrics Enterprise Distribution for InfiniBand to do the same thing: Let applications compiled for parallel InfiniBand clusters run atop SeaStar.

Our point is that when Intel acquired the Cray “Gemini” and “Aries” interconnect businesses for $140 million back in April 2012, it got a team that had experience building some of the lowest latency and highest bandwidth networks ever created, and one that also knew how to make one type of network look like another one. Only a few months earlier, Intel paid $125 million to snap up the InfiniBand adapter and switch business of QLogic. And, we hear, these two assets are being combined in clever ways to create the Omni-Path Architecture.

The details are still a bit thin – Intel plans to reveal more in the coming weeks and months – but what we have heard is that Omni-Path takes the low-level Cray link layer (a part of the network stack that is very close to the switch and adapter iron) and marries it to the Performance Scaled Messaging (PSM) libraries that QLogic created to accelerate the Message Passing Interface (MPI) protocol commonly used in parallel applications in the HPC world. The word on the street is that the low-level Cray technology scales a lot further than what QLogic had on its InfiniBand roadmap, and the wire transport and host interfaces are better with Omni-Path, too. The preservation of that PSM MPI layer from QLogic’s True Scale InfiniBand (probably done with the assistance of Cray intellectual property and engineers working at the chip maker) are what helps make Omni-Path compatible with True Scale applications.

We look forward to learning a lot more about how this all works and what effect Omni-Path will have on high performance networking, whether it is in HPC, enterprise, cloud, or hyperscale settings.

In a conference call ahead of the ISC conference, Wuischpard revealed a few more tidbits about the Omni-Path interconnect, which coupled with a few other past statements and the information revealed above begin to give us a more complete picture.

First, some code-names. We already knew that “Storm Lake” was a code-name for the Omni-Path network ports being put into future Xeon and Xeon Phi processors. As it turns out, this appears to be the code-name for the overall Omni-Path architecture, not any particular product. And technically, that is the Omni-Path Architecture 100 series, to delineate it from future iterations that will presumably be called the 200 series and run at 200 Gb/sec like the InfiniBand from Mellanox Technologies will when it comes out supporting the HDR speed bump sometime in late 2017 or early 2018. The switch chip that implements the 100 Gb/sec Omni-Path protocol is code-named “Prairie River,” and the chips that are used on host fabric interfaces (whether they are implemented on an adapter card or etched onto an Intel chip) are developed under the code name “Wolf River.”

“The message from us ahead of launch is that we are gaining momentum, that we are open for business today, and we have actually already won quite a few opportunities that we will be installing later this year and into next year,” says Wuischpard. “We have been sampling with all of the major HPC and OEM vendors.”

Intel has over 100 switch and system vendors (presumably counting servers and storage separately) that will be ready to deploy Omni-Path in their products at launch, and Wuischpard said that Intel had “quite a large pipeline” of deals that are being priced and bid right now. More specifically, he said that across the deals currently done or in the pipeline, Omni-Path was already covering 100,000 server nodes. Two deals involving Omni-Path networking that have been publicly announced are at Pittsburgh Supercomputing Center in the United States and Fudan University in China.

First customer shipments of Omni-Path gear will come in the fourth quarter of this year, Wuischpard confirmed, but Intel is hoping to close a lot more orders between now and launch day, which we expect will be around the SC15 supercomputing conference in November down in Austin Texas.

The PCI-Express adapters for the Omni-Path interconnect will come to market first along with switches, Wuischpard said, and we know that one version of the impending “Knights Landing” processor will feature two Omni-Path ports. Intel has also said in the past that a future Xeon processor based on its 14 nanometer technologies – which could mean either a “Broadwell” or “Skylake” part – would also feature Omni-Path ports on the die. We know for sure now that the future Skylake Xeons will have the integrated Omni-Path ports, as The Next Platform reported back in late May, but what we do not know is if the Broadwell Xeons, which we now hear will roll out early next year rather than at Intel Developer Forum, will have Omni-Path ports. If Intel wants to drive Omni-Path switch sales, it can do so with adapter cards, but integrated ports might be even better.

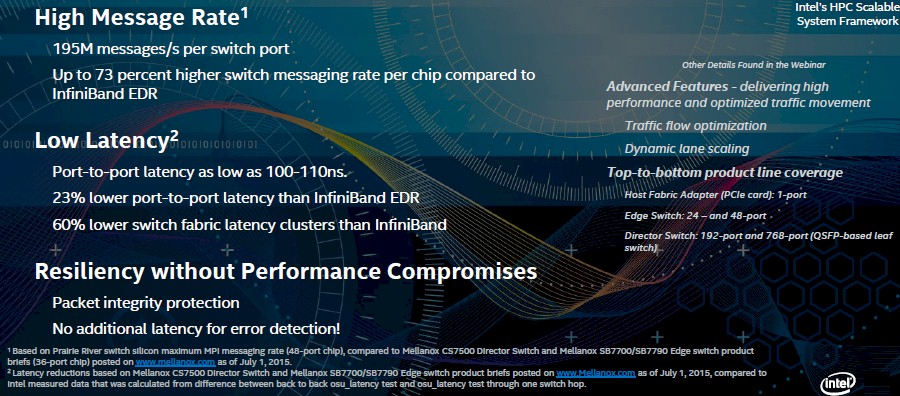

Wuischpard gave out some feeds and speeds about Omni-Path and how it would stack up to EDR InfiniBand shipping from Mellanox in the chart above. We have no doubt that Mellanox will want to have a rebuttal about these figures and what they mean once both sets of products are in the field. The Prairie River switch ASIC can drive 195 million MPI messages per second per port, according to Intel’s tests, which it claims is a 73 percent higher switch messaging rate per chip compared to the Mellanox Switch-IB chip that drives its EDR InfiniBand products. Intel is also saying that it can deliver a port-to-port hop latency of between 100 nanoseconds and 110 nanoseconds, which it says is 23 percent lower than EDR InfiniBand, and moreover that its latency across the Omni-Path Fabric was 60 percent lower than the Mellanox alternative.

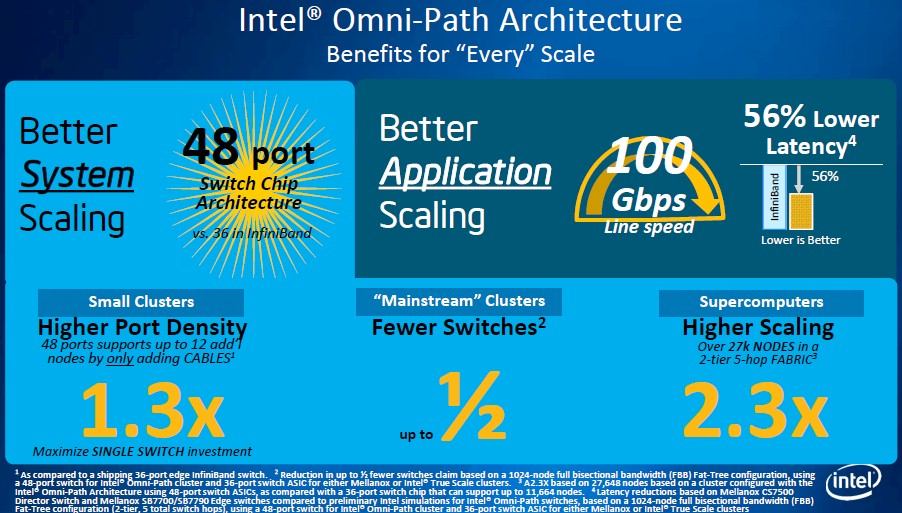

Last fall, when Intel was talking about Omni-Path, it provided some comparisons between it and EDR InfiniBand for clusters with 1,024 servers nodes configured in a fat tree topology. Both Intel True Scale and Mellanox SwitchX gear have 36 ports per switch, and obviously with the higher port density of the Omni-Path switches, which will come in a 48-port variant, customers will be able to buy fewer switches and not have as many stranded ports in their racks. That’s 30 percent more ports per switch, and across that 1,024 node cluster, with a combination of director and edge switches, Intel can deploy half as many switches to do the job, it says. Interestingly, the extra ports in its switches also allow it to scale an Omni-Path network up to a maximum of 27,648 nodes, which is a factor of 2.3X higher than the 11,664 nodes that Intel says is the top limit for InfiniBand switches with only 36 ports.

The other bit of information that Intel has let out about Omni-Path is that it will have single port adapter cards, plus a pair of edge switches for inside of racks or to be used as spines and a pair of director switches for aggregating across racks. The edge switches will come with 24 or 48 ports, and the director switches will come with 192 or 768 ports.

Intel has not said anything about pricing for Omni-Path, and it will be very interesting indeed to see how it stacks up against EDR InfiniBand from Mellanox and 100 Gb/sec Ethernet alternatives.

What does the switch have to do with MPI message rate? Isn’t that governed by the endpoint adapter and software?