The broader adoption of GPU acceleration for workloads in the traditional high performance computing segment and expansion in new areas such as deep learning are driving revenues and profits at graphics chip maker Nvidia.

The company does not report sales of its Tesla GPU compute business separately in its financial reports, but as this business becomes more material Nvidia is talking more concretely about its business in the datacenter as distinct from the money it makes peddling GPUs and intellectual property relating to devices.

In conjunction with the GPU Technology Conference back in March, Nvidia diced and sliced its numbers a little bit for Wall Street and started talking about the five major segments that it is focusing on, and this provides a little more insight, however obliquely, into how its GPU compute business is doing. Drawing the lines is not always easy, because clusters can use regular GPUs or Tesla accelerators, which have features not found in Quadro GPU cards. However, Quadro GPUs support Nvidia’s CUDA parallel programming environment and are often used for single-precision workloads like machine learning, seismic analysis, or signal processing.

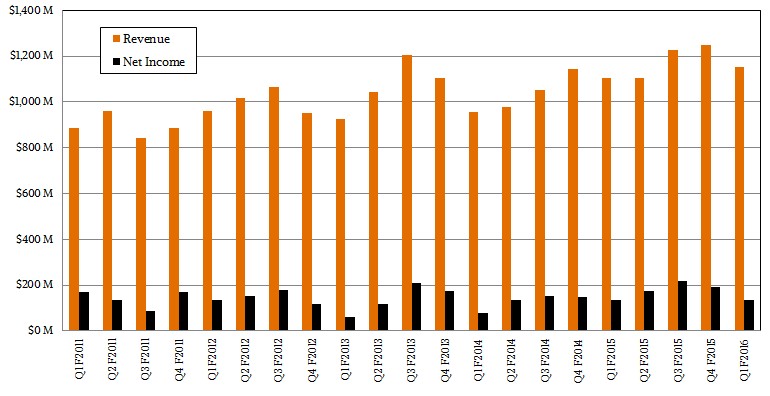

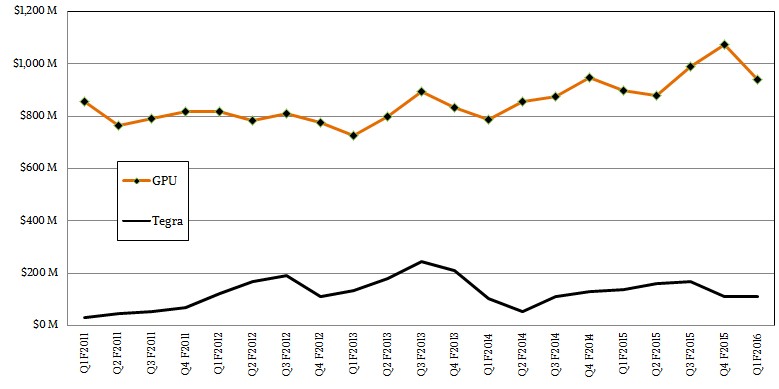

In the first quarter of fiscal 2016 ended in April, Nvidia’s overall revenues were $1.15 billion, up 4 percent year-on-year, but net income was down a smidgen to $134 million. In the quarter, GPU revenues were up 5 percent to $940 million, and Tegra ARM processor sales were up 4 percent to $145 million. The company also booked its quarterly $66 million in intellectual property royalties from rival Intel, which comes as the result of a lawsuit between the two companies that settled a few years back.

Nvidia said on a call with Wall Street analysts that sales of Tesla GPUs “increased strongly,” and were driven by large project wins with cloud service providers (what we here at The Next Platform call hyperscalers, to refer specifically to the scale of their web-based services and to distinguish them from those who are building public clouds for others to use). Baidu, Google, Facebook, Yahoo, Microsoft, and Twitter have all said that they are deploying Nvidia GPUs to massively accelerate their machine learning applications, which are used to classify videos and photos or to do speech and text recognition, among other things. Deep learning was the central theme of the GPU Technology Conference, and Nvidia explained how its future “Pascal” family of Tesla GPUs and its NVLink interconnect for lashing up to eight GPUs together and to processors would help accelerate deep learning applications by a factor of 10X compared to current Quadro cards based on “Maxwell” GPUs. (There are no Maxwell variants of the Tesla cards, so Nvidia cannot make that comparison.)

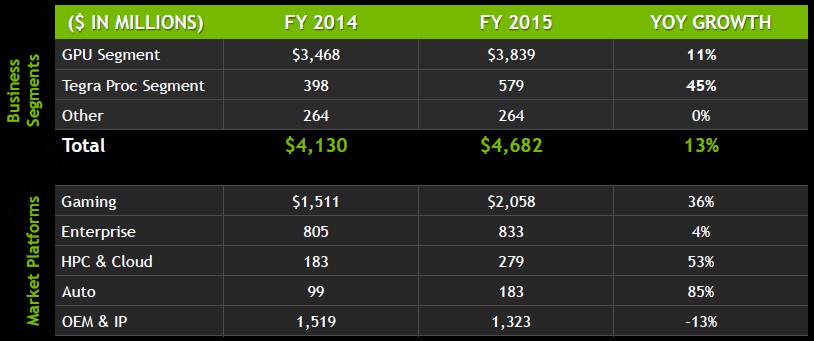

Here is how Nvidia sees the markets it is chasing. The gaming industry that got Nvidia co-founder Jen-Hsun Huang into this business in the first place is still a growing and vibrant business, with a $5 billion opportunity for GPU sales within an overall $100 billion gaming industry. Nvidia and AMD fight hard and both win their share of GPU sales in gaming, but Intel, Qualcomm, Imagination Technologies, and others have GPUs as well. Processing and GPUs for the auto industry account for another $5 billion market that Nvidia is chasing, which is a subset of the $35 billion overall computing segment of the vast auto industry. Another segment is enterprise visualization, which Nvidia often abbreviates to enterprise but which is not all sales to enterprises (don’t get confused). This enterprise visualization market, Nvidia reckons, has about $1.5 billion in potential sales to professional designers and another $5 billion for centralized datacenter tools based on products like its GRID virtualized GPU cards. And finally, there are sales to the traditional HPC market and cloud service providers that are accelerating their applications with Tesla GPU cards. This latter bit is a $5 billion opportunity. Add it all up, and Nvidia is chasing markets worth $21.5 billion.
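For readers who want to check the addition, here is a minimal sketch in Python that totals the five opportunity figures quoted above and shows what share of the total each represents. The dictionary labels are our own shorthand, not Nvidia's segment names, and the figures are the rounded ones from the paragraph above.

```python
# Addressable-market figures quoted in the article, in billions of US dollars.
# The keys are illustrative labels, not official Nvidia segment names.
markets = {
    "gaming GPUs": 5.0,
    "automotive": 5.0,
    "enterprise visualization (designers)": 1.5,
    "enterprise visualization (datacenter, GRID)": 5.0,
    "HPC and cloud (Tesla)": 5.0,
}

total = sum(markets.values())

# Print each segment with its size and its share of the combined opportunity.
for name, size in markets.items():
    print(f"{name:45s} ${size:4.1f}B  {size / total:6.1%}")
print(f"{'total':45s} ${total:4.1f}B")
```

On these numbers, the Tesla opportunity is a bit under a quarter of the total that Nvidia says it is chasing.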

In the presentations to Wall Street at the GPU Technology Conference back in March, Shanker Trivedi, vice president of enterprise sales and industry business development at Nvidia, said that Tesla accelerator sales hit $279 million for the fiscal 2015 year, which is the same number cited by CFO Colette Kress as the revenue for the HPC and Cloud division in fiscal 2015. So we now know that when Nvidia says HPC and Cloud, it means Tesla. (But there are plenty of accelerated workloads running on Quadro cards and then there are the GRID products, too. So don’t think this is all of the datacenter business that Nvidia has.) That Tesla business was up 53 percent from $183 million in fiscal 2014.
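The growth rate can be sanity-checked from the two annual figures. The sketch below is our own arithmetic, not anything Nvidia published, and `yoy_growth` is a hypothetical helper name. Because the inputs are rounded to the nearest million, the result lands slightly below the quoted 53 percent; the gap presumably comes from rounding in the reported figures.

```python
def yoy_growth(current: float, previous: float) -> float:
    """Return year-over-year growth as a percentage."""
    return (current - previous) / previous * 100.0


# Tesla (HPC and Cloud) revenue in millions of US dollars, as quoted above.
tesla_fy2014 = 183.0
tesla_fy2015 = 279.0

growth = yoy_growth(tesla_fy2015, tesla_fy2014)
print(f"Fiscal 2015 Tesla growth from rounded figures: {growth:.1f} percent")
```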

Up to the point of the conference, Nvidia had sold an aggregate of 576 million CUDA-capable GPUs, up from a base of 100 million devices in 2008 when CUDA and GPU acceleration really took off. Huang said back at the conference that Nvidia had shipped a total of 450,000 Tesla accelerators since they were launched back in 2008, when the company sold 6,000 units. The CUDA environment has been downloaded an incredible 3 million times, up from the 150,000 downloads it racked up between its launch in 2006 and 2008. CUDA has had 1 million downloads in the past 18 months alone, Kress said on the call. There are now well over 300 HPC, deep learning, and other applications that have been accelerated by Nvidia GPUs, and these, along with a slew of homegrown code, are driving this HPC and Cloud division business.

In the first quarter of fiscal 2016, HPC and Cloud division revenue (again, this means Tesla) rose by 57 percent to $79 million. Don’t be mistaken in thinking you can multiply that number by four to get an annualized run rate, because hyperscalers and HPC centers make big deals at certain points in the Intel processor cycle, except when they have no choice but to buy gear right now to support a workload. This means the revenue rises and falls along with an Intel Xeon processor launch a lot of the time. In the quarter, the enterprise visualization segment had $190 million in sales, down 4 percent thanks in large part to the strength of the US dollar and weakness in foreign currencies that translate into dollars on Nvidia’s quarterly books.
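To make the run-rate caveat concrete, here is a rough sketch that backs out the implied year-ago quarter from the 57 percent growth figure and shows what the naive multiply-by-four calculation would give. The variable names are ours, the inputs are the rounded figures quoted in this article, and the naive run rate is shown only to illustrate the calculation being warned against, not as a forecast.

```python
# Figures quoted in the article, in millions of US dollars.
q1_fy2016 = 79.0        # HPC and Cloud (Tesla) revenue, first quarter of fiscal 2016
growth_pct = 57.0       # reported year-over-year growth for that quarter
fy2015_total = 279.0    # Tesla revenue for all of fiscal 2015

# Back out the year-ago quarter implied by the growth rate.
implied_q1_fy2015 = q1_fy2016 / (1 + growth_pct / 100)

# The naive annualization: multiply one quarter by four. This ignores the
# lumpiness of hyperscale and HPC deals described above.
naive_run_rate = q1_fy2016 * 4

print(f"Implied Q1 fiscal 2015 revenue: about ${implied_q1_fy2015:.0f} million")
print(f"Naive annualized run rate:      ${naive_run_rate:.0f} million")
print(f"Fiscal 2015 actual:             ${fy2015_total:.0f} million")
```

The multiply-by-four figure comes out well above the fiscal 2015 total, but because deals cluster around processor launches, a single quarter says little about where the full year will land.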

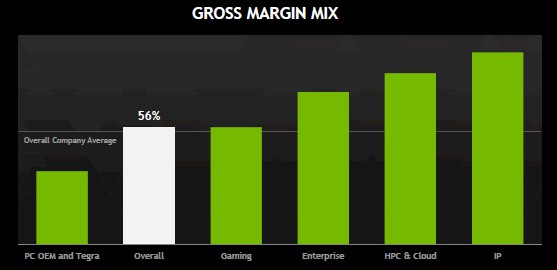

We think that the gaming and enterprise visualization segments help make investment in Tesla possible, and as it turns out, if you look at the gross margins, the extra goodies that are added in with Tesla help bolster Nvidia’s bottom line, too. Gross margins are considerably higher on Tesla than on regular gaming and visualization products, as you can see from the adjacent chart for fiscal 2015. The investment that Nvidia has made over the past decade has helped it create a platform for parallel processing that is accelerated by GPUs, one that is far larger than what rival AMD has put together and that rivals the accelerator business Intel has engineered with its first two generations of Xeon Phi cards.

The interesting bit is what happens when the standalone “Knights Landing” Xeon Phi processors are available later this year, complete with interconnects and not hanging off a CPU as a coprocessor to do parallel work. Nvidia’s Project Denver sought to add ARM cores to Tesla GPUs (and perhaps interconnects, too) to do a similar thing, but that project was abandoned. Nvidia will certainly sell Tesla cards as adjuncts to Xeon processors from Intel, and it has a tight partnership with IBM through the OpenPower Foundation to couple Tesla GPUs to future Power processors with its NVLink interconnect and build clusters of hybrid CPU-GPU nodes with high-bandwidth InfiniBand networks from Mellanox Technologies. But what Nvidia could really use is NVLink ports on Xeon processors, too, and this seems very unlikely to happen.
