The so-called industry standard server, by which most people mean a machine based on an X86 processor and generally one made by Intel, has utterly transformed the datacenter. The ubiquity and compatibility of X86 machinery has in many ways radically simplified the infrastructure of computing and reduced the market to a few handfuls of server suppliers, with Linux or Windows as the operating system of choice for the vast majority of workloads.

If some major hyperscale datacenter operators and networking upstarts have their way, it will not be too long before we talk about industry-standard networking hardware, with common hardware and only a few network operating systems – very likely open source and therefore malleable by vendors and customers alike.

Google and Amazon Web Services have created their own networking gear and network operating systems, and social media giant Facebook has made no secret about its desire to push the industry to create open switches through its Open Compute Project. Now Hewlett-Packard is getting into the game, which Quanta, Penguin Computing, Dell, and Accton Technology/Edge-Core have already been playing in for a while. Cumulus Networks, Pica8, and Big Switch Networks are keen on supplying operating systems for open switches, and Juniper Networks has recently announced that it has ported its Junos network operating system to whitebox switches made by Alpha Networks. (That is akin to Sun Microsystems porting Solaris to an X86 server but keeping it closed source.) Broadcom, Intel, Mellanox Technologies, and Accton have all contributed open switch designs to the Open Compute Project. Suffice it to say, some switches will be more open than others.

If server history is any guide, it won’t be long before both network ASICs and network operating systems are largely commoditized and fewer in number, and the vendors supplying the chips and OS software will be the only ones making any money while others largely resell boxes and bits with some value add on top.

Open For Business

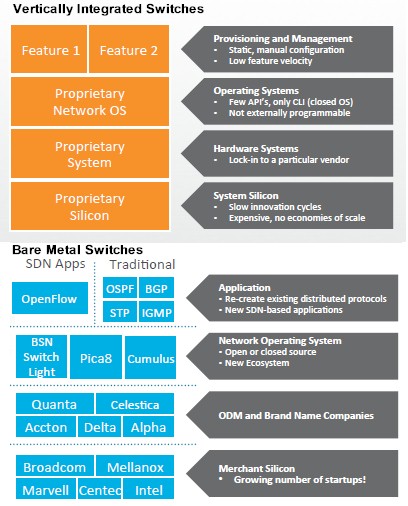

As far as customers are concerned, what open networking means is that the switch hardware and switch software can be developed independently from each other and, if the network operating system is open source, tweaked by customers as they see fit. Customers can drive innovation at a pace they set rather than accept the one set by the vendors. This is particularly important for hyperscale datacenter operators, who do not require the broad feature sets that address the needs of the entire IT industry but who do like to have continuous development of their systems and networks because they are operating at the upper echelons of scale. Equally important in open networking is the idea that companies can run multiple network software stacks on their hardware, choosing different stacks for different jobs, much as they choose Linux, Windows, or Unix on X86 iron and can change that choice at will.

There is a lot of money at stake here as end user companies make the inevitable shift from closed, appliance-style switching to open networking.

Cliff Grossner, directing analyst for datacenter, cloud, and SDN research at Infonetics Research, pegs the worldwide datacenter switching market at $7.8 billion in 2013, with a total of 29.4 million ports of various speeds shipped that year. The market is growing steadily, and using estimates for the fourth quarter of last year, Grossner reckons a total of $8.2 billion in datacenter switches were sold in 2014, comprising 35 million ports. That’s 19 percent growth in port counts but only 5.1 percent revenue growth, and one of the market forces holding down revenues is the shift to open networking.
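The gap between port growth and revenue growth is easy to check. This is a minimal sketch that uses only the figures Grossner cites; the revenue per port it computes is a derived, blended average across every port speed, not a number Infonetics published.

```python
# Datacenter switching market figures as cited by Grossner (Infonetics Research).
# The 2014 numbers include estimates for the fourth quarter.
MARKET = {
    2013: {"revenue_usd_b": 7.8, "ports_m": 29.4},
    2014: {"revenue_usd_b": 8.2, "ports_m": 35.0},
}


def pct_growth(old: float, new: float) -> float:
    """Year-over-year growth, in percent."""
    return (new - old) / old * 100.0


port_growth = pct_growth(MARKET[2013]["ports_m"], MARKET[2014]["ports_m"])
revenue_growth = pct_growth(MARKET[2013]["revenue_usd_b"], MARKET[2014]["revenue_usd_b"])

# Billions of dollars divided by millions of ports gives thousands of dollars per port,
# so scale by 1,000 to get a blended average in dollars per port (all speeds lumped together).
revenue_per_port = {
    year: figures["revenue_usd_b"] / figures["ports_m"] * 1000.0
    for year, figures in MARKET.items()
}

print(f"port growth:    {port_growth:.1f}%")
print(f"revenue growth: {revenue_growth:.1f}%")
for year, dollars in revenue_per_port.items():
    print(f"{year}: about ${dollars:.0f} per port")
```

Run as-is, it prints 19.0 percent port growth and 5.1 percent revenue growth, and the implied blended average drops from about $265 per port in 2013 to about $234 in 2014, which is the same squeeze on revenues expressed per port.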

“It has come to the point that it is pretty clear that a portion of the market is going to go this way,” Grossner tells The Next Platform. “The incumbents have to change their businesses, and they understand that the future is software, not hardware. Just like with servers, they have to make the transition as well.”

To be more precise, the money to be made (meaning not just revenues, but profits) will be with the component suppliers and the operating system suppliers in the open networking arena, not necessarily with the companies that bend metal and peddle the boxes with their own software. Many companies, says Grossner, are not going to accept a closed network operating system with a bunch of APIs exposed to allow for somewhat more sophisticated control of their networks. While enterprises and hyperscalers alike want less expensive networking gear, a reduction in capital expenditures is not what is driving the move to open networking, says Grossner. The real driver is that customers want network devices that are programmable like a Linux server and that will therefore enhance the automation of the network. “We are starting to see enterprises that have no network engineers,” says Grossner, “because they have IT guys that know Linux and with network training they are able to operate the network fabric. That is the big savings: being able to program in the native Linux environment and not to have to maintain two separate skillsets.”

Infonetics Research carves up open networking like this. First, there are bare metal whitebox switches built by Quanta, Accton, and others, usually in Taiwan, that are shipped without network operating systems and that generally do not come with local support. Then there are branded bare metal switches, such as the Dell Force10 S4810 and S6000 switches, which Dell began allowing to run the Linux-derived network operating system from Cumulus Networks a little more than a year ago. (Dell has since allowed for the Switch Light OS from Big Switch Networks to be installed on selected machines.) The final piece is the switching software itself, which is sometimes developed in-house (by Google, Facebook, Amazon, and other hyperscalers, cloud service providers, and telecommunications companies) and sometimes by companies such as Cumulus, Pica8, and Big Switch that want to be the Red Hat of network operating systems.

That software is a very small portion of the open networking business these days. The hyperscalers and cloud providers dominate the open networking market, and they buy mostly bare metal white box switches. (The branded bare metal category is just getting started, and it is aimed at enterprises that want to emulate the hyperscalers but do not want to create and maintain their own network OS.)

Grossner reckons that about 10 percent of the ports sold and somewhere between 6 percent and 7 percent of total datacenter switching revenues come from these bare metal switches. But over the next five years, Grossner expects that bare metal white box machines will account for something on the order of 20 percent of network ports worldwide and that branded bare metal machines will account for somewhere between 10 percent and 15 percent of ports; revenues driven by these machines will be a slightly smaller percentage of the overall market because of their inherently lower cost. So open networking will drive around 30 to 35 percent of the ports and multiple billions of dollars in revenues. (Pegging the revenues is a bit tougher than pegging the ports.)
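The 30 to 35 percent figure is just the sum of the two projected categories. A minimal sketch using only Grossner's percentages (no port totals are projected here, since the article gives none):

```python
# Grossner's projections, expressed as fractions of all datacenter switch ports.
BARE_METAL_TODAY = 0.10            # "about 10 percent of the ports sold" today
WHITE_BOX_FUTURE = 0.20            # "on the order of 20 percent" in five years
BRANDED_FUTURE = (0.10, 0.15)      # "between 10 percent and 15 percent" in five years

open_low = WHITE_BOX_FUTURE + BRANDED_FUTURE[0]
open_high = WHITE_BOX_FUTURE + BRANDED_FUTURE[1]

print(f"open networking share of ports in five years: {open_low:.0%} to {open_high:.0%}")
print(f"versus about {BARE_METAL_TODAY:.0%} today: "
      f"{open_low / BARE_METAL_TODAY:.1f}x to {open_high / BARE_METAL_TODAY:.1f}x")
```

It prints a 30 to 35 percent share, roughly three to three and a half times today's bare metal share of ports. The revenue share would land somewhat lower, as Grossner notes, because these machines cost less per port.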

It remains to be seen what effect such a shift will have on networking giants like Cisco Systems, Juniper, Hewlett-Packard, Dell, and others. But clearly, the incumbents are starting to position themselves for this open networking world, much as system makers adopted Unix, and later Linux, as alternatives to their proprietary systems starting three decades ago.

Facebook Drives A Wedge Into The Market

As The Next Platform was preparing for launch, Facebook announced the second of its own homegrown switch designs, a modular switch nicknamed 6-pack. This machine followed fast on the heels of Facebook’s top-of-rack switch, called Wedge, and the FBOSS Linux-derived network operating system that runs on it, both of which were revealed to the outside world last summer.

This initial Facebook Wedge switch was based on Broadcom’s Trident-II network ASIC, which is just about the closest thing to an X86 processor that the switch industry has at this point. Facebook configured Wedge with 16 ports running at 40 Gb/sec, which splitter cables can turn into 64 ports running at 10 Gb/sec; the Trident-II ASIC itself can handle as many as 32 ports running at 40 Gb/sec. (Presumably a Fulcrum ASIC from Intel or a SwitchX ASIC from Mellanox could be snapped into the Wedge switch without too much trouble.) The Wedge switch has a compute element, which Facebook did not specify but which is probably an Intel “Avoton” C2000 Atom server chip if we had to guess. (And we do.) The design will accommodate ARM processors for adjunct computing jobs, too, according to Jay Parikh, vice president of infrastructure engineering at Facebook. The FBOSS network operating system runs on whatever processor is embedded in the Wedge switch, and it acts as a bridge between the switching ASIC and Thrift, the remote procedure call framework that is the backbone of the Facebook infrastructure and that lets applications coded in various languages communicate with each other in a standard fashion.
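The port counts above follow from simple multiplication. This sketch uses the article's figures plus one labeled assumption: that each 40 Gb/sec port is built from four 10 Gb/sec lanes, which is what lets a splitter cable present it as four separate ports.

```python
# Port arithmetic for the Trident-II ASIC and the Wedge switch, using figures from the text.
TRIDENT2_MAX_40G_PORTS = 32   # the ASIC can drive up to 32 ports at 40 Gb/sec
WEDGE_40G_PORTS = 16          # Wedge as Facebook configured it
# Assumption: each 40 Gb/sec port is four 10 Gb/sec lanes, so a splitter (breakout)
# cable can present it as four separate 10 Gb/sec ports.
LANES_PER_40G_PORT = 4


def aggregate_gbps(ports: int, gbps_per_port: int) -> int:
    """Total front-panel bandwidth for a number of identical ports."""
    return ports * gbps_per_port


asic_max_gbps = aggregate_gbps(TRIDENT2_MAX_40G_PORTS, 40)
wedge_gbps = aggregate_gbps(WEDGE_40G_PORTS, 40)
wedge_10g_ports = WEDGE_40G_PORTS * LANES_PER_40G_PORT

print(f"Trident-II at full width: {asic_max_gbps} Gb/sec")          # 1280
print(f"Wedge as configured:      {wedge_gbps} Gb/sec")             # 640
print(f"Wedge broken out:         {wedge_10g_ports} x 10 Gb/sec")   # 64
```

The 1,280 Gb/sec result is the same 1.28 Tb/sec per-ASIC figure that comes up with 6-pack, and breaking Wedge's ports out to 10 Gb/sec changes the port count without changing its 640 Gb/sec of front-panel bandwidth.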

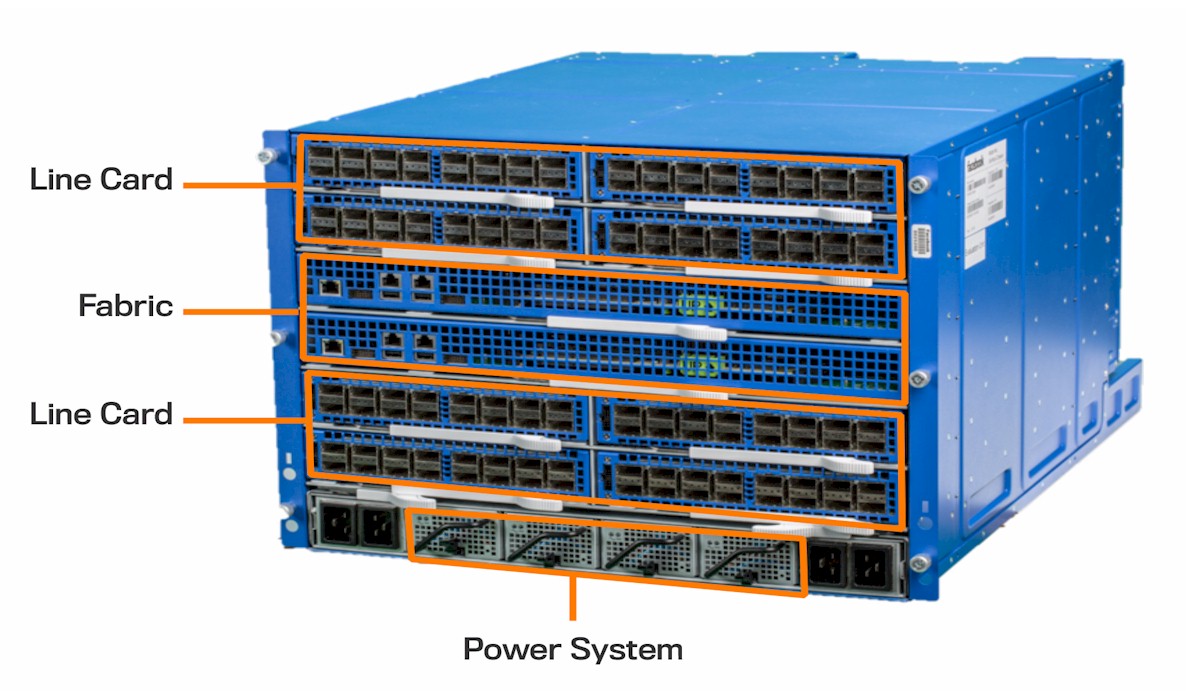

Yuval Bachar, hardware networking engineer at Facebook and formerly a system architect at Cisco Systems and a CTO at both Juniper and Cisco, said that Facebook had a top-of-racker, a network operating system, and a fabric to link all of its switches together, but the one thing it lacked was a modular switch to aggregate its networks. Modular switches tend to be a whole lot pricier per port than top-of-rack machines, just as scalable NUMA servers tend to be a lot more expensive per unit of compute than machines with one or two sockets, so when and if Facebook moves to 6-pack machines, someone is going to lose some pretty juicy business. (For all we know, Facebook is in the process of doing that now in its Altoona, Iowa datacenter, the latest of its four facilities around the globe.)

Facebook did not reveal what ASIC it was using in the 6-pack modular switch, but Bachar said in the blog post announcing the machine that it uses Wedge as a building block. Technically, 6-pack is a full-mesh, non-blocking, two-stage switch that has a dozen ASICs spread across its line cards and fabric cards. Each of these ASICs provides 1.28 Tb/sec of switching bandwidth, and 6-pack comes in two configurations. When used as an aggregation switch, all 1.28 Tb/sec of bandwidth is exposed to the back; as a normal modular switch, it has 16 ports running at 40 Gb/sec in the front and 640 Gb/sec (which is 16 ports’ worth of bandwidth) exposed out the back. Here’s the schematic of the 6-pack, which actually has eight line cards and two fabric cards but only six full-width slots in the chassis:

What is interesting about this 6-pack design is that the line cards and the fabric cards are based on the same ASICs and essentially the same layout. In fact, the fabric card is a double-wide board with two ASICs and two microserver co-processors mounted on a single motherboard, with the ports for the fabric pointing out the back. The fabric module glues the line cards together in a non-blocking manner, which is what makes the whole shebang look like one giant, honking, big ole switch as far as the servers and other switches are concerned. The FBOSS network operating system, which also has server management functions embedded in it, implements what Facebook calls a hybrid software-defined network, with a centralized controller on the network holding all of the forwarding tables for the switches but with a local copy of the control plane for the modular switch running on the microservers embedded in each switching element.
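Because line cards and fabric cards share the same ASIC, the chassis can be tallied card by card. This is a rough accounting sketch, not Facebook's design data: the one-ASIC-per-line-card figure is our inference from the description above, and modeling the two configurations per switching element is our reading of the text.

```python
from dataclasses import dataclass

ASIC_GBPS = 1280         # 1.28 Tb/sec of switching bandwidth per ASIC, per the article
FRONT_PORTS_40G = 16     # front-panel ports per element in the normal modular configuration


@dataclass(frozen=True)
class Card:
    kind: str   # "line" or "fabric"
    asics: int


# Layout as described: eight line cards plus two double-wide fabric cards with two ASICs each.
# Assumption: one ASIC per line card, inferred from the text rather than a published spec.
CHASSIS = [Card("line", 1) for _ in range(8)] + [Card("fabric", 2) for _ in range(2)]


def element_bandwidth(config: str) -> tuple[int, int]:
    """(front, back) bandwidth in Gb/sec for one switching element."""
    if config == "modular":
        front = FRONT_PORTS_40G * 40           # 640 Gb/sec to the front
        return front, ASIC_GBPS - front        # the remaining 640 Gb/sec goes out the back
    if config == "aggregation":
        return 0, ASIC_GBPS                    # all bandwidth exposed to the back
    raise ValueError(f"unknown configuration: {config}")


total_asics = sum(card.asics for card in CHASSIS)
line_cards = sum(1 for card in CHASSIS if card.kind == "line")
front_40g_ports = line_cards * FRONT_PORTS_40G   # if every line card uses the modular layout

print(total_asics, element_bandwidth("modular"), element_bandwidth("aggregation"), front_40g_ports)
```

Under these assumptions it prints 12 ASICs, a 640/640 split in the modular configuration, 0/1,280 in the aggregation configuration, and 128 front-panel ports at 40 Gb/sec. The even front/back split is what keeps each element non-blocking: everything that comes in the front can leave through the fabric at full rate.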

Facebook says that the 6-pack modular switch is in production testing now, alongside the Wedge top-of-racker, and that it will contribute 6-pack to the Open Compute Project, most likely at the Open Compute Summit, which will be held in San Jose in a few weeks. It will be interesting to try to figure out what this 6-pack modular switch costs compared to similar products from Cisco, Juniper, HP, Dell, Huawei Technologies, and others and how quickly the OCP hardware manufacturing community tries to build and sell Wedge and 6-pack machines.

One last interesting bit: Facebook expects the 6-pack modular switch to be on a development cycle of between 8 and 12 months – significantly shorter than for most modular switches from the big vendors.

Hewlett-Packard Joins Dell In Branding Bare Metal

No matter how good HP’s own Comware network operating system is, the fact remains that it is not Linux, and it is therefore less appealing to customers who want a tweakable, Linux-based switching platform. Hence the new three-way partnership inked between HP, hardware maker Accton, and Cumulus that will see HP create a line of branded switches based on other people’s technology but backed by HP sales and support.

The deal with Accton and Cumulus mirrors one that HP inked last April with contract manufacturer Foxconn aimed at the same hyperscale datacenters. As it turns out, HP had already been using Foxconn to make servers for European customers for a decade, although it never said anything about that. While HP arguably has the widest range of servers on the planet and has its own manufacturing operations, the Foxconn hyperscale server partnership makes use of Foxconn’s server design and manufacturing capabilities, which presumably are lower cost than HP’s own design and manufacturing operations, and backs the machines up with HP sales, support, and branding. The idea is to compete with Quanta, Supermicro, Wiwynn, and others who make and sell custom or Open Compute machinery for large scale datacenters. Those HP-branded Foxconn systems were supposed to ship before the end of 2014, but as far as The Next Platform knows, they have not been launched yet.

Mark Carroll, who is CTO at the HP Networking division, says that the partnership with Accton and Cumulus is about bringing the benefits of open networking down from the “Big Seven” hyperscale companies that have commissioned the building of their own switches and have created their own network operating systems to other large-scale datacenters. These customers want a lower total cost of ownership, but Carroll says that the increased agility and flexibility that comes from an open, Linux-based platform is the real driver of the open networking effort at HP specifically and in the IT market at large.

The initial “brite box” open switches, as HP calls them, will be based on Broadcom’s Trident-II ASICs, as is the case for a lot of the open switches out there in the market today. But in the second half of the year, HP will deliver switches based on Broadcom’s future “Tomahawk” ASICs, the design of which was compelled by hyperscale datacenters that wanted to have ports running at 25 Gb/sec and 50 Gb/sec rather than the 10 Gb/sec, 40 Gb/sec, and 100 Gb/sec that the Ethernet community, as represented by the vendors, had adopted. (The Next Platform will examine the ramifications of the advent of the 25 Gb/sec standard, which was launched last June and has subsequently been taken up by the IEEE, in a future article.)
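Part of the appeal of 25 Gb/sec and 50 Gb/sec ports is how much bandwidth each electrical lane carries. The sketch below assumes the usual lane layouts for these Ethernet speeds (10 and 40 Gb/sec built on 10 Gb/sec lanes; 25, 50, and 100 Gb/sec built on 25 Gb/sec lanes) and compares what each port speed gets out of the lanes it consumes.

```python
# Port speed (Gb/sec) -> (number of lanes, Gb/sec per lane).
# Assumption: 10/40 GbE use 10 Gb/sec lanes; 25/50/100 GbE use 25 Gb/sec lanes.
LANE_LAYOUT = {
    10: (1, 10),
    40: (4, 10),
    25: (1, 25),
    50: (2, 25),
    100: (4, 25),
}


def lanes_needed(speed_gbps: int) -> int:
    """Number of lanes a port of the given speed consumes."""
    lanes, per_lane = LANE_LAYOUT[speed_gbps]
    # Sanity check that the layout actually adds up to the port speed.
    if lanes * per_lane != speed_gbps:
        raise ValueError(f"bad layout for {speed_gbps} Gb/sec")
    return lanes


for speed in sorted(LANE_LAYOUT):
    lanes = lanes_needed(speed)
    print(f"{speed:>3} Gb/sec port: {lanes} lane(s), {speed // lanes} Gb/sec per lane")

# A single-lane server link goes from 10 to 25 Gb/sec, and a 50 Gb/sec port uses
# half the lanes of a 40 Gb/sec port while carrying 25 percent more traffic.
single_lane_gain = 25 / 10
fifty_vs_forty_lanes = lanes_needed(50) / lanes_needed(40)
```

On these assumptions, a server link that needs only one lane gets 2.5 times the bandwidth at 25 Gb/sec, and 50 Gb/sec needs half the lanes of 40 Gb/sec, which is the kind of efficiency hyperscalers were after.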

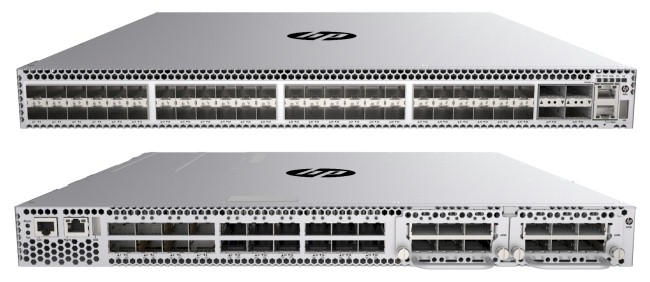

To start, HP will be launching two open switches in a top-of-rack form factor sometime around late March or early April, Carroll tells The Next Platform. One will be a leaf switch with 10 Gb/sec ports and the other will be a spine switch that supports 10 Gb/sec or 40 Gb/sec ports.

HP has not released the feeds and speeds of these two Accton-made switches, but you can see from the picture above that the one on top of the image has 48 ports running at 10 Gb/sec with four 40 Gb/sec uplinks on the right. The one on the bottom is the 40 Gb/sec model, which has 20 fixed ports and what looks like two modules that have six ports each for a total of 32 ports.
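Since HP has not published specs, the bandwidth of the two pictured switches can only be estimated from their port counts. This sketch assumes every port runs at its nominal speed and, for the spine switch, that all 32 ports run at 40 Gb/sec; the oversubscription ratio is a standard leaf-switch metric, not a figure HP has quoted.

```python
def total_gbps(groups):
    """Sum bandwidth over (port_count, gbps_per_port) groups."""
    return sum(count * gbps for count, gbps in groups)


# Leaf (top of the photo): 48 ports at 10 Gb/sec plus four 40 Gb/sec uplinks.
leaf_downlinks = [(48, 10)]
leaf_uplinks = [(4, 40)]

# Spine (bottom of the photo): 20 fixed ports plus two six-port modules.
# Assumption: every port on the spine runs at 40 Gb/sec.
spine_ports = [(20, 40), (6, 40), (6, 40)]

leaf_gbps = total_gbps(leaf_downlinks + leaf_uplinks)
spine_gbps = total_gbps(spine_ports)
spine_port_count = sum(count for count, _ in spine_ports)

# Oversubscription: server-facing bandwidth divided by uplink bandwidth on the leaf.
leaf_oversubscription = total_gbps(leaf_downlinks) / total_gbps(leaf_uplinks)

print(leaf_gbps, spine_gbps, spine_port_count, leaf_oversubscription)  # 640 1280 32 3.0
```

The spine's 1,280 Gb/sec matches the Trident-II's full 32-port capacity at 40 Gb/sec. The leaf's 3:1 ratio means its uplinks can carry a third of what its 48 server ports could offer at line rate.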

Both HP switches will make use of the ONIE network operating system installer, which Cumulus donated to the Open Compute Project, and will run the Cumulus Linux operating system. Because ONIE works on these switches, any other network OS that supports this installer and the Trident-II ASIC can technically be loaded onto these switches, including any homegrown software, but for now HP is only offering tech support on Cumulus Linux. Over time, HP expects to round out the network OSes supported, with Big Switch’s Switch Light being the obvious next one in line.
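What makes the network OS swappable is how ONIE picks an installer: as we understand it, on first boot it looks for an installer image, trying file names from most to least specific so that an image built for one exact platform wins over a generic one. The sketch below models that fallback. The name patterns approximate ONIE's documented default search order (the ONIE documentation is the authority and may list further variants), and the platform and file names in the usage lines are hypothetical.

```python
from typing import List, Optional, Set


def onie_installer_candidates(arch: str, vendor: str, machine: str, rev: int) -> List[str]:
    """Installer file names from most to least specific (approximating ONIE's default order)."""
    platform = f"{vendor}_{machine}"
    return [
        f"onie-installer-{arch}-{platform}-r{rev}",
        f"onie-installer-{arch}-{platform}",
        f"onie-installer-{platform}",
        f"onie-installer-{arch}",
        "onie-installer",
    ]


def pick_installer(candidates: List[str], available: Set[str]) -> Optional[str]:
    """Return the most specific installer the file server offers, or None."""
    for name in candidates:
        if name in available:
            return name
    return None


# Hypothetical switch and file server contents, for illustration only.
candidates = onie_installer_candidates("x86_64", "examplevendor", "tor48", 0)
served = {"onie-installer-x86_64", "onie-installer-x86_64-examplevendor_tor48"}
print(pick_installer(candidates, served))  # onie-installer-x86_64-examplevendor_tor48
```

The design choice worth noting is that nothing in this lookup cares which OS is inside the image, which is why any ONIE-compatible NOS built for the switch's ASIC can be dropped in.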

As for HP open sourcing Comware, this is not in the cards for now. “We definitely talk about that, but we do not have any plans to open source Comware at this time,” says Carroll. “We drive to what customers want us to do.” So, presumably, if customers wanted to install Comware, open or closed, on this switch, HP would consider it and if there was enough customer demand, do it. But still, Carroll says that there are going to be plenty of enterprise customers that want a traditional switch with an integrated network OS and lots of other circuits for accelerating portions of the network stack, as is embodied in the switches that HP developed itself or got through its 3Com acquisition in November 2009.

Further down the road, HP will also deliver Accton-built switches running Cumulus software that are based on the Broadcom Tomahawk ASIC with 25 Gb/sec and 50 Gb/sec ports, as well as a 100 Gb/sec switch that could use Trident or Tomahawk ASICs. These will also be top-of-rackers, and at the moment the bet is that HP will work with Accton to deliver what Broadcom has called the “God box,” which is a 32-port switch with ports running at 100 Gb/sec; this will likely use the Tomahawk ASIC. HP is also working on a switch that will deliver a much more modest 1 Gb/sec port speed for what it calls “unique customer needs” in the datacenter, where high bandwidth is not necessary but open networking is.
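The “God box” arithmetic shows how big a step Tomahawk is over Trident-II. This sketch assumes each 100 Gb/sec port is four 25 Gb/sec lanes and that every lane can be broken out freely; real products may restrict which breakout combinations are allowed.

```python
# "God box": 32 ports at 100 Gb/sec, per the article.
GOD_BOX_GBPS = 32 * 100
TRIDENT2_GBPS = 32 * 40     # Trident-II at its 32-port, 40 Gb/sec maximum
LANE_GBPS = 25              # assumption: 25 Gb/sec lanes, four per 100 Gb/sec port

lanes = GOD_BOX_GBPS // LANE_GBPS   # 128 lanes

# Ports available if every lane is broken out at a single speed.
# Assumption: real products may limit which breakout combinations are supported.
breakouts = {speed: lanes // (speed // LANE_GBPS) for speed in (25, 50, 100)}

print(f"aggregate: {GOD_BOX_GBPS} Gb/sec, {GOD_BOX_GBPS / TRIDENT2_GBPS:.1f}x Trident-II")
print(f"lanes: {lanes}, breakouts: {breakouts}")
```

It prints 3,200 Gb/sec, 2.5 times the Trident-II figure, and shows the same 128 lanes presenting as 128 ports at 25 Gb/sec, 64 at 50 Gb/sec, or 32 at 100 Gb/sec.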
