One thing is certain: The explosion of data creation in our society will continue as far as pundits and anyone else can forecast. In response, there is an insatiable demand for more advanced high performance computing to make this data useful.

The IT industry has been pushing to new levels of high-end computing performance; this is the dawn of the exascale era of computing. Recent announcements from the US Department of Energy for exascale computers represent the starting point for a new generation of computing advances. This is critical for the advancement of any number of use cases such as understanding the interactions underlying the science of weather, sub-atomic structures, genomics, physics, rapidly emerging artificial intelligence applications, and other important scientific fields.

Even so, the computing industry has fallen behind its historical rate of improvement. New research shows that supercomputer performance doubled nearly every year from 2002 to 2009, but the doubling period stretched to 2.3 years from 2009 to 2019. This is due to several factors, including the slowdown in Moore’s Law and technical constraints such as the breakdown of Dennard scaling. These are significant obstacles, but they are simply the next chapter of engineering challenges that will bring exciting advances over the next decade.
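To make the two doubling periods easier to compare, the short Python sketch below converts each into an implied yearly multiplier and into cumulative growth over ten years. It is only illustrative arithmetic: the function names are hypothetical, "nearly every year" is rounded to exactly 1.0 year, and the ten-year horizon is an assumption chosen for comparison.

```python
def annual_growth(doubling_years: float) -> float:
    """Yearly performance multiplier implied by a given doubling period."""
    return 2 ** (1 / doubling_years)


def growth_over(span_years: float, doubling_years: float) -> float:
    """Cumulative performance multiplier over span_years at a fixed doubling period."""
    return 2 ** (span_years / doubling_years)


# Assumption: "nearly every year" is treated as exactly 1.0 year.
FAST_DOUBLING = 1.0   # 2002 to 2009
SLOW_DOUBLING = 2.3   # 2009 to 2019

for label, period in (("2002-2009", FAST_DOUBLING), ("2009-2019", SLOW_DOUBLING)):
    print(
        f"{label}: x{annual_growth(period):.2f} per year, "
        f"x{growth_over(10, period):.0f} over a decade"
    )
```

Under these assumptions, a 2.3-year doubling period works out to roughly a 35 percent gain per year and about a 20-fold gain over a decade, compared with about a 1,000-fold gain when performance doubles annually.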

Pushing the bleeding edge of performance and efficiency will require new architectures and computing paradigms.

Process Nodes

Even with the inherent limitations on process node improvement as we approach atomic scale, a shift to 5 nanometers, and likely 3 nanometers, should offer at least two more generations of substantial gains in performance and energy efficiency. While the path to 5 nanometers is becoming clear, getting to 3 nanometers may require a new transistor architecture beyond today’s FinFETs, whether an evolved form of the current architecture or new technologies such as nanosheets and nanowires. There is a good chance that 5 nanometer technology could come to market later this year or in 2021. However, these advanced technologies are very expensive, costing $1 billion or more just to design a device for 3 nanometers.

Semiconductor advances will continue, but the approaches are more costly for each generation and are taking longer to develop. This trend has driven other areas of innovation to offer further potential gains.

Heterogeneous Computing with Accelerators

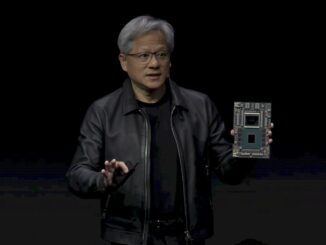

Some parts of applications are poorly matched to the processing strengths of traditional CPUs. GPUs, FPGAs, and purpose-built ASICs are already used to accelerate such workloads. The industry has been pursuing heterogeneous computing for years. For example, at AMD we merged CPUs and GPUs onto a single die to reduce latency. We call this an Accelerated Processing Unit, or APU.

More recently, GPUs have been adapted with great success to execute neural networks, the deep learning algorithms behind so many of the recent AI advances. Purpose-built ASICs carry this one step further, although they are limited to specific use cases. Google’s Tensor Processing Unit is one such example, designed specifically to accelerate the linear algebra and other specialized mathematical algorithms used in many of today’s deep neural nets. FPGAs from Xilinx and others have proven adept at speeding up video transcoding and image processing. Other examples of custom silicon for AI are in development, though it’s quite possible these will entail software specialization that could lock developers in to working with just one vendor.

Much is being discussed about quantum computing. This holds tremendous promise for certain use cases but likely will not be a replacement for general purpose digital computing for the foreseeable future. Instead, quantum will serve as another specialized accelerator for specific computing challenges. This and other future specialized accelerators will work together with traditional computing technologies.

Memory

Memory technologies have advanced in recent years, with die-stacking leading to high-bandwidth memory (HBM). Developed by AMD, Samsung, and SK Hynix, HBM enables more storage capacity and data bandwidth than conventional memory products such as DDR.

In the future, alternative materials and designs could yield big gains. One example is carbon nanotubes, first for memory and then for logic circuits. With this technology, semiconducting nanomaterials can replace silicon in transistors and other circuit elements. Because nanotubes can be formulated into inks, they can be layered in multilevel circuits, something that’s impractical with bulk silicon. Future memory arrays could be slipped into stacks of carbon nanotube circuits, each layer connected closely to the ones above and below by a layer of metal wiring, much like today’s HBM products.

This design breaks the memory bottleneck by offering more short-distance speedways for data to travel, reducing the time and energy used to fetch and send data. Though it remains to be seen if they will be sufficiently reliable, nanotubes would be smaller and denser than current mainstream approaches and arguably three times as fast and three times as energy efficient as equivalent silicon systems.

This is one potential approach, and I expect to see many new memory technologies in the next decade. One of the more promising technologies now being explored is photonics. Computers today move electricity across metal circuits, and with that comes resistance, leakage, latency and heat. All of these serve as brakes on performance. Photonics, the integration of light-based hardware, offers the possibility of overcoming some of these obstacles. Computers incorporating photonics would use light to transfer information and electronics to process it. Efforts so far have mostly failed to match the performance and energy efficiency of state-of-the-art electronics, but this may be changing. Potential uses include inter-core communication and sustaining cache coherency. Chip-scale photonics makes the technology vastly more accessible and has the potential for large performance gains.

Experimental

Also on the horizon is neuromorphic computing. Neuromorphic computing includes the production and use of neural networks, and is concerned with demonstrating that a given concept of how the brain performs its functions actually works. This includes not only reaching decisions, but also memorizing information and even deducing facts. The engineering of a neuromorphic device requires components whose functions are analogous to parts of the brain and that perform similarly. This entails circuitry and software combined to produce neurons that can trigger other neurons, form connections, and respond to stimuli. Among the advantages attributed to neuromorphic computing is much lower energy consumption, and the approach holds significant potential. Despite recent product announcements, however, there are huge software challenges, and the technology is likely still many years away from widespread use because of the complexity of building the ecosystem.

Conclusion

Semiconductor engineering has never been easy, but one can argue that the industry had the wind at its back for roughly 60 years. During that time, manufacturing process improvements complemented architecture and software advances to produce seemingly clockwork gains, often doubling performance every 18 to 24 months. Those process improvements have helped to drive the industry to exascale computing, but now appear to be slowing. As this article highlights, there will be no shortage of innovative approaches and creative concepts that could be perfected over the next decade to continue the exceptional growth of computing capabilities. This is the beauty of computer engineering: demand drives innovation, and the exascale era is just the next milestone on the never-ending HPC journey.

Mark Papermaster is chief technology officer and executive vice president of technology and engineering at AMD, responsible for corporate technical direction, product development including system-on-chip (SoC) methodology, microprocessor design, I/O and memory, and advanced research. Papermaster led the development of the “Zen” high-performance x86 CPU family, leadership GPUs, and the company’s modular design approach, Infinity Fabric. Papermaster also oversees AMD’s internal compute infrastructure and services.

One as inexpert as I, needs simple analogies.

AFAICT, while there is surely room to improve computers, by far the most backward area seems to be when you try and interconnect computers. Bandwidths drop by huge factors.

Perversely then, to me, the most exciting tangible thing listed is the humble APU – the microscopic reduction of an 8 core cpu and a Vega GPU onto a tiny monolithic chip.

It may be small, but so is the Zen CCX, and look what a mighty Epyc oak has grown from that humble acorn.

I think adding gpu to this variant of the AMD chiplet/lego block?, is far deeper and more exciting than it seems – a chiplet also able to do GPU compute & with a very efficient & hi bandwidth linking of the two types of processors.

I find it interesting that Mark Papermaster has singled the APU out for mention in this context.