This week, Amazon Web Services announced the availability of its first UltraServer pre-configured supercomputers based on Nvidia’s “Grace” CG100 CPUs and its “Blackwell” B200 GPUs in what is called a GB200 NVL72 shared GPU memory configuration. These machines are known as the U-P6e instances, come in full rack and half rack configurations, and complement the existing P6-B200 instances that were unveiled last December at the re:Invent 2024 conference and became generally available on May 15.

In the case of both the P6 and P6e instances, NVLink 5 ports on the GPUs and on the NVLink Switch 4 GPU memory sharing switches are used to gang up GPUs into large shared memory compute complexes, similar to the NUMA clusters that have been around for CPU servers for more than two and a half decades. Some shared memory architectures, such as symmetric multiprocessing, or SMP, are older than non-uniform memory access techniques, but none of them scale as far as NUMA does on CPUs, which pushed up to 128 and 256 CPUs in a shared memory cluster back in the days of single-core processors.

With the P6e instances based on the Nvidia NVL72 design, which we have detailed in a previous story, the GPU memory domain stretches across 72 GPU sockets, and the Blackwell chip has two GPU chiplets per socket, so the memory domain is really 144 devices in a single rack. AWS is selling UltraServers with either 72 or 36 Blackwell B200 sockets as a memory domain, and presumably this is done virtually and not physically so the instance size can be configured on the fly. These machines have a Grace CPU paired with every two Blackwell B200 GPUs, and the whole shebang is liquid-cooled, which is one of the reasons why the B200 GPUs are being overclocked by 11 percent and deliver that much more raw compute performance for AI workloads.

The P6 instances use more standard HGX-B200 server nodes, which are not overclocked and which create a GPU memory domain that spans eight sockets. The P6 instances use Intel Xeon 6 processors as their host compute engines, with two CPUs for every eight Blackwell B200 GPUs, and the resulting compute complexes are half as dense as the GB200 NVL72 systems and therefore can still be air-cooled.
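To make the two memory domain designs concrete, here is a minimal Python sketch that models each configuration from the socket counts and CPU-to-GPU ratios in the paragraphs above. The `GpuDomain` class is hypothetical, and the dense FP16 ratings of 2,500 teraflops per GB200 GPU and 2,250 teraflops per HGX-B200 GPU are assumptions taken from Nvidia's spec sheets, not from this story; their ratio is where the 11 percent clock uplift shows up.

```python
from dataclasses import dataclass

# Dense FP16 teraflops per GPU: an assumption taken from Nvidia's spec sheets,
# not from the figures in this story.
FP16_DENSE_TFLOPS = {"GB200": 2_500, "HGX-B200": 2_250}


@dataclass(frozen=True)
class GpuDomain:
    """One NVLink shared memory domain (hypothetical model for illustration)."""
    name: str
    gpu_sockets: int          # Blackwell B200 sockets sharing memory over NVLink
    gpus_per_host_cpu: int    # B200 sockets fed by each host CPU
    dies_per_socket: int = 2  # each Blackwell socket carries two GPU chiplets

    @property
    def gpu_dies(self) -> int:
        return self.gpu_sockets * self.dies_per_socket

    @property
    def host_cpus(self) -> int:
        return self.gpu_sockets // self.gpus_per_host_cpu


nvl72_full = GpuDomain("P6e GB200 NVL72, full rack", gpu_sockets=72, gpus_per_host_cpu=2)
nvl72_half = GpuDomain("P6e GB200 NVL72, half rack", gpu_sockets=36, gpus_per_host_cpu=2)
hgx_b200 = GpuDomain("P6 HGX-B200 node", gpu_sockets=8, gpus_per_host_cpu=4)

for d in (nvl72_full, nvl72_half, hgx_b200):
    print(f"{d.name}: {d.gpu_sockets} sockets, {d.gpu_dies} GPU dies, {d.host_cpus} host CPUs")

# Ratio of per-GPU ratings, i.e. the clock uplift of the liquid-cooled GB200 parts.
uplift = FP16_DENSE_TFLOPS["GB200"] / FP16_DENSE_TFLOPS["HGX-B200"] - 1
print(f"GB200 clock uplift over HGX-B200: {uplift:.0%}")
```

The Grace machines pair one CPU with every two GPUs, while the HGX-B200 nodes feed four GPUs from each Xeon 6, which is the ratio the model encodes.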

With these two Blackwell systems now available on the AWS cloud and pricing information available, this is a perfect time to do some price/performance analysis on the Blackwell instances versus prior generations of “Hopper” H100 and H200 GPUs and even earlier instances based on “Ampere” A100 and “Volta” V100 GPUs that are still available for rent on the AWS cloud.

The instances and UltraServer rackscale configurations that we examined are sold under what AWS calls EC2 Capacity Blocks, which, as the name suggests, are a way to reserve and buy preconfigured UltraClusters ranging in size from one instance or UltraServer up to 64 instances or racks. Terms run for up to six months, and the capacity can be reserved up to eight weeks before you need it. It is a funky version of a reserved instance, sold in bigger chunks as a single unit.
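The reservation rules described above can be expressed as a small validation function. This is a sketch under stated assumptions, not the AWS API: `check_capacity_block` is a hypothetical helper, "six months" is approximated here as 182 days, and AWS's actual limits and increments may differ from the way they are summarized in this story.

```python
from datetime import date, timedelta

# Limits as summarized in this story; AWS's actual rules may differ or change.
MAX_UNITS = 64                      # instances or UltraServer racks per block
MAX_TERM = timedelta(days=182)      # "up to six months", approximated as 182 days
MAX_LEAD_TIME = timedelta(weeks=8)  # reservable up to eight weeks ahead


def check_capacity_block(units: int, start: date, term_days: int, today: date) -> list[str]:
    """Return problems with a hypothetical Capacity Block request (empty list if OK)."""
    problems = []
    if not 1 <= units <= MAX_UNITS:
        problems.append(f"units must be between 1 and {MAX_UNITS}, got {units}")
    if not timedelta(days=1) <= timedelta(days=term_days) <= MAX_TERM:
        problems.append(f"term must be 1 to {MAX_TERM.days} days, got {term_days}")
    lead = start - today
    if lead < timedelta(0):
        problems.append("start date is in the past")
    elif lead > MAX_LEAD_TIME:
        problems.append(f"start is {lead.days} days out; limit is {MAX_LEAD_TIME.days}")
    return problems


today = date(2025, 7, 1)  # hypothetical "today" for the examples
print(check_capacity_block(2, date(2025, 8, 1), term_days=90, today=today))
print(check_capacity_block(100, date(2025, 10, 1), term_days=90, today=today))
```

The second request fails twice: it asks for more than 64 units, and it starts more than eight weeks out.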

Just for fun, we took the EC2 Capacity Block configurations and also found the setups that had on-demand pricing to see how these compare in cost, all the way back to P2 instances based on Nvidia “Kepler” K80 GPUs and P3 instances based on “Volta” V100 GPUs.

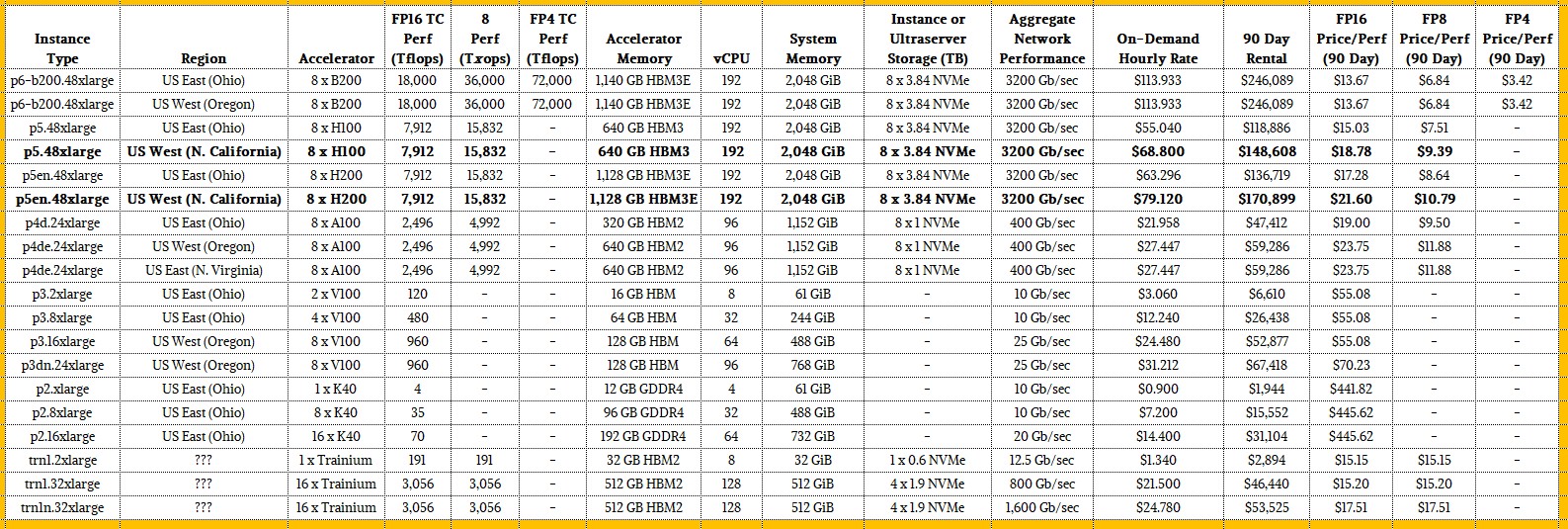

So without further ado, here is the mother of all spreadsheets for the EC2 Capacity Blocks, with pricing shown across regions around the globe where they are available, including Nvidia GPU instances as well as AWS Trainium1 and Trainium2 instances:

There is a lot to take in there. To get a sense of how the price/performance stacks up, we added peak theoretical performance at FP16, FP8 or INT8, and FP4 precisions. We ignored FP64 and FP32 precision for the purposes of this comparison, knowing full well that higher precision calculations are sometimes used with AI models and are certainly used with HPC simulation. These performance ratings are for dense math, not sparse matrices, which can double the effective numerical throughput of the devices.

We decided that a 90-day rental was representative of what it would take to train a fairly large model, but nothing crazy. The instance cost over that term makes a nice dividend, and the peak performance in teraflops is the divisor that chops it into a cost per teraflops.
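The dividend-and-divisor arithmetic is simple enough to write down. Here is a minimal Python sketch: `rental_cost` turns an hourly rate into the 90-day dividend, and `cost_per_tflops` divides it by aggregate peak teraflops. The example uses the $822,856 half-rack P6e figure quoted later in this story; the 2,500 dense FP16 teraflops per GB200 GPU is an assumption from Nvidia's spec sheet, and both function names are hypothetical.

```python
HOURS_IN_90_DAYS = 90 * 24  # 2,160 hours, the rental term used throughout


def rental_cost(hourly_rate: float, hours: int = HOURS_IN_90_DAYS) -> float:
    """The dividend: what an instance or UltraServer costs over the term."""
    return hourly_rate * hours


def cost_per_tflops(total_cost: float, devices: int, tflops_per_device: float) -> float:
    """Divide the rental cost by the aggregate peak teraflops (the divisor)."""
    return total_cost / (devices * tflops_per_device)


# Half-rack P6e UltraServer: $822,856 for 90 days, per this story. The 2,500 dense
# FP16 teraflops per GB200 GPU is an assumption taken from Nvidia's spec sheet.
half_rack = cost_per_tflops(822_856, devices=36, tflops_per_device=2_500)
print(f"${half_rack:.2f} per FP16 teraflops")
```

When you start from an hourly list price instead of a 90-day total, `cost_per_tflops(rental_cost(rate), devices, tflops)` gives the same metric.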

A lot of things jump out of this monster table, but the first one that we saw, and which we highlighted in bold, is that AWS charges a 25 percent premium for GPU instances based on Hopper H100 and H200 GPUs that are served from its US West Northern California region. It is tough to get power and datacenter space in Silicon Valley, which is why you see so many new goodies being installed in the Oregon area of the US West region. The US East region is anchored around Ashburn, Virginia, and it still gets a lot of goodies first, including the UltraServer P6e rackscale system based on the GB200 NVL72 design. The US East region in Ohio gets its share of new stuff, too, including Trainium1 and Trainium2 clusters, as you see.

We think of FP16 performance as a kind of baseline for AI accelerators, and then FP8 and FP4 precision as an important further accelerator for models that can be trained using lower resolution data and still not sacrifice the accuracy of the model.

If you look at FP16 performance of the rackscale GB200 NVL72 system versus the HGX-B200 systems, which do not scale nearly as far, the rackscale machine, which requires liquid cooling and is a bit of a beast to install, carries only a 17 percent premium per unit of performance the way AWS is renting it. This is not much of a premium at all, really, and it is in line with what you would expect given the density of the system and the power and cooling issues that this density causes.
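The 17 percent figure falls straight out of the two FP16 cost per teraflops numbers this story reports for 90-day Capacity Blocks ($9.14 for the P6e and $7.81 for the P6). The sketch below also estimates the premium per GPU, assuming dense FP16 ratings of 2,500 teraflops per GB200 GPU and 2,250 per HGX-B200 GPU from Nvidia's spec sheets.

```python
P6E_GB200 = 9.14  # $ per dense FP16 teraflops, 90-day Capacity Block, per this story
P6_B200 = 7.81    # same metric for the air-cooled HGX-B200 nodes, per this story

premium_per_tflops = P6E_GB200 / P6_B200 - 1
print(f"NVL72 premium per teraflops: {premium_per_tflops:.1%}")

# Per-GPU ratings are assumptions from Nvidia spec sheets (dense FP16 teraflops).
GB200_TFLOPS, B200_HGX_TFLOPS = 2_500, 2_250
premium_per_gpu = (P6E_GB200 * GB200_TFLOPS) / (P6_B200 * B200_HGX_TFLOPS) - 1
print(f"NVL72 premium per GPU: {premium_per_gpu:.1%}")
```

The per-GPU premium comes out near 30 percent because each overclocked GB200 part is rated about 11 percent faster, and 1.17 times 1.11 is roughly 1.30.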

The other thing you will see is that the H100 and H200 devices have the same peak theoretical performance, but the H100 that AWS has installed is an earlier one with only 80 GB of HBM3 capacity, while the H200 has 141 GB of HBM3E capacity. AWS is charging a 10 percent premium for this memory and the higher bandwidth that comes with it. The H100 with 80 GB of HBM3 has 3.35 TB/sec of bandwidth, while the H200 with 141 GB of HBM3E provides 4.8 TB/sec. For a lot of workloads, this extra memory capacity and bandwidth can nearly double the actual performance of AI training. You might have expected AWS to charge more of a premium for the H200 instances than it does.
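Putting the memory figures from the paragraph above side by side shows how much the 10 percent premium buys. This minimal sketch uses only the capacities, bandwidths, and premium quoted there; the "bandwidth per dollar" line assumes a purely bandwidth-bound workload, which is an illustrative simplification, not a claim about any particular model.

```python
# Memory specs and the 10 percent price premium are the figures quoted above.
h100 = {"hbm_gb": 80, "bandwidth_tb_s": 3.35}
h200 = {"hbm_gb": 141, "bandwidth_tb_s": 4.8}
price_ratio = 1.10  # H200 instance price relative to H100

capacity_gain = h200["hbm_gb"] / h100["hbm_gb"]
bandwidth_gain = h200["bandwidth_tb_s"] / h100["bandwidth_tb_s"]

# Assumption: a purely bandwidth-bound workload scales with memory bandwidth,
# so under that assumption this is its gain in throughput per dollar.
bandwidth_per_dollar = bandwidth_gain / price_ratio

print(f"capacity {capacity_gain:.2f}x, bandwidth {bandwidth_gain:.2f}x, "
      f"bandwidth per dollar {bandwidth_per_dollar:.2f}x")
```

Workloads that also benefit from the 76 percent larger capacity, for instance by fitting bigger batches or fewer model shards per GPU, can gain more than the bandwidth ratio alone suggests.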

The EC2 Capacity Blocks can still be acquired using Ampere A100 GPU accelerators, and it is interesting to note that on a per-GPU basis, the H200 is 3.07X more expensive than an A100, but it delivers 3.17X more FP16 performance. When you do the math, renting an A100 with 40 GB of HBM2 memory for 90 days through Capacity Blocks costs $10.21 per teraflops, compared to $9.88 per teraflops for the H200. You would only rent the A100 if you could not get H100s or H200s or B200s. The A100 with 80 GB of HBM2E memory costs $12.78 per teraflops. (All of these prices are for regions outside of Northern California.)
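The ratio math in the paragraph above can be checked directly: scale the A100's $10.21 per teraflops up by the 3.07X price ratio and down by the performance ratio. In this sketch, the per-GPU dense FP16 ratings (312 teraflops for the A100 and 989 teraflops for the H200) are assumptions from Nvidia's spec sheets, not figures from this story.

```python
A100_FP16, H200_FP16 = 312, 989  # dense FP16 teraflops per GPU, assumed from Nvidia specs
price_ratio = 3.07               # H200 vs A100 per-GPU rental price, per this story
perf_ratio = H200_FP16 / A100_FP16

a100_cost_per_tflops = 10.21     # A100 40 GB, 90-day Capacity Block, per this story
# Cost per teraflops scales up with price and down with performance.
h200_cost_per_tflops = a100_cost_per_tflops * price_ratio / perf_ratio
print(f"perf ratio {perf_ratio:.2f}x, implied H200 cost ${h200_cost_per_tflops:.2f} per teraflops")
```

The result lands within a cent of $9.88; the small gap comes from the 3.07X price ratio being rounded.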

At FP16 precision, the full-scale NVL72 machine in the P6e instance with 72 Blackwell B200 GPUs as well as the half rack with 36 Blackwell B200s costs $9.14 per teraflops to rent for 90 days, and will cost $1.65 million and $822,856, respectively, for those three months. The P6-B200 instances with the smaller memory domains cost $7.81 per teraflops over 90 days at FP16 precision, and given that these are air-cooled with a smaller memory domain, this stands to reason. The wonder is that the liquid cooled GB200 NVL72 machines do not cost more.
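Because both UltraServer sizes come in at the same $9.14 per teraflops, pricing scales linearly with rack size, and the 90-day totals can be turned back into implied hourly rates. This sketch derives everything from the $822,856 half-rack figure above; the hourly numbers are our own division, not AWS list prices, and AWS's published rates may differ slightly due to rounding.

```python
HOURS = 90 * 24                      # 2,160 hours in the 90-day term
GPUS_PER_HALF_RACK = 36
half_rack_cost = 822_856             # 36 GPUs, 90 days, per this story
full_rack_cost = 2 * half_rack_cost  # same $/teraflops, so cost doubles with the GPUs

implied_hourly = half_rack_cost / HOURS                   # derived, not a list price
implied_gpu_hourly = implied_hourly / GPUS_PER_HALF_RACK  # derived, not a list price

print(f"full rack for 90 days: ${full_rack_cost:,}")
print(f"half rack: ${implied_hourly:,.2f}/hour, ${implied_gpu_hourly:.2f} per GPU-hour")
```

Doubling the half-rack total gives about $1.65 million for the full NVL72 rack, matching the figure in the paragraph.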

If you look at FP8 performance, all of the costs per teraflops are cut in half, and with the Blackwells, the ability to calculate in FP4 format cuts the cost of a teraflops in half again. The net result is that if you change your model to take advantage of FP4 performance, you can, in theory, either rent one quarter of the machinery to get the same work done at one quarter of the cost, or spend the same money and train a model that is four times larger.
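The halving described above can be sketched as a tiny function: each step down in precision doubles dense peak throughput on Blackwell, so the cost per teraflops halves. The FP16 starting points are the $9.14 and $7.81 figures from this story; `cost_at_precision` is a hypothetical helper, and it only applies to chips that actually support FP4, which rules out Hopper.

```python
FP16_COST = {"P6e GB200 NVL72": 9.14, "P6 HGX-B200": 7.81}  # $/teraflops, per this story


def cost_at_precision(fp16_cost: float, bits: int) -> float:
    """Each halving of precision from 16 bits doubles peak throughput, halving $/teraflops."""
    halvings = {16: 0, 8: 1, 4: 2}[bits]  # raises KeyError for unsupported precisions
    return fp16_cost / 2 ** halvings


for name, cost in FP16_COST.items():
    row = ", ".join(f"FP{b}: ${cost_at_precision(cost, b):.2f}" for b in (16, 8, 4))
    print(f"{name}: {row}")
```

At FP4, a quarter of the FP16 bill buys the same peak teraflops, which is where the one-quarter-the-machinery argument comes from.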

Now look at the bottom of the table with Trainium. It takes twice as many Trainium1 AI accelerators, which are designed by AWS, to beat the Nvidia A100 by around 22 percent in terms of raw FP16 throughput. With Trainium2, FP16 performance increased by 3.5X and FP8 performance by 6.8X, while HBM capacity was boosted by 3X, yet the cost per teraflops went up by only 7.4 percent at FP16 resolution. The big jump in FP8 throughput cut the price of a teraflops at FP8 precision to a mere $3.72, which is less than the $3.91 per teraflops of the HGX-B200 nodes AWS is renting as the P6 instance and even lower than the $4.57 per teraflops that AWS is charging for the GB200 NVL72 instances. The Trainium2 does not have FP4 support, which means that when it comes to raw cost, Nvidia has the advantage for those AI applications that can run at FP4 resolution without losing accuracy.
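The Trainium ratios above can be reproduced from per-chip ratings. In this sketch, the dense figures (190 teraflops at FP16 and FP8 plus 32 GB of HBM for Trainium1, 667 teraflops FP16, 1,299 teraflops FP8, and 96 GB for Trainium2, and 312 teraflops FP16 for the A100) are assumptions taken from AWS and Nvidia spec sheets; the FP8 cost per teraflops values are the ones quoted in this story.

```python
# Per-chip dense ratings assumed from AWS's published Trainium specs (teraflops, GB).
TRN1 = {"fp16": 190, "fp8": 190, "hbm_gb": 32}
TRN2 = {"fp16": 667, "fp8": 1299, "hbm_gb": 96}
A100_FP16 = 312  # assumed from Nvidia's spec sheet

two_trn1_vs_a100 = 2 * TRN1["fp16"] / A100_FP16
print(f"2x Trn1 vs A100 at FP16: {two_trn1_vs_a100:.2f}x")
for key in ("fp16", "fp8", "hbm_gb"):
    print(f"Trn2 / Trn1 {key}: {TRN2[key] / TRN1[key]:.1f}x")

# FP8 cost per teraflops, per this story: which 90-day rental is cheapest?
fp8_cost = {"Trainium2": 3.72, "P6 HGX-B200": 3.91, "P6e GB200 NVL72": 4.57}
cheapest = min(fp8_cost, key=fp8_cost.get)
print(f"cheapest FP8 teraflops: {cheapest}")
```

At FP8, Trainium2 wins on raw cost per teraflops; once Blackwell drops to FP4, its cost per teraflops halves again, and Trainium2 has no matching format.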

Now, if you look at On-Demand pricing on AWS, the Trainium1 chips are still available out there, and they are considerably more expensive per teraflops than the Blackwell B200 instances that are rented on demand. Take a look:

What is immediately obvious in this table is that the ancient accelerator instances based on K80, V100, and A100 GPUs have very low hourly costs and therefore very low capital outlay, which looks attractive. But if you look at the cost of a teraflops of FP16 oomph, these are terrible in an economic sense, and they look even worse against new iron sold under the EC2 Capacity Block plans. And if you compare these ancient GPUs running in FP16 mode with Blackwells running in FP4 mode, it is downright silly to consider using this older iron except possibly in an absolute emergency.

Clearly, if you need to rent instances on demand, rent Blackwells and run in FP4 mode. If you do that, the cost of FP16 performance is 9 percent lower and with the downshifting of precision two gears, you can boost the performance by 4X and improve the bang for the buck by 4.4X.
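One way to see how a 9 percent cost advantage and a 4X precision gain compound to roughly 4.4X is sketched below. The interpretation is our assumption: we read "9 percent lower cost" as 1 / (1 - 0.09) more teraflops per dollar. Reading it instead as a flat 9 percent gain (4 times 1.09) also rounds to 4.4X, so the conclusion does not hinge on which reading is used.

```python
fp16_cost_reduction = 0.09  # "the cost of FP16 performance is 9 percent lower", per this story
precision_speedup = 4       # FP16 -> FP4 is two halvings of precision: 2 * 2

# Assumption: a 9 percent lower price for the same teraflops means
# 1 / (1 - 0.09) more teraflops per dollar, compounded with the FP4 gain.
bang_for_buck = precision_speedup / (1 - fp16_cost_reduction)
alt_reading = precision_speedup * (1 + fp16_cost_reduction)  # flat 9 percent gain

print(f"{bang_for_buck:.1f}x (alternate reading: {alt_reading:.1f}x)")
```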

Moore’s Law is only really alive in the guise of shrinking precision, not so much in shrinking transistors. FP2 anyone? And as some have said, there is no point in FP1. . . .

Slim code has its advantages. mb

It’s cool to see the Trainium2 holding their own against Hoppers and Blackwells in $/perf in this here rodeo!

Makes me wonder if the rumors about their GP-SIMD Engines being composed of parallel 1-bit in-memory-processing units, inspired by Connection Machines of the 1990s, are right … and how one might program those to great advantage!?

“ And as some have said, there is no point in FP1…”

I see what they did there…. 😉

Really interesting insights on the new AWS GPU systems! I’m curious how they compare in real-world applications versus the previous models. Looking forward to seeing more benchmarks!

But there is a point in FP1, of course it’s not floating but it’s still a point. This was all kicked around 40 years ago in expert systems days, when it was found that certainty weights on intermediate results were ineffective. There is nothing new under the sun.

As to the big charts, it looks like the prices were set linearly with the performance estimates, but the effective power is a product of many factors. That would make the new modules even more preferable, on this pricing. In fact so powerful that it adds up to much more available power today than two or five years ago. Enough to make overcapacity a real issue. IMHO.

So as FP precision moves ever closer to meaningless AND Nvidia’s market cap moves seemingly closer to infinity AND the useful life of a given Nvidia data center “GPU” generation seems to shrink…Why would any hyper-scaler want to buy them? Wouldn’t you hope that your peers installed enough of them for you to just rent them?

PS: I mentioned the T-shirt idea of “There’s no point in FP1” in a comment on said sage website a month+ ago…My just completed custom T-shirt design has at the top silhouette of a digital human brain, under that “(2B || !2B)”, lastly “That IS the question” // Think a modest bit of well known Shakespeare cast as C (OK the variable names may be poetic license)

One does have to wonder about the juxtaposition of a $4T market cap company pushing 4-bit floating point…Just seems odd.

I wouldn’t give two bits for FP2….