Being handed two endpoints and a compound annual growth rate between them over a specific amount of time is not as useful as it seems, at least not when you are trying to figure out what is happening at all of the points in between. And that is necessarily so because that is just how CAGR works. Or rather, doesn’t work.

There are many different lines that can be fit between those two endpoints – mathematically, it is an infinite number of possibilities, but here in the physical world when we are counting compute engines or servers or money, the probability distribution of numbers between those two endpoints is fairly constrained. Revenues don’t go up by 10X and then come back down in under five years, for example. Well, not usually.

But, no matter what, given two points and a CAGR over the usual five years, it all comes down to educated guesses at best to fill in the blanks.
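To make the point concrete, here is a minimal sketch with made-up numbers (neither path comes from AMD or Gartner). Both paths share the same first and last values, and therefore the same CAGR, yet they disagree badly in the middle years:

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoints."""
    return (end / start) ** (1.0 / years) - 1.0

# Two hypothetical five-year paths, in $ billions. The values are invented
# purely for illustration; only the endpoints are shared.
smooth = [100.0 * 1.5 ** n for n in range(6)]             # constant 50% growth
lumpy = [100.0, 110.0, 125.0, 300.0, 600.0, smooth[-1]]   # slow start, late surge

for name, path in (("smooth", smooth), ("lumpy", lumpy)):
    rate = cagr(path[0], path[-1], len(path) - 1)
    print(f"{name}: CAGR = {rate:.1%}, year-2 value = {path[2]:.1f}")
```

The CAGR only sees `path[0]` and `path[-1]`, so anything in between is invisible to it.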

We have been playing a bit of a cat and mouse game with AMD chief executive officer Lisa Su over the past several years as she has been revising and refining and now finally somewhat more fully revealing AMD’s prognostications for datacenter AI compute engine revenues over the next several years. This is the kind of prediction that Nvidia does not do publicly, so we think AMD should be commended for at least telling us what it thinks will happen in the AI compute engine market between the years of 2023 and 2028. And now, because if you give a mouse a cookie it wants a glass of milk, having a better sense of what AMD thinks of current and future revenues for the AI accelerator market in the datacenter, we want to know what AMD’s share of that might be.

We will get to that in a moment. But for now, let’s just review what Su has said for market projections since she first brought the topic up in June 2023.

At that time, during the Datacenter and AI Technology Premiere event where then-impending enhancements of AMD CPU and GPU compute engines were previewed, Su made this prediction:

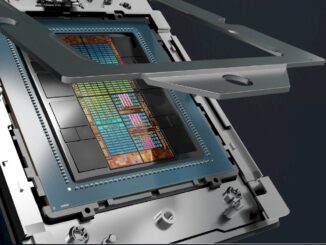

This was just as AMD was preparing to talk about the third generation “Antares” Instinct MI300 series of datacenter GPUs, which would come out in December of that year. If you squint and read the fine print on the chart above, it says “Datacenter AI Accelerator TAM: GPU, FPGA, and others” and that means exactly what it says. It does not mean CPUs with tensor or matrix accelerators, and it mostly means GPUs with a dose of Google TPUs, AWS Trainiums, and a pinch of Cerebras, SambaNova, Groq, and other things that might scratch the market’s surface a little. Microsoft’s Maia and Meta Platforms’ MTIA might make a dent or two – we shall see.

So, $30 billion in 2023, growing at a CAGR of more than 50 percent to more than $150 billion by 2027. These seemed reasonable to us at the time, given that the overall datacenter systems market was $236 billion in 2023, according to Gartner. (We did a deep dive on Gartner’s IT spending projections back in January.) This was out of a core IT market – systems, enterprise software, and IT services – that brought in $2.72 trillion in revenues.
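As a quick check on that arithmetic, here is a small sketch that compounds the $30 billion base at exactly 50 percent a year. The constant growth rate is our simplifying assumption; AMD only gave the two endpoints and said "more than" for both the rate and the 2027 figure.

```python
def project(start: float, rate: float, years: int) -> list[float]:
    """Values for year 0 through `years`, compounding at a constant rate."""
    return [start * (1.0 + rate) ** n for n in range(years + 1)]

# June 2023 forecast: $30 billion in 2023, CAGR of (at least) 50 percent.
june_2023 = project(30.0, 0.50, 4)   # 2023 through 2027, in $ billions

for year, value in zip(range(2023, 2028), june_2023):
    print(year, round(value, 1))
```

Four years of compounding at 50 percent lands just above $150 billion, which matches the headline number; the intermediate years printed here are only one of many paths consistent with it.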

In 2012, before the first AI boom that one might call the Machine Learning Era, core IT was just a smidgen above 35 percent of total IT spending, which includes spending on devices like PCs and smartphones as well as telecom services. But core IT spending started to accelerate in 2013 and is expected to crest at about 60 percent of total IT spending in 2025 in a core IT market that has expanded by a factor of 2.6X to $3.38 trillion, if Gartner’s prognostications are right. Overall IT spending has only grown by a factor of 1.55X, and that is because device and telecom spending, while growing during the coronavirus pandemic, has actually shrunk by 4 percent comparing 2012 actuals with 2025 projections.

Which, once again, just points out the difficulty of predicting what happens between any two end points, particularly as the number of time slices increases.

And then, the GenAI boom, the second wave of the AI revolution, took off in 2023 and the sales models started getting revised like crazy.

Only six months later, at the launch of those Antares MI300A and MI300X GPU accelerators, here is what Su & Co said were the projections for 2023, which was not yet technically finished, and 2027, which seems like a long time away unless you are waiting for your Nvidia “Hopper” and “Blackwell” GPU allocations so you can build foundation models.

So now datacenter AI accelerator sales in 2023 were going to be 50 percent bigger than projected only six months earlier, and would grow at a CAGR greater than 70 percent to be more than $400 billion by 2027.

Then, in October 2024, nearly a year later, Su put out this projection:

In 2023, which was long since over and could be characterized better, AI accelerator sales in the datacenter were indeed $45 billion, which means that AMD and whoever it is working with on market forecasts think they got it right for 2023. But now, the projection was out to 2028, and the CAGR cooled off to greater than 60 percent instead of greater than 70 percent.
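One way to line up the three forecast vintages is to back out the CAGR implied by each pair of headline endpoints. The sketch below treats the "more than" end-year figures as exact floors, which is our assumption, so the implied rates are rough consistency checks rather than AMD's own numbers.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """CAGR that takes `start` to `end` in `years` compounding periods."""
    return (end / start) ** (1.0 / years) - 1.0

# (2023 base, end-year floor, number of years), all in $ billions.
# The $500 billion figure for 2028 is the one Su cites for the October 2024 forecast.
forecasts = {
    "June 2023": (30.0, 150.0, 4),       # out to 2027
    "December 2023": (45.0, 400.0, 4),   # out to 2027
    "October 2024": (45.0, 500.0, 5),    # out to 2028
}

implied = {name: implied_cagr(*args) for name, args in forecasts.items()}
for name, rate in implied.items():
    print(f"{name}: implied CAGR of about {rate:.1%}")
```

Each implied rate sits close to the "more than 50, 70, and 60 percent" language attached to the corresponding forecast, which suggests the headline endpoints and growth rates are at least internally consistent.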

We took a stab at trying to figure out what might happen between those two end points in The World Will Eat $2 Trillion In AI Servers, AI Will Eat The World Right Back, and we are not going to go through it all again. The data will be in the chart below. Frankly, we need no longer worry about that because at the Advancing AI event last week in San Jose, Su gave us the chart that lets us fill in the blanks.

Take a gander at this:

Here is what Su had to say about the chart:

“So let’s talk a little bit about the market. When we were here last year, we said that we expected the datacenter AI accelerator TAM to grow more than 60 percent annually to $500 billion in 2028. And frankly, for many of the analysts and folks you know, at the time, said that seemed like a really big number. People were like, “Do you really think it can be that big, Lisa?” and I said, “Well, you know, that’s what we’re seeing.”

“And what I can tell you, based on everything that we see today, that number is going to be even higher, exceeding $500 billion in 2028. And most importantly, we always believed that inference would actually be the driver of AI going forward. And we can now see that inference inflection point with all the new use cases and reasoning models, we now expect that inference is going to grow more than 80 percent a year for the next few years, really becoming the largest driver of AI compute, and we expect that high performance GPUs are going to be the vast majority of that market because they provide the flexibility and programmability that you need. Models are continuing to evolve, and really, algorithms are moving so fast you want that programmability in your compute infrastructure now.”

Not only did Su talk about how the markets for AI inference would be distinct from AI training, but she filled in all of the data from 2023 through 2028, inclusive. She didn’t give us the numbers for the middle bits, but you can count pixels and get a reasonable approximation of the data behind the chart. Which we did in the table below:

There is a lot going on in this table. As always, the red bold italics are estimates made by The Next Platform to fill in gaps in the data. The data shown in blue bold italics are much better estimates that we made by counting the pixels in the chart that Su just showed last week. These are estimates, but not guesses made by having a hunch about what a curve should look like and how the global economy is going to behave.

What leaps out to us immediately is that AMD is expecting the market for AI accelerators in the datacenter not to grow as aggressively in 2025 as you might expect given all of the noise. It looks like people might wait for the 2026 machines in a lot of cases to make big investments. If you do the math on AI training versus AI inference, 2026 is the year when total inference accelerator spending will surpass total training accelerator spending. We do not think this is a coincidence. AI architectures are shifting from blurty models and the machines that do this well to reasoning models, which take different kinds of systems with larger memory domains and tighter coupling. We also think people know less expensive alternatives are around the corner, and they will wait for them to do their huge deployments.

The other thing that jumps out is that the market for AI accelerators in the datacenter is projected to be a lot more than $500 billion in 2028, and is closer to $600 billion. That is a 67.5 percent CAGR between 2023 and 2028.

To put it all in perspective, we did a rough approximation of the total systems spending that these AI accelerator numbers imply. We are using a multiple of 2.1 to get spending for full AI training systems (this is at the cluster level, including networking and storage), and 1.8 to get spending on AI inference systems. Perhaps this gap will be larger, perhaps there will be no gap at all. It remains to be seen. But, given this, that means $180 billion in AI accelerator sales this year will drive $351 billion in AI systems sales.
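The two multiples and the two totals are enough to back out the training versus inference split they imply for 2025. This is a sketch of that back-solve, not a figure AMD disclosed: it solves t + i = 180 and 2.1·t + 1.8·i = 351 for training (t) and inference (i) accelerator spending.

```python
TRAIN_MULT = 2.1   # systems dollars per accelerator dollar, training (from the text)
INFER_MULT = 1.8   # systems dollars per accelerator dollar, inference (from the text)

def split_accelerator_spend(accel_total: float, systems_total: float) -> tuple[float, float]:
    """Solve t + i = accel_total and 2.1*t + 1.8*i = systems_total for (t, i)."""
    training = (systems_total - INFER_MULT * accel_total) / (TRAIN_MULT - INFER_MULT)
    inference = accel_total - training
    return training, inference

# 2025 figures from the text, in $ billions.
training, inference = split_accelerator_spend(180.0, 351.0)
print(f"training ~ ${training:.0f}B, inference ~ ${inference:.0f}B")
```

Under these assumptions the 2025 accelerator spend splits roughly evenly between training and inference, and a small change in either multiple moves the implied split a lot because the two multiples are close together.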

This is against a total datacenter systems spending of only $405.5 billion projected by Gartner for 2025, mind you. Either AMD is wrong or Gartner is wrong. It is more likely that they are both wrong. Gartner is projecting $321 billion in AI system sales in 2028, which is less than the implied spending for 2025 for all kinds of AI systems in the datacenter.

For 2028, the Gartner data, which is sparse, implies around $589 billion in total datacenter systems spending, based on our own analysis of AI server spending and server spending in general and making some guesses about storage and networking. The AMD data revealed at the Advancing AI event last week implies AI system spending alone of around $1.13 trillion.

These are indeed all very big numbers. We just give them to you so you can ask your boss to invest more if you think that is valuable. We think that the AMD data does at least parse inference from training – although we think there will be a big gray area where systems do double duty. If we had to guess, we would say a third of the money will be spent on distinct AI training systems, half of the money on distinct inference systems, and the remaining machines are mostly being used for inference but sometimes for training. That fits the math shown above.

Now here is the fun part. Assume a fairly pessimistic forecast for 2025, where AMD will only get maybe $6.1 billion in sales – it could be a lot more than that, but AMD is not giving out much in the way of a datacenter GPU revenue forecast these days – against that $180 billion TAM opportunity, which works out to about 3.4 percent share. This is not good, but that is what happens when everyone knows you have much better products coming next year.

Just for fun, assume that AMD starts to make real market share gains in GPUs and its partners do so with systems. Maybe 7.5 percent share in 2026, maybe 10 percent share in 2027, and maybe 15 percent share in 2028. If AMD’s revenue projections for the market at large are correct, then by 2028, AMD could be kissing $90 billion in GPU sales from the datacenter.

That’s almost four times as large as the whole company was in 2024.

And where would those fantastical prognostications leave Nvidia in 2028?

With nearly 85 percent market share of a very big number.
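For anyone who wants to rerun the share scenario, here is a minimal sketch using only the figures quoted above: the $45 billion 2023 base, the 67.5 percent CAGR out to 2028, the $180 billion 2025 TAM, and the hypothetical 15 percent AMD share. Handing everything that is not AMD to a single "rest of market" bucket is a simplification.

```python
TAM_2023 = 45.0           # $ billions, from the text
TAM_2025 = 180.0          # $ billions, from the text
CAGR_2023_2028 = 0.675    # from the text

# 2028 market size implied by the 2023 base and the five-year CAGR.
tam_2028 = TAM_2023 * (1.0 + CAGR_2023_2028) ** 5

amd_2025 = 6.1                        # pessimistic 2025 estimate, $ billions
amd_share_2025 = amd_2025 / TAM_2025

amd_share_2028 = 0.15                 # hypothetical share from the scenario
amd_2028 = amd_share_2028 * tam_2028
rest_2028 = tam_2028 - amd_2028       # everyone who is not AMD

print(f"2028 TAM ~ ${tam_2028:.0f}B")
print(f"AMD 2025 share ~ {amd_share_2025:.1%}")
print(f"AMD 2028 ~ ${amd_2028:.0f}B, rest of market ~ ${rest_2028:.0f}B")
```

Swapping in a different 2028 share or CAGR shows how sensitive the AMD number is to both inputs.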

I reckon this here is a right Wild West gold rush and those selling the shovels stand to benefit big time (good for them)! I mean, there’s the OpenAI-SoftBank-Oracle $500B Stargate, the Amazon-Google-Meta-Microsoft looking at $320B for AI in 2025 alone, the EU InvestAI and AI gigafactories €220B, Japan’s $65B push for chips and AI, the PRC’s $8.2B early-stage AI investment fund and Alibaba-Bytedance-Honor looking at $80B to throw in, so no shortage of partygoers there.

The bold red $351B and $589B for ’25-’26 should be no sweat I guess but whether what follows is a frantically increasing gallop or a trot may depend on how much gold was actually dug up with those pretty-penny silver-dollar spades … or not, if gold fever remains a factor, irrespectively!

I think your 2025 number is way too pessimistic. Oracle tagged their initial MI350 order of 30k at around $2B, and now upgraded that to a zetascale cluster of 131k machines. Just by itself that order is probably worth $8-$10B. AMD started shipping beginning of June, and that first cluster will be fully deployed in two months, so good chance the supercluster is completed by year’s end. And that’s just Oracle.

I agree that it sounds low. And when I did that estimate three months ago, it was the absence of any guidance at all that compelled me to shoot low. It is still bigger in 2025 than in 2024.