Current US president Joe Biden and once and future president Donald Trump do not agree on much. But they absolutely agree that advanced semiconductor manufacturing techniques, as well as chips made with those processes to host HPC and AI applications, should not be easily accessible to the military in the People's Republic of China.

And so, the Bureau of Industry and Security at the US Department of Commerce has put out a whole new set of rules that, among other things, restrict the export of HBM stacked DRAM to the Middle Kingdom.

The wonder is not that this has happened, but rather that it took so long to happen given how critical HBM is to the functioning of certain high performance CPUs as well as just about every kind of datacenter accelerator (such as GPUs and various custom ASICs such as TPUs and RDUs) that is used for AI training and inference as well as for HPC simulation and modeling.

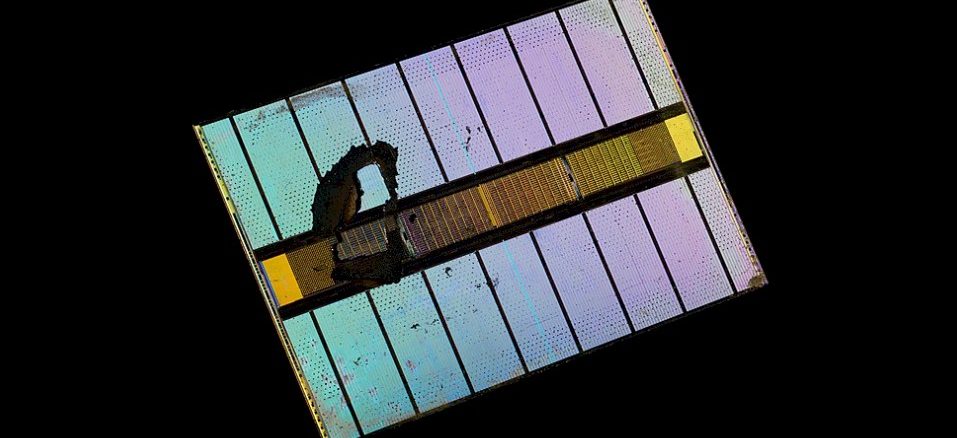

It is not an exaggeration to say that the market for GPU accelerators is gated to a large extent by the availability of HBM memory, which is linked to accelerator chips through various means, usually with the family of 2.5D CoWoS interposers from Taiwan Semiconductor Manufacturing Co. Manufacturing accelerator chips is fairly easy by comparison, even if you compose them out of chiplets with die-to-die (D2D) interconnects, sometimes called chip-to-chip (or C2C) interconnects, to create compute engines that are larger than the 800 mm2 area limit of the reticle of a modern immersion lithography machine.

The Commerce Department has finally figured out that curbing exports of GPUs and other accelerators with certain raw floating point performance and certain HBM memory capacities and bandwidths is not enough, hence the new rules to curb the distribution of the HBM memory itself. The original curbs on AI and HPC accelerators kept certain devices that have military uses from getting into China, and curbs on the export of advanced chip making technologies kept Chinese organizations from etching advanced compute engines themselves.

But as several Chinese exascale-class supercomputers show, you can do a lot with 14 nanometer processes on chips with DDR4 main memory, as both the “Tianhe-3” system and the “OceanLight” system appear to do. Both of these were installed in 2021. As far as Hyperion Research knows, China installed a new exascale machine each year in 2022, 2023, and 2024, which makes five machines, and there is an outside chance that what the kerfuffle is all about is that one or more of these machines used an accelerator with HBM memory instead of DDR5 memory. China is expected to install another one or two exascale machines in 2025, and two more in 2026. We think these machines were almost certainly intended to use HBM memory.

That plan may now have to change. If China can’t get HBM memory, then that memory will be available for other uses, which will benefit Nvidia, AMD, and those making their own AI accelerators. More supply will be available for them to play with. Which is the real upside as far as we are concerned.

Under the new BIS export rules, which you can see here, HBM memory exported to China has to have a memory bandwidth density of less than 3.3 GB/sec per mm2, which doesn’t sound very high at all. But then again, we don’t know the specific area figures for HBM memory stacks used in the calculations. What we do know is that a DRAM chip used in HBM memory is somewhere between 100 mm2 and 125 mm2 in size. HBM2E topped out at 461 GB/sec per stack, which divided by 100 mm2 would be a memory bandwidth density of 4.6 GB/sec per mm2 and divided by 125 mm2 would be a memory bandwidth density of 3.7 GB/sec per mm2. By our estimation, HBM2, which is now eight years old, would make the cut at 3.1 GB/sec per mm2. And China can forget about HBM3, HBM3E, and HBM4, if our estimations of what the Commerce Department is talking about are correct.
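For those who want to check the bandwidth density arithmetic above, here is a minimal Python sketch. It uses only the figures quoted in this story: the 3.3 GB/sec per mm2 limit, the 461 GB/sec HBM2E peak, and our estimated 100 mm2 to 125 mm2 chip area. The function names are ours and purely illustrative, and the area that BIS actually uses in its calculation is an assumption on our part.

```python
# Figures quoted in the article. The chip areas are estimates, not BIS numbers.
BIS_LIMIT_GBS_PER_MM2 = 3.3      # exported HBM must come in under this density
HBM2E_STACK_GBS = 461.0          # peak HBM2E bandwidth per stack
CHIP_AREAS_MM2 = (100.0, 125.0)  # assumed range for the chip footprint


def bandwidth_density(stack_gbs: float, area_mm2: float) -> float:
    """Return memory bandwidth density in GB/sec per mm2."""
    return stack_gbs / area_mm2


def under_limit(stack_gbs: float, area_mm2: float,
                limit: float = BIS_LIMIT_GBS_PER_MM2) -> bool:
    """True if the stack falls strictly below the density limit."""
    return bandwidth_density(stack_gbs, area_mm2) < limit


def max_stack_bandwidth(area_mm2: float,
                        limit: float = BIS_LIMIT_GBS_PER_MM2) -> float:
    """Bandwidth (GB/sec) at which a stack of this area hits the limit."""
    return limit * area_mm2


if __name__ == "__main__":
    for area in CHIP_AREAS_MM2:
        density = bandwidth_density(HBM2E_STACK_GBS, area)
        verdict = "under" if under_limit(HBM2E_STACK_GBS, area) else "over"
        ceiling = max_stack_bandwidth(area)
        print(f"{area:.0f} mm2: HBM2E at {density:.1f} GB/sec per mm2 "
              f"({verdict} the limit); ceiling is {ceiling:.1f} GB/sec")
```

Under these assumptions, HBM2E lands over the limit at both ends of the area range, and a stack would have to stay below roughly 330 GB/sec at 100 mm2, or roughly 412 GB/sec at 125 mm2, to qualify for export.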

The new rules apply to HBM memory made in the United States – that is you, Micron – as well as that made overseas – that would be you, SK Hynix and Samsung. They are effective today with a compliance deadline of December 31 for certain components, which were not named.

In addition to the HBM memory restrictions, another 140 organizations in China were added to the entity list that prevents them from getting HBM memory, advanced accelerators, or advanced chip making gear because the United States wants to not just cut off supplies of chippery, but also China’s ability to make such chips itself. (You can see that updated list here.)

The US government is worried about the production of high-end compute devices not only because it helps the Chinese military design advanced weapons, but also because it helps in the development of AI technology that is, in and of itself, an important weapon as well as a part of advanced weapon systems these days, including cyberwarfare.

Having said all that, as we have said many times, all that this does is buy the United States and its allies a little time. In the long run, with the world’s second largest economy and one that is increasingly self sufficient, China can afford to build its own high performance systems, top to bottom and including things that look like HBM and CoWoS.