Having Forrest Norrod as the general manager of its datacenter business has been an invaluable asset for AMD as it re-engaged in the datacenter over the past decade. Norrod ran the custom server business at Dell for many years after working on X86 processors at Cyrix and being a development engineer at Hewlett Packard.

In the wake of revelations about the impending “Turin” Epyc server CPUs and the Instinct GPU roadmap at Computex 2024, we had a playful chat with Norrod about the market for CPUs and GPUs in the datacenter these days.

We talked about the competitive threat of Arm server CPUs and joked about the lawsuit that would emerge if AMD decided to clone an Nvidia GPU so it could run the entire Nvidia software stack and thus remove the main barrier of adoption for AMD GPUs. But that was not really a serious part of the conversation.

What was serious was the discussion about the need for very powerful CPUs and GPUs in the datacenter, and why the appetite for capaciousness in compute engines is ravenous and getting even hungrier with time. And how buying powerful CPUs can make space and power in the datacenter for GPU-based AI systems.

Timothy Prickett Morgan: Let’s cut right to the chase scene after the announcement of Intel’s first “Sierra Forest” Xeon 6 CPUs and your revelations about the future “Turin” line of Epyc CPUs two weeks ago. It looks to me like until Intel has anything close to parity on process and packaging with Taiwan Semiconductor Manufacturing Co, they can’t catch you on CPUs. Is the situation really that simple until 2025 or 2026?

Forrest Norrod: I don’t want to handicap Intel’s capabilities on process. Pat Gelsinger has got a very aggressive plan and we always assume they are going to do what they say. And so, all we can do is run as fast as we possibly can on both the design and the process of TSMC.

I really like our chances with TSMC. I think they are an amazing partner and an amazing execution machine, and we’re going to keep using their most advanced process for each generation. I like our chances of staying at the bleeding edge of process with that.

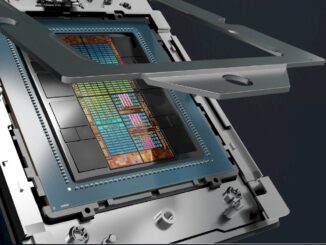

And likewise, on the design side, we are not slowing down one little bit. We are running as fast as we possibly can. You are going to continue to see design innovation and packaging and assembly innovation from us across all product lines. I can’t control what an Intel does. I just have to assume they are going to wake up tomorrow with badass boots and a vorpal sword and fight. I have to assume that Intel is going to always bring their best from this day forward.

TPM: One of the themes that we have had running in The Next Platform for the last year is that as the hyperscalers and cloud builders develop their own Arm processors, they will create a second, lower cost band of compute. Just as we saw with proprietary minicomputers in the wake of mainframes, and then Unix machines, and then X86 servers in their wake. There are still tens of millions of customers who will use X86 machinery for a long time, of course, just like there are thousands of customers who use big, wonking, expensive mainframes.

Our observation is that these price bands are separated, and they all stay relatively the same distance from each other and follow a Moore’s law curve down from their entry points. And what that means in the mid-2020s is that X86 is becoming the next legacy platform and Arm is the new upstart, and some day hence perhaps RISC-V will be the new upstart and Arm becomes the next legacy.

Do you think that’s an accurate description of what happens and what is still happening?

Forrest Norrod: That’s one potential way this could play out. At the end of the day, even for the internal properties, the question is: Can Arm get close enough and stay close enough in terms of price/performance and performance per watt, as seen by the mega datacenters and their end customers that justifies the continued investment in their own chips? Because the only reason this makes sense is if you can get that and maintain that.

I have made this observation to you before that the price of the CPU is 25 percent to 30 percent of the price of the server. If the CPU is 25 percent slower than the alternative, it doesn’t matter if it’s free, you’re losing TCO at a system level. By the way, that’s close to the hurdle that we’re talking about. Arm or an alternative has to have 20 percent to 25 percent better performance than the alternative or have that much lower cost or there is not enough room to have a real TCO advantage.

Now, you may still do it for other reasons – you may still do it for fear of missing out, for having an alternative, for keeping Intel and AMD honest.
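Norrod’s TCO arithmetic can be sketched in a few lines. This is a rough illustration and not AMD’s model: the function name, the normalization of the baseline server price to 1.0, and the `opex_per_server` figure (lifetime power, space, and operations cost per server) are all assumptions made for the example. Only the 25 percent CPU share and the 25 percent performance gap come from the conversation.

```python
def cost_per_performance(cpu_share, cpu_discount, relative_perf, opex_per_server=0.0):
    """Cost per unit of work, relative to a baseline server priced at 1.0.

    cpu_share:       fraction of the baseline server price that is the CPU
    cpu_discount:    1.0 means the alternative CPU is free, 0.0 means same price
    relative_perf:   throughput of the alternative versus the baseline (1.0)
    opex_per_server: hypothetical lifetime operating cost per server,
                     as a fraction of the baseline server price
    """
    server_price = 1.0 - cpu_share * cpu_discount
    return (server_price + opex_per_server) / relative_perf


# Purchase price only: CPU is 25 percent of the server.
baseline = cost_per_performance(0.25, 0.0, 1.0)          # 1.0
free_but_slower = cost_per_performance(0.25, 1.0, 0.75)  # 0.75 / 0.75 = 1.0

# Add an assumed operating cost of half the server price per server.
baseline_with_opex = cost_per_performance(0.25, 0.0, 1.0, opex_per_server=0.5)
free_with_opex = cost_per_performance(0.25, 1.0, 0.75, opex_per_server=0.5)

print(baseline, free_but_slower)
print(baseline_with_opex, free_with_opex)
```

On purchase price alone, a free CPU that is 25 percent slower only breaks even when the CPU is 25 percent of the server price. Once any per-server operating cost is added, the slower machine costs more per unit of work, because more servers are needed to do the same job.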

TPM: Here’s another observation I want to throw at you. For the longest time most server buyers bought middle bin parts and stayed away from the high end parts or even the low end of the high bin and the upper part of the middle bin.

If I was facing space and power constraints in the datacenter and I had a large fleet of generic infrastructure servers based on X86, I would be buying the N-2 or N-3 parts, and maybe sometimes N-1, and try to save power per core and try to keep that power savings to dedicate to AI projects. I would also be keeping machines in the field for five, six, or seven years, which would also argue for buying higher bin CPUs.

Forrest Norrod: I totally agree with everything you just said. And if you look at the enterprise side, that is starting to happen. By the way, that is what’s been going on in the cloud for a long time. At AMD, for example, our highest volume part to the clouds is our top end part. And it’s been that way for years.

The other reason, which is under-appreciated, is that chiplets completely upturn the old assumptions around managing the cost of the stack. The yields on these chiplets are so damn high. The vast majority of the cost of our 96-core “Genoa” – let’s not even talk about “Bergamo” and let’s keep this simple – versus the 64 core versus the 32 core is just a few CCDs different. That’s it. Everything else is the same.

TPM: Well, not the price to customer. . . . [Laughter]

Forrest Norrod: Now, when Intel was using monolithic dies, that was not at all true. Yielding the 64-core “Emerald Rapids” or the 60-core “Sapphire Rapids” or heaven forbid the 40-core “Ice Lake” was like finding hen’s teeth. And so the volume bin for Intel was several clicks down. But for us for a long time, the vast majority of our volume, certainly in the cloud, has been the top bin part.

And on the enterprise side, we do see people starting to move this way, particularly with the urgency to free up space and power for AI, we do see much more attention on consolidation.

The other thing that’s happened is VMware’s new pricing strategy, which has been greeted by the market with a number of different reactions. VMware pricing has now shifted entirely to a per-core license, so there’s no longer an incentive to buy a lower end part. Companies used to have to buy one license for up to 32 cores, and then you buy another one, if you go from 33 to 64 cores, and so on. If VMware is just charging purely by core, it’s cheaper to buy more cores in one server than it is to break that same number of cores over multiple servers.
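The licensing shift Norrod describes can be sketched as follows. This is a simplified illustration based only on his description: the function names are made up for the example, the old model is assumed to be one license per CPU for each block of up to 32 cores, and real VMware terms (such as minimums or bundles) are not modeled.

```python
import math


def licenses_old(cores_per_cpu, cpus, cores_per_license=32):
    """Old model as described: one license per CPU per block of up to 32 cores."""
    return cpus * math.ceil(cores_per_cpu / cores_per_license)


def licensed_cores_new(cores_per_cpu, cpus):
    """New model as described: pay purely per core."""
    return cores_per_cpu * cpus


# Old model: crossing a 32-core boundary costs a whole extra license.
for cores in (24, 32, 33, 48, 64, 96):
    print(cores, "cores per CPU ->", licenses_old(cores, cpus=1), "license(s)")

# New model: 192 cores cost the same to license in one server or three.
one_big_server = licensed_cores_new(cores_per_cpu=96, cpus=2)
three_small_servers = 3 * licensed_cores_new(cores_per_cpu=32, cpus=2)
print(one_big_server, three_small_servers)
```

Under the per-core assumption, the license bill depends only on the total core count, so the choice between one dense server and several smaller ones comes down to hardware, space, and power.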

TPM: Let’s shift and talk about UALink and the need for modestly sized, modestly performing AI clusters in the enterprise.

When I think about AI systems being built for enterprises – not hyperscalers and cloud builders – and the model sizes they will likely deploy in production, then the cluster aggregate performance that the hyperscalers and clouds had built a number of years ago to do tens or hundreds of billions of parameters will be good enough because they are going to use a pretrained model and retrain it or augment it. Enterprises have fairly small datasets and more modest parameter needs than what the AI titans are trying to do as they push towards artificial general intelligence.

Is that a reasonable prognostication of what will happen in the enterprise?

Forrest Norrod: You know, I honestly am not sure. I will tell you that some people that I know and that I respect a great deal agree with you completely. Other people that I know that I respect a great deal are like – no way. They say that the capability of the models continues to grow as you get larger and larger. It shows up in more nuanced ability to reason and to handle situations.

That’s the argument that the two sides make. Here’s an analogy: Not all jobs require advanced degrees today, and the same things can be true for AI. Plenty of jobs can be augmented or automated at much lower levels.

TPM: Do you think the world can make enough GPUs for the second scenario where everybody wants huge models with large parameter counts?

Forrest Norrod: I think so. Because, you know, candidly, even for the very largest models you’re talking about, it’s difficult for me to see inference models that are greater than, say, a few racks. Worst case. And I think, because there’s such an incentive, the overwhelming majority of even the largest models are going to fit into a node for inference.

But some of the training clusters that are being contemplated are truly mind boggling. . . .

TPM: What’s the biggest AI training cluster that somebody is serious about – you don’t have to name names. Has somebody come to you and said with MI500, I need 1.2 million GPUs or whatever.

Forrest Norrod: It’s in that range? Yes.

TPM: You can’t just say “it’s in that range.” What’s the biggest actual number?

Forrest Norrod: I am dead serious, it is in that range.

TPM: For one machine.

Forrest Norrod: Yes, I’m talking about one machine.

TPM: It boggles the mind a little bit, you know?

Forrest Norrod: I understand that. The scale of what’s being contemplated is mind blowing. Now, will all of that come to pass? I don’t know. But there are public reports of very sober people contemplating spending tens of billions of dollars or even a hundred billion dollars on training clusters.

TPM: Let me rein myself in here a bit. AMD is at more than 30 percent share of CPU shipments into the datacenter and growing. When does AMD get to 30 percent share of GPUs? Does the GPU share gain happen quicker? I think it might. MI300 is the fastest ramping product in your history, so that begs the question as to whether you can do a GPU share gain in half the time that it took to get the CPU share. Or is it just too damn hard to catch Nvidia right now because they have more CoWoS packaging and more HBM memory than anyone else?

You could just do a bug-for-bug compatible clone of an Nvidia GPU. . . .

Forrest Norrod: Look, we’re going to run our game as fast as we possibly can. The name of the game is minimizing friction of adoption. Look, Nvidia is the default incumbent. And so it’s the default in any conversation that people have had to this point. So we have to minimize the friction to adoption of our technology. We can’t quite do what you suggested.

TPM: It would be a wonderful lawsuit, Forrest. We would all have so much fun. . . .

Forrest Norrod: I’m not sure we have the same definition of fun, TPM.

TPM: Fun is exciting in a terrifying kind of way.

Forrest Norrod: But seriously. We’re going to keep making progress in the software. We’re keen to keep making progress on the hardware. I feel really good about the hardware, I feel pretty good about the software roadmap as well – particularly because we have a number of very large customers that are helping us out. And it’s clearly in their best interest to promote an alternative and to get differentiated product for themselves as well. So we’re going to try to harness the power of the open ecosystems as much as we possibly can, and grow it as fast as we can.

“I have made this observation to you before that the price of the CPU is 25 percent to 30 percent of the price of the server. If the CPU is 25 percent slower than the alternative, it doesn’t matter if it’s free, you’re losing TCO at a system level.”

By his own logic then why would anyone buy the AMD MI Series?

Because Nvidia is supply constrained no matter what people are muttering about. If the world needs 3 million H100 equivalents but there are only 2 million – I am just making those numbers up – then any H100 equivalent, even one with a different architecture, can sell for an H100 compute and memory price. And they are selling. AMD will sell $5 billion in MI300X and MI300A GPUs this year, more if they can get them made.

CPUs are not supply constrained and in high demand like GPUs are.

3 reasons I can think of:

1. GPUs account for a much larger proportion of total server cost than CPUs do in traditional servers.

2. MI300 is actually competitive or even ahead in some workloads. It has more compute, more memory capacity and bandwidth. The potential is there and software is decent for certain workloads as well.

3. Hyperscalers hate being at the mercy of a single supplier. They want alternatives and are willing to dedicate resources to make sure alternatives become viable.

All true. But even if the MI300X was worse on performance and had less memory, AMD could still sell them at this point for a proportionately smaller price.

Ah-ha! Having done very well based on their bug-for-bug compatible architectural license for x86, could AMD execute similarly with CCD-baked chips under an architectural license for CUDA cores? … fascinating question of contemporary computational gastronomy (and kung-fu rodeo, with some competitive high-seas fishing mixed-in for good measure, as nominally intimated some time ago by hard-driving sea-gate barracudas, that naturally hunt by ambush)!

But seriously, the Top500 County Fair of local librarians provided book-ends of 2.8 and 271 GF/s/core (from HPCG bullriding, to HPL Rpeak BBQ) for the finesse of barracuda grasshoppers, of the Swiss cheese grater persuasion (Alps’ GH200), and a rather similar BBQ score of 249 GF/s/core for the instinctual kung-fu tango of the swashbuckling pirate of early delivery (ElCapitan, MI300A). I’d say they can stick to their guns, cannonballs, incense, and forked caudal fins at this rate … great fun shall be had either way in this high-seas rodeo kung-fu kitchen ballet of HPC-AI-BBQ gastronomy! 8^p

“Look in video” – was that supposed to mean vendor lock-in?

No, it was “Look, Nvidia” although your interpretation is funnier…..

Hi Timothy, is the MI500 you refer to a typo and did you mean MI300?

“TPM: What’s the biggest AI training cluster that somebody is serious about – you don’t have to name names. Has somebody come to you and said with MI500, I need 1.2 million GPUs or whatever.”

No, I was talking in the future and he understood what I meant.

Great conversation.

Can’t wait to see if Broadcom, Arista and AMD will take a (big?) bite of Nvidia revenue.

Looking forward to AMD’s Q2, to see if they can turn those MI300 GPUs into a big margin/shipping item.

From what I’ve seen, most of Nvidia’s competitors are working really hard to make sure every customer can switch their equipment from Nvidia without the need to buy a whole new infrastructure… And Nvidia is working hard to make sure they have to!

And an analysis of the equipment, power consumption (versus homes, etc.) and price of a fully equipped 1.2 million MI500 cluster would be great/fun to see.

In two or three years those projects will for sure materialise (Microsoft, Oracle, Google, Grok, Tesla, Apple and co).

Maybe some food for next article: https://www.etched.com/announcing-etched

Will the market be disrupted by this YAAS (Yet Another AI Startup) and by a traditional player?

Yeah, I just saw that, too. I am chasing it.

“THE APPETITE FOR DATACENTER COMPUTE IS RAVENOUS” is a truism and is not even interesting. Why not entitle the piece “A Conversation With Forrest Norrod”? That is interesting. More people would click in to it.

I had already used that title, to be honest.