All of the major HPC centers of the world, whether they are funded by straight science or nuclear weapons management, have enough need and enough money to have two classes of supercomputers. They have a so-called capability-class machine, which stretches the performance envelope, and a capacity-class machine, which plays an eternal game of Tetris with hundreds or thousands of users who need to run much smaller jobs.

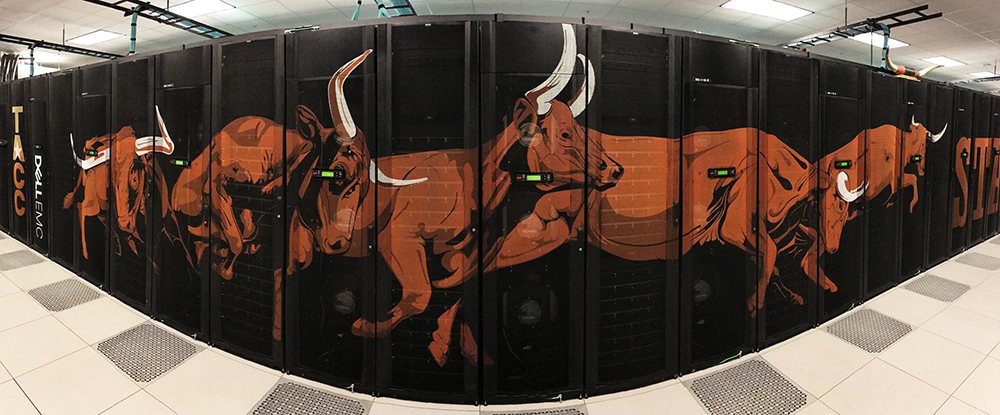

The Texas Advanced Computing Center at the University of Texas at Austin operates the flagship systems for the US National Science Foundation, and it is upgrading its capacity system and looking ahead to what its next capability system, code-named “Horizon,” might be.

In the summer of 2018, the NSF awarded a $60 million contract to Dell to work with Intel to build “Frontera,” an all-CPU system that uses Intel’s “Cascade Lake” Xeon SP CPUs and Nvidia’s 100 Gb/sec HDR InfiniBand interconnect in 8,008 nodes to deliver a peak 31.8 petaflops of performance at FP64 double precision. This is the largest academic supercomputer in the world, and it is widely expected that Horizon will be as well.

Dan Stanzione, associate vice president for research at UT and executive director of TACC, does not want to talk much about Frontera and not at all about Horizon today, but he does want to talk about the successor to Stampede2, the capacity-class machine in its fleet of systems that is used by thousands of researchers all over the world to run their HPC codes. Stampede3 will be a machine composed of many different Intel CPUs across different generations, and in this sense it is a hybrid machine just like its predecessors, Stampede and Stampede2. However, Stampede3 will also have experimental nodes outfitted with “Ponte Vecchio” Max Series GPU accelerators, which is a first for the Stampede line of machines.

Stampede2 was expanded and upgraded over time with many different styles of compute, including nodes based on Intel’s “Knights Landing” Xeon Phi many-core processors, “Skylake” Xeon SP, and “Ice Lake” Xeon SP processors. The initial phases of the Stampede2 contract cost $30 million, and an additional $24 million award for operations and maintenance costs was added later as it was expanded in both capacity and time. The Xeon Phi processors were the first phase of Stampede2 to be installed in late 2016, with 4,200 single-socket nodes and a 100 Gb/sec Omni-Path interconnect from Intel lassoing them together to share work using MPI. A few months later in early 2017, 1,736 dual-socket Skylake nodes went in and the Omni-Path network was extended to corral them in. Stampede2 was expected to be sunsetted in September 2022, but in February last year the NSF decided to extend the life of Stampede2 by swapping out 448 of the Xeon Phi nodes and swapping in 224 nodes using the Ice Lake processors, which had more than 2X the performance and nearly 3X the memory of those Xeon Phi nodes. In its final instantiation, Stampede2 weighed in at 18.3 petaflops at FP64 precision across an aggregate of 367,024 cores.

At the time of the last upgrade to Stampede2, the machine’s capacity was distributed through the Extreme Science and Engineering Discovery Environment (XSEDE) program, which ended in August 2022 and which has been replaced by the NSF’s Advanced Cyberinfrastructure Coordination Ecosystem: Services and Support, or ACCESS, program. From late 2016 through early 2022, Stampede2 had run more than 9 million simulation and data analysis jobs and the machine had an uptime of 98 percent and a core utilization rate of more than 96 percent. Through this week, Stampede2 has run 10.8 million jobs for over 11,000 users in over 3,000 funded projects. Just for the sake of comparison, the Frontera system has run over 1.1 million jobs and has around 60 projects at any given time with about 150 projects total, according to Stanzione.

The Stampede3 contract is for a mere $10 million, which just goes to show you that there are still Moore’s Law forces at work even if it does take more time for them to have an effect. Luckily, supercomputers are being required to stay in the field for longer (for budgetary reasons as much as technical ones), so the timings are still sort of matching up for the upgrade cycles.

Just like Stampede2 had several sub-upgrade cycles and kept the more recent nodes in play as the oldest ones were swapped out for shiny new gear, Stampede3 is going to make use of a mix of existing and new nodes to build a new capacity-class system that occasionally also does capability-class work like Frontera. (As we were talking to Stanzione, ahead of the shutdown of many of the oldest Knights Landing nodes in Stampede2, there was a 2,000-node job running on the system, which is a big job by any standard.)

On July 15, all of the Knights Landing nodes were shut down to make way for 560 nodes based on the “Sapphire Rapids” processors with HBM memory – what Intel calls the Max Series CPU line. The two-socket nodes used in this new partition use the 56-core Max CPU, which runs at 1.9 GHz, and have 128 GB of very fast (1.2 TB/sec) HBM2e memory across those 112 aggregate cores. That is a little bit better than 1 GB per core, which ain’t much in terms of capacity, but it makes up for it with speed that is about 4X that of regular DDR5 main memory. The Max CPU nodes have no DDR5 main memory attached to them, which would boost capacity a lot and bandwidth a little, because adding it would cost around $4,000 per node, according to Stanzione.

Stampede3 keeps 1,064 of the Skylake Xeon SP nodes, which have a pair of 24-core CPUs running at 2.5 GHz and 192 GB of main memory across the 48 cores. That is the traditional 4 GB per core that we see in the HPC space, but the memory bandwidth is about a quarter of what the HBM2e memory delivers. Stampede3 will also keep the 224 existing Ice Lake nodes that were added back in 2021, which have a pair of 40-core CPUs running at 2.3 GHz and 256 GB per node, which works out to 3.2 GB per core. It will be interesting to see how the interplay between memory capacity, memory bandwidth, and flops plays out across the different parts of the Stampede3 machine.
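The memory-per-core figures follow directly from the node configurations given above. This minimal sketch just divides node memory by the node's core count for each CPU partition; the partition labels are ours, not TACC's.

```python
# Memory capacity per core for the three CPU partitions of Stampede3,
# using the node configurations quoted in the article.
partitions = {
    # label: (sockets per node, cores per socket, GB of memory per node)
    "Sapphire Rapids Max": (2, 56, 128),
    "Skylake": (2, 24, 192),
    "Ice Lake": (2, 40, 256),
}


def gb_per_core(sockets: int, cores_per_socket: int, gb_per_node: int) -> float:
    """Return memory capacity per core in GB for one node configuration."""
    return gb_per_node / (sockets * cores_per_socket)


for name, cfg in partitions.items():
    print(f"{name}: {gb_per_core(*cfg):.2f} GB per core")
```

That works out to about 1.14 GB per core on the Max CPU nodes, 4 GB on Skylake, and 3.2 GB on Ice Lake.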

We expect a lot of HPC users will want the Max CPU nodes and the HBM2e memory because of the performance boost they give.

“We did side-by-side comparisons of Sapphire Rapids with DDR5 memory versus Sapphire Rapids with HBM2e,” Stanzione tells The Next Platform. “And depending on the code, we saw anywhere from a 1.2X to a factor of 2X or more performance improvement just based on the memory technology. The average was 60 percent or 70 percent improvement over a regular Sapphire Rapids. That means versus a Cascade Lake CPU on Frontera or a Skylake CPU on Stampede2 it’s like a factor of 5X per socket improvement.”

In theory, this might mean that TACC can push more work through the Max Series CPU part of the machine, which will weigh in at nearly 4 petaflops of peak FP64 performance. Nearly half of the aggregate cores in the Stampede3 machine – 45.5 percent – are in these HBM2e nodes. But their 4 petaflops might be a more usable 4 petaflops, and this is an important distinction.
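The “nearly 4 petaflops” figure can be reproduced with the usual peak-flops product of nodes, cores, clock, and flops per cycle. This is a sketch under our own assumptions: it uses the 1.9 GHz base clock (actual AVX-512 clocks may differ) and assumes 32 FP64 flops per cycle per core, that is, two AVX-512 FMA units each doing 8 double-precision multiply-adds. The 137,952 total is the full-machine core count cited for Stampede3.

```python
NODES = 560
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 56
CLOCK_HZ = 1.9e9           # base clock quoted for the 56-core Max CPU
FP64_FLOPS_PER_CYCLE = 32  # assumption: 2 AVX-512 FMA units x 8 doubles x 2 flops per FMA
TOTAL_STAMPEDE3_CORES = 137_952  # full-machine core count cited for Stampede3


def peak_fp64_pflops(nodes: int, sockets: int, cores: int,
                     clock_hz: float, flops_per_cycle: int) -> float:
    """Theoretical peak FP64 throughput in petaflops."""
    return nodes * sockets * cores * clock_hz * flops_per_cycle / 1e15


cores = NODES * SOCKETS_PER_NODE * CORES_PER_SOCKET
peak = peak_fp64_pflops(NODES, SOCKETS_PER_NODE, CORES_PER_SOCKET,
                        CLOCK_HZ, FP64_FLOPS_PER_CYCLE)
share = cores / TOTAL_STAMPEDE3_CORES

print(f"{cores:,} cores, {peak:.2f} PF peak FP64, {share:.1%} of all Stampede3 cores")
```

Under those assumptions the partition comes to 62,720 cores and about 3.81 petaflops, which is 45.5 percent of the machine’s cores.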

“If we can put two Sapphire Rapids CPUs side by side, and the one with HBM goes twice as fast, the peak flops don’t change much between a 60-core and a 56-core chip, but the flops you get change a lot,” Stanzione explains. “The realizable fraction of peak is much better with high bandwidth memory. So for a lot of our bigger MPI codes that are bandwidth-sensitive, the Sapphire Rapids HBM will be the way to go. If you need more memory, we’re going to keep the Ice Lake and Skylake nodes. And even though the Skylake CPUs are getting a little long in the tooth, they are 2.5 GHz, which is a pretty good clock rate, and 48 cores per node, so they’re still not terrible.”

You go to simulation war with the core army you have.
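Stanzione’s point about the realizable fraction of peak can be illustrated with the standard roofline model, which is our choice of illustration rather than anything TACC described: attainable flops are capped by either peak compute or arithmetic intensity times memory bandwidth. The sketch uses per-node figures. The roughly 6.8 teraflops peak assumes 32 FP64 flops per cycle per core at the 1.9 GHz base clock, the 1.2 TB/sec HBM2e bandwidth is the figure quoted above, and the DDR5 bandwidth is simply that number divided by four to match the “about 4X” ratio, not a measured value.

```python
# Roofline sketch: attainable flops = min(peak flops, arithmetic intensity x bandwidth).
PEAK_TFLOPS = 2 * 56 * 1.9e9 * 32 / 1e12  # ~6.81 TF per two-socket node (assumed 32 flops/cycle)
HBM_TBS = 1.2            # HBM2e bandwidth per node, as quoted in the article
DDR5_TBS = HBM_TBS / 4   # illustrative only: the article puts HBM at ~4X regular DDR5


def attainable_tflops(peak_tflops: float, bandwidth_tbs: float, intensity: float) -> float:
    """Roofline bound in TF for a kernel doing `intensity` flops per byte of memory traffic."""
    return min(peak_tflops, intensity * bandwidth_tbs)


# 2/24 flops per byte is a STREAM-triad-like kernel; larger values are more compute-bound.
for intensity in (2 / 24, 10.0, 20.0, 100.0):
    hbm = attainable_tflops(PEAK_TFLOPS, HBM_TBS, intensity)
    ddr5 = attainable_tflops(PEAK_TFLOPS, DDR5_TBS, intensity)
    print(f"{intensity:7.3f} flops/byte: HBM2e {hbm:5.2f} TF, DDR5 {ddr5:5.2f} TF, "
          f"speedup {hbm / ddr5:.2f}X")
```

In this model the HBM advantage is the full 4X for bandwidth-bound kernels, drops to about 2.3X and 1.1X as arithmetic intensity rises, and vanishes for compute-bound code. That is one way to read the 1.2X to 2X range TACC measured on real applications, which mix both kinds of work.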

That leaves the ten servers, each equipped with four Ponte Vecchio GPUs, which we presume have a pair of Sapphire Rapids HBM processors and Xe Link interconnects hooking the GPUs together in a shared memory system. If TACC is able to get the top-end 52 teraflops parts for these nodes, this partition of the machine would add up to about 2.1 petaflops all by itself. By our math, the Skylake partition has about 2.8 petaflops peak and the Ice Lake partition has about 1.1 petaflops peak, and the whole Stampede3 system, with 137,952 cores (counting 128 cores in each Ponte Vecchio GPU) and 330 TB of main memory, yields just under 10 petaflops of aggregate FP64 oomph.
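Here is a sketch of how the core count and aggregate peak add up. Two things are assumptions on our part: each GPU node is taken to carry two 56-core Sapphire Rapids HBM host CPUs, as presumed above, and the GPU partition’s peak counts only the GPUs. The Skylake and Ice Lake peaks are the approximate figures given in the text, not recomputed.

```python
# Tally of Stampede3 cores and peak FP64 petaflops by partition.
GPU_NODES = 10
GPUS_PER_NODE = 4
CORES_PER_GPU = 128               # the article counts 128 cores per Ponte Vecchio GPU
GPU_FP64_TFLOPS = 52              # top-end Ponte Vecchio part, if TACC gets it
HOST_CORES_PER_GPU_NODE = 2 * 56  # assumption: two 56-core Sapphire Rapids HBM CPUs per GPU node

cores_by_partition = {
    "Sapphire Rapids HBM": 560 * 2 * 56,
    "Skylake": 1_064 * 2 * 24,
    "Ice Lake": 224 * 2 * 40,
    "Ponte Vecchio": GPU_NODES * (GPUS_PER_NODE * CORES_PER_GPU + HOST_CORES_PER_GPU_NODE),
}
total_cores = sum(cores_by_partition.values())

gpu_pflops = GPU_NODES * GPUS_PER_NODE * GPU_FP64_TFLOPS / 1_000  # GPUs only, host CPUs excluded
peak_pflops = {
    "Sapphire Rapids HBM": 3.81,  # 560 nodes x 112 cores x 1.9 GHz x 32 flops/cycle (assumed)
    "Skylake": 2.8,               # approximate figure from the text
    "Ice Lake": 1.1,              # approximate figure from the text
    "Ponte Vecchio": gpu_pflops,
}

spr_share = cores_by_partition["Sapphire Rapids HBM"] / total_cores
print(f"Total cores: {total_cores:,} ({spr_share:.1%} in Sapphire Rapids HBM nodes)")
print(f"Aggregate peak: {sum(peak_pflops.values()):.2f} PF FP64")
```

With those assumptions the tally lands exactly on 137,952 cores, with 45.5 percent of them in the Sapphire Rapids HBM partition, and the peaks sum to about 9.8 petaflops, which squares with “just under 10 petaflops.”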

On the networking front, TACC is going to stick with Omni-Path. The new Sapphire Rapids HBM nodes and the Ponte Vecchio GPU nodes will be deployed using the forthcoming 400 Gb/sec kicker to Omni-Path from Cornelis Networks, which bought the Omni-Path business from Intel in July 2021 and which is skipping the 200 Gb/sec upgrade cycle to bring out this new 400 Gb/sec interconnect. The existing Skylake and Ice Lake nodes will stick with 100 Gb/sec Omni-Path.

In terms of storage, TACC has tapped all-flash, high performance storage maker Vast Data, which is a big win for that startup.

“We have a lot of aging disk on Stampede2, which has been a great file system, but over six years of being beaten every single day at max capacity, that scratch file system has taken some wear and tear running those 10.8 million jobs. So we’re going to Vast Data to try that as a scratch file system. We are going to hook it to the Frontera fabric and see if we can actually run it at the 8,000 to 10,000 clients looking towards the next system. We did a small-scale testbed with Vast Data and we really, really liked it. So this is our attempt to scale that up and break some dependence on things like Lustre and go all NVMe flash all the time.”

The Vast Data file system will weigh in at 13 PB and with data compression on, which is built in, it should yield about 20 PB of effective capacity. The storage system will deliver 50 GB/sec of write and 450 GB/sec of read bandwidth.
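The capacity and bandwidth figures imply a data reduction ratio and a read/write asymmetry that are easy to work out. This is simple arithmetic on the quoted numbers; the real compression ratio will depend on the data users actually store.

```python
CAPACITY_PB = 13.0    # quoted capacity before compression
EFFECTIVE_PB = 20.0   # expected effective capacity with built-in compression on
READ_GBS = 450        # aggregate read bandwidth, GB/sec
WRITE_GBS = 50        # aggregate write bandwidth, GB/sec

compression_ratio = EFFECTIVE_PB / CAPACITY_PB
read_write_ratio = READ_GBS / WRITE_GBS

print(f"Implied compression ratio: {compression_ratio:.2f}:1")
print(f"Read bandwidth is {read_write_ratio:.0f}X write bandwidth")
```

That is roughly a 1.54:1 reduction ratio, with reads running 9X faster than writes.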

That new Vast Data file system will be installed at the end of September, and Stanzione says that new racks will be delivered from Dell in October with a few hundred Sapphire Rapids HBM nodes going in during November and December. The goal is to have all the nodes in during Q1 2024, and TACC is hoping this can be done before January comes to a close and the full Stampede3 machine can be in production by March.

You wrote “capability” twice in the intro. Probably mean “capacity” for the latter.

Correct! Thanks for the catch.

Nothing like a Texas-style, raw-hide, hogtied, cowtipping, honky tonk rodeo, of HPC, to get back in the saddle again I say (to nearly quote the Cowslingers’ “Off the Wagon” album; though it appears they’ve never played “Austin City Limits”)!

Unfortunately, Stanzione didn’t seem to mention TACC’s delicious (Louie Mueller?) barbecued steered Longhorn (POWER9+V100, 2.3 PF, #376 in June) in this wild west tale of the corralling and lassoing of the Stampede beast and its storage bit barns … could the POWER10 giant squid axon SerDes sauce deliver an extra oomph of tasty flavor to this hardy Texan computational bovidae? Outrageously yummy if already under prep by HPC chefs!

Still, the herd of computational nodes suddenly taking-off in this specific SR+PV upgrade direction, for the computational cattle choreography that is Stampede3, is great news (flat-foot, pale-face, east-coast bioengineering protein folders use those TACC boxens from as far as Maryland, for example). But if Stampede2’s intensity was at 10.7 PF in June (at #56), I’d hope the upgrade would bring that up a bit (unless it’s too hot for that in TX right now, or I’m mis-reading — a lot lately…? –, or energy efficiency is the goal…).

I can’t speak to Stampede3’s eventual oomph (maybe an iso-perf upgrade) but feel compelled to express shock-and-horror at the notion that Texas Pete’s Original Hot Sauce is actually made in Winston-Salem, NC (strange coincidence with TNP Studio-Garage?)! I reckon Rustlin’ Rob’s Texas Gourmet Foods’ selection may be more genuine (Habanero Rodeo), or fiery (Vicious Viper)! 8^d

P.S. That Texas hold’em Liqid disaggregation and composability poker bluff experiment that goes by the name of TAMU’s ACES (Phase II, NSF-sponsored, SR+H100) looks intriguing too. I wonder how this cephalopodic cowboy gamble is paying off for ’em High Plains Comancheros of academic HPC.