Sponsored Feature: It’s a decade and a half since researchers dazzled the tech world by demonstrating that GPUs could be used to dramatically accelerate key AI operations.

That realization continues to grip the imagination of enterprises. IDC has reported that when it comes to infrastructure, GPU-accelerated compute and HPC-like scale-up are among the top considerations for tech leaders and architects looking to build out their AI infrastructure.

But for all the organizations which have successfully applied AI to real world problems, many more struggle to get beyond the experimentation or pilot stage. IDC research in 2021 found that less than a third of respondents had moved their AI projects into production, and just a third of those had reached a “mature stage of production”.

Cited hurdles include problems with the processing and preparation of data and beefing up infrastructure to support AI at enterprise scale. Enterprises needed to invest in “purpose-built and right-sized infrastructure,” IDC said.

What’s The AI Problem Here?

So where are those organizations going wrong with AI? One factor might be that tech leaders and AI specialists are failing to take a holistic look at the broader AI pipeline while paying too much attention to GPUs compared to other compute engines, notably the venerable CPU.

Because ultimately, it’s not a question of backing CPUs versus GPUs versus ASICs. Rather, it’s about finding the optimal way to construct an AI pipeline that can get you from ideas and data and model building to deployment and inference. And that means appreciating the respective strengths of different processor architectures, so that you can apply the right compute engine at the right time.

As Intel’s senior director, Datacenter AI Strategy and Execution, Shardul Brahmbhatt explains, “The CPU has been used for microservices and traditional compute instances in the cloud. And GPUs have been used for parallel compute, like media streaming, gaming, and for AI workloads.”

So as hyperscalers and other cloud players have turned their attention to AI, it’s become clear that they are leveraging these same strengths for different tasks.

The parallel compute capabilities of GPUs make them highly suitable for training AI algorithms, for example. Meanwhile, CPUs have an edge when it comes to low batch, low latency real time inference, and using those algorithms to analyze live data and deliver results and predictions.

Again, there are caveats. As Brahmbhatt explains, “There are places where you want to do more batch inference. And that batch inference is also something that is being done through GPUs or ASICs.”

Looking Down The Pipeline

But the AI pipeline extends beyond training and inference. At the left side of the pipeline, data has to be preprocessed, and algorithms developed. The generalist CPU has a significant role to play here.

In fact, GPUs account for a relatively small proportion of total processor activity across the AI pipeline, with CPU-powered “data stage” workloads accounting for two thirds overall, according to Intel (see its Solution Brief, Optimize Inference with Intel CPU Technology).

And Brahmbhatt reminds us that the CPU architecture has other advantages, including programmability.

“Because CPUs have been used so broadly, there is already an existing ecosystem of developers and applications available, plus tools that deliver ease of use and programmability for general purpose compute,” he says.

“Second, CPUs provide faster access to the larger memory space. And then the third thing is it’s more unstructured compute versus GPUs, which are more parallel compute. For these reasons, CPUs operate as the data movers which feed the GPUs, thereby helping with recommender system models as well as evolving workloads like graph neural networks.”

An Open Plan For AI Development

So how should we view the roles of CPUs and GPUs respectively when planning an AI development pipeline, whether on-prem, in the cloud, or straddling both?

GPUs revolutionized AI development because they offered a way of accelerating it by offloading operations from the CPU. But it doesn’t follow that offloading is the most sensible option for a given job.

As Intel platform architect Sharath Raghava explains, “AI applications have vectorized computations. Vector computations are parallelizable. To run AI workload efficiently, one could exploit CPUs and GPUs capabilities considering the size of the vector computations, offload latency, parallelizability, and many other factors.” But, he continues, for a “smaller” task, the “cost” of offloading will be excessive, and it may not make sense to run it on a GPU or accelerator.
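The trade-off Raghava describes can be illustrated with a rough cost model. What follows is a minimal sketch, not Intel's method: the `Device` fields, the `estimated_seconds` formula, and every throughput, latency, and bandwidth figure are hypothetical values chosen only to show how a fixed offload cost can outweigh faster compute on a small task.

```python
from dataclasses import dataclass


@dataclass
class Device:
    """Hypothetical device description; all figures are illustrative."""
    name: str
    flops_per_sec: float           # assumed sustained compute throughput
    offload_latency_s: float       # assumed fixed cost of dispatching work
    transfer_bytes_per_sec: float  # assumed link bandwidth (inf for the host)


def estimated_seconds(device: Device, flops: float, bytes_moved: float) -> float:
    """Fixed offload cost + data transfer time + compute time."""
    transfer = bytes_moved / device.transfer_bytes_per_sec
    compute = flops / device.flops_per_sec
    return device.offload_latency_s + transfer + compute


def pick_device(devices, flops: float, bytes_moved: float) -> Device:
    """Choose the device with the lowest estimated completion time."""
    return min(devices, key=lambda d: estimated_seconds(d, flops, bytes_moved))


# Made-up numbers: the host CPU needs no transfer, the accelerator is
# 100x faster at compute but pays a launch and data-copy cost.
CPU = Device("cpu", flops_per_sec=1e11, offload_latency_s=0.0,
             transfer_bytes_per_sec=float("inf"))
GPU = Device("gpu", flops_per_sec=1e13, offload_latency_s=50e-6,
             transfer_bytes_per_sec=1e10)

small_job = pick_device([CPU, GPU], flops=1e5, bytes_moved=4e3)
large_job = pick_device([CPU, GPU], flops=1e12, bytes_moved=4e8)
print(small_job.name, large_job.name)
```

Under these assumed figures the small job stays on the CPU because the launch cost alone exceeds its compute time, while the large job amortizes the offload cost and goes to the accelerator. Real decisions would also weigh the "many other factors" the quote mentions.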

CPUs can also benefit from closer integration with other system components that allow them to complete the AI job more quickly. Getting maximum value from AI deployments involves more than running just the models themselves. The insight sought depends on efficient preprocessing, inference, and postprocessing operations. Preprocessing requires data to be prepared to match the input expectations of the trained model before it is fed in for inference. The useful information is then extracted from the inference results at the postprocessing stage.

If we think about a data center intrusion detection system (IDS), for example, it is important to act on the output of the model in a timely manner to prevent damage from a cyber attack. And typically, preprocessing and postprocessing steps are more efficient when they are carried out on the host system CPUs because they are more closely integrated with the rest of the architectural ecosystem.
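The three stages can be sketched for an IDS-style example as follows. This is a toy illustration under stated assumptions: the logistic scoring function stands in for a trained model, and the function names, feature values, weights, and the 0.5 threshold are all hypothetical.

```python
import math


def preprocess(record, means, scales):
    """Scale raw features to match what the model saw in training."""
    return [(x - m) / s for x, m, s in zip(record, means, scales)]


def infer(features, weights, bias):
    """Stand-in model (assumption): logistic regression returning a score."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def postprocess(score, threshold=0.5):
    """Turn the raw score into an action the rest of the system can use."""
    return {"score": score, "action": "block" if score >= threshold else "allow"}


def run_pipeline(record, params):
    features = preprocess(record, params["means"], params["scales"])
    score = infer(features, params["weights"], params["bias"])
    return postprocess(score, params["threshold"])


# Hypothetical parameters for two traffic features.
PARAMS = {
    "means": [100.0, 10.0],
    "scales": [50.0, 5.0],
    "weights": [2.0, 2.0],
    "bias": 0.0,
    "threshold": 0.5,
}

suspicious = run_pipeline([300.0, 30.0], PARAMS)
benign = run_pipeline([20.0, 2.0], PARAMS)
print(suspicious["action"], benign["action"])
```

Only `infer` is a candidate for offload here. The scaling and thresholding steps are small and sit next to the data source and the system that acts on the result, which is the integration argument made above.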

Performance Boost Under Starter’s Orders

So, does that mean forgoing the benefits of GPU acceleration altogether? Not necessarily. Intel has been building AI acceleration into its Xeon Scalable CPUs for some years. The range already includes Deep Learning Boost (DL Boost) for high performance inferencing on deep learning models, with Intel’s Advanced Vector Extensions 512 (AVX-512) and Vector Neural Network Instructions (VNNI) speeding up INT8 inferencing performance. DL Boost also uses the brain floating point format (BF16) to boost performance on training workloads that do not require high levels of precision.
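To make the reduced-precision formats concrete, here is a minimal pure-Python sketch of the arithmetic involved. It does not use or model Intel's instructions: `quantize_int8`, `int8_dot`, and `to_bf16` are illustrative helpers, the quantization scheme (symmetric, per-tensor) is an assumption, and the BF16 conversion truncates rather than rounds for simplicity.

```python
import struct


def quantize_int8(values):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    return [round(v / scale) for v in values], scale


def int8_dot(a, b):
    """Dot product on quantized integers, rescaled to a float at the end."""
    qa, scale_a = quantize_int8(a)
    qb, scale_b = quantize_int8(b)
    # Integer multiply-accumulate; hardware INT8 paths typically keep
    # this running sum in a wider (32-bit) accumulator.
    acc = sum(x * y for x, y in zip(qa, qb))
    return acc * scale_a * scale_b


def to_bf16(x):
    """Keep only the top 16 bits of a float32 (sign, exponent, 7 mantissa bits).

    Simplification: this truncates; real conversions usually round.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFF0000))[0]


a = [0.5, -1.0, 0.25]
b = [1.0, 2.0, -4.0]
exact = sum(x * y for x, y in zip(a, b))
approx = int8_dot(a, b)
print(exact, approx, to_bf16(3.14159))
```

The INT8 result lands close to the exact dot product but not on it, and the BF16 value keeps float32's exponent range while dropping mantissa precision. That is the trade these formats make: less precision per value in exchange for more values processed per instruction.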

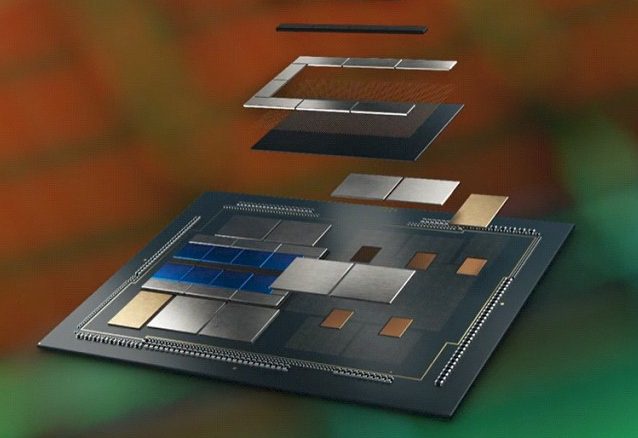

Intel’s upcoming fourth generation Xeon Scalable CPUs will add Advanced Matrix Extensions, or AMX. According to Intel’s calculations, this will give a further eightfold boost over the AVX-512 VNNI x86 extensions implemented in earlier processors, and allow the 4th Generation Intel Xeon Scalable processors to “handle training workloads and DL algorithms like a GPU does”. But those same accelerators can also be applied to general CPU compute for AI and non-AI workloads.

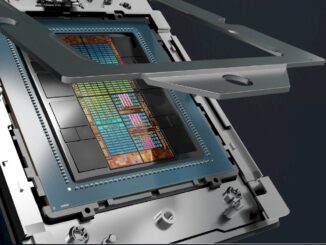

That doesn’t mean Intel expects AI pipelines to be x86 from start to finish. When it makes more sense to fully offload training workloads that will benefit from parallelization, Intel offers its Habana Gaudi AI Training Processor. Gaudi powers Amazon EC2 DL1 instances, which benchmark tests suggest can deliver up to 40 percent better price-performance than comparable Nvidia GPU-based training instances also hosted in the cloud.

At the same time, Intel’s Data Center GPU Flex Series is geared towards workloads and operations that benefit from parallelization, such as batch inference, with different implementations pitched at “lighter” and more complex AI models. Another Intel Data Center GPU, code-named “Ponte Vecchio” and now sold under the Max Series brand, will shortly begin powering the “Aurora” supercomputer at the Argonne National Laboratory. It is targeted primarily at HPC and AI training, and includes support for Xe Link for scale-up and scale-out.

Can We Go End To End?

Potentially, then, Intel’s silicon can underpin the entire AI pipeline, while minimizing the need to offload data between different compute engines unnecessarily. The company’s processors – whether GPU or CPU – also support a common software model based on open-source tooling and frameworks with Intel optimizations through its oneAPI development environment.

Brahmbhatt cites Intel’s heritage in building an x86 software ecosystem based on community and open source as another advantage. “The philosophy that Intel has is ‘let the ecosystem drive the adoption.’ And we need to ensure that we are fair and open to the ecosystem, and we provide any of our secret sauce back to the ecosystem.”

“We are using a common software stack, to basically make sure that developers don’t have to worry about the underlying differentiation of IP between CPU and GPU for AI.”

This combination of a common software stack and a focus on using the right compute engine for the right task is even more important in the enterprise. Businesses are relying on AI to help them solve some of their most pressing problems, whether those workloads reside in the cloud or on-prem. But mixed workloads require full-featured software, as well as maintenance and management of the system stack, to run the code not included in the kernel that sits on the accelerator.

So, when it comes to the question “how do we get AI to enterprise scale?”, the answer might depend on taking a look at the bigger picture and making sure you use the full complement of hardware and software kit at your disposal.

Sponsored by Intel.
