Machine learning techniques based on massive amounts of data and GPU accelerators to chew through it are now almost a decade old. They were originally deployed by the hyperscalers of the world, who had lots of data and a need to use it to provide better search engine or recommendation engine results. But now machine learning is going mainstream, and that means it is an entirely different game.

The enterprise and government organizations of the world do not have infinite amounts of money to spend on infrastructure. They need to figure out how they are going to use AI techniques in their applications and what kind of infrastructure they need to support it, and then find the budget for this new thing when they already have a lot of other things to do.

Despite all of these issues, AI is going mainstream, and Inspur, which is now the number three maker of servers in the world behind Dell and Hewlett Packard Enterprise, turns out to be the largest shipper of Nvidia GPUs. As such, it brings some unique insight to the market and also brings its engineering to bear to create unique servers aimed at both machine learning training and machine learning inference workloads.

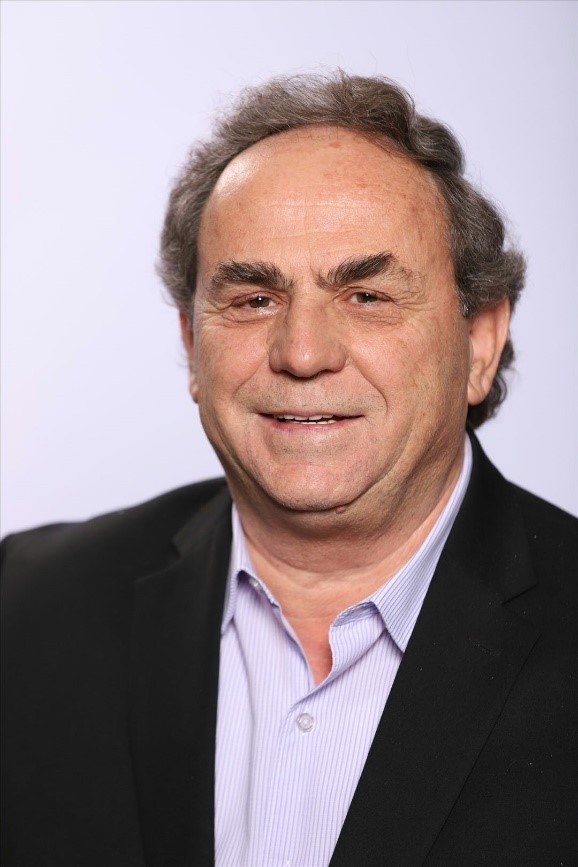

To find out more about how Inspur sees the AI market growing and changing, we sat down with Vangel Bojaxhi, global AI and HPC director for Inspur Information, which focuses on IT infrastructure solutions. Bojaxhi has been at Inspur Information for the past six years, and before that he was the principal IT solution architect for Schlumberger/WesternGeco, a well-known provider of seismic services and software for the oil and gas industry. As an IT architect, he was naturally interested in applying AI techniques to seismic and reservoir modeling HPC applications.

Inspur delivered its first X86 server for small businesses in China in 1993, and it has grown steadily since that time. In 2010, the company started its Joint Design Manufacturing (JDM) approach, as the hyperscalers, cloud builders, and other service providers were rising in its home market and as Inspur was expanding into other geographies. This service gave very large customers a collaborative experience mixing the best parts of a high volume OEM business with the custom design options of an ODM.

Inspur is a member of the Open Compute Project started by Facebook, and the OpenPower Foundation started by IBM and Google. It is also a member of OpenStack, the cloud controller software project, and of the Open19 group started by LinkedIn that is driving OCP technologies into standard 19-inch server racks. In addition, it belongs to the Open Data Center Committee (ODCC), a consortium working on common rack and datacenter designs that was jointly established by the original Tianzhu project members, including Alibaba, Baidu, and Tencent, along with Intel, shortly after OCP was founded.

So just how much of Inspur’s business these days is being driven by AI? Quite a bit.

“At this time, we can say that AI servers comprise about 20 percent of the overall X86 server shipment share in the company, and we anticipate that over the next five years that will gradually increase to more than 30 percent,” Bojaxhi tells The Next Platform. “We consider that AI will be the core of every Inspur system and is the catalyst for digital transformation in every industry. And for the past four years, we have focused on four pillars – continuous innovation in AI computing power, deep learning frameworks, AI algorithms, and application optimization – to propel Inspur to become a leader in AI. And as a result, the AI server line has become one of the fastest growing business segments in the company’s history.”

We know that Inspur and its rivals in the server industry have broad and deep portfolios, and Inspur is the only company among the OEMs and ODMs that sells servers based on X86 processors from Intel and AMD as well as Power processors from IBM, which are unique in that the Power9 chip has native NVLink ports to tightly couple the CPUs to Nvidia “Volta” and “Ampere” GPU accelerators. It is always interesting to see what vendors are offering, but it is enlightening to know what customers are actually doing to support AI training and AI inference workloads out there in the real world.

“While we sell some smaller configurations with two or four GPUs, customers are increasingly deploying servers with eight GPUs or more for AI training workloads,” Bojaxhi explains. “And that is because data volumes are exploding and the complexity of the models is growing exponentially, which puts tremendous performance increase pressure on systems. This is why Inspur designed servers like the NF5488A5, which is powered by eight Nvidia ‘Ampere’ A100 GPUs, two AMD ‘Rome’ Epyc 7002 processors, and the NVSwitch interconnect, and which has broken many AI benchmarks and attracted the interest of many of our customers. That said, Inspur is not losing sight of the growing demand for AI inferencing. The analysts that we work with believe that inference accounted for 43 percent of all AI computing power in 2020, and inference is expected to exceed training – meaning be above 51 percent – by 2024.”

We think that in the long run, the compute driving AI inference could be 2X or 3X or even more Xs larger than that of AI training, but it is hard to put a number on that because we don’t know how much computing will be done at the edge and how much of that edge computing will be AI inference. Bojaxhi agrees that there is potential for inference to dwarf the AI processing capacity back in the datacenter that is used for AI training. And Inspur can spearhead the design of a machine through its JDM approach with hyperscalers, cloud builders, and other large service providers and then use that design as a foundation for the commercial lineup that it sells to other large enterprises or even, eventually, small and medium businesses that may start out training AI models in the cloud, but roll out production AI clusters on premises. And, ironically, without even knowing it, customers could start out with Inspur systems running on the cloud (because Inspur sells a lot of machines to Alibaba, Baidu, and Tencent) and end up on Inspur systems running in their own datacenters.

Three other popular AI training servers are the NF5468A5, the NF5468M6, and the NF5280M6. The NF5468A5 has two AMD “Milan” Epyc 7003 processors and eight Nvidia “Ampere” A30, A40, or A100 GPU accelerators directly linked to the processors. The NF5468M6 has a pair of Intel’s “Ice Lake” Xeon SP processors and a PCI-Express 4.0 switch in the system that supports multiple interconnect topologies between the CPUs and GPUs. Its 4U server chassis supports eight Nvidia A30 or A100 accelerators, twenty Nvidia A10 accelerators, or twenty Nvidia T4 accelerators. The NF5280M6 has a pair of Ice Lake Xeon SP processors in a 2U form factor plus four Nvidia A10, A30, A40, or A100 accelerators or eight Nvidia T4 accelerators.

No matter what, Inspur can sell devices at the edge and in the datacenter, for either training or inference, and based on CPUs, GPUs, or FPGAs as necessary. The company can modify these systems to include FPGAs or custom ASICs that support PCI-Express links back to the processors.

Which brings us to the final point: The distribution of compute to support AI inference workloads.

It is common knowledge that most of the inference in the world is done on X86 servers, but there is a trend towards moving some of the inference workloads off CPUs and onto accelerators. Bojaxhi says that in 2020, among Inspur’s own customers that decided to offload inference from the CPUs to get better price/performance and overall performance, around 70 percent went with Nvidia’s T4 accelerators, which are based on the “Turing” TU104 GPUs and which have advantages for inference over the beefier GPUs designed to run AI training and HPC simulation and modeling workloads. The remaining accelerated inference is split 22 percent for FPGAs and 8 percent for custom ASICs. Looking ahead to 2024, the projections suggest that GPUs will drop a bit to 69 percent of inference compute, and FPGAs will drop to 7 percent while ASICs will rise to 16 percent.
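For readers who want to see the shift at a glance, the accelerated inference shares quoted above can be tabulated in a few lines of Python. This is only a sketch built from the percentages in the text; the names `shares`, `summarize`, and `change` are made up for illustration and do not come from Inspur. Taken at face value, the 2024 figures as quoted add up to 92 percent, so the sketch reports the remainder as unattributed rather than guessing where it belongs.

```python
# Accelerated-inference shares as quoted in the article, in percent.
# The dictionary layout and function names are illustrative assumptions.
shares = {
    2020: {"GPU": 70, "FPGA": 22, "ASIC": 8},
    2024: {"GPU": 69, "FPGA": 7, "ASIC": 16},
}


def summarize(year_shares):
    """Return (sum of quoted shares, points not attributed to any category)."""
    total = sum(year_shares.values())
    return total, 100 - total


def change(before, after):
    """Return the percentage-point change per accelerator type."""
    return {kind: after[kind] - before[kind] for kind in before}


if __name__ == "__main__":
    for year, year_shares in shares.items():
        total, unattributed = summarize(year_shares)
        print(year, year_shares, "total:", total, "unattributed:", unattributed)
    print("change 2020 to 2024:", change(shares[2020], shares[2024]))
```

The point-change view makes the story in the paragraph concrete: GPUs are roughly flat, while the quoted FPGA share shrinks and the ASIC share doubles.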

Commissioned by Inspur
