Creating the Tesla GPU compute platform has taken Nvidia the better part of a decade and a half, and it has culminated in a software stack composed of various HPC and AI frameworks, the CUDA parallel programming environment, compilers from Nvidia’s PGI division and their OpenACC extensions as well as open source GCC compilers, and various other tools that together account for tens of millions of lines of code and tens of thousands of individual APIs. This GPU compute software stack is as complex as an operating system – and now it is coming to Arm.

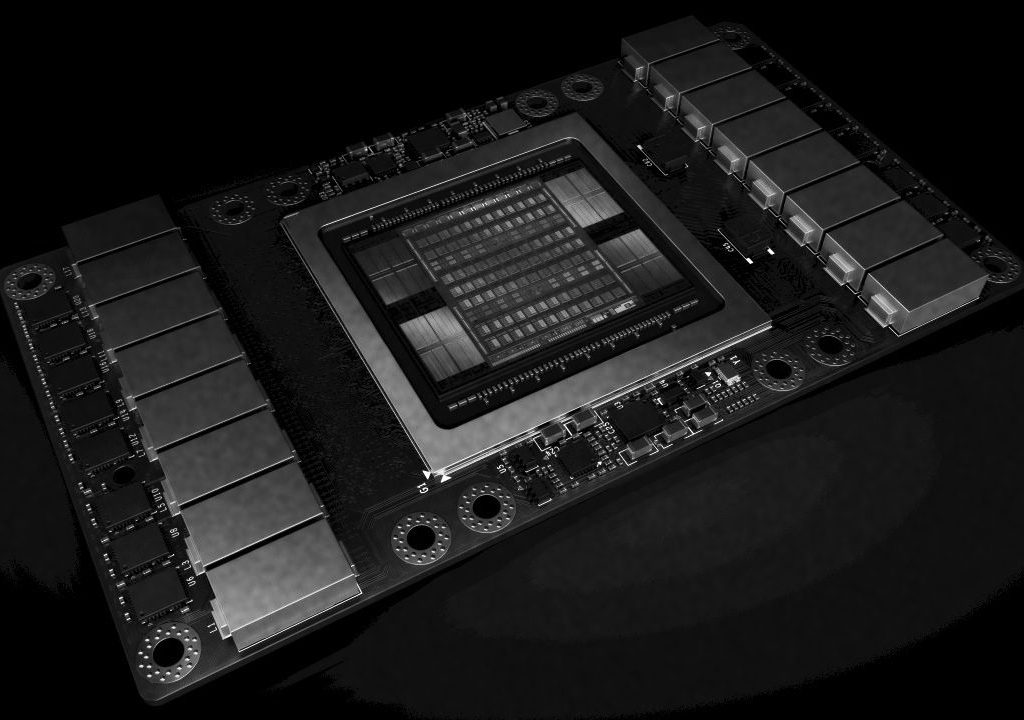

With this move, Nvidia will make Arm processors a peer to the X86 processors that were originally used as hosts for its GPU compute stack and to the Power processors that were added later in the game, when Nvidia teamed with IBM and Mellanox Technologies (which is in the process of being acquired by Nvidia for $6.9 billion) to take down a few pre-exascale system deals with the US Department of Energy. Technically, IBM was the prime contractor for the “Summit” and “Sierra” supercomputers, but the vast majority of the compute is being done by the Tesla V100 accelerators, not IBM’s Power9 processors, which by the way have very respectable floating point performance for a CPU.

Arm has been gaining traction in a number of HPC centers around the world, so a move by Nvidia to embrace this processor architecture as a peer to X86 and Power is not a surprise, and neither is the timing, for a number of reasons. Notably, the Post-K “Fugaku” exascale system at the RIKEN center in Japan, due in 2021, is based on a custom 48-core Arm processor, called the A64FX, that will sport the 512-bit Scalable Vector Extensions (SVE) that Japanese server maker Fujitsu and Arm Holdings created together. Hewlett Packard Enterprise has already built the “Astra” system at Sandia National Laboratories in the United States, which is based on Marvell’s 28-core “Vulcan” ThunderX2 processors, which do not support such massive vector processing capability. This is the one Arm-based supercomputer on the June 2019 Top500 list, with 138,096 cores that deliver 2.21 petaflops of peak theoretical performance and 1.76 petaflops of sustained performance without the help of any type of accelerator. The “Isambard” system being built by Cray – which is in the process of being acquired by HPE for $1.3 billion – for the University of Bristol in the United Kingdom has over 10,000 cores based on the ThunderX2 processors linked by Cray’s “Aries” XC interconnect.
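To put the Astra numbers quoted above in perspective, here is a minimal back-of-the-envelope sketch in Python. It uses only the three figures cited for the machine (core count, peak petaflops, sustained petaflops); the function names are our own, chosen for illustration, and the results are approximate because the inputs are rounded.

```python
# Figures quoted above for the Astra system on the June 2019 Top500 list.
CORES = 138_096
PEAK_PFLOPS = 2.21        # peak theoretical performance
SUSTAINED_PFLOPS = 1.76   # sustained performance

def efficiency(sustained_pflops: float, peak_pflops: float) -> float:
    """Fraction of theoretical peak that is actually sustained."""
    return sustained_pflops / peak_pflops

def peak_gflops_per_core(peak_pflops: float, cores: int) -> float:
    """Peak gigaflops per core (1 petaflops = 1e6 gigaflops)."""
    return peak_pflops * 1e6 / cores

if __name__ == "__main__":
    eff = efficiency(SUSTAINED_PFLOPS, PEAK_PFLOPS)
    per_core = peak_gflops_per_core(PEAK_PFLOPS, CORES)
    print(f"Computational efficiency: {eff:.1%}")
    print(f"Peak per core: {per_core:.1f} gigaflops")
```

By this rough math, Astra sustains a bit under 80 percent of its theoretical peak, at roughly 16 gigaflops of peak per core, all of it coming from the CPUs alone.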

The funny thing about those three machines, which are at the vanguard of Arm in HPC, is that they do not have GPU acceleration. Nvidia wants to fix that, particularly with AMD getting credible Epyc CPUs and Radeon Instinct GPU accelerators out the door this year, and especially with Cray and AMD winning the “Frontier” CORAL-2 bid at Oak Ridge National Laboratory, which will be for a 1.5 exaflops system to be installed in late 2021 and operational in 2022. The Frontier system will be based on custom CPUs and GPUs from AMD linked by Cray’s “Slingshot” interconnect. Moreover, Intel is prepping its Xe discrete GPUs to be paired with future Xeon CPUs in the “Aurora” exascale system at Argonne National Laboratory, which is scheduled for delivery in 2021 if all goes well. The European exascale effort will include an Arm processor, as we recently reported, but it will be using a custom vector engine based on the RISC-V instruction set as well as a custom FPGA. There are no GPUs in this system because there are no European makers of GPUs. Nvidia did not get into these three big deals, although it did get a piece of the “Perlmutter” pre-exascale NERSC-9 system at Lawrence Berkeley National Laboratory, which pairs a future AMD Epyc processor with a future Nvidia Tesla GPU accelerator.

IBM has done all that it can to help push the idea of accelerated computing, including adding NVLink ports to its Power8+ and Power9 processors to bring memory coherency across the CPU-GPU complex, and that has helped Nvidia to a certain degree in the upper echelons of HPC. But there is no way that Intel or AMD is ever going to add NVLink ports to its processors, and that creates something of a problem for Nvidia, which knows this because it uses Xeon processors inside of its DGX-1 and DGX-2 supercomputer nodes. That leaves Arm, which is an architecture that, as it turns out, Nvidia knows really well.

Nvidia has been using Arm-based processors in its Tegra family of ceepie-geepie systems for embedded devices and gaming systems for a long time. With much fanfare back in January 2011, Nvidia launched its “Denver” Arm server chip effort, which was not to develop a discrete CPU but rather to put Arm server cores on the GPU chips themselves, obviating the need for host processors. While that Denver effort was still underway, Nvidia announced at the International Supercomputing Conference in Germany back in 2013 that the compilers for its CUDA 5.5 environment would have native compilation on 32-bit and 64-bit Arm processors, rivaling the support for X86 architectures from Intel and AMD and predating support for the Power platform, by the way.

The announcement that Nvidia is making at ISC 2019 this week is much broader and deeper than that initial foray into Arm way back when. It goes way beyond the CUDA-X HPC and AI stack, which has over 40 libraries for GPU acceleration in it tuned for these numerically intensive workloads, to the entire GPU compute stack from Nvidia.

“It is the right time for us to announce support,” Ian Buck, vice president and general manager of accelerated computing at Nvidia, tells The Next Platform. “We are most of the way through it, and it has taken us two years to bring all this up and we expect to release the first version of the full stack by the end of this year.”

Nvidia is not being specific about how the players in the Arm server space plan to leverage this technology. Arm Holdings, with its Neoverse server chip designs, is an obvious partner. Just like Arm licensees buy PCI-Express controller and memory controller circuit blocks from Cadence Design Systems when they also license Arm server chip intellectual property (or create their own), Nvidia and Arm could strike up a partnership to make NVLink IP blocks available to those who buy Neoverse licenses, allowing for tighter coupling with GPUs, including memory atomics and memory coherency across the CPU-GPU compute complexes. Marvell, with the future “Triton” ThunderX3 processor, and Ampere, with its future eMAG chips (based on the X-Gene chip designs it acquired from Applied Micro), could play along as well. As far as we know, Ampere will be embracing the CCIX coherent interconnect being pushed by Xilinx, Arm, and AMD, among others, and is not particularly interested in NVLink – at least not yet. We have no idea what Marvell plans in terms of coherency protocols for the Triton chip. It is not clear what intentions the HiSilicon division of Chinese chip maker and IT manufacturer Huawei Technologies has for GPU acceleration, either.

“NVLink integration is definitely one of the opportunities here, along with many others,” explains Buck. “Arm has a history of energy efficiency, which comes from its heritage in the mobile space, and it has investments in Linux kernels. The beauty of Arm is that it is open. You can add all sorts of interesting technologies that will be beneficial to supercomputing, such as being tightly integrated. By marrying an Arm CPU with a Tesla GPU, we can do a lot of the lifting of the heavy computation that is needed for HPC simulation and AI, and Arm can do a CPU with fast single threads.”

While Nvidia is making Arm a peer to X86 and Power, it is important to realize that being a peer does not mean being crowned king, and we suspect that more than a few people will misinterpret this announcement to mean that Nvidia is anointing Arm as its CPU of choice. This is wrong. Nvidia is just providing a choice of CPUs, and that is not the same thing. Now, if Nvidia actually blows the dust off Project Denver and creates Arm cores to embed in GPU packages, obviating the need for host processors entirely ... that will be a different story indeed.