A high-end supercomputer consumes nearly as much energy as a small city, which creates significant budgetary concerns for organizations that deploy and manage these systems.

This challenge is particularly serious for those seeking to push the boundaries of performance. Even with a projected ten-fold improvement in energy efficiency versus today’s top systems, the annual power bill for a future exascale system could easily exceed $20 million, according to a report from the US Department of Energy. Not many organizations would be willing, or able, to take on such costs.

Many in the HPC community are working to address this challenge by increasing hardware efficiency at various levels of the solution stack, from silicon to systems to data centers. Many others are focusing on software advances to support new hardware innovations and to make more efficient use of available resources.

A prime example of this second approach is the READEX project, which is developing an automated toolset for dynamically tuning HPC hardware, system software, and applications. This project is backed by the European Union’s €80 billion Horizon 2020 program and is driven by a consortium of five major European universities, along with Intel and a leading European supplier of HPC-driven engineering solutions. Together, these organizations bring long experience and world-class expertise to the task of optimizing hardware and software in large-scale HPC environments.

READEX, which is short for Runtime Exploitation of Application Dynamism for Energy-Efficient Exascale Computing, brings together tools and strategies that have been used effectively in two very different computing domains: the energy-constrained and hardware-constrained world of embedded systems and the performance-hungry world of HPC. Despite continual improvements in component technologies, developers in both worlds have faced significant challenges as they seek to deliver acceptable performance for increasingly sophisticated applications. Given their different goals and constraints, it is not surprising they have come up with different strategies.

Optimization For Embedded: Systems Scenario Methodology

Best practices for embedded systems developers include profiling and inspecting code during design time to detect specific runtime situations (RTSs) and determine optimized configurations for each one. For example, an application might toggle between compute-intensive and data-intensive cycles. By dynamically lowering the voltages and frequencies of processor cores during data-intensive periods, development teams can reduce power consumption without reducing overall performance.
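To make the reasoning concrete, here is a minimal sketch of why lowering the clock helps in data-intensive phases. It is a toy model, not anything taken from READEX or an embedded toolchain: the `Phase` type, the 100 W nominal power, the 30 percent static share, and the assumption that dynamic power scales with the cube of frequency are all illustrative.

```python
from dataclasses import dataclass


@dataclass
class Phase:
    name: str
    compute_seconds: float  # work that scales with core clock, measured at nominal frequency
    memory_seconds: float   # time stalled on memory, assumed independent of core clock


def run_phase(phase, freq_ratio, nominal_power_w=100.0, static_fraction=0.3):
    """Return (runtime in s, energy in J) at freq_ratio times the nominal frequency."""
    # Only the compute part stretches when the clock is lowered (assumption).
    runtime = phase.compute_seconds / freq_ratio + phase.memory_seconds
    # Static power is constant; dynamic power is assumed to scale with f^3.
    static_power = nominal_power_w * static_fraction
    dynamic_power = nominal_power_w * (1.0 - static_fraction) * freq_ratio ** 3
    return runtime, (static_power + dynamic_power) * runtime


# Hypothetical phases of one application.
data_phase = Phase("data-intensive", compute_seconds=1.0, memory_seconds=9.0)
compute_phase = Phase("compute-intensive", compute_seconds=9.0, memory_seconds=1.0)

for phase in (data_phase, compute_phase):
    t_nominal, e_nominal = run_phase(phase, 1.0)
    t_scaled, e_scaled = run_phase(phase, 0.7)
    print(f"{phase.name}: runtime x{t_scaled / t_nominal:.2f}, "
          f"energy x{e_scaled / e_nominal:.2f}")
```

Under these assumed numbers, the data-intensive phase slows by only a few percent at the lower clock while its energy drops substantially, whereas the compute-intensive phase pays a much larger runtime penalty. That asymmetry is what makes per-phase tuning attractive.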

Embedded system developers also determine identifiers that indicate an upcoming RTS and develop low-overhead switching mechanisms. These mechanisms are triggered when real-time algorithms determine that the benefits of reconfiguration outweigh the costs. Employing these identifiers and switching mechanisms can reduce energy consumption by as much as 30 percent in production applications. However, this is a very labor-intensive approach and requires a high level of hardware and software expertise.
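The cost-benefit test behind such a switching mechanism can be sketched in a few lines. This is an illustrative rule only, not the algorithm used by any particular embedded framework; the function name, its parameters, and the example figures are hypothetical.

```python
def should_switch(current_power_w, target_power_w, expected_duration_s, switch_cost_j):
    """Reconfigure only if the energy saved over the upcoming RTS exceeds the switching cost.

    current_power_w / target_power_w: estimated power draw in the current and
    candidate configurations (assumed known from design-time profiling).
    expected_duration_s: predicted length of the upcoming runtime situation.
    switch_cost_j: energy spent performing the reconfiguration itself.
    """
    expected_saving_j = (current_power_w - target_power_w) * expected_duration_s
    return expected_saving_j > switch_cost_j


# A long data-intensive period justifies the switch; a very short one does not.
print(should_switch(100.0, 60.0, expected_duration_s=0.5, switch_cost_j=5.0))
print(should_switch(100.0, 60.0, expected_duration_s=0.05, switch_cost_j=5.0))
```

A production mechanism would also have to account for the latency of the switch and for the risk of mispredicting the upcoming situation, both of which this sketch ignores.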

Optimization For HPC: Static Auto-Tuning

HPC developers have taken a different approach. They have developed software tools to collect and present information that allows users and developers to tune systems and applications.[i] An important addition to this toolset is the Periscope Tuning Framework (PTF), which automatically finds optimized system configurations based on collected information. However, in contrast to the embedded systems approach, PTF defines a static configuration based on the average resource consumption of an application. This automated but static approach has demonstrated improvements in energy efficiency of up to about 10 percent.
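The difference between a static configuration and a per-region one can be illustrated with a small table of measurements. The sketch below does not use PTF; the region names, frequencies, and energy values are invented for illustration, and the cost of switching between configurations is ignored.

```python
# Hypothetical energy measurements in joules: region -> {core frequency in GHz -> energy}.
ENERGY_J = {
    "assemble": {1.2: 90.0, 1.8: 70.0, 2.4: 65.0},
    "solve": {1.2: 200.0, 1.8: 150.0, 2.4: 130.0},
    "exchange": {1.2: 30.0, 1.8: 45.0, 2.4: 60.0},
}


def best_static(energy):
    """Pick the single frequency that minimizes total energy over the whole run."""
    frequencies = next(iter(energy.values())).keys()
    return min(frequencies, key=lambda f: sum(costs[f] for costs in energy.values()))


def best_dynamic(energy):
    """Pick the lowest-energy frequency separately for every region."""
    return {region: min(costs, key=costs.get) for region, costs in energy.items()}


static_freq = best_static(ENERGY_J)
static_total = sum(costs[static_freq] for costs in ENERGY_J.values())

dynamic_choice = best_dynamic(ENERGY_J)
dynamic_total = sum(ENERGY_J[region][freq] for region, freq in dynamic_choice.items())

print(f"static: {static_freq} GHz everywhere, {static_total:.0f} J")
print(f"dynamic: {dynamic_choice}, {dynamic_total:.0f} J")
```

With these made-up numbers, the static search settles on the highest frequency because two of the three regions favor it, while the per-region choice lowers the clock for the communication-heavy region and uses less energy overall.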

READEX: Bringing It All Together

READEX combines and extends the two approaches to provide a highly automated process for analyzing and dynamically tuning HPC systems and applications. It is a combined design time/runtime approach that takes advantage of application dynamism to increase energy savings.

Using READEX during application development (design time) is a relatively simple, three-step process:

- A software tool called Score-P is used to insert probe-functions into application code, so that data can be collected during runtime. Probes are inserted automatically via compiler instrumentation and library wrappers. Developers can also provide application-specific knowledge to further optimize data collection.

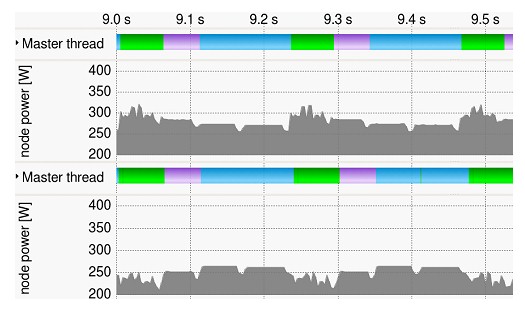

- The instrumented application is run once with a representative data set. These initial results characterize the dynamism of the application and provide an estimate of the performance and energy-efficiency gains that are possible. Following the first run, READEX removes instrumentation that does not provide estimated benefits above a specified threshold. This eliminates any perturbations caused by those probes on successive runs. The application is then run again using the same or an extended data set. This second run identifies the RTSs that exhibit sufficient dynamism to justify tuning during production runs. (As a side benefit, the resulting data and visualizations provide insights that can be used for additional, manual performance tuning if desired.)

- Deriving the Tuning Model. PTF is then used to evaluate the collected information in combination with the available tuning parameters to generate a tuning model. A classifier and configuration selector are also generated to help the system manage runtime situations that don’t match the predefined scenarios. The RTSs, classifier, and selector are stored within the tuning model.
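The filtering performed after the first run in the second step can be pictured as a simple threshold test. This is a sketch of the idea only, not the Score-P or READEX interface; the function name, the region names, and the savings estimates are hypothetical.

```python
def keep_instrumentation(estimated_savings, threshold):
    """Return the regions whose estimated energy saving exceeds the threshold.

    estimated_savings maps a region name to the fraction of energy that tuning
    is estimated to save there (values assumed to come from the first run).
    """
    return {region for region, saving in estimated_savings.items() if saving > threshold}


# Hypothetical estimates from the first instrumented run.
first_run = {
    "main_loop": 0.12,
    "solver_step": 0.08,
    "halo_exchange": 0.05,
    "log_progress": 0.001,
}

kept = keep_instrumentation(first_run, threshold=0.02)
print(sorted(kept))
```

Probes on regions that fall below the threshold are dropped, so they no longer perturb the measurements in later runs.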

Once the tuning model is created, the READEX Runtime Library (RRL) can use it during production runs. RRL reuses the Score-P instrumentation to apply the configurations defined in the tuning model. A calibration mechanism within the RRL evaluates and adapts to new scenarios, which are then added to the tuning model. In this way, the model is continually refined to provide increasing efficiencies over time.
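One way to picture the tuning model and its runtime use is as a lookup table with a fallback. The sketch below is a simplified illustration and does not reflect the actual RRL data structures or API: the `Config` and `TuningModel` classes, the use of a (region, input class) tuple as an RTS identifier, and the same-region fallback rule are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Config:
    core_ghz: float
    uncore_ghz: float
    omp_threads: int


@dataclass
class TuningModel:
    default: Config
    # RTS identifier, here a hypothetical (region, input_class) tuple -> Config
    scenarios: dict = field(default_factory=dict)

    def classify(self, rts_id):
        """Fallback for an unseen RTS: reuse a known config from the same region."""
        region = rts_id[0]
        for known_id, config in self.scenarios.items():
            if known_id[0] == region:
                return config
        return self.default

    def config_for(self, rts_id):
        """Return the stored config for a known RTS, or a classified guess otherwise."""
        config = self.scenarios.get(rts_id)
        return config if config is not None else self.classify(rts_id)

    def calibrate(self, rts_id, measured_best):
        """Record the best configuration found at runtime for a new RTS."""
        self.scenarios[rts_id] = measured_best


model = TuningModel(default=Config(2.4, 2.4, 24))
model.scenarios[("solve", "large")] = Config(1.8, 2.0, 24)

print(model.config_for(("solve", "large")))  # known scenario
print(model.config_for(("solve", "small")))  # unseen, classified by region
print(model.config_for(("io", "any")))       # unseen region, default config

model.calibrate(("solve", "small"), Config(1.6, 2.0, 12))
print(model.config_for(("solve", "small")))  # now stored in the model
```

The `calibrate` call stands in for the refinement loop described above: once a new scenario has been evaluated, its configuration is stored so that later occurrences are handled by direct lookup.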

A Foundation For Growth

READEX is an extensible software platform that can readily accommodate new tuning opportunities. It uses PTF plug-ins to identify and control tunable parameters. Additional parameters can be added by creating new plug-ins. Current parameters include the following.

- Hardware and runtime parameters: core frequencies, uncore frequencies, and the number of OpenMP threads.

- Application parameters, such as selecting pre-conditioners based on measured performance and energy efficiency.
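The plug-in idea can be sketched as a set of small classes that each contribute tunable parameters to a shared search space. This is a conceptual illustration only and is not the PTF plug-in interface; every class name, parameter name, and value below is hypothetical.

```python
from itertools import product


class TuningPlugin:
    """Base class: each plug-in reports the parameters it can control."""

    def parameters(self):
        raise NotImplementedError


class CoreFrequencyPlugin(TuningPlugin):
    def parameters(self):
        return {"core_ghz": [1.2, 1.8, 2.4]}


class OpenMPThreadsPlugin(TuningPlugin):
    def parameters(self):
        return {"omp_threads": [12, 24]}


class PreconditionerPlugin(TuningPlugin):
    """An application-level parameter, added without touching the other plug-ins."""

    def parameters(self):
        return {"preconditioner": ["jacobi", "ilu"]}


def search_space(plugins):
    """Combine the parameters of all plug-ins into a list of candidate configurations."""
    names, choices = [], []
    for plugin in plugins:
        for name, values in plugin.parameters().items():
            names.append(name)
            choices.append(values)
    return [dict(zip(names, combo)) for combo in product(*choices)]


space = search_space([CoreFrequencyPlugin(), OpenMPThreadsPlugin(), PreconditionerPlugin()])
print(len(space), "candidate configurations")
print(space[0])
```

Adding a new tunable parameter then amounts to registering one more plug-in. In practice the full cross product grows quickly, so a real framework would likely need a smarter search strategy than exhaustive enumeration.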

Intel engineers at the Exascale Computing Research (ECR) Centre in Paris, France, provided guidance to help ensure that READEX takes full advantage of the built-in telemetry in Intel Xeon processors. They continue to work with the READEX project to validate the hardware, runtime, and application parameters.

According to Marie-Christine Sawley, the Intel director of the ECR, “READEX is an excellent opportunity for us to work at the forefront of energy efficiency for real life HPC applications. We look forward to continuing our partnership as the team ports its framework onto HPC systems built with highly-parallel compute elements.”

Highly-parallel compute elements offer fundamental advantages in energy efficiency. However, even before READEX is applied, application code must be optimized to ensure it can efficiently utilize high core densities. Professor Wolfgang Nagel, director of the Center of Information Services and HPC (ZIH) at Technische Universität Dresden (TU Dresden), is leading a project that is focused on this complementary task. Within the context of the Intel Parallel Computing Center (IPCC) program, the ZIH has modernized TAU and TRACE, two leading CFD solvers in the European aerospace industry, for optimal performance on many-core processors. (For technical information on this work, read Dynamic SIMD Vector Lane Scheduling by Olaf Krzikalla, Florian Wende, and Markus Höhnerbach, and Code Vectorization Using Intel Array Notation by Olaf Krzikalla and Georg Zitzlsberger.)

Additional opportunities for tuning will continue to emerge as hardware and software evolve. For example, applications can be expected to exhibit increased dynamism as data volumes rise and data movement increases between processing elements and an expanding hierarchy of memory options. Due to its plugin-based infrastructure, READEX can be extended to take advantage of this and other tuning opportunities as they emerge.

Conclusion

A beta version of READEX is available today for evaluation and testing (see the READEX website for more information). In the upcoming months, this version will be improved to simplify deployment and use, and to enable increasing efficiencies for dynamic HPC applications. The goal is to provide a tool that delivers high value across the widest range of systems and software and can be implemented quickly and easily, even by relatively inexperienced HPC users.

READEX alone will not solve the energy-efficiency challenges that lie ahead. However, it will provide a simple, cost-effective, and largely automated toolset that can help organizations reduce energy consumption. Using READEX, they will be able to spend less money on power, which may enable them to redirect investments toward human and computing resources that directly support their mission.
