Today’s podcast episode of “The Interview” with The Next Platform will focus on an effort to standardize key neural network features to make development and innovation easier and more productive.

While it is still too early to standardize across the major training frameworks, portability for new architectures via a common file format is a critical first step toward more interoperability between frameworks and between training and inferencing tools.

To explore this, we are joined by Neil Trevett, Vice President of the Developer Ecosystem at Nvidia and President of the Khronos Group, an industry consortium focused on creating open standards for key technology areas. Many recognize this group from its work on OpenCL, but the group’s efforts extend far beyond that, now as far as neural networks.

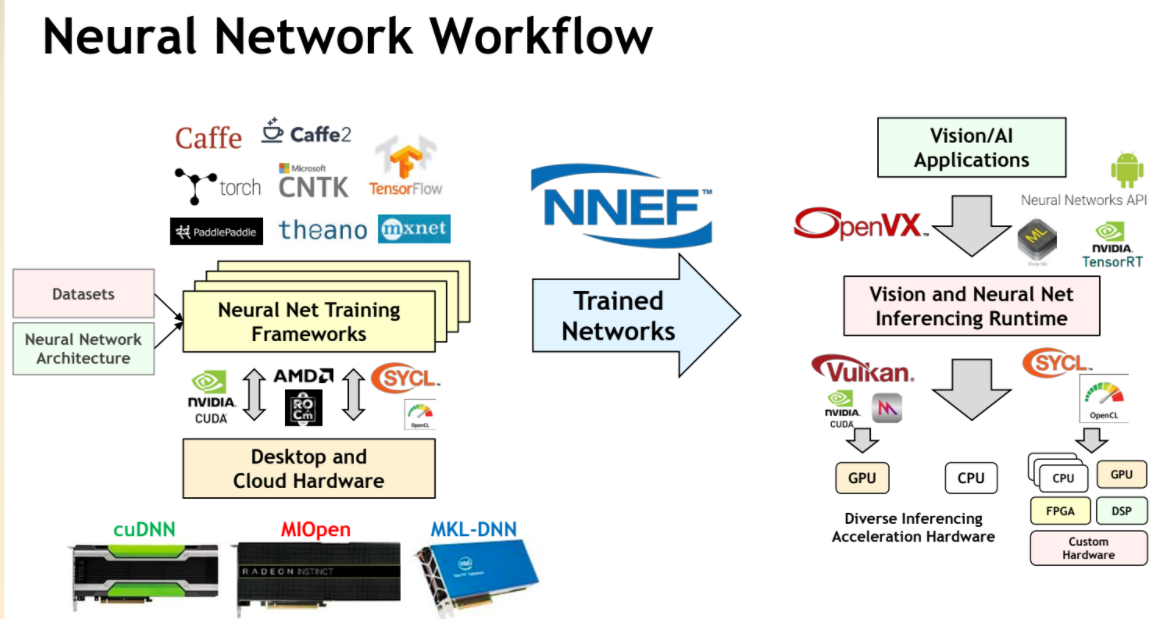

Machine learning is gaining ground quickly, but behind the scenes, fragmentation is costing developers, researchers, and data scientists valuable time. There is no common way to describe neural network weights and datatypes, so a trained network needs a different exporter for each inference engine it targets. Differentiation in the way companies approach machine learning can drive innovation, but fragmentation is slowing it down instead.

It’s a complex issue with a simple solution: a standard. The Khronos Group, a global consortium, is creating a “PDF format for neural networks” called NNEF (Neural Network Exchange Format) that will simplify this process by describing and transferring neural networks in a uniform way. NNEF is independent of any framework, so it will allow researchers and creators to transfer trained neural networks and interfaces to new frameworks.
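To make the cost of per-engine exporters concrete, here is a rough back-of-the-envelope sketch in Python. It is only an illustration: the framework names are the ones Khronos lists for NNEF, while the four inference engines are hypothetical placeholders, and the one-exporter-per-pair model is a simplifying assumption rather than a measurement of the real ecosystem.

```python
from itertools import product

# Training tools that Khronos names as NNEF export/import targets.
frameworks = ["Torch", "Caffe", "TensorFlow", "Theano",
              "Chainer", "Caffe2", "PyTorch", "MXNet"]

# Hypothetical inference engines (placeholders, not real products).
engines = ["engine_a", "engine_b", "engine_c", "engine_d"]

# Without a shared format: one dedicated exporter per (framework, engine) pair.
pairwise_exporters = list(product(frameworks, engines))

# With a shared format: one exporter per framework plus one importer per engine.
shared_format_converters = len(frameworks) + len(engines)

print(len(pairwise_exporters))     # 32
print(shared_format_converters)    # 12
```

Under these assumptions the pairwise count grows multiplicatively with each new tool or engine, while the shared-format count grows by one.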

Khronos is responsible for creating over 25 standards that are in everything from your cell phone to automotive heads-up displays. They’ve analyzed the current market situation, and NNEF will help move adoption forward for more machine learning applications.

In our conversation with Trevett, we cover what the standard is and isn’t—for instance, this is not an overarching guideline on a specific framework. With an understanding that there are many frameworks for the two sides of the coin—training and inference—the effort is more focused on a data format.

“NNEF has been designed to be reliably exported and imported across tools and engines such as Torch, Caffe, TensorFlow, Theano, Chainer, Caffe2, PyTorch, and MXNet. The NNEF 1.0 Provisional specification covers a wide range of use-cases and network types with a rich set of functions and a scalable design that borrows syntactical elements from Python but adds formal elements to aid in correctness. NNEF includes the definition of custom compound operations that offers opportunities for sophisticated network optimizations. Future work will build on this architecture in a predictable way so that NNEF tracks the rapidly moving field of machine learning while providing a stable platform for deployment.”

The goal is to create a stable, universal neural network exchange specification that lets networks exported from training tools be imported into hardware-accelerated inference engines. The roadmap includes adding new network types and authoring interchange capabilities for cross-framework use.

NNEF 1.0 has been released as a provisional spec and the Khronos team is gathering input on GitHub.
