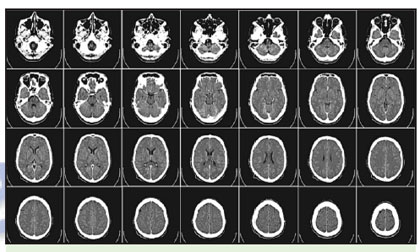

Computed tomography (CT) is a widely used process in medicine and industry. Many X-ray images taken around a common axis of rotation are combined to create a three-dimensional view of an object, including its interior.

In medicine, this technique is commonly used for non-invasive diagnostic applications such as searching for cancerous masses. Industrial applications include examining metal components for stress fractures and comparing produced materials to the original computer-aided design (CAD) specifications. While this process provides invaluable insight, it also presents an analytical challenge.

State-of-the-art CT scanners use synchrotron light, which enables very fine resolution in four dimensions. For example, the Advanced Photon Source at Argonne National Laboratory can scan at micrometer-to-nanometer resolution and produce 2,000 four-megapixel frames per second, which amounts to 16 gigabytes of data each second. In describing the challenges of processing this amount of data, Tekin Bicer and colleagues use a high-resolution mouse brain dataset as an example. This dataset is composed of 4501 slices and consumes 4.2 terabytes of disk space, and the reconstructed 3-D image weighs in at a whopping 40.9 terabytes.
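
The 16 gigabytes per second figure can be checked with a little arithmetic. The sketch below assumes decimal units and 2 bytes (16 bits) per pixel; the pixel depth is not stated in the article and is simply the value that makes the quoted numbers agree.

```python
FRAMES_PER_SECOND = 2_000
PIXELS_PER_FRAME = 4_000_000   # "4 megapixel", taken as 4 x 10^6 pixels
BYTES_PER_PIXEL = 2            # assumption: 16-bit detector pixels


def data_rate_gb_per_s(fps, pixels_per_frame, bytes_per_pixel):
    """Raw detector output in decimal gigabytes per second."""
    return fps * pixels_per_frame * bytes_per_pixel / 1e9


rate = data_rate_gb_per_s(FRAMES_PER_SECOND, PIXELS_PER_FRAME, BYTES_PER_PIXEL)
print(f"{rate:.1f} GB/s")
```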

A single sinogram (an array of projections from different angles) from this dataset would take 63 hours to compute on a single core. This means the full dataset, composed of 22,400 sinograms, would take a prohibitively long time to compute: roughly 161 years at that rate. GPUs can provide improved processing time, and vendors like NVIDIA have offerings specifically targeted at the medical CT market. However, the code tends to be device- and application-specific, which means a multi-purpose platform is better served by CPUs. This is particularly true for the massive datasets produced by synchrotron devices.
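
To put the single-core estimate in perspective, the two figures quoted above can simply be multiplied out. This is back-of-the-envelope arithmetic that assumes every sinogram costs the same 63 hours; it is not a measurement from the study.

```python
HOURS_PER_SINOGRAM = 63   # single-core time quoted above
SINOGRAMS = 22_400        # sinograms in the full dataset
HOURS_PER_YEAR = 24 * 365

# Assumption: every sinogram takes the same time and runs back to back.
total_hours = HOURS_PER_SINOGRAM * SINOGRAMS
total_years = total_hours / HOURS_PER_YEAR

print(f"{total_hours:,} core-hours, about {total_years:.0f} years on one core")
```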

For more generally applicable computation, the approach is one likely familiar to those who have performed large-scale computation. Bicer and colleagues developed a platform called Trace to provide a high-throughput environment for tomographic reconstruction. As a single-core environment is hardly a representative benchmark in 2017, their first step was to expand to multiple cores. They used machines with two six-core CPUs and tested with a subset of the mouse brain dataset described above. With a variety of combinations of processes and threads per process, they found that running two processes with six threads per process provided the best overall throughput (approximately 158 times faster than the single-core benchmark).
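
The search over process and thread counts can be pictured as enumerating the ways to split a node's cores. The sketch below is purely illustrative: it assumes each layout uses all 12 cores exactly, which the article does not state, and `process_thread_layouts` is a hypothetical helper, not part of Trace.

```python
def process_thread_layouts(cores):
    """Every (processes, threads_per_process) pair that uses exactly `cores` cores."""
    return [(p, cores // p) for p in range(1, cores + 1) if cores % p == 0]


CORES_PER_NODE = 2 * 6  # two six-core CPUs, as in the test machines

layouts = process_thread_layouts(CORES_PER_NODE)
for processes, threads in layouts:
    print(f"{processes:2d} process(es) x {threads:2d} thread(s)")
```

The layout reported as fastest, two processes with six threads each, is one of the candidates in this list.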

Expanding to multiple nodes provided an additional increase: a speedup factor of 21.6 when moving from 1 to 32 nodes. This also highlights another important consideration. The inter-node communication does not parallelize as well as the computation, which will come as no surprise to the reader who has experience with parallel code execution. At eight nodes and above, the communication cost exceeds that of the computation. Where two nodes spent 19.7% of their time on communication, that rose to 60.1% at 32 nodes. It’s unclear what interconnect was used for this study, but the 2.5 terabytes of inter-node communication performed for the computation in this subset argues strongly for a high-bandwidth, low-latency interconnect.
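
The scaling numbers are easier to compare as a parallel efficiency (speedup divided by node count) and as a ratio of communication time to computation time. The sketch below uses only the figures quoted above and assumes that whatever time is not spent on communication is spent on computation.

```python
def parallel_efficiency(speedup, nodes):
    """Fraction of ideal linear speedup actually achieved."""
    return speedup / nodes


def comm_to_compute_ratio(comm_fraction):
    """Communication time per unit of computation time.

    Assumption: all non-communication time counts as computation.
    """
    return comm_fraction / (1.0 - comm_fraction)


efficiency_32 = parallel_efficiency(21.6, 32)
ratio_2_nodes = comm_to_compute_ratio(0.197)
ratio_32_nodes = comm_to_compute_ratio(0.601)

print(f"efficiency at 32 nodes: {efficiency_32:.1%}")
print(f"comm/compute at 2 nodes: {ratio_2_nodes:.2f}")
print(f"comm/compute at 32 nodes: {ratio_32_nodes:.2f}")
```

A ratio above 1 means a node spends more time communicating than computing, which is the situation described at the larger node counts.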

The specifics of tomographic data call for further optimization as well. Given the incredible size of the data, efficient use of CPU cache can provide a meaningful improvement in performance. However, the sinusoidal data access pattern of the reconstruction process results in high rates of cache misses, up to 75% in some cases. To address this, Xiao Wang and colleagues have worked on algorithmic approaches to transform the data prior to reconstruction. In their work, they observed a reduction in L2 cache misses from 75% to 17%. Given the large data volumes and long runtimes associated with large-scale computed tomography, any performance improvement is critical.
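
The sinusoidal access pattern follows from the geometry. In a parallel-beam setup, a point at (x, y) lands on detector position t = x·cos θ + y·sin θ at rotation angle θ, so the data for one pixel traces a sine curve through the sinogram. The sketch below is an illustration under assumed sizes (8 angles, 513 detector columns, a row-major sinogram with one row per angle); it is not code from Trace or from Wang and colleagues.

```python
import math


def detector_columns(x, y, n_angles, n_det):
    """Detector column hit by pixel (x, y) at each angle in [0, pi)."""
    center = (n_det - 1) / 2
    cols = []
    for k in range(n_angles):
        theta = math.pi * k / n_angles
        t = x * math.cos(theta) + y * math.sin(theta)
        cols.append(int(round(center + t)))
    return cols


def flat_offsets(cols, n_det):
    """Element offsets into a row-major sinogram with one row per angle."""
    return [k * n_det + c for k, c in enumerate(cols)]


N_DET = 513  # hypothetical detector width
cols = detector_columns(x=100.0, y=50.0, n_angles=8, n_det=N_DET)
offsets = flat_offsets(cols, N_DET)
strides = [b - a for a, b in zip(offsets, offsets[1:])]

print("columns:", cols)
print("strides:", strides)
```

Each consecutive read lands roughly a full row away from the previous one, and the column drifts from angle to angle, so neighboring accesses rarely share a cache line.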

Computed tomography plays an increasing role in medical and industrial diagnostics. Argonne’s Advanced Photon Source is currently capable of creating panoramas from multiple frames, resulting in datasets 100 times larger than a single frame. It’s reasonable to expect that equipment will continue to increase in capability. As a result, high-throughput reconstruction environments will become ever more critical in providing results quickly.
