Putting legacy monolithic applications into production is like moving giant boulders across the landscape. Orchestrating applications coded in a microservices style is a bit more like creating weather, with code in a constant state of flux and containers flitting in and out of existence as that code changes, carrying it into the production landscape and out again as it expires.

Because container deployment is going to be such an integral part of the modern software stack – it basically creates a coordinated and distributed runtime environment, managing the dependencies between chunks of code – the effort and time that it takes to deploy collections of containers, usually called pods, is one of the bottlenecks in the system. This is a bottleneck that Google and its partners in the development of the Kubernetes container controller are well aware of, and as they have explained in the past, the idea with Kubernetes is to get the architecture and ecosystem right and then get to work to scale the software so it is suitable for even the largest enterprises, cloud builders, hyperscalers, and even HPC organizations. The Mesosphere distributed computing platform can also be used to manage containers (as well as have Kubernetes run atop it) and is working to push its scale up and to the right, too.

One of the reasons that containers have long been used at hyperscalers like Google and Facebook is that a container is, by its very nature, a much more lightweight form of virtualization than a virtual machine implemented atop hypervisors such as ESXi, Hyper-V, KVM, or Xen. (We realize that some companies will use a mix of containers and virtualization, particularly if they are worried about security and want to ensure another level of isolation for classes of workloads. Google, for instance, uses homegrown containers that predate Docker for its internal applications, but it puts KVM inside of containers to create instances on the Google Compute Engine public cloud and then allows this capacity to be further diced and sliced using Docker containers and the Kubernetes controller.)

Ultimately, whether the containers run on bare metal or virtualized iron, the schedulers inside of controllers like Kubernetes need to scale well, not only firing up containers quickly but also juggling large numbers of containers and pods, because microservices-style applications will require this.

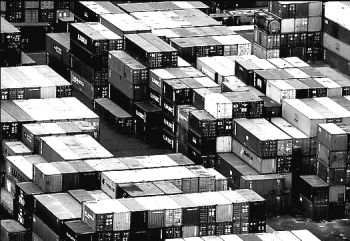

Stack ‘Em High

CoreOS has put Kubernetes at the heart of its Tectonic container management system, and is keen on helping scale both the Kubernetes container scheduler and its own key/value data store, which is called etcd and which is used as a pod and container configuration management backend. Last fall, Google showed how Kubernetes could scale to around 100 nodes with 30 Docker containers per node, and the CoreOS team, working with other members of the Kubernetes community, has been able to ratchet up the scale by a factor of ten and is looking to push it even further. CoreOS engineers have just published a performance report outlining how they were able to goose the scheduler so it could load up 30,000 container pods in 587 seconds across 1,000 nodes, which is a dramatic improvement in scale from the 100 nodes running 3,000 pods that Google showed off last fall that took 8,780 seconds to schedule. That is a factor of 10X improvement in the scale of the scheduler and, on those figures, roughly 15X less time to schedule ten times as many pods, which works out to about a 150X increase in scheduling throughput. These performance metrics are based on the Kubemark benchmark test, which was introduced recently by the Kubernetes community.
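The scaling figures quoted above can be checked with a few lines of arithmetic. The sketch below uses only the numbers from the article; the function and variable names are illustrative and not taken from the CoreOS report.

```python
# Figures quoted in the article; names here are illustrative only.
def pods_per_second(pods, seconds):
    """Average scheduling throughput over the whole run."""
    return pods / seconds

old_pods, old_seconds = 3_000, 8_780     # 100 nodes, earlier result
new_pods, new_seconds = 30_000, 587      # 1,000 nodes, CoreOS result

scale_ratio = new_pods / old_pods                  # how many more pods
time_ratio = old_seconds / new_seconds             # how much less wall-clock time
throughput_ratio = (pods_per_second(new_pods, new_seconds)
                    / pods_per_second(old_pods, old_seconds))

print(f"scale: {scale_ratio:.0f}X")
print(f"time: {time_ratio:.1f}X")
print(f"throughput: {throughput_ratio:.0f}X")
```

The total scheduling time falls by about 15X while the pod count rises 10X, so the pods-per-second rate rises by roughly 150X.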

These kinds of performance jumps are what is needed for Kubernetes to compete against Mesos, which is also being positioned as a container management system, and they are precisely what the Kubernetes community said was in the works from the get-go.

“The focus of the Kubernetes community has been to lay down a solid foundation,” Brandon Philips, chief technology officer at CoreOS, tells The Next Platform. “There was some low-hanging fruit that we could grab to increase the performance of the scheduler and I think there are some easy wins that we [have] inside of Kubernetes to increase the scale over time. But like any complex distributed system, there are a lot of moving parts and so the initial work we did focused on the Kubernetes scheduler itself, and we are pretty happy with the performance of 1,000 nodes with 30 pods per node and scheduling all of that work in under a minute.”

Philips says that the impending Kubernetes 1.2 release should include the updates that have been made to improve the performance of the scheduler. The next focus for the team will be on the etcd configuration database caching layer, which is based on a key/value store created by CoreOS for its Tectonic container management system that has also been picked up by Google as the backend for its Kubernetes container service on its Compute Engine public cloud.

Philips says that in a typical Kubernetes setup, the control plane part of the Kubernetes stack is usually run on three to five systems, with the worker machines that actually run Docker containers ranging from a few hundred to a thousand nodes. The focus now is reducing the memory and CPU requirements on those control plane servers, and also reducing the chatter between Kubernetes and the etcd backend. One way this is being accomplished is by replacing the JSON interfaces between the two bits of code with protocol buffers, which also helps cut down on CPU and memory usage in the control plane. The upstream Kubernetes code should get these improvements with the 1.3 release in three months or so, and they will then cascade down to Tectonic, Google Cloud Platform, and other Kubernetes products soon thereafter. (Univa, which sells its popular Grid Engine cluster job scheduler for HPC environments, has a modified version of its tools that brings together Docker containers, Red Hat’s Atomic Host, Kubernetes, and elements of Grid Engine to create a stack Univa calls Navops, which presumably will also have very high performance.)
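To give a rough feel for why moving from JSON to a binary encoding cuts down on chatter, here is a minimal sketch that encodes the same record both ways. It does not use protocol buffers or any Kubernetes or etcd API: Python's standard `struct` module stands in for a schema-based binary format, and the record and its field names are hypothetical.

```python
import json
import struct

# Hypothetical pod record; the field names are made up for illustration.
pod = {"node_id": 417, "cpu_millicores": 250, "memory_mib": 512, "priority": 1}

# Text encoding: field names and digits travel with every message.
json_bytes = json.dumps(pod).encode("utf-8")

# Binary stand-in: both sides agree on the field order ahead of time,
# so only four unsigned 32-bit little-endian integers are sent.
FIELDS = ("node_id", "cpu_millicores", "memory_mib", "priority")
LAYOUT = struct.Struct("<4I")
binary_bytes = LAYOUT.pack(*(pod[name] for name in FIELDS))

# Decoding needs no text parsing, just the agreed layout.
decoded = dict(zip(FIELDS, LAYOUT.unpack(binary_bytes)))

print(len(json_bytes), "bytes as JSON")
print(len(binary_bytes), "bytes as packed binary")
```

Real protocol buffers use tagged, variable-length fields rather than a fixed layout, so the exact sizes differ, but the general trade is the same: a shared schema replaces repeated field names and text parsing.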

Having pushed Kubernetes and etcd so they can scale to 1,000 nodes, CoreOS is now figuring out how to scale this up so it can support 2,000 to 3,000 machines. The tweaks to the etcd layer will go into alpha testing in April with etcd V3, work that the CoreOS team has been doing for the past six months or so. This later round of enhancements is being targeted for the Kubernetes 1.3 release, and should scale a cluster to 60,000 to 90,000 container pods at 30 pods per node.

With Kubernetes scale now ramping fast, the question is how quickly scalability will keep ramping and how far it actually needs to scale for most enterprise customers. Even Google’s clusters, which are arguably the largest in the world, average around 10,000 nodes, with some being as large as 50,000 or 100,000 in rare cases.

“I think that once we get to 5,000 to 10,000 nodes, we are to the point where most people will be in a discomfort zone and they will want to shard the control plane and not have one gigantic API in charge of the infrastructure,” says Philips. “By the end of the year, I think we will be able to get Kubernetes to that point, and it will have sufficient scale for the vast majority of use cases.”

The sharding of the control plane for very large scale Kubernetes clusters is being undertaken by the Ubernetes project. In an analogy to what Google has done internally, that makes Kubernetes akin to the Borgmaster cluster scheduler and Ubernetes a higher-level abstraction for management across clusters and schedulers, akin to Google’s Borg.

It is important not to take the analogy above too far. Just as Kubernetes is not literally Borgmaster code that has been open sourced, Ubernetes is inspired by Borg but not literally based on it. Borg is a very Google-specific beast, while Kubernetes and its Ubernetes cluster federation overlay are aimed at a more diverse set of workloads and users.

Not Just About Scale Out

The thing to remember about Kubernetes scale is that the software engineers are not just trying to push up the number of machines that can support long-running workloads like web application servers or databases inside of containers. Scale also means decreasing the latencies in setting up workloads with Kubernetes, particularly for more job-oriented work like MapReduce and other analytics or streaming workloads.

It can take seconds to schedule such work now, and that latency needs to come down so Kubernetes can juggle many short-running jobs. The etcd V3 work that the CoreOS team is doing also includes some help so the Kubernetes scheduler can do more sophisticated bin packing routines, adding support for low and high priority jobs.
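To make the idea of bin packing with job priorities concrete, here is a minimal sketch of a first-fit heuristic that places high-priority pods first and, within a priority level, larger pods first. This is an assumption chosen for illustration, not the Kubernetes scheduler's actual algorithm; the `Node` class, the `schedule` function, and the pod names are all hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    name: str
    free_cpu: int                      # remaining CPU units on this node
    pods: list = field(default_factory=list)

def schedule(pods, nodes):
    """Place (name, cpu, priority) pods on nodes; return names left unplaced.

    Higher priority goes first; within a priority, larger pods go first
    (the "decreasing" part of first-fit decreasing).
    """
    unplaced = []
    ordered = sorted(pods, key=lambda p: (-p[2], -p[1]))
    for name, cpu, _priority in ordered:
        for node in nodes:
            if node.free_cpu >= cpu:   # first node with room wins
                node.free_cpu -= cpu
                node.pods.append(name)
                break
        else:
            unplaced.append(name)      # no node had enough capacity
    return unplaced

nodes = [Node("n1", 4), Node("n2", 4)]
pods = [("batch-a", 3, 0), ("web-a", 2, 1), ("web-b", 3, 1), ("batch-b", 2, 0)]
unplaced = schedule(pods, nodes)

for node in nodes:
    print(node.name, node.pods, "free:", node.free_cpu)
print("unplaced:", unplaced)
```

In this toy run the two high-priority web pods are placed before either batch pod is considered, so when capacity runs out it is a low-priority batch pod that waits.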

As for Kubernetes having multiple job schedulers like Borg does, Philips thinks that it is likely that Kubernetes will ultimately end up with a single, general purpose job scheduler but that it will get more sophisticated over time, adding features for quality of service distinctions and load balancing and live migration of workloads across the nodes in Kubernetes clusters.

>> Orchestrating applications coded in a microservices style is a bit more like creating weather, with code in a constant state of flux and containers flitting in and out of existence as that code changes, carrying it into the production landscape and out again as it expires.

This is actually how ‘monolithic’ applications work as well. Instead of containers, you have objects (allocated memory) flitting in and out of existence. You also have notifications about objects as they are created and destroyed.

But I suppose tech journalists wouldn’t be expected to know those sorts of things.