DataDirect Networks got its start as a storage provider for media companies back in 1998, but it sold its first products into the supercomputing base that was looking for high capacity and screaming performance. The company broke through $100 million in sales in 2008, and only three years later it pushed on up through $200 million in revenues. With a new hardware architecture and a key partnership with VMware, DDN is gearing up to take a larger slice out of the enterprise storage market.

The enterprise push has a number of facets, but one of the most interesting of them will see DDN emerge as a vendor of hyperconverged storage akin to that peddled by Nutanix and a bunch of smaller firms such as SimpliVity, Scale Computing, Pivot3, and Maxta. And unlike those firms, DDN will be focusing on extreme-scale server-storage hybrids that put virtual machines on virtualized storage area networks, ones capable of supporting more than 100,000 virtual machines in a single cluster.

On The Hunt For Growth

DDN is privately held and does not divulge its financials, but Molly Rector, chief marketing officer and EVP of product management at DDN, says that the company is growing its revenues at a double-digit rate compared to last year and that its customer base is growing at a double-digit pace as well. DDN, she says, is maintaining its presence in two-thirds of the Top 100 supercomputer installations in the world. But as traditional enterprise storage vendors like EMC, Hewlett-Packard, and IBM struggle against each other and against a raft of upstarts in the enterprise, DDN is getting more traction there and, in fact, its future roadmap is being shaped by those enterprise customers.

“Over the last two or three years, our biggest growth has been among the Fortune 100, financial services companies, and cloud companies, including both the big Bay area Web companies and also the big tier two cloud companies that are using our technology to build their services,” Rector tells The Next Platform. “They all like our technology because of its ability to deliver performance, to deliver scale. And there is a perception that many of the big Web-scale companies are all building their own storage, but many of them actually are not.”

DDN, as it turns out, has its storage inside some of these name-brand Web-scale shops, but it can’t talk about them as it can for the big supercomputer centers, which like to outline in great detail the technology choices they make because these are shared systems and those writing applications for HPC iron need to know the details. (They also like to brag, and their vendors like them to as well.) Rector says that these Web-scale companies are always looking at the open source alternatives and considering creating their own storage software, as Google, Facebook, Yahoo, Amazon, and others have done. This is healthy in that it keeps DDN innovating. Very large enterprises are looking at open hardware and software and looking for partners who can package and maintain it, much as DDN does with the Lustre parallel file system for HPC customers. Or, even for closed source software, DDN adds value by making IBM’s GPFS file system easier to install and maintain.

“The big shift this year, which we decided to do over the past year, is to add some of the enterprise-class features to our storage that we have not added in the past. There has always been a decision tree that customers have to go through – they want DDN performance, but they sometimes want these enterprise features. So, for instance, when you look at DDN against NetApp, we could always have the most IOPS and throughput, but we didn’t have inline compression or encryption, and in the use cases where we were coming from, customers didn’t need them.” But now, as DDN is expanding more aggressively into the enterprise, it will be adding such features and it will be rolling them out on a new storage hardware platform code-named “Wolfcreek.”

DDN did not make a lot of noise about it last year at the SC14 supercomputing conference in New Orleans, but the Wolfcreek nodes were on display in its booth. They are based on two-socket “Haswell” Xeon E5 server nodes using DDR4 main memory that are the building block for all future file and object storage systems coming out of DDN.

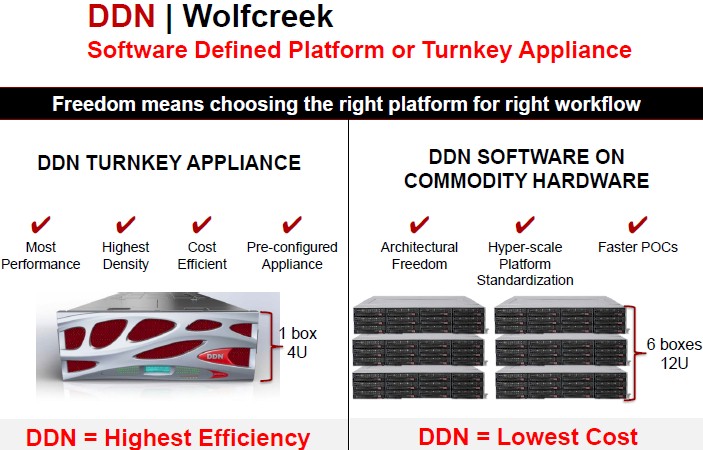

At the same time, DDN understands that some customers want to run storage software on their own hardware, with very specific tweaks and tunings for their own workloads or to meet certain price points, and that will also be an option for the Wolfcreek generations of DDN’s Web Object Scaler (WOS) object storage, Storage Fusion Architecture (SFA) file system arrays and related Scaler appliance stacks, and Infinite Memory Engine burst buffer.

The precise feeds and speeds on the Wolfcreek iron have not been divulged, but Rector gave The Next Platform a few tidbits. The Xeon processors, DDR4 memory, and storage type will be customized depending on the workload, says Rector.

The Wolfcreek machine has two active-active storage controllers in the node and supports up to 72 SAS drives with 12 Gb/sec interfaces or 72 SATA drives, all in a 2.5-inch form factor. Wolfcreek will use a PCI-Express fabric within the system and support the NVM-Express protocol to cut down on the latency between the processors and main memory in the controllers and the storage in the enclosure. With 2.5-inch NVMe SSDs, DDN can have two dozen SAS drives plus up to 48 NVMe drives in the enclosure. In the IME burst buffer, the Wolfcreek storage node will support the full complement of 48 NVMe SSDs. The Wolfcreek machine will also be the basis of its future SFA arrays and will support a mix of disk drives and SSDs, too.

In an all-SSD configuration that is designed as an all-flash array – not as a burst buffer between a supercomputer and its parallel file system – DDN says a Wolfcreek system will be able to deliver 60 GB/sec of throughput and more than 5 million I/O operations per second (IOPS). The top-ranked single system on the SPC-1 storage benchmark at the moment is the Hitachi Virtual Storage Platform G1000, which can deliver just a tad over 2 million IOPS. Kaminario’s K2 all-flash storage comes in at 1.24 million IOPS, and Huawei Technologies’ OceanStor 18800 goes just a bit over 1 million IOPS using a mix of disk and flash. The all-flash version of NetApp’s FAS8080 hits 658,282 IOPS. Rector tells The Next Platform that she reckons DDN has more than a 2X performance advantage over leadership products and will have a 4X advantage over whitebox storage arrays. We would point out that many of the all-flash upstarts like Pure Storage and EMC XtremIO have not been tested on the SPC-1 or SPC-2 benchmarks, and it would be a good thing for customers if they were, along with a number of other products.
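To put the "more than 2X" claim in context, the quoted figures can be compared directly. The sketch below is only back-of-the-envelope arithmetic: it treats DDN's vendor-stated 5 million IOPS as a floor, rounds the Hitachi and Huawei results down to the approximate values given in the text, and uses names (`advantage_ratios`, `SPC1_IOPS`) that are purely illustrative. DDN's number is a claim rather than an audited SPC-1 result, so the ratios are indicative at best.

```python
# Vendor-claimed figure from the article; "more than 5 million" is treated as a floor.
DDN_CLAIMED_IOPS = 5_000_000

# SPC-1 results as quoted in the article. The Hitachi and Huawei values are
# approximations ("a tad over 2 million", "a bit over 1 million"), rounded down here.
SPC1_IOPS = {
    "Hitachi VSP G1000": 2_000_000,
    "Kaminario K2": 1_240_000,
    "Huawei OceanStor 18800": 1_000_000,
    "NetApp FAS8080 (all-flash)": 658_282,
}


def advantage_ratios(claimed, results):
    """Return claimed / measured for each system, rounded to two decimals."""
    return {name: round(claimed / iops, 2) for name, iops in results.items()}


ratios = advantage_ratios(DDN_CLAIMED_IOPS, SPC1_IOPS)
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}x")
```

Against the top-ranked Hitachi system the ratio works out to 2.5, which is consistent with Rector's "more than a 2X" characterization, assuming the claimed figure holds up under a comparable workload.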

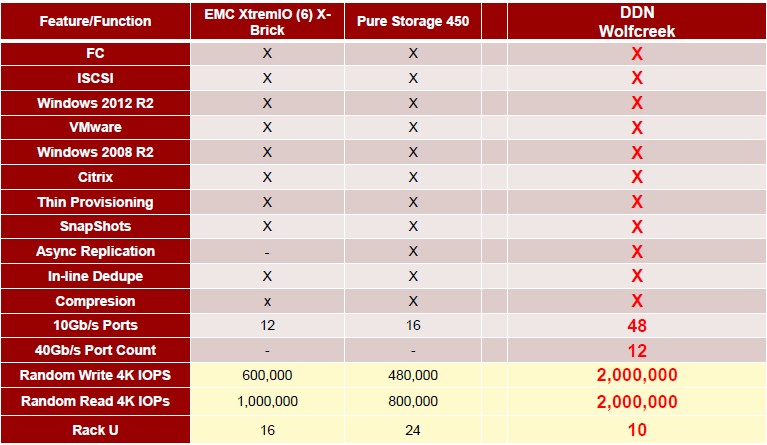

On the feature stack, here is how DDN compares itself to the two rivals it expects to see most in the enterprise with all-flash array configurations:

It is a bit of a mystery how DDN will be creating a 10U setup with 4U enclosures, but it is probably a mix of controller nodes and JBOF (short for Just a Box Of Flash) enclosures that hang off it. What is interesting to note is that whatever is in this 10U Wolfcreek setup, it will be able to handle 2 million random read IOPS or 2 million random write IOPS in an all-flash configuration. The table above compares Wolfcreek to the prior generation FA-450 arrays from Pure Storage, and not to the new FlashArray//m series that was announced back in early June. Pure Storage used 32 KB file sizes on the latest arrays, so we can’t easily guess what the performance will be for the high-end FlashArray//m70 on 4 KB file sizes for either random reads or writes.

The PCI-Express fabric inside the Wolfcreek box will lash together the disks, SSDs, and NVMe SSDs in the system to the processor complex. To scale out the Wolfcreek storage clusters for performance and capacity, InfiniBand adapters will be in the nodes and will use InfiniBand switches, presumably from Mellanox Technologies. The exact speed will depend on customer needs, but Rector says DDN could cluster with 100 Gb/sec EDR InfiniBand, which is just now entering the market, as well as the more established 56 Gb/sec FDR InfiniBand if that made technical and economic sense. The storage can talk to the outside server world through InfiniBand as well, but Wolfcreek has 100 Gb/sec Ethernet as an external networking option and DDN has also forged a partnership with Intel and is designing a custom Wolfcreek system board that will support dual-port Omni-Path links, which run at 100 Gb/sec, too.

As far as the scalability of the storage goes, DDN has parallel file systems running on its current SFA clusters that can deliver 1 TB/sec of bandwidth and scale up to 80 PB of capacity. Wolfcreek will step that up a notch or two, but the scale limits have less to do with technology than they do with budgets, says Rector.

The big news is that these Wolfcreek arrays will be tuned up to run various enterprise software stacks. Having parity with all-flash arrays from EMC, Pure Storage, and IBM, who are the ones to beat in this market, will expand DDN’s addressable market considerably. So will supporting vSAN virtual SAN software alongside the vSphere server virtualization stack on Wolfcreek iron, which potentially gives DDN a chunk of this fast-growing hyperconverged storage market. (And presumably such setups will use a mix of disk and flash to support vSAN, as VMware itself does in a lot of cases.) Being able to support over 100,000 virtual machines on a single storage cluster is something of a feat, given the limitations of VMware’s own vCenter management tools. VMware’s own sizing guides say a 64-node cluster running vSAN on vSphere can host about 6,400 virtual machines in either a hybrid or all-flash setup. Perhaps DDN has a trick for clustering these together to scale them further – the company is not saying.
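The gap between VMware's sizing figure and DDN's target is easier to see with the arithmetic spelled out. The sketch below assumes, purely for illustration, that DDN would reach 100,000 virtual machines by ganging together standard 64-node vSAN clusters and that capacity scales linearly; the company has not said how it gets there, and the function name is hypothetical.

```python
import math

TARGET_VMS = 100_000            # DDN's stated goal (the article says "more than 100,000")
VMS_PER_VSAN_CLUSTER = 6_400    # VMware sizing figure quoted in the article
NODES_PER_VSAN_CLUSTER = 64     # maximum vSAN cluster size quoted in the article


def clusters_needed(target_vms, vms_per_cluster):
    """Smallest whole number of clusters that can host target_vms."""
    return math.ceil(target_vms / vms_per_cluster)


clusters = clusters_needed(TARGET_VMS, VMS_PER_VSAN_CLUSTER)
total_nodes = clusters * NODES_PER_VSAN_CLUSTER
vms_per_node = VMS_PER_VSAN_CLUSTER // NODES_PER_VSAN_CLUSTER

print(f"vSAN clusters needed: {clusters}")
print(f"Server nodes implied: {total_nodes}")
print(f"VMs per node at VMware's sizing: {vms_per_node}")
```

Under those assumptions, DDN's target is equivalent to 16 maximum-size vSAN clusters, or 1,024 server nodes at 100 virtual machines apiece, which gives a sense of how far beyond a single stock vSAN cluster the claim reaches.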

“You don’t think of hyperconverged as high performance, typically, and we have both,” says Rector. “This gives us the ability to compete strongly. We didn’t want to go into the hyperconverged market halfway. If we were going to enter it, we needed all the right features and performance. What we have with this platform is higher storage density, higher IOPS density, higher performance density than a Nutanix, and when you put all of this into an appliance, it is a hyperconverged array.”

VMware is not the only software stack that will be supported on the enterprise-grade Wolfcreek storage. DDN is hinting that the OpenStack cloud controller and the Hadoop distributed data analytics platform will also run natively on the box, as well as unspecified virtual storage relating to products from Citrix Systems and Microsoft. (Probably virtual desktop infrastructure from both and database and middleware acceleration from Microsoft, all running atop their respective Xen and Hyper-V hypervisors.)

Here is the order of rollouts based on Wolfcreek coming this year. In September, the HPC-centric SFA line will be boosted with Wolfcreek iron, running either Lustre or GPFS as usual from DDN. At the same time, the company will also roll out a version of the machines running OpenStack and its companion Cinder block storage. Also in September, DDN will launch the IME burst buffer that it has been previewing in one form or another for the past year and a half. Towards the end of 2015, DDN will put out an all-flash version of the Wolfcreek storage to take on EMC, Pure Storage, and IBM. And also sometime in the second half of this year, the enterprise variants of the Wolfcreeks will come to market to support vSAN and other virtualized datacenter workloads.
