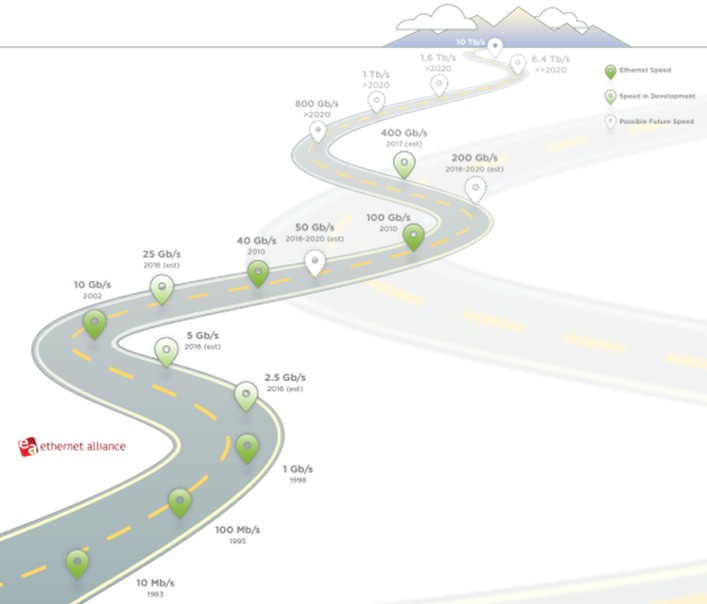

Except for some edge cases where extremely low latency or high bandwidth is strictly required, the Ethernet protocol absolutely rules networking in the datacenter, around the campus, and in our homes. Here at The Next Platform we are mainly concerned with what is going on in the datacenter, and in particular with the very high end ones that are always driving compute, storage, and networking technologies forwards and upwards. It is with this in mind that we are poring over the new roadmap from the Ethernet Alliance, which sets a goal of 10 Tb/sec speeds about a decade from now.

That is a factor of 1,000X more oomph than the 10 Gb/sec bandwidth of server ports and network switches being deployed in datacenters today, and 10,000X that of 1 Gb/sec Ethernet, which is still the most commonly deployed server port and switch speed out there in the datacenter and on the campus these days.
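The multipliers are simple ratios once every speed is expressed in the same unit. A minimal sketch, assuming decimal SI prefixes (1 Tb/sec = 1,000 Gb/sec), as is conventional for network line rates; the `speedup` helper is purely illustrative:

```python
# Line rates in Gb/sec, using decimal SI prefixes as networking conventionally does.
GBPS = {"1 Gb/sec": 1, "10 Gb/sec": 10, "10 Tb/sec": 10_000}

def speedup(target: str, baseline: str) -> float:
    """Illustrative helper: how many times faster `target` is than `baseline`."""
    return GBPS[target] / GBPS[baseline]

print(speedup("10 Tb/sec", "10 Gb/sec"))  # 1000.0
print(speedup("10 Tb/sec", "1 Gb/sec"))   # 10000.0
```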

It is funny in this industry that we call the courses we plot out into the future for various technologies roadmaps, since they are not anything as firm as an actual roadmap. (Roadmaps are done after the roads are built, not a decade before.) Any technology roadmap has to be taken with a grain of salt because it is very hard to extrapolate to the future with absolute certainty. (You can’t predict the Rockies if you drive west from Kansas.) That said, it takes a technology roadmap to focus the dozens of companies and key organizations that pour many hundreds of millions of dollars of development into Ethernet switching and routing technologies each year.

The Ethernet protocol was invented in 1973 by Bob Metcalfe and was productized as a local area network protocol in 1980, starting with 10 Mb/sec links between servers and between clients and servers. It is nothing short of amazing that Ethernet has been able to displace so many other network protocols since then. It is a resilient protocol, one that steals all the best ideas it can to remain competitive.

These days, the IEEE and the Ethernet Alliance do their parts to steer the development of the Ethernet protocol, but big IT shops are no longer sitting on the sidelines receiving the word from on high as to where the Ethernet protocol will go and how it will get there.

Last summer, hyperscalers Google and Microsoft gave the Ethernet community a nudge when they joined up with switch chip makers Broadcom and Mellanox Technologies to establish the 25 Gb/sec Ethernet effort, which was initially rejected by the IEEE and which is now becoming a standard because the hyperscalers have enough purchasing power to get the technology they need, one way or the other. These hyperscalers were unhappy with the 40 Gb/sec and 100 Gb/sec roadmaps, which were a poor fit for cost-sensitive hyperscale datacenters, and so they took technology developed for 100 Gb/sec switches and routers, cut it down to the size they needed, and got the better bang for the buck that their warehouse-scale datacenters require.

Here’s the short math: a 25 Gb/sec lane derived from a four-lane 100 Gb/sec switch is much better for linking servers and storage than the 10 Gb/sec lanes that were used in the initial 40 Gb/sec switches and 100 Gb/sec routers (and now some switches). Servers are swamped with east-west traffic between devices in the datacenter (rather than talking out to users and the Internet through north-south links), and that means the bandwidth between devices needs to be increased, but at a cost point and within thermal ranges that are appropriate for a hyperscale datacenter. By backporting and cutting down the 100 Gb/sec technology, the hyperscalers can get server and storage interfaces that have 2.5X the bandwidth of 10 Gb/sec Ethernet at maybe 1.5 times the cost (so a lower cost per bit moved) and with about half the power consumption, and they can similarly feed up into switches with either 25 Gb/sec or 50 Gb/sec ports that have much higher port density.
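The cost-per-bit claim follows directly from dividing the cost multiplier by the bandwidth multiplier. A back-of-the-envelope sketch, taking the rough figures above at face value (2.5X the bandwidth for maybe 1.5X the cost); these are estimates, not actual prices:

```python
def relative_cost_per_bit(bandwidth_ratio: float, cost_ratio: float) -> float:
    """Cost per bit of the new interface relative to the old one (old = 1.0)."""
    return cost_ratio / bandwidth_ratio

# Rough multipliers for 25 Gb/sec versus 10 Gb/sec Ethernet (estimates, not prices).
ratio = relative_cost_per_bit(bandwidth_ratio=25 / 10, cost_ratio=1.5)
print(f"{ratio:.2f}")              # 0.60
print(f"{1 - ratio:.0%} cheaper")  # 40% cheaper
```

Under those assumptions, each bit moved over a 25 Gb/sec link costs about 60 percent of what it did over 10 Gb/sec.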

We bring up the 25 Gb/sec effort merely to show that it is difficult to predict the future, because this was definitely not on the roadmap, but also to point out that the best way to predict the future is to create it. Up until now, the switch and router makers were setting the pace, but now the largest IT buyers in the world are having their say and their way, too.

Scott Kipp, who is the principal technologist at Brocade, a maker of Fibre Channel and Ethernet switches, as well as the new president of the Ethernet Alliance, walked The Next Platform down the winding Ethernet roadmap, which stretches off toward a far-off and fuzzy horizon of 10 Tb/sec – yes, that is terabits per second – speeds sometime vaguely around 2025. If all goes well.

“The hard part is to break progress down into manageable pieces,” Kipp explains. “You have some companies working on the SERDES, others working on new cabling, still others working on the lasers or VCSELs. Everyone has their own piece that they improve a little bit. We find new materials and processes and we refine them. It is really challenging, and it is only getting harder because of the physics. But each step so far was very difficult, too, and just to get to 10 Mb/sec, there were fundamental challenges on how to do the signaling.” And, Kipp adds, getting a 300 meter reach over OM3 multimode optical fiber with Ethernet running at 10 Gb/sec was no walk in the park, either. But engineers figured it out.

Up until five years ago, the rule for generational leaps in Ethernet was so simple everyone could chant it: “Ten times the speed for three times the cost.” Ethernet speeds increased by factors of ten: 10 Mb/sec in 1983, 100 Mb/sec in 1995, and then, with the rise of the Internet and what we now call hyperscale datacenters and the need to link them to users all over the world, a very quick jump to the 1 Gb/sec standard in 1998. The Ethernet standard for 10 Gb/sec came around in 2002, and 100 Gb/sec came along in 2010.
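The old rule of thumb implies that each generation cut the cost per bit to 3/10 of the one before it. A small sketch that applies the rule uniformly across the standards listed above; real pricing never tracked the rule this neatly, so treat the numbers as illustrative only:

```python
# Ratification years for each tenfold step, as listed above (speeds in Gb/sec).
GENERATIONS = [
    (1983, 0.01),  # 10 Mb/sec
    (1995, 0.1),   # 100 Mb/sec
    (1998, 1),
    (2002, 10),
    (2010, 100),
]

# "Ten times the speed for three times the cost", assumed to hold exactly.
SPEED_STEP, COST_STEP = 10, 3

def relative_cost_per_bit(generations_later: int) -> float:
    """Cost per bit relative to the first generation under the 10x/3x rule."""
    return (COST_STEP / SPEED_STEP) ** generations_later

for i, (year, gbps) in enumerate(GENERATIONS):
    print(f"{year}: {gbps:g} Gb/sec, cost per bit x{relative_cost_per_bit(i):.4f}")
```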

Then all hell broke loose. The leap to 100 Gb/sec was going to take too long and be too expensive, and so 40 Gb/sec Ethernet became an exit off the power-of-ten roadmap. Then hyperscalers and cloud providers wanted incremental increases in Ethernet speeds, delivered in less time and at price points that the switching and routing industry was not accustomed to, and the 25 Gb/sec stop emerged. At the low end of the market, the industry has tangled itself up in its own Ethernet cables. Over 70 billion meters of CAT5 and CAT6 Ethernet cables will have been sold between 2003 and 2015, according to network watcher Dell’Oro Group, with 7 billion meters of CAT5 sold last year alone. A lot of this cable has been used for campus networks, but a fair amount of it is in datacenters, too. And the tricky bit is that this cabling cannot support 10 Gb/sec Ethernet at typical cable lengths. That is why 2.5 Gb/sec and 5 Gb/sec stops are being added to the Ethernet roadmap.

Between here and 10 Tb/sec Ethernet, there can be – and very likely will be – some other unexpected stops. The good news is that the Ethernet industry is reacting to conditions in the datacenter and on the campus and not just sticking to its old “power of ten” rule.

From The Stream To The Ocean

So what is driving the demand for ever-increasing bandwidth for Ethernet networks? The short answer is that there are richer kinds of data behind applications and more and more people linking into those applications all over the globe. That’s why millions of ports of 40 Gb/sec switching are selling today, and why Kipp says the industry expects to sell over 1 million ports of 100 Gb/sec switching in 2015. According to Dell’Oro, says Kipp, the datacenter and campus markets represent around 480 million Ethernet ports sold a year, and only about 2 million of those ports are for routers. But it won’t be too long before hyperscalers, cloud providers, and telecom companies will be crying for 400 Gb/sec routers and switches, and the paint is barely dry – by Ethernet standards – on the 100 Gb/sec standard.

“The bandwidth demand is coming from every direction,” Kipp tells The Next Platform. “The processors keep getting wider and wider and their I/O throughput increases, and that is the input driving it. Then you have thousands or hundreds of thousands of servers in these megadatacenters. So it aggregates, going from little streams to creeks and then rivers and flowing into the ocean of the Internet. That’s one way to imagine it.”

(The image of networking that the modern datacenter and Internet conjures is more like a family reunion, with people of all ages all trying to talk to each other all at once and still somehow expecting to be heard.)

Within the datacenter, this chart detailing a portion of the Ethernet roadmap is probably the most important one:

In this chart, the SFP+ line shows the port speeds on servers, the QSFP line shows the speeds of the switches above the servers, and the CFP line on top of that shows the speeds of routers. It is important to note that the chart shows when finished, developing, and possible future Ethernet standards were or are expected to be ratified, not when products come to market.

Just as 10 Gb/sec ports on servers drove demand for 40 Gb/sec and 100 Gb/sec on the switches, the advent of 25 Gb/sec networking will similarly drive 100 Gb/sec switching and, higher up the stack, 400 Gb/sec routing. Looking far out to 2020, the Ethernet Alliance is evaluating potential standards for 50 Gb/sec on the server and 200 Gb/sec on the switch, and you will notice that there is a gaping hole above it where routers will still be at 400 Gb/sec. Looking out to maybe 2025 or so, everything takes a step function up again, with 100 Gb/sec on the server, 400 Gb/sec on the switch, and 1 Tb/sec on the router. This is not necessarily how it will all play out, of course. There is a possible 800 Gb/sec exit on the Ethernet roadmap and a kicker 1.6 Tb/sec bump followed by a possible 6.4 Tb/sec bump.

A lot depends on the speed of the lanes on the Ethernet roadmap and the ability to push data through ports without melting the server or the switch. The initial 40 Gb/sec and 100 Gb/sec products were based on lanes running at 10 Gb/sec, but with the advent of 25 Gb/sec lanes (which the hyperscalers, with the help of Broadcom and Mellanox, have pushed down into servers and switches, using fewer lanes to make their 25 Gb/sec and 100 Gb/sec products), it is possible not only to improve 100 Gb/sec switches and routers but also to get affordable switching with 25 Gb/sec on the server with SFP28 ports and 100 Gb/sec on the switch with QSFP28 ports. The next lane speed bump is to 50 Gb/sec, which will enable SFP56 ports running at 50 Gb/sec out of the servers, QSFP56 ports running at 200 Gb/sec on the switches, and CFP2 ports in the routers running at 400 Gb/sec.

The next lane shift is to 100 Gb/sec, and that will happen as we approach 2020 or so. The idea now is to group ten or sixteen lanes together to get those 1 Tb/sec or 1.6 Tb/sec ports. Kipp says that it will take a lot of investment to make 100 Gb/sec lanes, and adds that a low-cost 100 Gb/sec lane that can fit into an SFP+ port is not even expected until after 2020.
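The port speeds on the roadmap fall out of one multiplication: lane rate times the number of lanes ganged together. A small sketch that checks the lane configurations described above; it uses nominal data rates and ignores line-coding and forward error correction overhead, and the configuration labels are only descriptive:

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PortConfig:
    lanes: int
    lane_gbps: int  # nominal data rate per lane, ignoring coding/FEC overhead

    @property
    def port_gbps(self) -> int:
        # Aggregate port speed is simply the lane count times the per-lane rate.
        return self.lanes * self.lane_gbps

CONFIGS = {
    "early 40 Gb/sec port": PortConfig(4, 10),
    "early 100 Gb/sec port": PortConfig(10, 10),
    "25 Gb/sec server port": PortConfig(1, 25),
    "100 Gb/sec switch port": PortConfig(4, 25),
    "50 Gb/sec server port": PortConfig(1, 50),
    "200 Gb/sec switch port": PortConfig(4, 50),
    "400 Gb/sec router port": PortConfig(8, 50),
    "1 Tb/sec port": PortConfig(10, 100),
    "1.6 Tb/sec port": PortConfig(16, 100),
}

for name, cfg in CONFIGS.items():
    print(f"{name}: {cfg.lanes} x {cfg.lane_gbps} Gb/sec = {cfg.port_gbps} Gb/sec")
```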

Getting to 1 Tb/sec still seems pretty daunting, and 10 Tb/sec may sound physically impossible. The road ahead could be a bit bumpier than the one we already drove down, but it is important to remember that Ethernet has always faced seriously difficult physics and engineering challenges as speeds were ramped, and that is one of the reasons why Kipp says he is optimistic about the Ethernet industry’s ability to boost bandwidth by a factor of 100X in the next decade or so.
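To put a 100X bandwidth boost over roughly a decade in perspective, it can be converted into an equivalent steady annual growth rate. This back-of-the-envelope sketch assumes smooth compounding over exactly ten years, which Ethernet does not actually follow, since speeds arrive in discrete standard steps:

```python
def annual_growth(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to `total_factor` over `years`."""
    return total_factor ** (1 / years)

# Assumption: the 100X boost is spread evenly over exactly ten years.
g = annual_growth(100, 10)
print(f"{g:.3f}x per year, about {g - 1:.0%} growth annually")
```

Spread evenly, a factor of 100 over ten years works out to roughly 1.58X, or about 58 percent, per year.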

“Things work out,” Kipp says. “You can be an optimist, and I think I am more optimistic than some. But I think we will work it out. The 100 Gb/sec signaling technology already exists in the optical transport networks, it is just more expensive.”
