Sometime before the end of this year, it will be possible to get a petabyte of raw flash storage capacity that fits within a 3U rack enclosure, and if current trends persist, such a device will cost the same as or less than a disk array equipped with reasonably capacious and zippy disk drives. At what point, then, except for workloads with heavy write requirements where flash performance lags, will such flash arrays be able to displace disk for a lot of workloads?

Very few people are willing to call this technology transition, mainly because enterprises are risk averse and change very slowly. But once they make up their minds about the safety of a new technology, the change at enterprises happens at an accelerating pace. Take, for instance, the adoption of server virtualization, which is nearly universal in the datacenter except for those workloads where bare metal performance still matters. This is perhaps a good analogy in that regard, as might be the nearly complete adoption of Linux in the HPC space two decades ago and its eventual deployment for maybe a third of workloads across large enterprises as a whole, and its very large market share among hyperscaler and cloud providers.

Calling the technology transition from mechanical storage to solid state storage would be easier if there were not so many variables. Making comparisons and generalizations across such a wide variety of all-flash arrays and hybrid disk-flash arrays is difficult. If the choice were simple, disk drives would have been eliminated from the datacenter within the past few years – or at least as soon as the cost of the disk arrays had been fully amortized or the need for performance or density was so great that it compelled a radical change in storage architecture. Flash is making its way into datacenters over time. It found early adoption among hyperscalers – Facebook and Apple were early and enthusiastic adopters that we know about – that wanted to accelerate the databases and caching servers that back-end their web applications; flash then got traction among small and medium businesses that wanted to accelerate a particular existing workload – generally a database, but not always – by adding some flash to disk storage.

But large enterprises have only recently (as in the past several years) tried to figure out how to weave flash into their storage, and the reason they are taking more time is not just because they are cautious, but because they have many different systems, lots of different applications, and many different storage arrays servicing them. It is just tougher for them, plain and simple, because they do not have only a few different workloads. In a sense, a hyperscaler is like a small business in that it has relatively few workloads – they just happen to be really, really big, distributed ones that require thousands to tens of thousands of servers.

Flash memory chips and the controllers that manage the wear leveling and distribution of data across banks of those chips are now sophisticated enough to give the flash cards or flash drives used in arrays a very long life – something on par with mechanical disk drives, which are lucky to last more than five years in the field. The issue for flash adoption in large enterprises then becomes making comparisons with disk arrays easier and making the flash affordable enough, without resorting to data compression and deduplication technologies that can have an adverse effect on the performance of an array. Many of the all-flash array makers have relied on such deduplication and compression technologies to close the gap between flash and disk on a per unit of effective capacity basis. But SanDisk wants flash-based arrays to be able to stand on their own and compete with disk – and its InfiniFlash array puts a stake in the ground and sets the stage for when flash will offer much better density than disk at comparable prices while at the same time being able to accelerate the performance of analytics data stores such as Hadoop, MongoDB, and Cassandra and object and block file systems based on Ceph.

Each and every all-flash array maker wants to displace disk arrays, and The Next Platform will obviously be keeping a keen eye on what the major players are doing as they create products for the enterprise, for hyperscalers, for clouds, and for HPC. This is but one tale in the storybook, and one that is interesting because SanDisk controls its own stack, from the NAND flash chips all the way up to the complete flash array for the first time in its history.

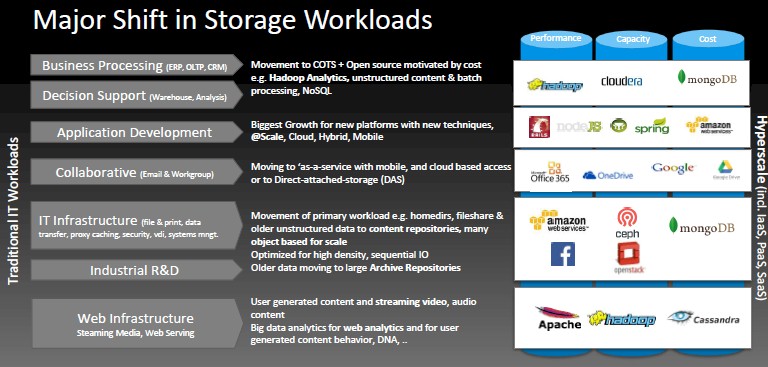

What is also interesting is that the development of the InfiniFlash array was driven by enterprise, hyperscale, cloud, and HPC customers – the full gamut at the high end of the IT sector. Creating a product that hyperscalers will buy is a bit of an accomplishment, since many have gone so far as to buy their own raw NAND flash memory, design their own flash cards, and have them manufactured by original design manufacturing (ODM) partners, Gary Lyng, senior director of product management and marketing at SanDisk, tells The Next Platform. The way that SanDisk sees it, the storage market is morphing from old-style applications to new ones, and this workload transition is coinciding with the move from disk to flash (or, to be more precise, any suitably low-cost non-volatile storage when it emerges). Here’s the lay of the land as SanDisk sees it in the datacenter:

If you break the storage capacity by the workloads on the chart above, you can get a sense of how much the shift from old-style applications to new ones will affect IT shops. By SanDisk’s estimates, business processing accounts for about 18 percent of the capacity out there, and decision support is another 21 percent. Basic IT infrastructure, as outlined above, is about a quarter of the capacity, with collaboration software taking up about 14 percent. Web infrastructure (meaning web serving and media streaming) is only about 9 percent, and application development is about 8 percent, followed by industrial R&D at 4 percent. (There is some rounding in there.)

While we are used to everyone talking about flash as an acceleration technology, SanDisk is talking about InfiniFlash arrays as primary storage devices to back-end content repositories of all kinds. Flash has always had the performance, but it did not have the high capacity or the low price.

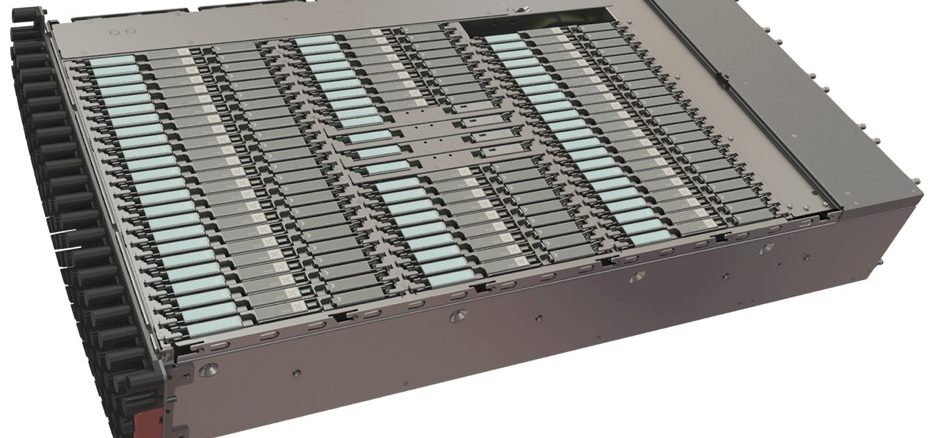

The new InfiniFlash array is based on SanDisk’s 1YXZ 2D NAND flash memory chips, which debuted last year in its Optimus Max SSD flash drives. But instead of implementing the flash memory in an SSD form factor, SanDisk put it on a flash card, nicknamed “Icechip.” To be precise, this card has 64 NAND packages, each with 128 GB capacity, for a total capacity of 8 TB. The InfiniFlash array has 64 of these Icechip cards crammed into a 3U enclosure. There’s a certain amount of intelligence on each card to run the InfiniFlash OS software, but there is no compute capacity other than this in the chassis. The InfiniFlash chassis has two power supplies, four fans, and two SAS modules that plug in the back, and the latter provide a total of eight 6 Gb/sec links out to as many as eight individual servers that will have full access to all of the capacity in the enclosure. (An upgrade to 12 Gb/sec SAS 3.0 links is coming down the road.)
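The capacity figures here follow from simple multiplication. As a minimal sketch in Python, assuming binary units (1 TB = 1,024 GB), which the article does not specify, and using constant names that are ours rather than SanDisk's:

```python
# Figures quoted in the article; the names and the binary-unit
# convention (1 TB = 1024 GB) are assumptions made for illustration.
PACKAGES_PER_CARD = 64      # NAND packages on one Icechip card
GB_PER_PACKAGE = 128        # capacity of each NAND package
CARDS_PER_ENCLOSURE = 64    # Icechip cards in one 3U InfiniFlash chassis
GB_PER_TB = 1024            # assumption: binary units

# Capacity of a single card, then of the whole enclosure, in TB.
card_tb = PACKAGES_PER_CARD * GB_PER_PACKAGE / GB_PER_TB
enclosure_tb = card_tb * CARDS_PER_ENCLOSURE

print(f"Per card: {card_tb:.0f} TB")
print(f"Per 3U enclosure: {enclosure_tb:.0f} TB")
```

Under those assumptions the card works out to 8 TB and the enclosure to 512 TB of raw capacity.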

This base InfiniFlash is capable of delivering up to 1 million I/O operations per second (presumably on a 100 percent read workload with 4 KB files, as is standard) with under 1 millisecond latencies on average. The array can deliver about 6 GB/sec of throughput, and with large blocks of data, can push as much as 9 GB/sec.

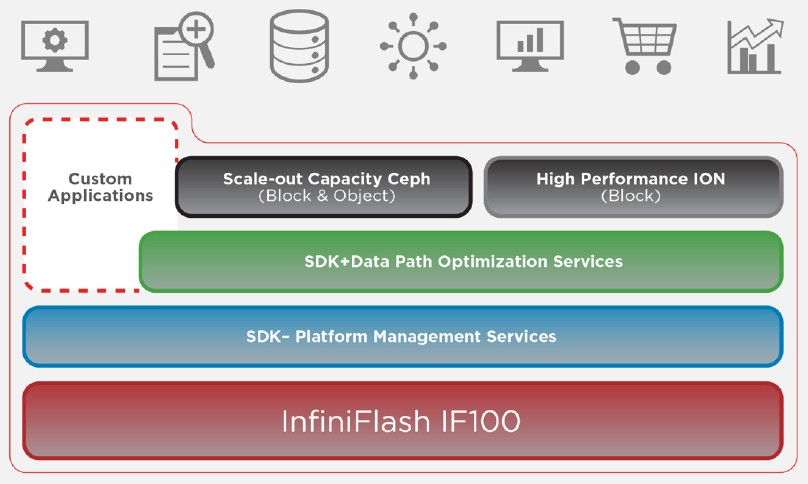

This base machine is known as the InfiniFlash IF100, and this is what SanDisk says costs under $1 per GB, and does so without resorting to data deduplication or compression. That does not mean SanDisk may not add such deduplication or compression features in the future, or that one of SanDisk’s OEM partners might not. It is just that there is no heavy-duty X86 controller or coprocessor in the array to run such compute-intensive software features, which, importantly, the initial hyperscale customers will either not use or will have created on their own.

SanDisk says that this $1 per GB price is on the same order of magnitude as a disk array using 15K RPM disk drives. But the real math for such a comparison will come down to very specific cases and generalities are dangerous.

“The calculations are very difficult,” Tim Stammers, storage analyst at 451 Research, tells The Next Platform. “You have to ask about what RAID levels are being used in the disk array, if there is thin provisioning, as well as what level of short stroking of the data on the drive is being used to boost the performance.” (Short stroking means using only a portion of the capacity of the disk drive, specifically the outer edge of the disk platter, where the linear velocity under the head is higher and the data can therefore be read that much quicker than from portions of the drive closer to the center of the platter, where the linear velocity is lower. Confining data to a narrow band of tracks also shortens head seeks.) “But SanDisk is right. We can say generally that all flash arrays are now cheaper than disk arrays using high performance 15K RPM disk drives.”
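To see why the factors Stammers lists matter so much, here is a rough sketch of how RAID overhead and short stroking inflate the cost of disk capacity that actually holds data. The function name and every input value are hypothetical, chosen only to illustrate the arithmetic; they are not figures from SanDisk or 451 Research, and thin provisioning is left out entirely.

```python
def effective_cost_per_gb(raw_cost_per_gb, raid_usable_fraction, short_stroke_fraction):
    """Cost per GB of capacity that actually stores data.

    raid_usable_fraction: share of raw capacity left after RAID overhead
        (mirroring leaves 0.5).
    short_stroke_fraction: share of each drive actually used when short stroking.
    """
    if not (0 < raid_usable_fraction <= 1 and 0 < short_stroke_fraction <= 1):
        raise ValueError("fractions must be in the range (0, 1]")
    return raw_cost_per_gb / (raid_usable_fraction * short_stroke_fraction)


# Hypothetical disk array: $0.50 per raw GB, mirrored RAID (half of the raw
# capacity is usable), short stroked to half of each drive.
disk_cost = effective_cost_per_gb(0.50, 0.5, 0.5)
print(f"Effective disk cost: ${disk_cost:.2f} per GB")
```

With these made-up inputs, a disk array that looks half the price of flash on a raw basis ends up at $2 per usable GB, which is why the comparison swings so widely from one configuration to the next.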

It is not clear how SanDisk intends to scale out the InfiniFlash arrays – they are not clustered like the popular all-flash arrays from SolidFire and Kaminario are, just to name two vendors that peddle petabyte-scale flash. But Lyng tells The Next Platform that it won’t be long before SanDisk can double up the capacity of the InfiniFlash’s Icechip cards to 16 TB, which would yield 1 PB of capacity in a 3U enclosure. (It is reasonable to wonder if the future Icechip card will make use of the triple-level-cell, or TLC, memory that SanDisk has created with NAND partner Toshiba and that debuted last August in SanDisk’s Ultra II SSDs.) No matter what chip technology SanDisk employs to double the capacity of the InfiniFlash, this is a very large amount of data to pack in a small space – and, even more impressive, the array would burn under 500 watts under average loads and about 750 watts running full out.

Just to give you a feel for the density, take a very dense SuperStorage storage server from Supermicro, which has room for 36 3.5-inch SAS or SATA drives. At the highest capacities available today, with 8 TB SATA drives, that array can put 288 TB in a 4U enclosure, or about 72 TB per rack unit. Granted, that machine also has a dual-socket Xeon E5-2600 server embedded in it, which the InfiniFlash array does not. But at 512 TB using the current Icechip cards, the InfiniFlash is delivering over 170 TB per rack unit of space, and even if you add a 1U rack server to it for equivalency’s sake, that is still 128 TB per rack unit.
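The density comparison is easy to reproduce. A small sketch, using only the capacities and enclosure heights quoted in the paragraph above (the function and variable names are ours):

```python
def tb_per_rack_unit(total_tb, rack_units):
    """Storage density expressed as terabytes per rack unit (U)."""
    return total_tb / rack_units


# Supermicro SuperStorage: 36 drives of 8 TB each in a 4U chassis.
supermicro = tb_per_rack_unit(36 * 8, 4)

# InfiniFlash with current Icechip cards: 512 TB in 3U.
infiniflash = tb_per_rack_unit(512, 3)

# InfiniFlash plus a 1U server, to match the compute embedded in the Supermicro box.
infiniflash_with_server = tb_per_rack_unit(512, 3 + 1)

for name, density in [
    ("Supermicro SuperStorage", supermicro),
    ("InfiniFlash", infiniflash),
    ("InfiniFlash + 1U server", infiniflash_with_server),
]:
    print(f"{name}: {density:.1f} TB per rack unit")
```

Even with the extra rack unit for a server, the flash array holds a bit under twice as much data per rack unit as the disk-based machine.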

In places where low latency, low power consumption, and high density matter, arrays like the one that SanDisk has crafted are going to find homes. Just think about a rack with 14 PB of capacity that only burns 7 kilowatts. Generally speaking, Lyng says that the InfiniFlash arrays have five times the density and 50 times the performance of comparable disk arrays, and burn one-fifth the power and are four times as reliable at petabyte scales because disks fail a lot more frequently than do flash cards.
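One way to arrive at the 14 PB and 7 kilowatt figures is sketched below. It assumes a standard 42U rack filled entirely with 3U enclosures, which the article does not state, and uses the future 1 PB enclosure and the 500 watt average-load ceiling quoted earlier; the constant names are ours.

```python
RACK_UNITS = 42             # assumption: a standard 42U rack, not stated in the article
ENCLOSURE_UNITS = 3         # height of one InfiniFlash chassis
PB_PER_ENCLOSURE = 1        # future enclosure with 16 TB Icechip cards
WATTS_PER_ENCLOSURE = 500   # upper bound quoted for average loads

# Whole enclosures that fit in the rack, leaving no room for servers or switches.
enclosures = RACK_UNITS // ENCLOSURE_UNITS
rack_capacity_pb = enclosures * PB_PER_ENCLOSURE
rack_power_kw = enclosures * WATTS_PER_ENCLOSURE / 1000

print(f"{enclosures} enclosures, {rack_capacity_pb} PB, {rack_power_kw:.1f} kW")
```

A real deployment would give up some of that space to the servers and switches the arrays attach to, so this is best read as a ceiling, not a typical configuration.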

While SanDisk will be counting on its flash chips to scale up the InfiniFlash arrays, it will be using the open source Ceph object and block storage software, now controlled by Red Hat after it acquired Inktank last year, to build scale-out object and block storage. The base flash array is known as the IF100 when it is just sold with bare metal, and is called the IF500 when it is equipped with Ceph object storage. This is aimed at enterprise and hyperscale customers who want 2 PB or more of object storage, and the IF500 ends up being the direct-attached storage for the Ceph nodes. “We have been working on Ceph for the past 15 months, and what we have done there is enhanced the performance so that when you, for example, are doing block reads off of an SSD of some kind, you will likely get around 20,000 IOPS, and we have optimized Ceph so you are getting 250,000 IOPS for block-based reads,” explains Lyng. Significantly, Ceph includes enterprise-class features like snapshotting and cloning, which the raw IF100 does not have built in. Further down the line, SanDisk will be adding support for OpenStack’s Swift object storage protocols to the IF500.

SanDisk is also packaging up the ION Accelerator block storage software it got through its acquisition of Fusion-io last year to make the IF700. This appliance is aimed at accelerating the performance of very large Oracle, SQL Server, and DB2 relational databases as well as at NoSQL data stores like Cassandra and MongoDB.

With this additional software and support layered on to the raw InfiniFlash arrays for the IF500 and the IF700, the pricing is under $2 per GB, says Lyng.

This is the first time that SanDisk has created and sold its own appliance, although the InfiniFlash arrays will be sold by OEMs and value added resellers downstream in the channel. SanDisk has been working with selected customers for more than a year on this product as it was developed, and a larger number for the past six months; they run the gamut from hyperscalers to media and entertainment companies to game hosters to service providers and clouds and government agencies. All of them have one thing in common: petabyte-scale data storage requirements with lots of speed.

These are, of course, the same customers that other all-flash array makers will be targeting, but none of them have their own flash foundry like the one SanDisk shares with Toshiba.
