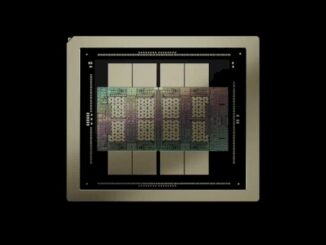

Is Nvidia Assembling The Parts For Its Next Inference Platform?

No, we did not miss the fact that Nvidia did an “acquihire” of Groq, the AI accelerator and system startup and Nvidia rival, on Christmas Eve. …

If the GenAI expansion runs out of gas, Taiwan Semiconductor Manufacturing Co, the world’s most important foundry for advanced chippery, will be the first to know. …

If GenAI is going to go mainstream and not just be a bubble that helps prop up the global economy for a couple of years, AI inference is going to have to come down in price – and do so faster than it has done thus far. …

As the year came to an end, we tore apart IDC’s assessments of server spending, including the huge jump in accelerated supercomputers for running GenAI and more traditional machine learning workloads. As this year got started, we did forensic analysis and modeling based on IDC’s reckoning of Ethernet switching and routing revenues. …

By virtue of its scale-out capability, which is key for driving the size of absolutely enormous AI clusters, and its universality, Ethernet switch sales are booming, and if recent history is any guide, we can expect Ethernet revenues to climb exponentially higher in the coming quarters as well. …

Having an annual cadence for the improvement of AI systems is a great thing if you happen to be buying the newest iron at exactly the right time. …

The number of AI inference chip startups in the world is gross – literally gross, as in a dozen dozens. …

A total addressable market is a forecast of what will be sold – more precisely, what can be manufactured and sold. …

It has always been funny to us that anyone can acquire control of an open source project. …

AI is changing what “good” looks like in the modern datacenter. …

All Content Copyright The Next Platform